TensorFlow or Theano? TensorFlow, along with PyTorch, is currently one of the best-known and most widely used machine learning frameworks. However, the choice of tool should not depend on personal preference alone; it should fit the data to be examined. Especially with big data, the right choice can prevent a significant loss of performance. It is therefore worthwhile to look off the beaten track and consider other frameworks and libraries in addition to the top dogs.

Theano is one such open source Python library. In the following article, we will introduce both tools and explain the differences.

What is TensorFlow?

The open source framework TensorFlow is the direct successor of Google’s first deep learning tool, DistBelief, and primarily serves as the basis for neural networks in language and image processing tasks. With TensorFlow, you can develop and train your own models or use pre-trained ones. TF runs on a variety of platforms and is implemented in Python and C++.

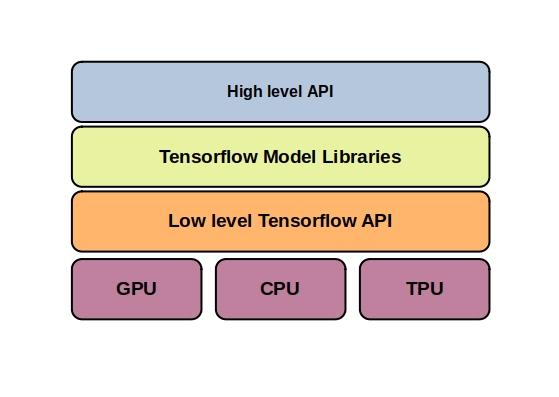

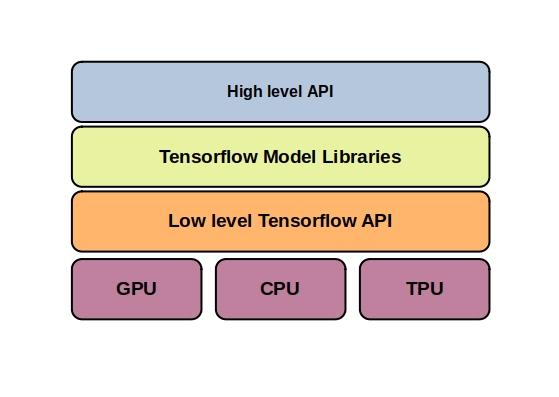

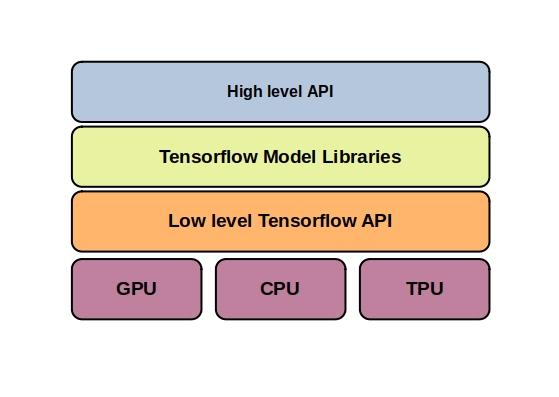

TF offers low-level APIs that target CPUs, GPUs or TPUs. In this way, hardware resources can be allocated dynamically to match the workload.

In addition to the low level APIs, there are also various high level APIs, such as Keras, one of the best known and most frequently used. If you want to know more about Keras, check out our article on the topic.

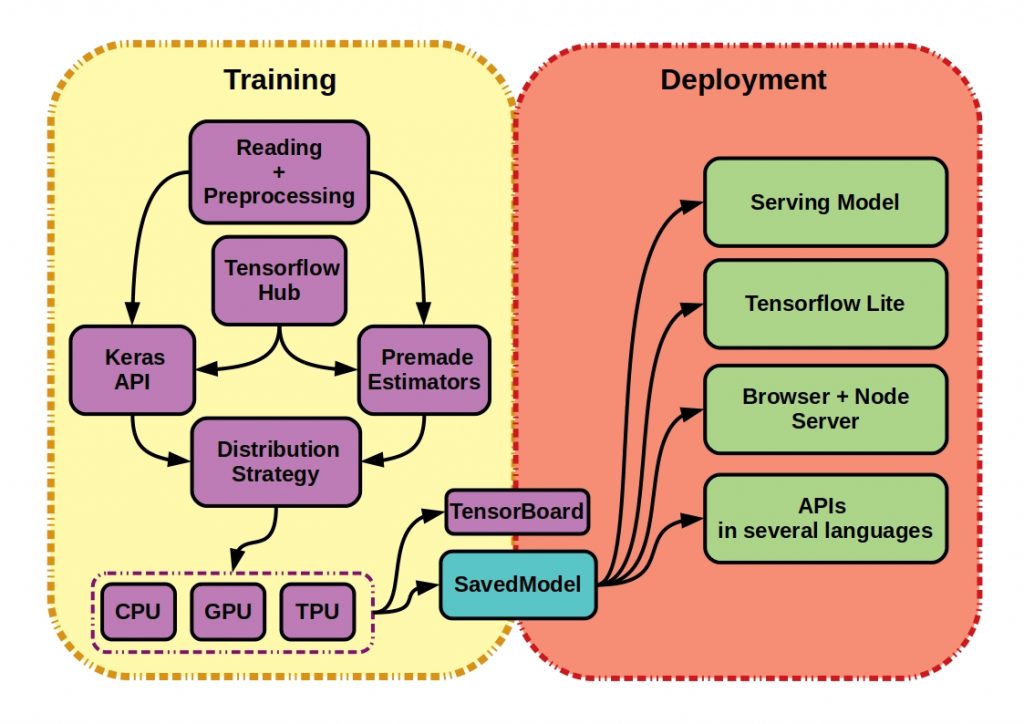

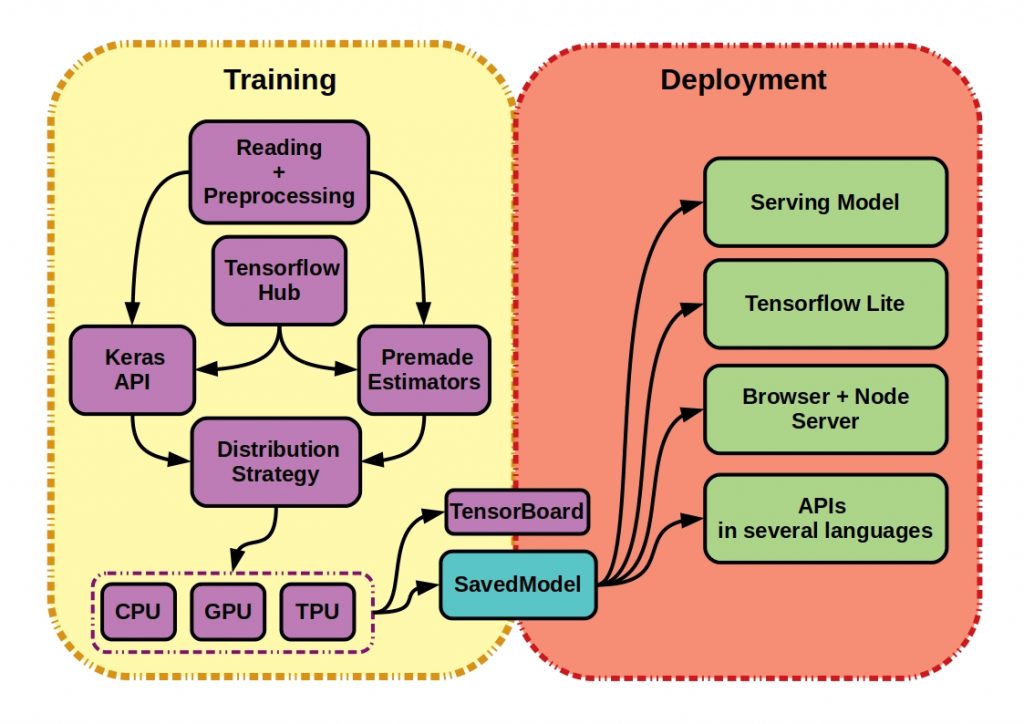

Framework Architecture

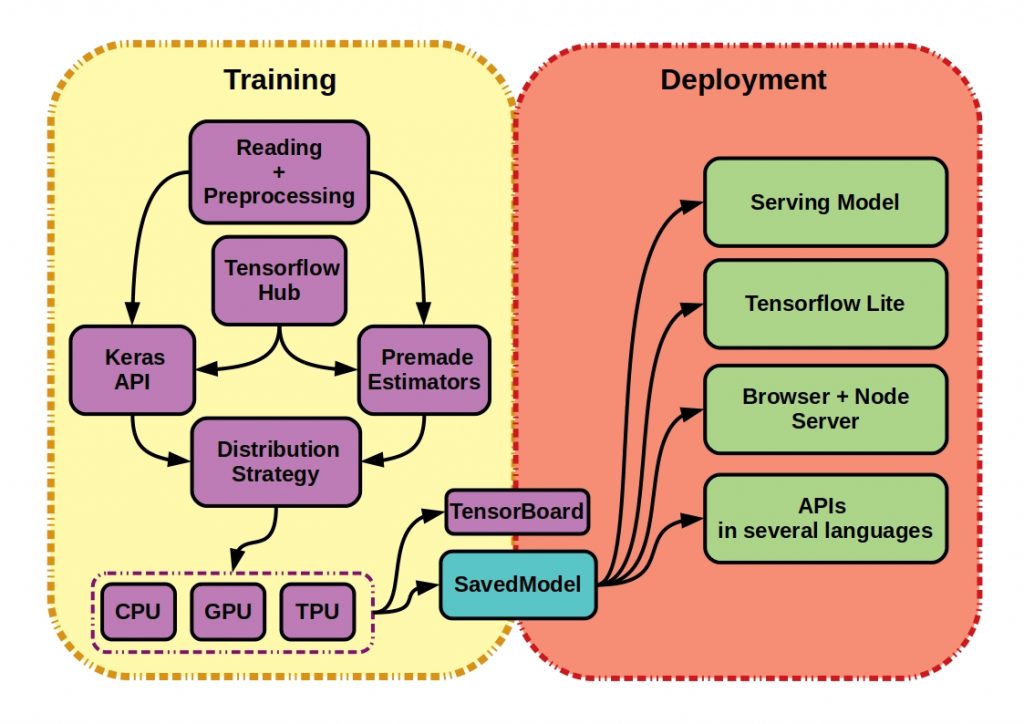

The TensorFlow framework can broadly be divided into the components needed for training, where models are prepared for use in the field, and those needed for the final deployment, for example on mobile and IoT devices with TensorFlow Lite. To simplify training, TensorFlow offers the developer some useful services besides the dynamic allocation already mentioned. For example, a premade Estimator offers a high-level representation of a complete model. Via TensorFlow Hub, a kind of repository, even trained machine learning models can be accessed, and bindings for other languages are available.

The TensorBoard and SavedModel components act as connecting elements between training and deployment. TensorBoard is TensorFlow's visualization toolkit, with which experiment results can be visualized; it is thus primarily a monitoring tool for the human user. The SavedModel format lets training and deployment services share models. It acts as a kind of intermediary and contains a complete TensorFlow program, including all weights and computations.

TensorFlow – Data Structure

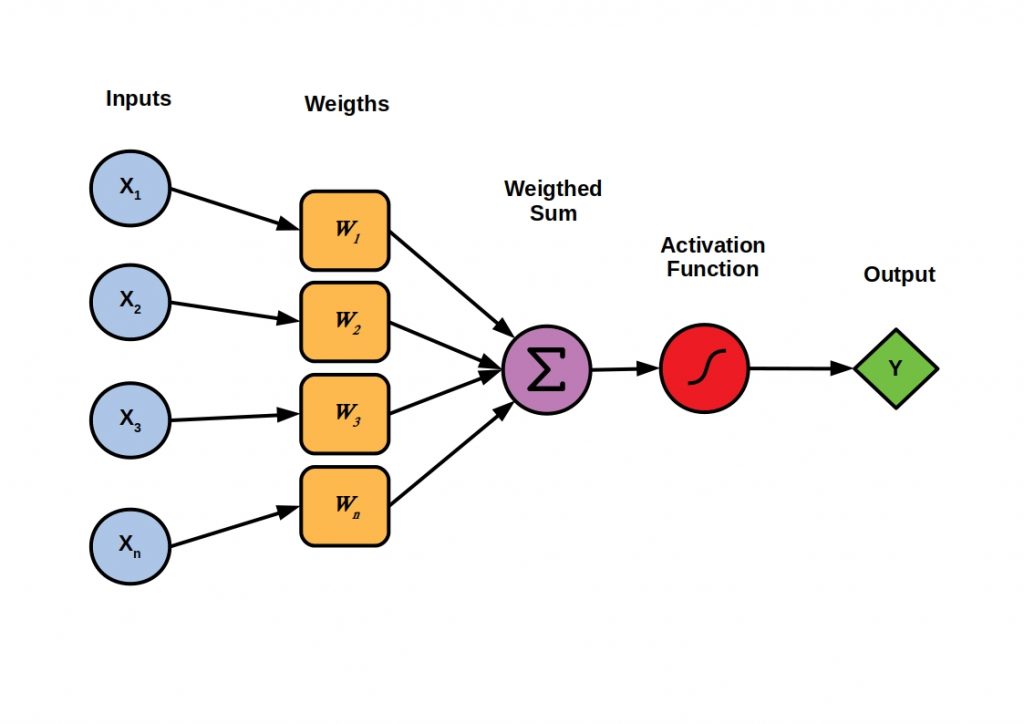

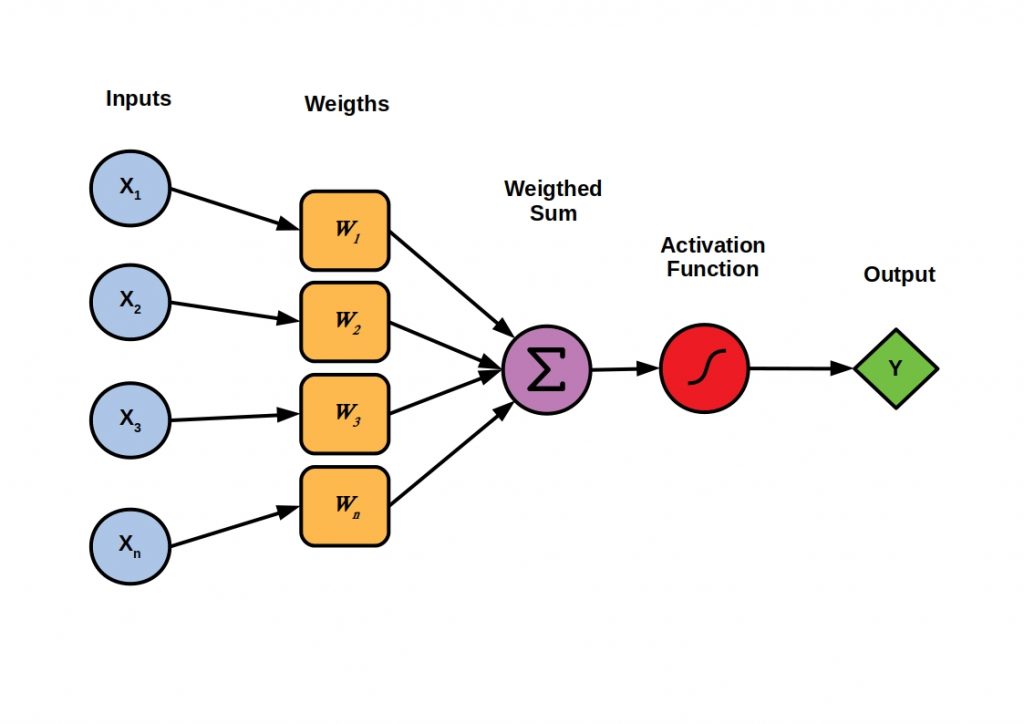

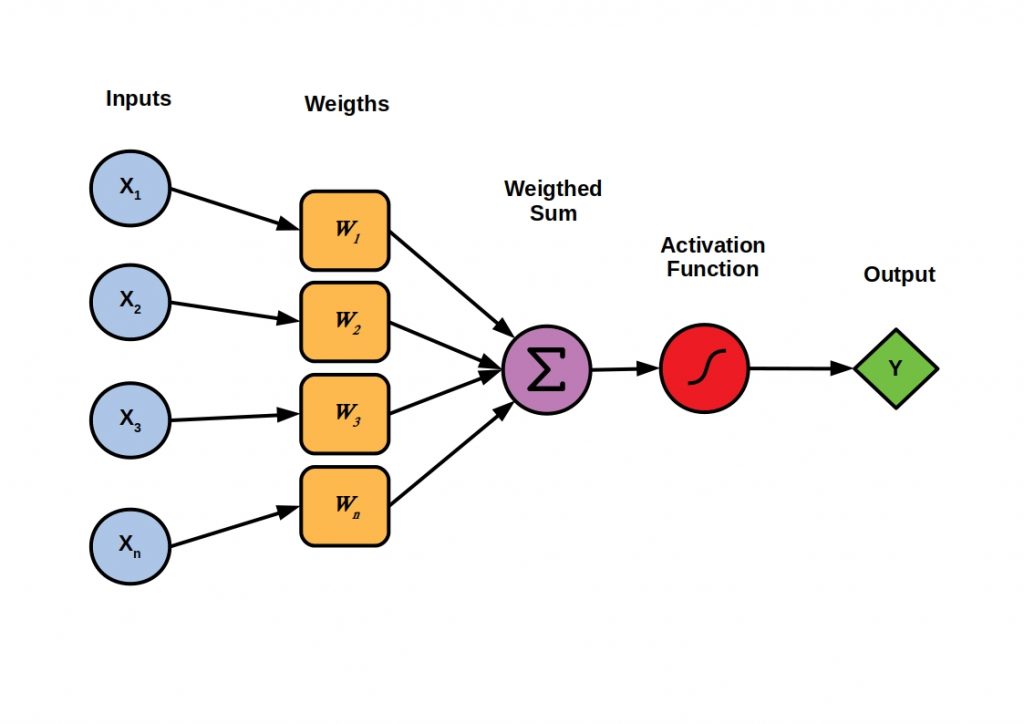

Neural networks are represented by directed acyclic graphs. These graphs can be represented and computed across machine boundaries during training. A graph consists of nodes connected by edges. How the nodes are interconnected usually also determines the learning procedure and thus the structure of an artificial neural network.
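To make the idea of a computation graph more concrete, here is a minimal sketch in plain Python. It is not TensorFlow code: the `graph` dictionary, the node names and the `evaluate` helper are made up for illustration. It only shows how a graph without cycles can be evaluated by visiting each node after its inputs.

```python
from graphlib import TopologicalSorter
import operator

# Hypothetical toy graph: each node maps to (operation, names of its input nodes).
# Nodes without an operation are inputs whose values are fed in from outside.
graph = {
    "x": (None, []),
    "w": (None, []),
    "b": (None, []),
    "mul": (operator.mul, ["x", "w"]),
    "add": (operator.add, ["mul", "b"]),
}

def evaluate(graph, feed):
    """Evaluate every node once, visiting inputs before the nodes that use them."""
    # TopologicalSorter takes {node: predecessors} and raises CycleError on cycles.
    dependencies = {name: deps for name, (_, deps) in graph.items()}
    values = dict(feed)
    for name in TopologicalSorter(dependencies).static_order():
        op, deps = graph[name]
        if op is not None:
            values[name] = op(*(values[d] for d in deps))
    return values

result = evaluate(graph, {"x": 3.0, "w": 2.0, "b": 1.0})
print(result["add"])  # x * w + b
```

Because the graph has no cycles, a topological order always exists, which is what allows each node to be computed exactly once.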

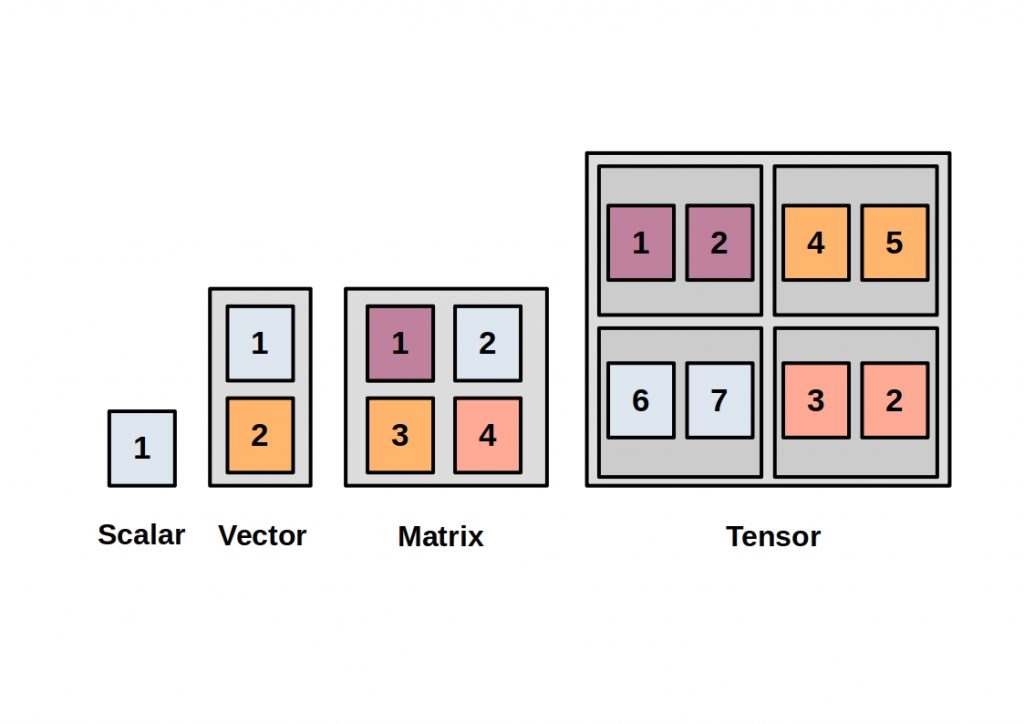

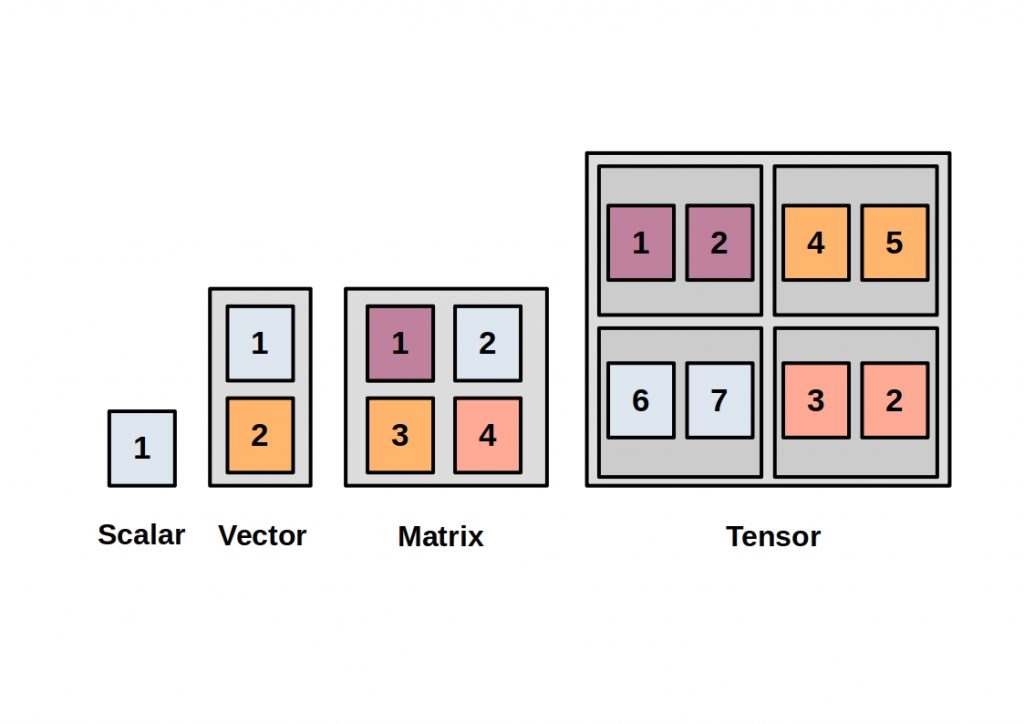

The inputs and outputs of the individual calculation steps represent multidimensional data arrays, so-called tensors.

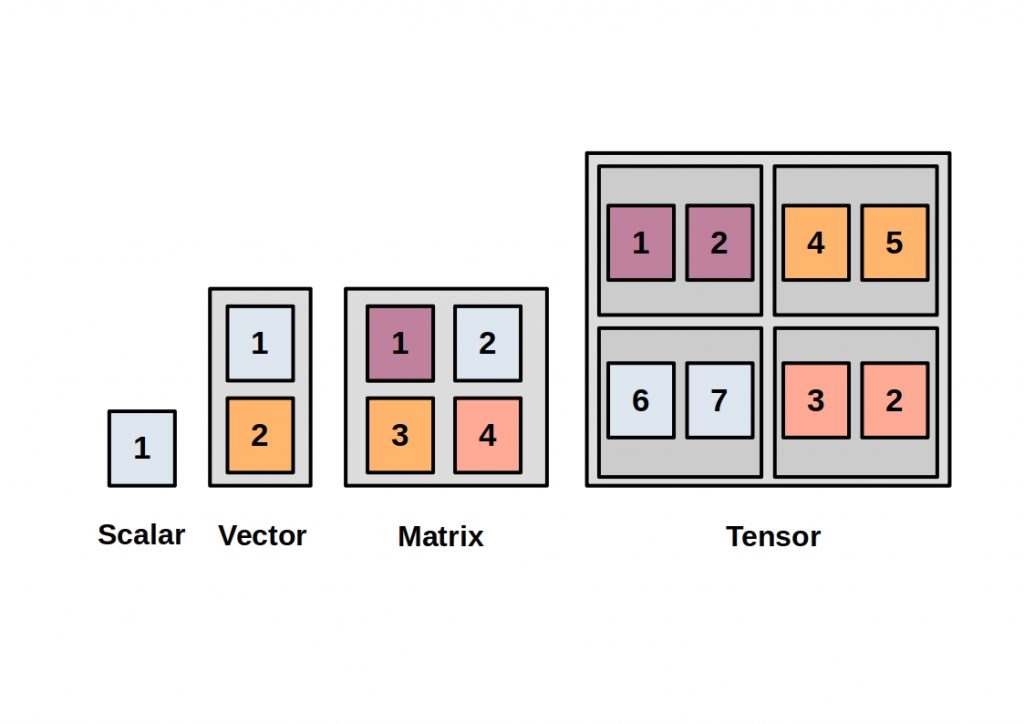

The mathematical term tensor denotes a generalization of vectors and matrices. It is thus an elementary data structure for representing and processing data. In TensorFlow, tensors are implemented as multidimensional arrays. A vector thus corresponds to a one-dimensional tensor.

A tensor can have any number of dimensions. Commonly, time series are stored as 3-dimensional tensors, images as 4-dimensional tensors, and videos as 5-dimensional tensors, with the batch of samples counting as one dimension in each case.
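The following sketch illustrates these dimensions with NumPy arrays rather than TensorFlow tensors. The concrete sizes and the "samples first, channels last" layout are assumptions chosen for illustration; other layouts are also in use.

```python
import numpy as np

# A vector is a one-dimensional tensor.
vector = np.zeros(5)

# Illustrative shapes, assuming a "samples first, channels last" layout.
time_series = np.zeros((32, 100, 8))    # (samples, time steps, features)
images = np.zeros((32, 64, 64, 3))      # (samples, height, width, channels)
videos = np.zeros((4, 16, 64, 64, 3))   # (samples, frames, height, width, channels)

for name, tensor in [
    ("vector", vector),
    ("time series", time_series),
    ("images", images),
    ("videos", videos),
]:
    print(f"{name}: {tensor.ndim} dimensions, shape {tensor.shape}")
```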

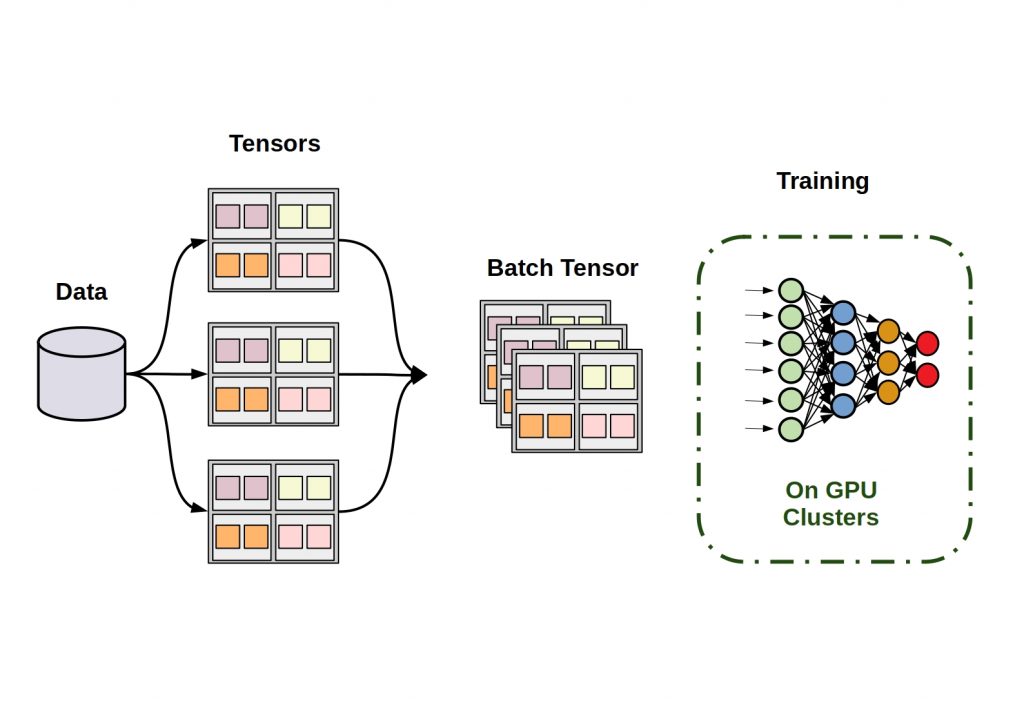

TensorFlow methods manipulate tensors through linear algebra operations. These operations can be executed with high performance by moving the tensor objects to graphics card memory or to tensor-optimized TPUs.

TensorFlow – Training

During training, the training data is fed iteratively into the graph while the weights within the graph are adjusted, so that the output gradually approaches the target output value. Separate test data can be used to verify periodically that the training also works for other, previously unseen input data.
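The training procedure can be sketched in a few lines of plain Python. This is a deliberately tiny example, not TensorFlow code: the synthetic data, the linear model, the learning rate and the `mse` helper are all assumptions made for illustration. It uses gradient descent to adjust two weights and checks the error on held-out test data at regular intervals.

```python
import random

random.seed(0)

# Synthetic data for y = 2x + 1 with a little noise (illustrative only).
data = [(i / 50, 2.0 * (i / 50) + 1.0 + random.gauss(0.0, 0.05)) for i in range(100)]
random.shuffle(data)
train, test = data[:80], data[80:]

def mse(samples, w, b):
    """Mean squared error of the linear model w * x + b on the given samples."""
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)

w, b = 0.0, 0.0        # the weights that training adjusts
learning_rate = 0.1

for epoch in range(500):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / len(train)
    grad_b = sum(2 * (w * x + b - y) for x, y in train) / len(train)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
    if epoch % 100 == 0:
        # Periodic check on data the model was not trained on.
        print(f"epoch {epoch}: test MSE = {mse(test, w, b):.4f}")

print(f"learned w = {w:.2f}, b = {b:.2f}")
```

In a framework such as TensorFlow, the gradients are derived automatically from the graph instead of being written out by hand as they are here.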

Theano – Old but Gold

Theano is an open source Python library for machine learning and neural network programming, and a compiler for mathematical expressions. It was released back in 2007 by the Montreal Institute for Learning Algorithms (MILA) at the University of Montreal.

It is particularly suitable for defining, optimizing and evaluating mathematical expressions involving multidimensional arrays. For this purpose, Theano relies on the NumPy library for handling matrices, vectors and large multidimensional arrays. If you are new to NumPy, read our article on it first; there we introduce this elementary Python library and explain its basic data handling.

Mathematical expressions are written symbolically in Theano using a NumPy-like syntax.

The computations are then compiled to C++ or CUDA code, so they run very close to the machine and accordingly very efficiently on CPUs or graphics processing units (GPUs).

Like TensorFlow, Theano can also be used as a backend for the Keras framework. Keras thus forms a common interface to both technologies.

Graph Structure

Unlike TensorFlow, Theano focuses on supporting symbolic matrix expressions rather than tensors as a basic data type. Basic tensor functionality can be used with Theano, and all kinds of Python objects are supported, but these operations are not as optimized as in TensorFlow.

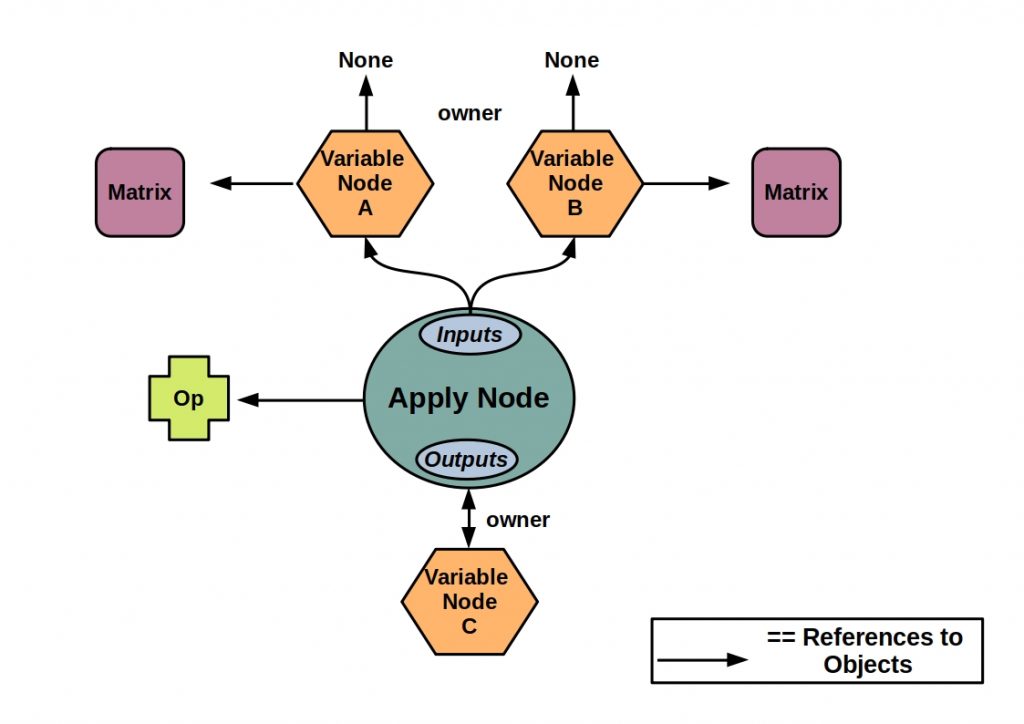

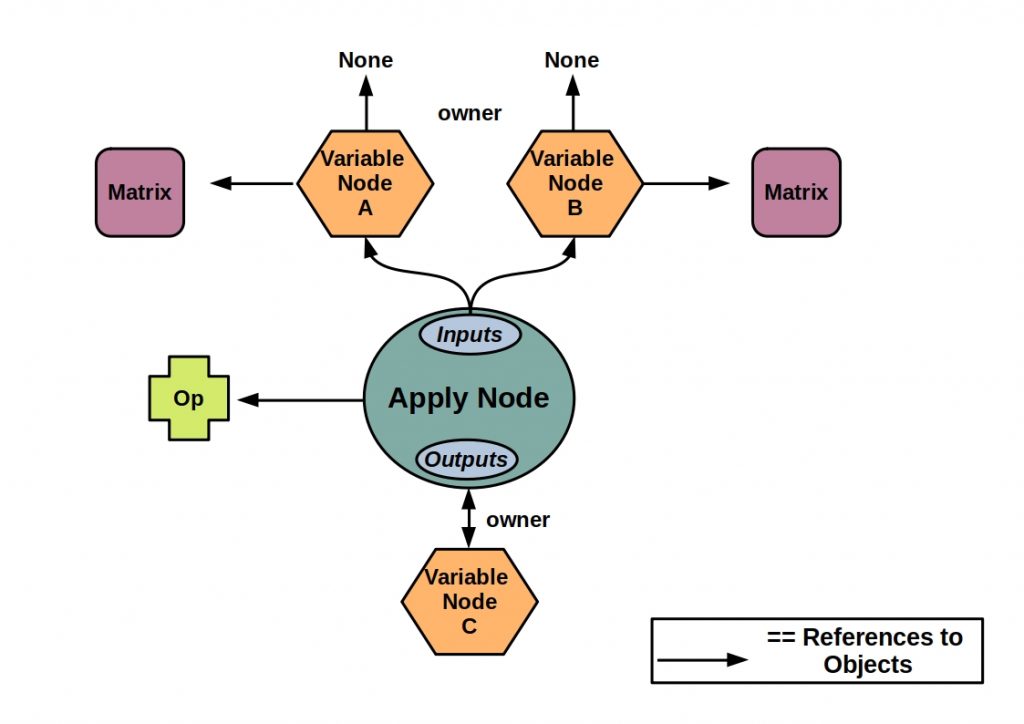

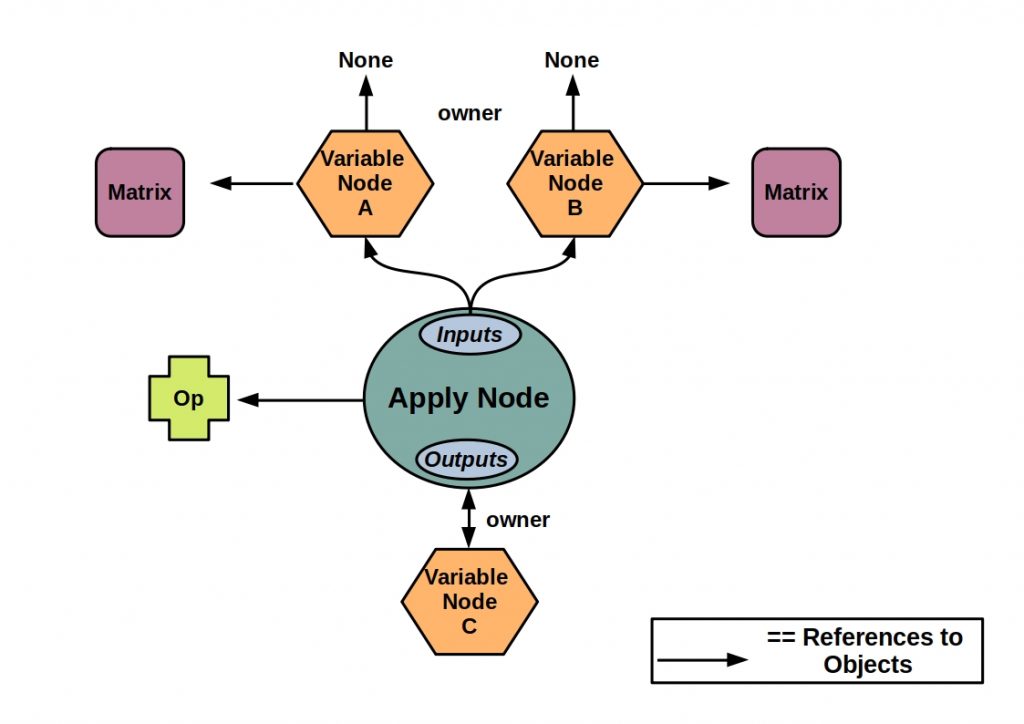

Theano executes symbolic mathematical calculations as graphs. These graphs are composed of interconnected Apply, Variable and Op nodes.

The Op node represents a particular computation on a particular type of input that produces a particular type of output. It thus corresponds to the definition of a computation.

The centrally located Apply node represents the application of an Op to some variables, that is, the application of a computation to the current data, and is used to represent a computation graph. Each Op is responsible for knowing how to build an Apply node from a list of inputs and thus determines the function and transformation.

An Apply node additionally consists of the input or output fields. The inputs represent the arguments of the function, and the outputs represent the return values of the function.

The Apply nodes then refer to their input and output variables, the main data structure, in the graph via their input and output fields, respectively.

These Variable nodes are defined by several fields: the variable type; the owner, which is either None or the Apply node of which the variable is an output; the index; and the variable name.
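The relationship between the three node kinds can be sketched with a few plain Python classes. These are simplified stand-ins named after the nodes described above, not Theano's actual implementation; the `fn` attribute and the `evaluate` helper are hypothetical additions so the toy graph can be computed.

```python
class Variable:
    """Main data structure of the graph."""
    def __init__(self, type, name=None):
        self.type = type      # e.g. "dscalar"
        self.name = name
        self.owner = None     # Apply node that produced this variable, or None
        self.index = None     # position of this variable in owner.outputs

class Apply:
    """Application of an Op to concrete input variables."""
    def __init__(self, op, inputs, outputs):
        self.op = op
        self.inputs = inputs      # arguments of the function
        self.outputs = outputs    # return values of the function

class Op:
    """Definition of a computation."""
    def __init__(self, name, fn):
        self.name = name
        self.fn = fn              # hypothetical: plain Python function to run

    def make_node(self, *inputs):
        """Build an Apply node from a list of inputs."""
        out = Variable(inputs[0].type)
        node = Apply(self, list(inputs), [out])
        out.owner, out.index = node, 0
        return node

def evaluate(var, feed):
    """Walk the graph backwards through the owner fields and compute a value."""
    if var.owner is None:         # an input variable: look up its value
        return feed[var.name]
    args = [evaluate(v, feed) for v in var.owner.inputs]
    return var.owner.op.fn(*args)

add = Op("add", lambda a, b: a + b)
x = Variable("dscalar", name="x")
y = Variable("dscalar", name="y")
z = add.make_node(x, y).outputs[0]
z.name = "z"

print(z.owner.op.name, [v.name for v in z.owner.inputs])
print(evaluate(z, {"x": 1.5, "y": 2.5}))
```

Starting from the output variable `z`, the `owner` field leads to the Apply node, whose `inputs` lead on to `x` and `y`. Input variables have no owner, which is where the traversal stops.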

TensorFlow or Theano?

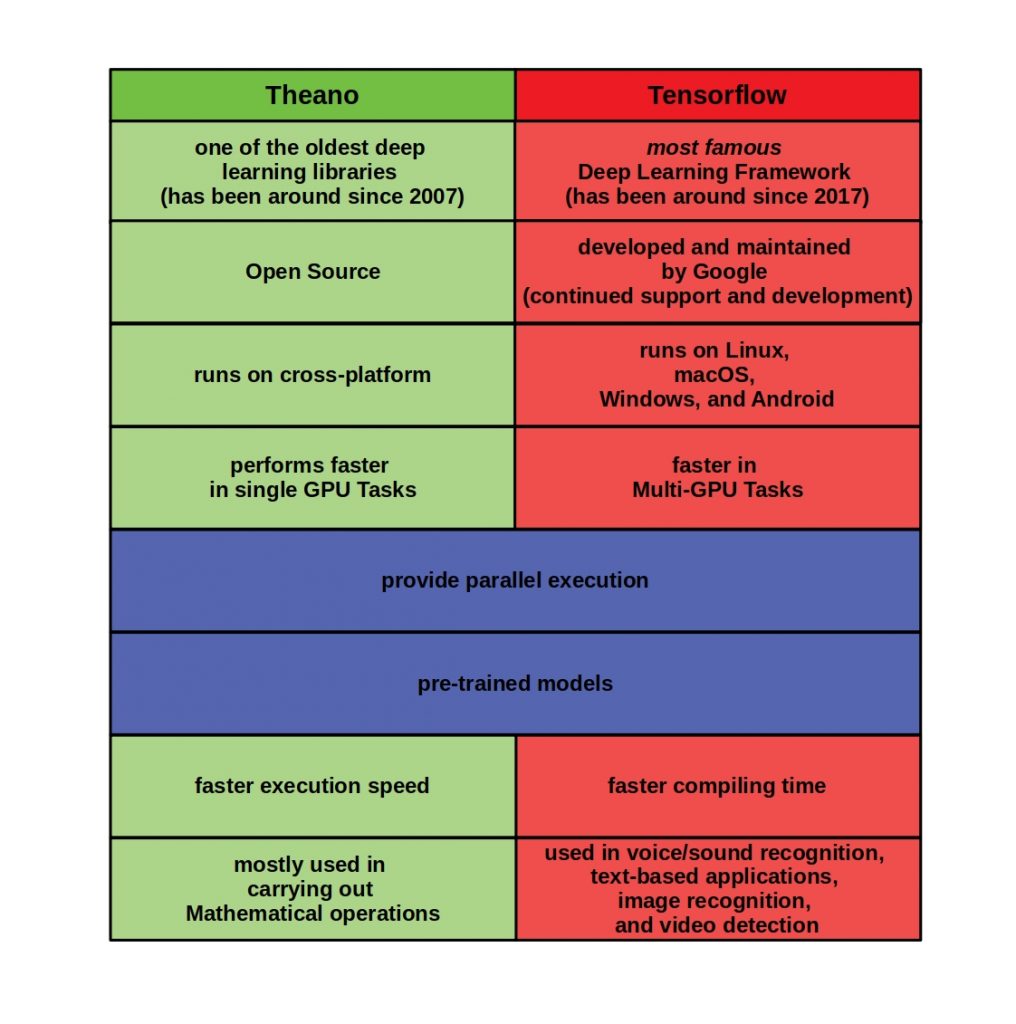

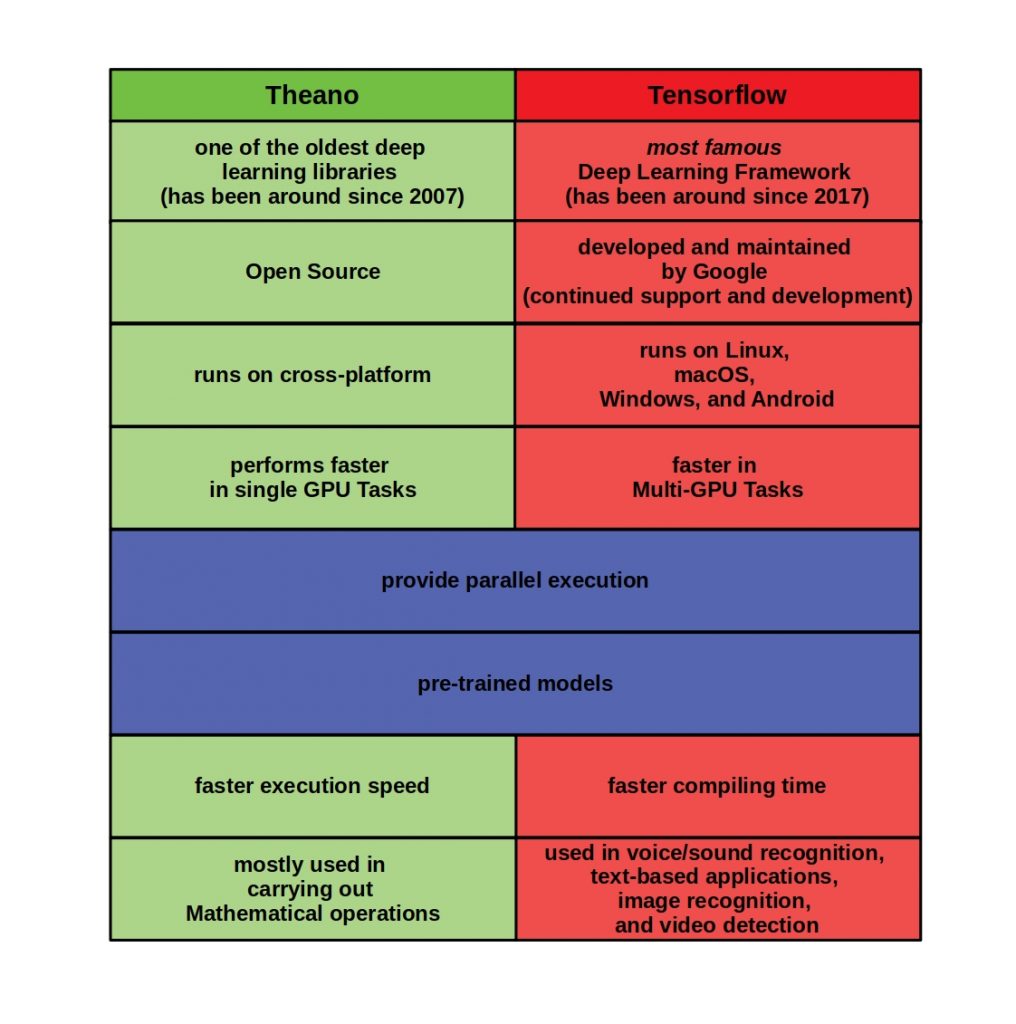

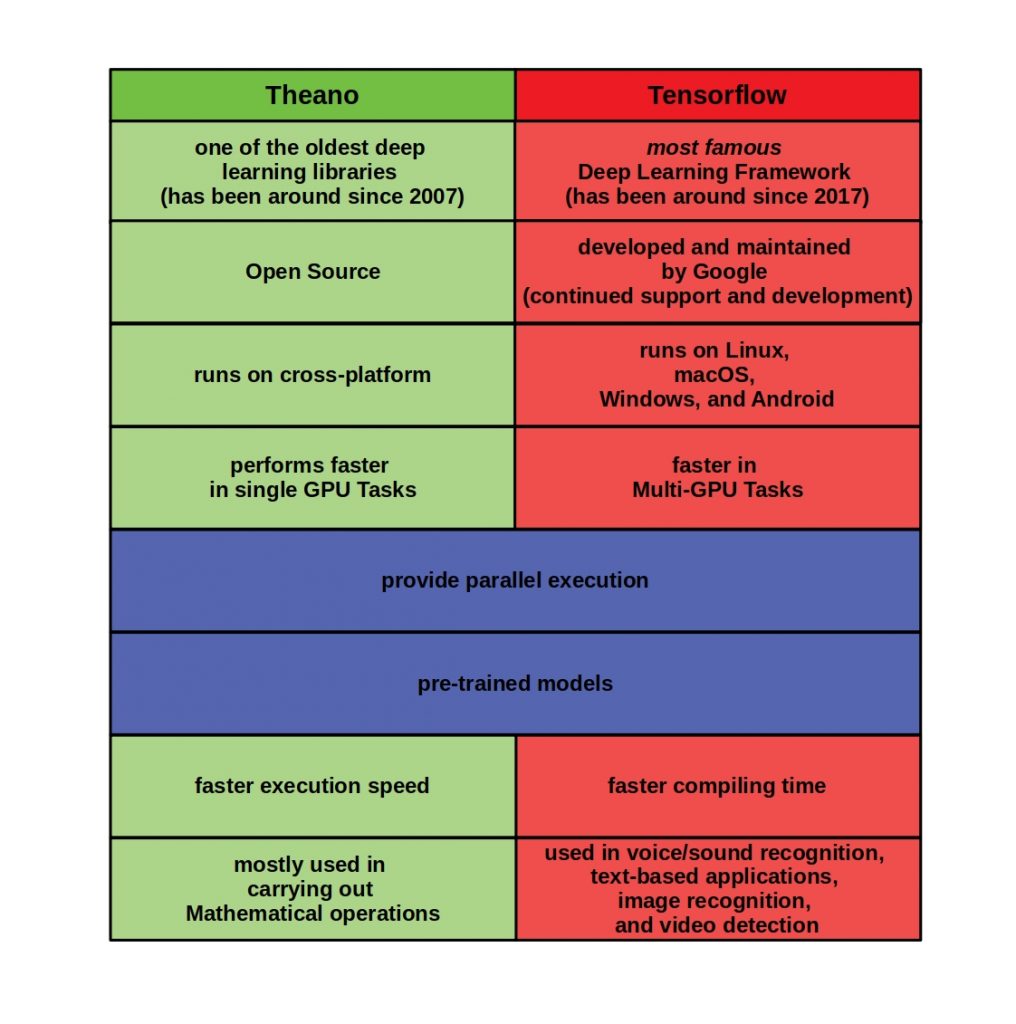

All in all, both technologies have their advantages and disadvantages, and both have their raison d’être. Here, too, the data set determines the choice of tool.

In the table below, we have listed all the important points of difference in detail.

Especially when it comes to tensor processing, as in image processing and sound recognition, TensorFlow with its optimized operations should be the first choice. Another tensor-based alternative to the Google solution is PyTorch from Facebook. We compare these two tools in a separate article.

Despite its age, Theano is a high-performance and modern alternative for the calculation of matrix expressions.
