Apache Hive Architecture – On the way to Industry 4.0, companies are trying to record all business processes as far as possible in order to subsequently optimize them through analysis.

Data warehouse systems provide central data management. Thus, only one data truth exists. In addition to persistence, these information systems take care of sorting, preprocessing, translation and data analysis.

If you want to know more about what a data warehouse system is, check out our article on the subject.

What is Apache Hive

Hive is a data warehousing software project and part of Apache, an open source and free web server software. Learn more about Apache here.

It is built on the Big Data framework Apache Hadoop and was released in 2010. Since then it has been continuously improved and extended by an industrious community.

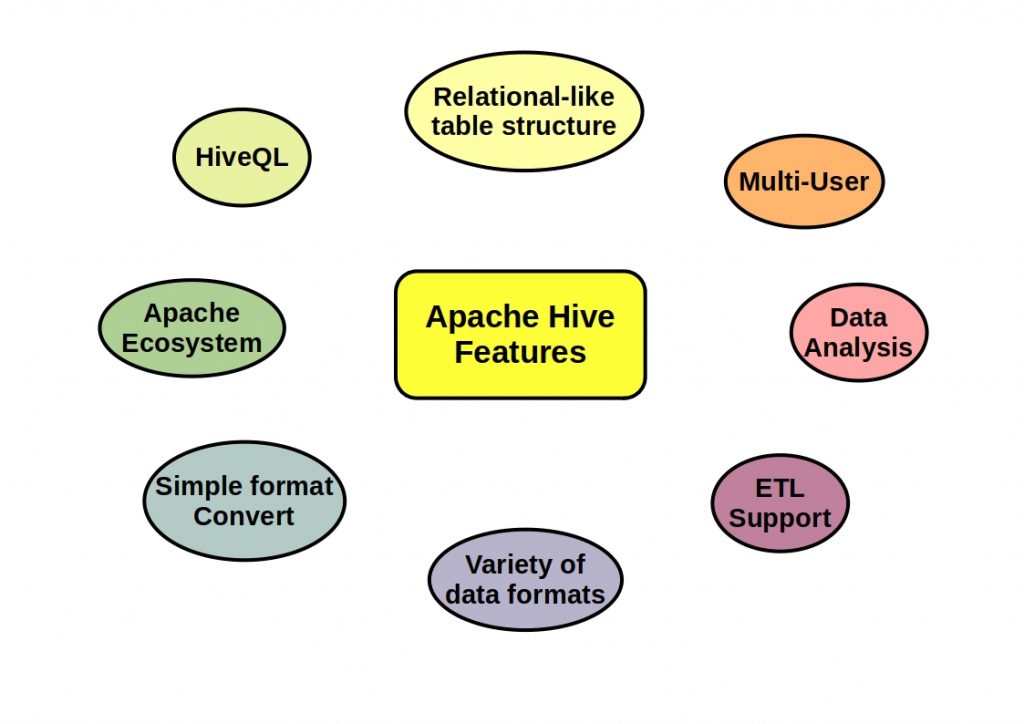

The query language used by Hive, called HiveQL, is SQL based and allows querying, aggregation and analysis of unstructured data. Hive does not work with the schema-on-write (SoW) approach like relational databases, but uses the so-called schema-on-read (SoR) approach.

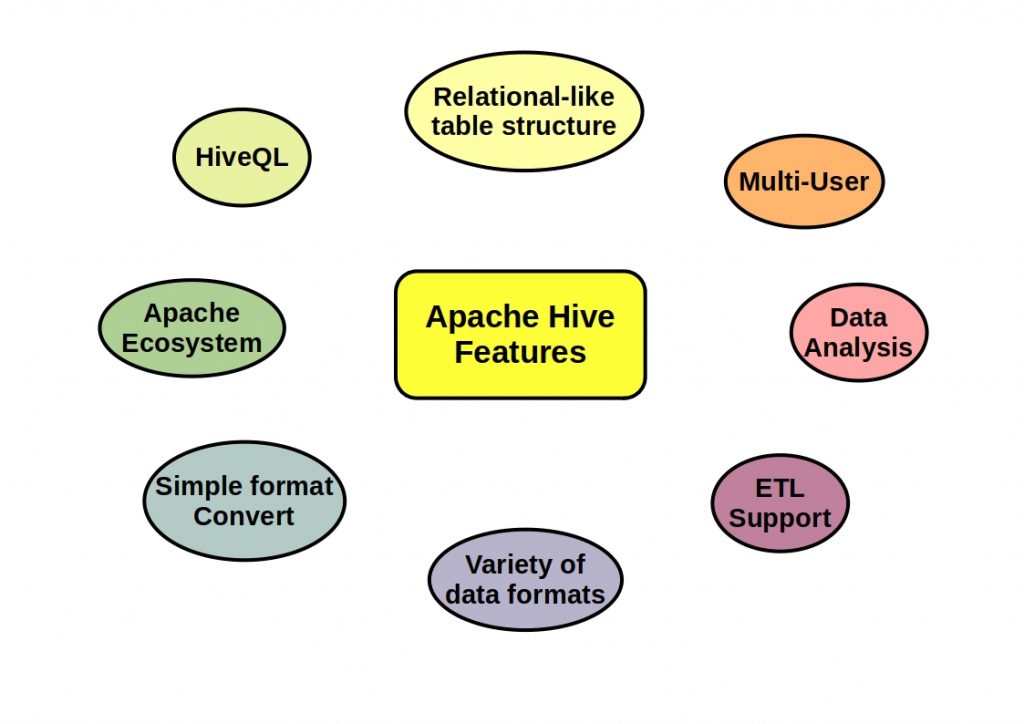

What are the biggest advantages of Hive?

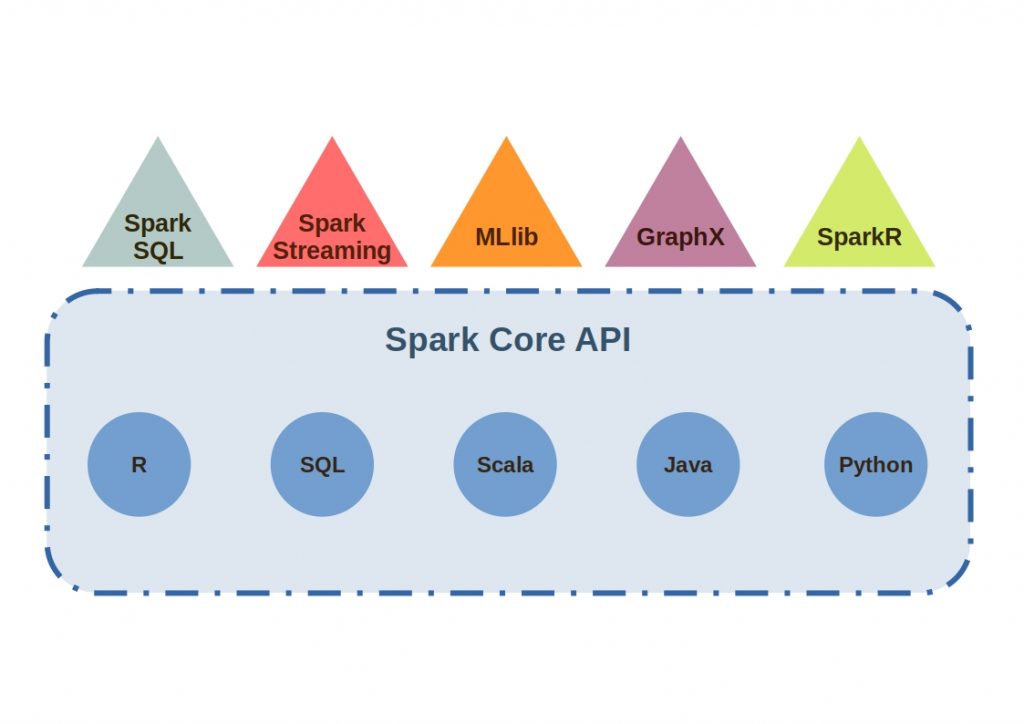

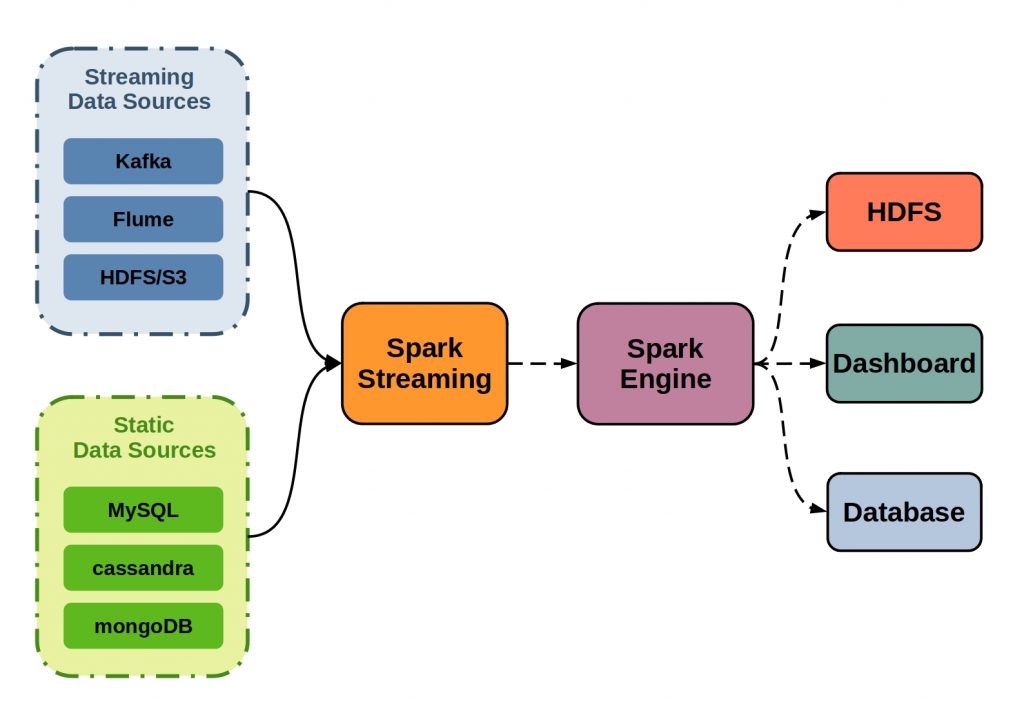

Data from relational databases is automatically converted into MapReduce or Tez or Spark jobs. Hadoopclusters are based on MapReduce, a Google programming model for concurrent computation on computer clusters, and powerful stream-based data analysis pipelines can be created with Apache Spark. This ensures full compatibility with the Apache ecosystem, which can be modularly tailored to the needs of an application.

Another advantage of Hive is that the tables are similar to the tables in a relational database. Data is queried using HiveQL. A declarative SQL-like language.

HiveQL allows multiple users to query data simultaneously. Hive supports a variety of data formats and provides a lightweight but powerful translation feature.

For data analysis, custom MapReduce processes can be written and run on clusters in parallel for high performance.

Apache Hive Architecture

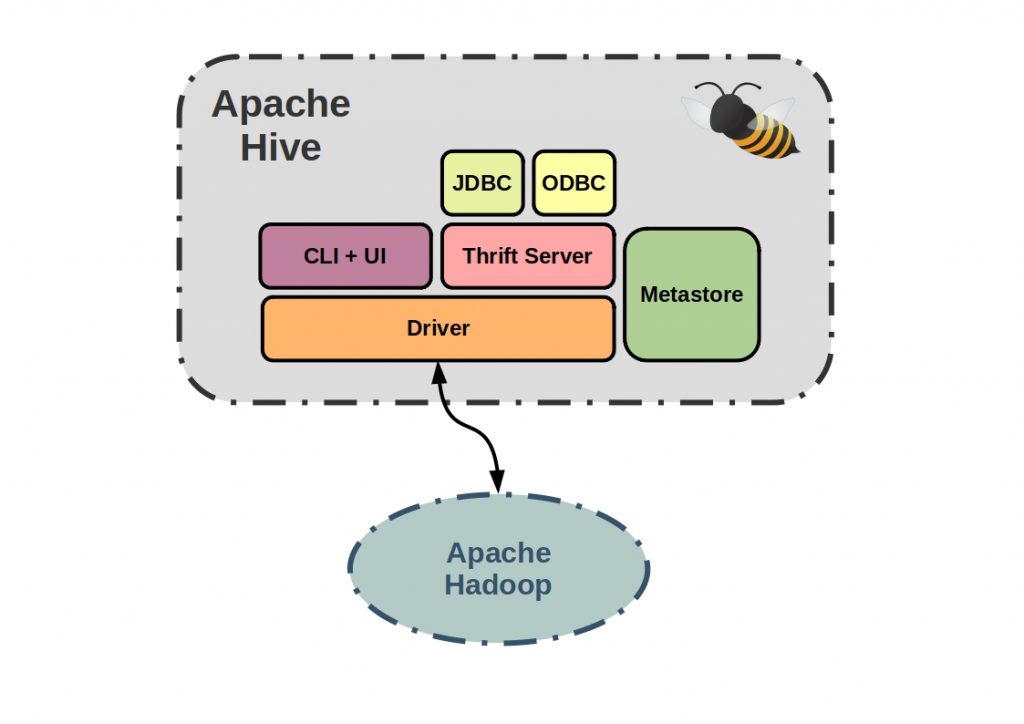

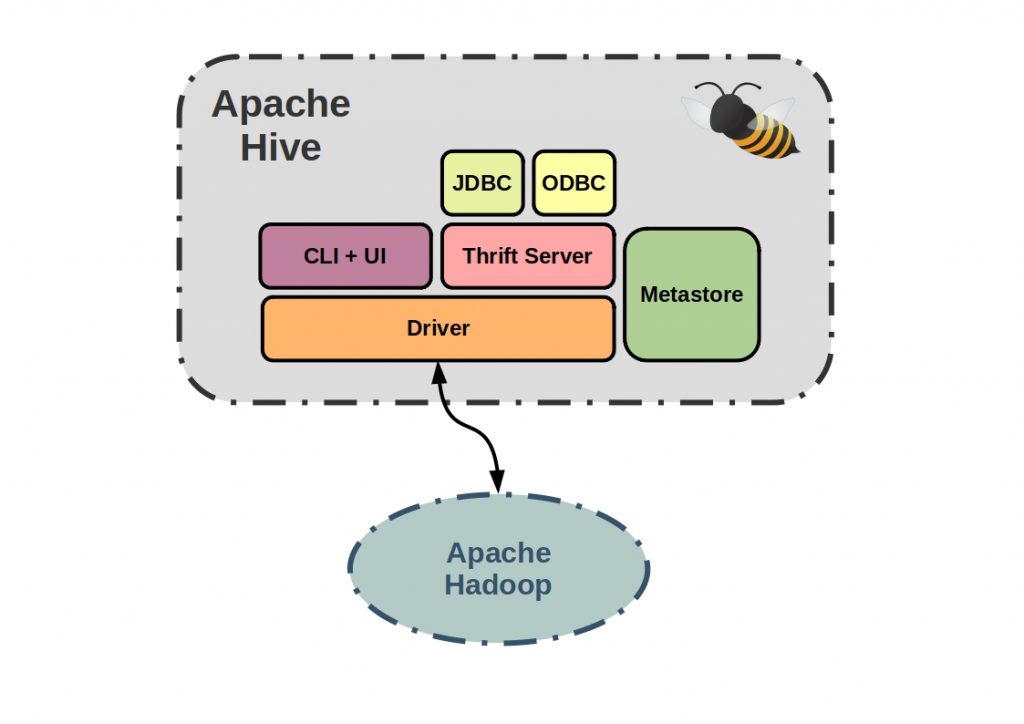

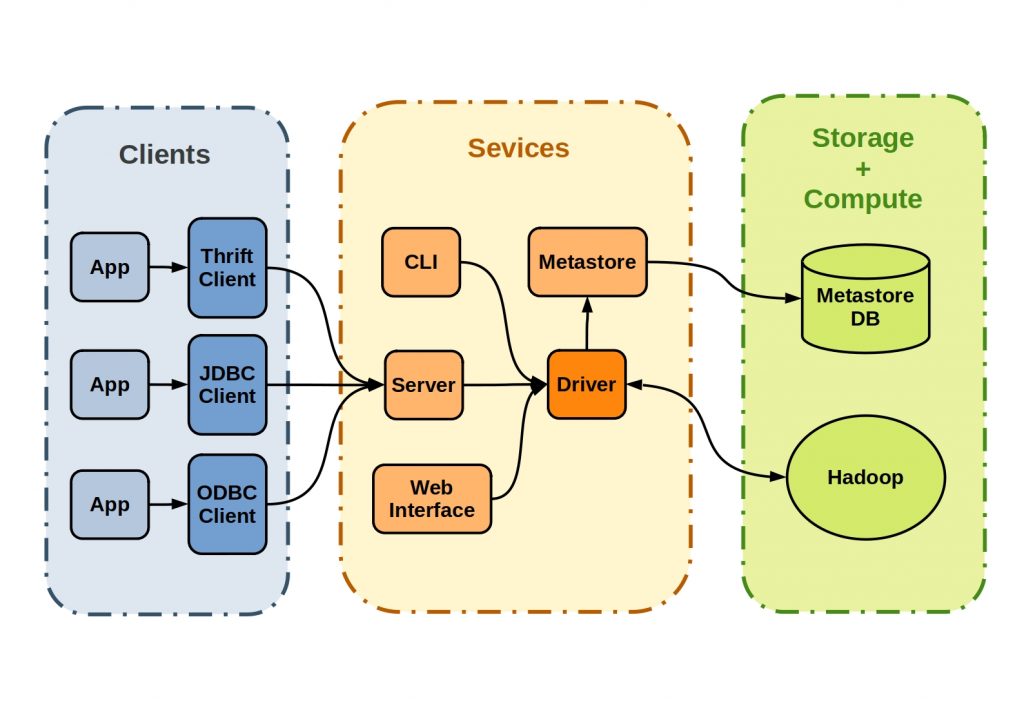

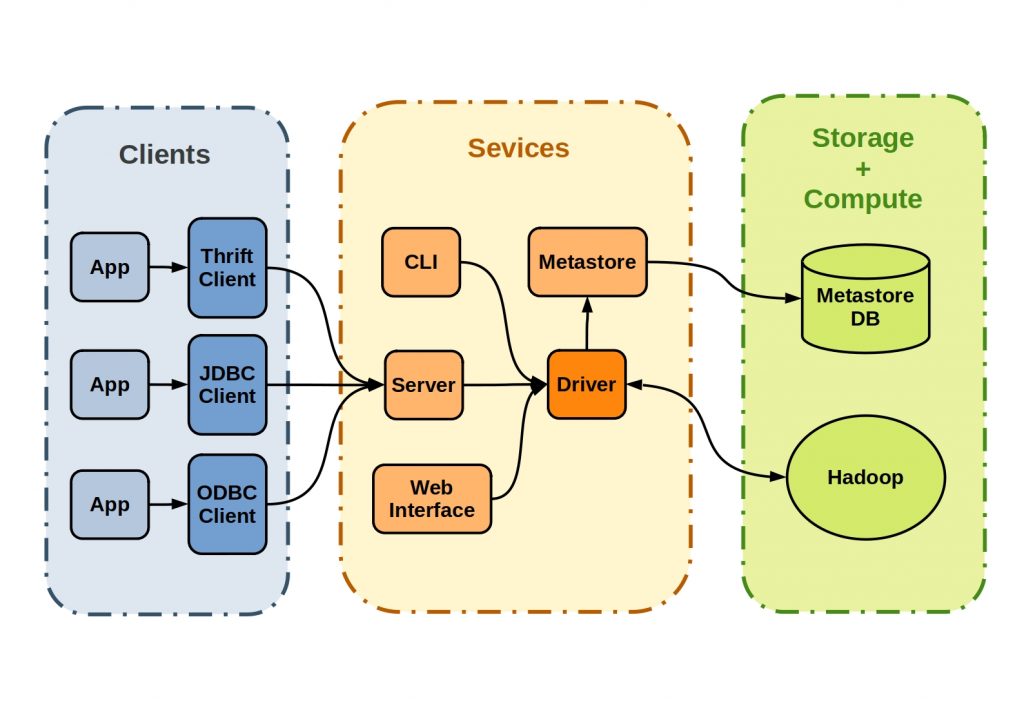

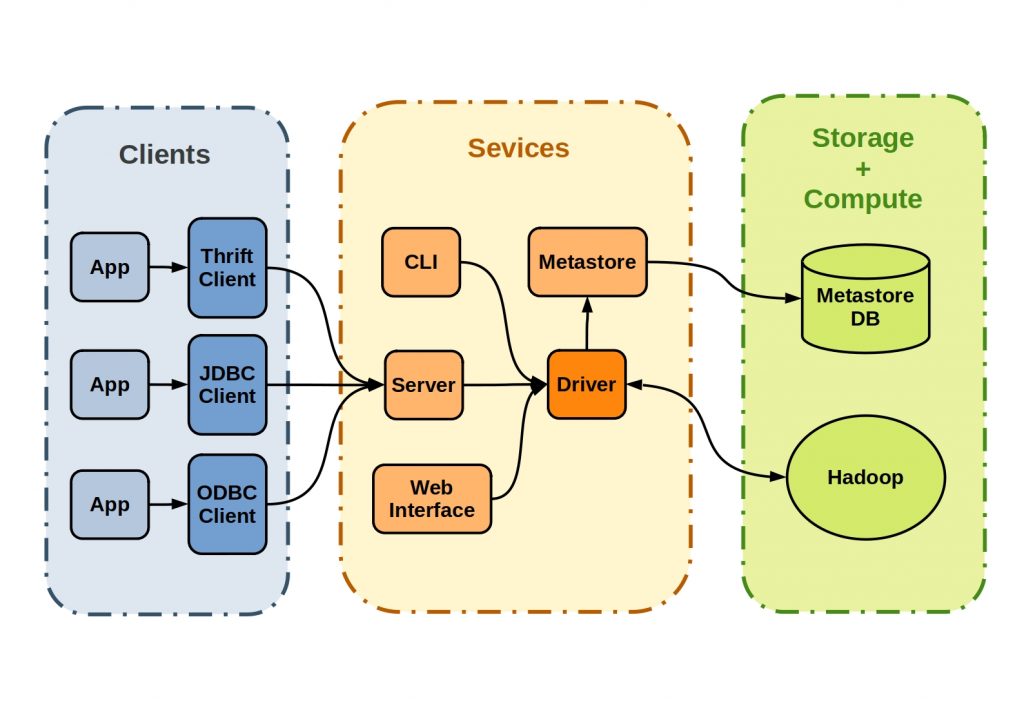

Basically, the architecture of Hive can be divided into three core areas. Hive communicates with other applications via the client area. The integration is then executed via the service area. In the last layer, Hive stores the metadata, for example, or computes the data via Hadoop.

Hive Clients

Apache Hive can be accessed via different clients. In addition to Open Database Connectivity (ODBC), an SQL-based application programming interface (API) created by Microsoft, there is Java Database Connectivity (JDBC), an SQL-based API developed by Sun Microsystems to allow Java applications to use SQL for database access. Hive also provides a high-performance Apache Thrift connection.

Hive Services

The core and central control of the Hive Services is the so-called driver. This

receives HiveQL commands and is responsible for their execution against the Hadoop system. It typically consists of a compiler that translates HiveQL requests into abstract syntax and executable tasks, an optimizer that aggregates, splits, and optimizes for better performance and scalability, and an executor that interacts with Hadoop’s job tracker and passes tasks to the system for execution.

Apache Hive also provides the ability to submit these tasks directly to the driver. Using the Command Line and User Interface (CLI + UI), it is possible to directly influence the process.

Metadata about persistent relational entities, i.e. databases, tables, columns and partitions are managed by the metastore.

Hive Storage and Computer

The metadata is stored here in a persistence. The results of the query and the data loaded into the tables are stored on HDFS in the Hadoop cluster.