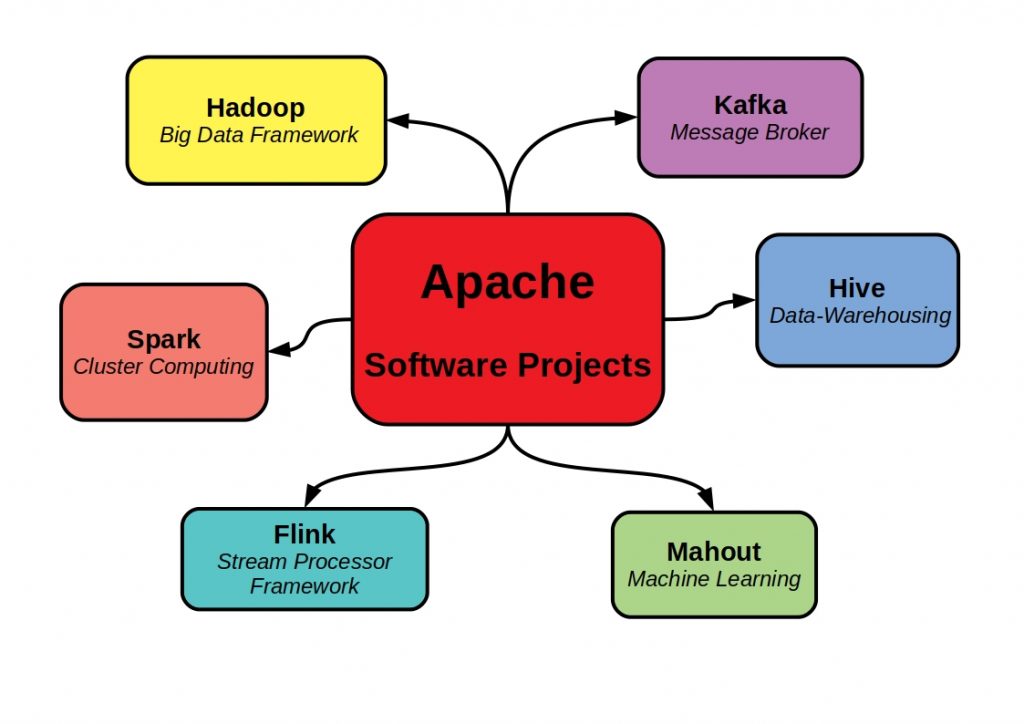

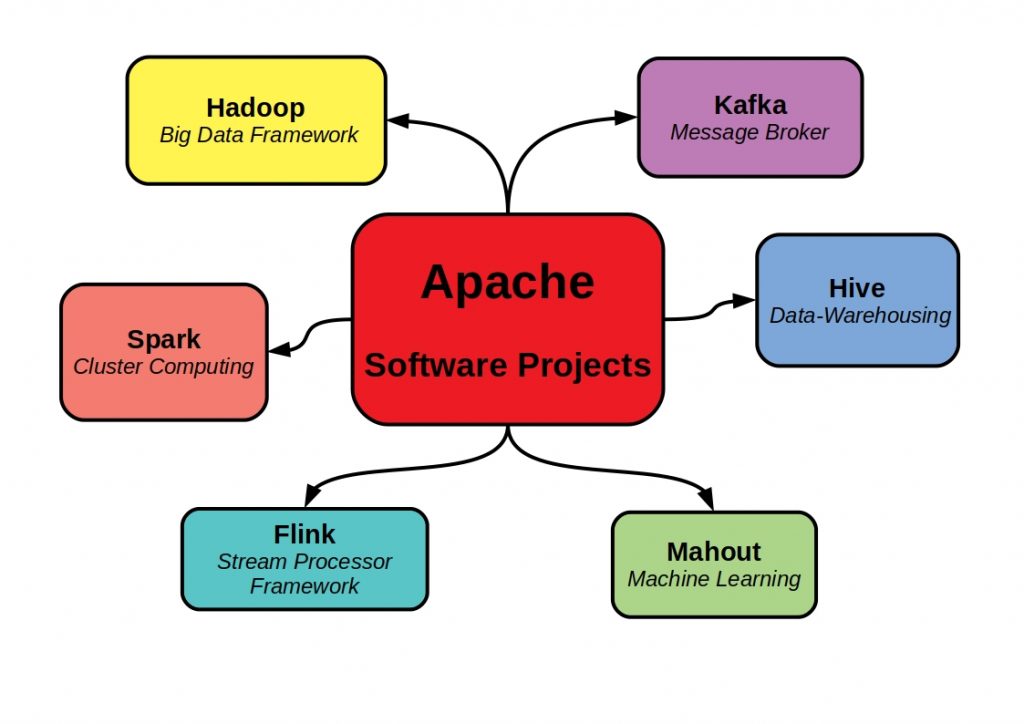

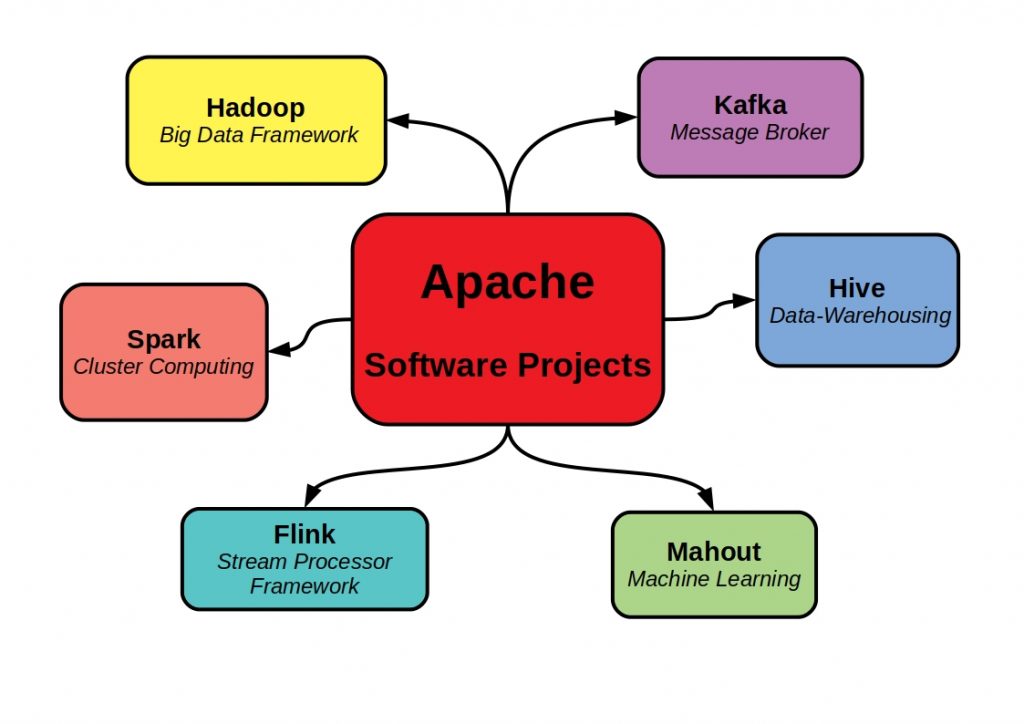

Apache Open Source Projects – Open source software has long been wrongly considered inferior to proprietary software, but many successful Apache open source projects now prove otherwise. They often offer more than one big, all-in-one solution: many of them can be used modularly to solve small problems, and they give you access to the know-how of many developers.

Especially in data science, many exciting projects based on the Python programming language have become established in recent years. They are built, maintained and continuously extended by large, very active communities, and they have since gained acceptance in the business world as well. For small companies in particular, cost-effective software plays a central role in their development. But even supposedly free software comes with license terms. One of the most widely used license models is the Apache License.

What is Apache?

The name Apache originally stands for the Apache HTTP Server, a free, open source web server that was first released in 1995 and is one of the earliest HTTP servers. Today, the Apache Software Foundation, which grew out of the community behind this server, supports over 350 open source projects. In these projects, developers from all over the world build software for a wide range of applications. The projects are maintained by large communities, are offered free of charge, and are continuously being extended.

Apache Open Source Projects and Industry 4.0

Companies produce millions of data points every day. Properly analyzed, this information can be used to derive valuable business strategies and increase productivity.

A decision made at management level should be implemented in production and, at the same time, remain controllable at every level. Ideally, the system should be able to perform its own analyses. AI algorithms can help to find sensible decisions despite increasing complexity. This allows you to optimize individual production steps and shorten life cycles.

Apache Kafka – Parallel Data Streaming of Big Data

With data streams, machine data can be read, processed and analyzed as it is created, without intermediate storage. This allows you to distribute large volumes of sensor data to individual microservices with high performance. The Apache ecosystem offers interfaces to many industrial data protocols (OPC UA, MQTT, …) and to machine controllers, and its individual projects are designed to work well together.

Kafka is a high-performance message broker. It lets many clients write and read in parallel, even at very high data frequencies. The data is persisted in so-called topics as append-only logs, similar to a flight recorder. Unlimited retention is possible, but Kafka was not originally designed for long-term storage: in older versions, the ZooKeeper dependency and the associated large metadata volume were a limiting factor. Newer Kafka versions replace ZooKeeper with the built-in KRaft consensus protocol. A major advantage of the message broker is that, unlike many databases, its performance does not depend on the stored data volume, because records are only appended to the log and read from it sequentially.
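To make the log model concrete, the following minimal Python sketch imitates how a topic behaves: records are appended to partitions, each record receives an offset, and every consumer tracks its own read position. It is a toy model under stated assumptions, not Kafka's client API; the classes `Topic` and `Consumer` and the topic name `machine-sensors` are hypothetical, and CRC32 merely stands in for Kafka's real key partitioner.

```python
import zlib
from collections import defaultdict


class Topic:
    """Hypothetical toy model of a Kafka topic: append-only partition logs."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> tuple:
        # Same key -> same partition, so the order per key is preserved.
        # (Kafka's default partitioner uses murmur2; crc32 is only a stand-in.)
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def read(self, partition: int, offset: int, max_records: int = 10) -> list:
        # Reading never deletes records; retention would be a separate policy.
        return self.partitions[partition][offset:offset + max_records]


class Consumer:
    """Each consumer (group) keeps its own read offset per partition."""

    def __init__(self, topic: Topic):
        self.topic = topic
        self.offsets = defaultdict(int)

    def poll(self, partition: int) -> list:
        records = self.topic.read(partition, self.offsets[partition])
        self.offsets[partition] += len(records)  # "commit" the new position
        return records


topic = Topic("machine-sensors", num_partitions=3)
for i in range(6):
    partition, offset = topic.produce("press-01", f"temp={20 + i}")

dashboard, archiver = Consumer(topic), Consumer(topic)
print(len(dashboard.poll(partition)))  # 6
print(len(archiver.poll(partition)))   # 6: independent offsets, data is still there
print(dashboard.poll(partition))       # []: the dashboard is up to date
```

Because reading does not remove anything from the log, a dashboard and an archiving service can consume the same sensor stream independently and at their own pace. In a real deployment you would use a client library such as `kafka-python` or `confluent-kafka` against a running broker.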

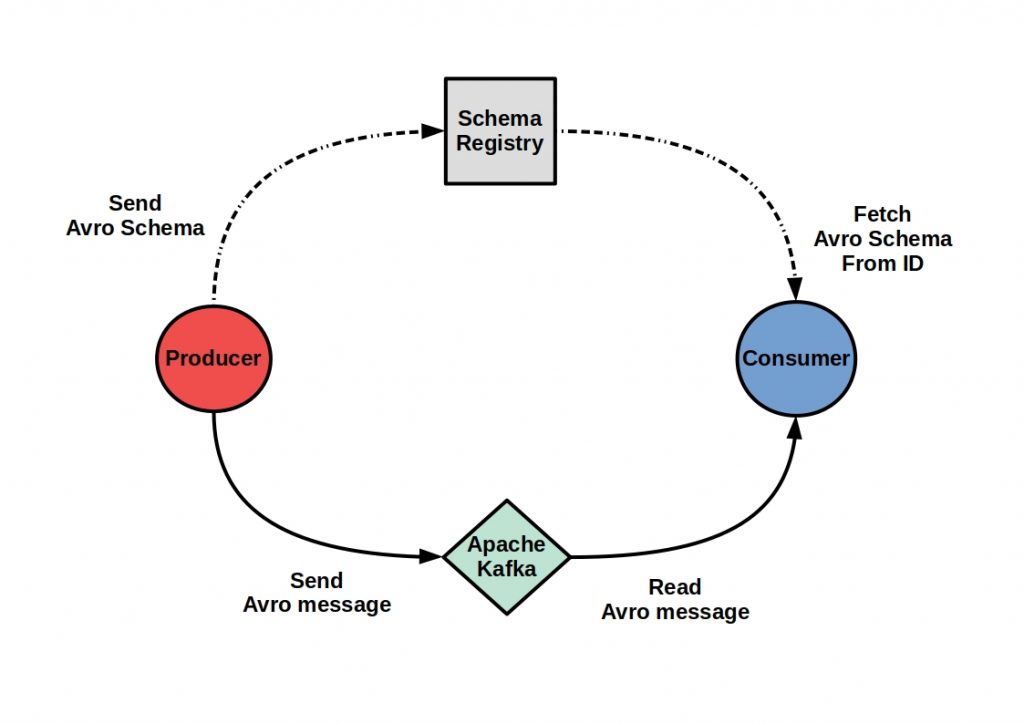

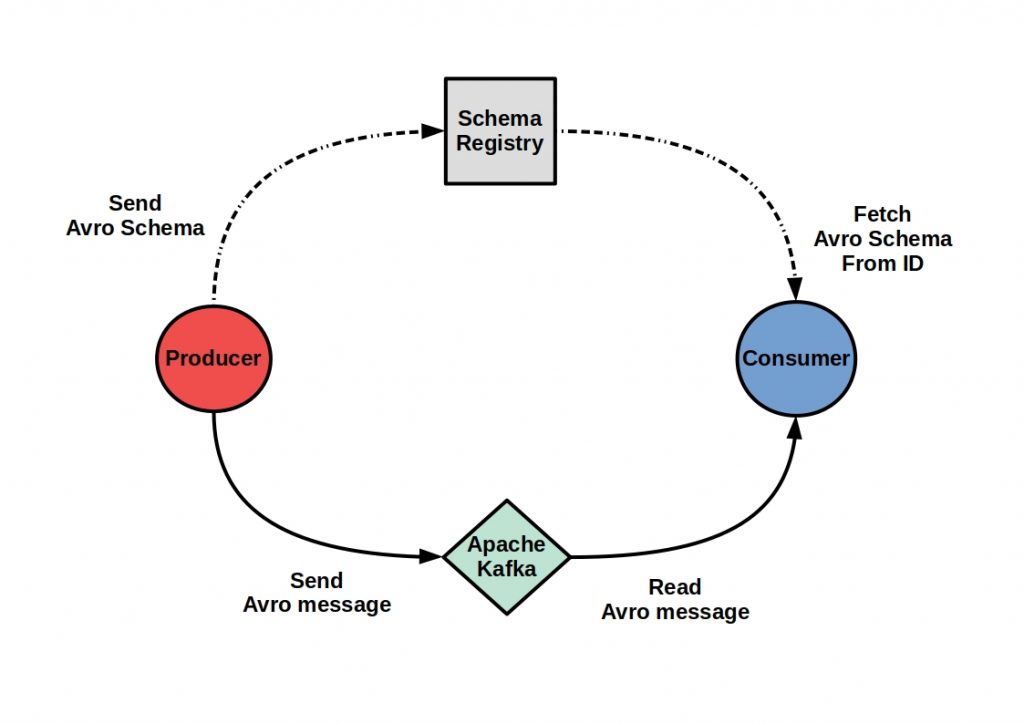

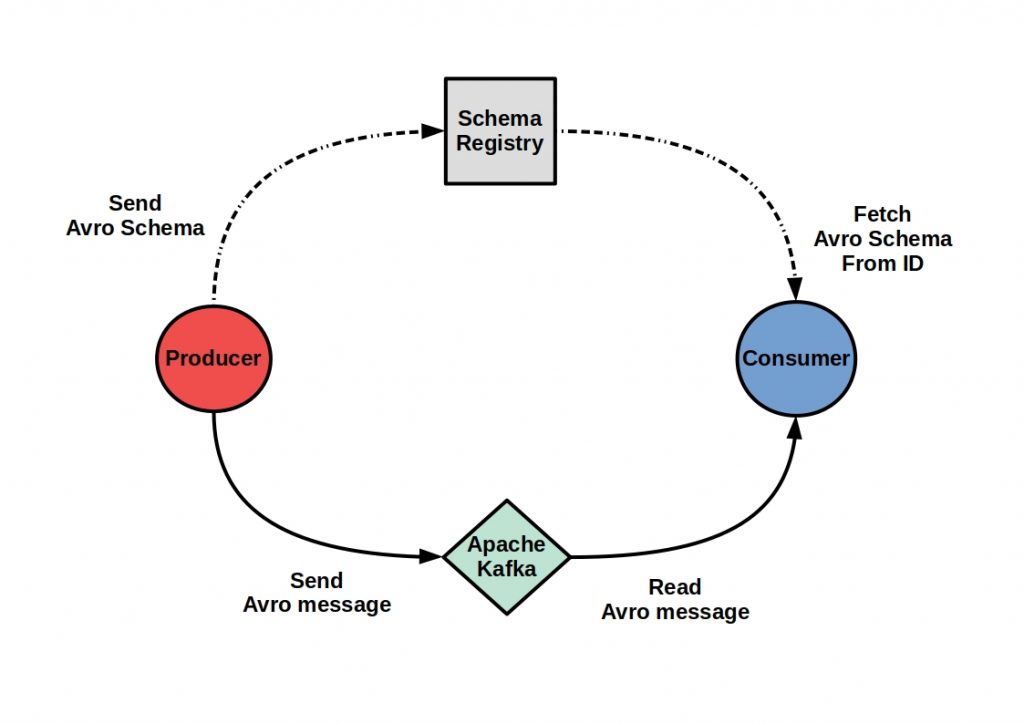

Apache Avro – The Data Serialization Framework for your Kafka Schema Registry

In times of Big Data, every computing step has to be optimized. At high data throughput, even short processing times per record add up to large delays, and bulky data formats tie up too many resources. Speed and the most compact possible storage format are therefore decisive. Avro is developed by the Apache community and optimized for Big Data use, offering you a fast and space-saving open source solution.

With Apache Avro, you get not only a framework for remote procedure calls (RPC) but also one for data serialization. On the one hand, this allows you to call functions in other address spaces; on the other, it lets you convert data into a compact binary format or a JSON-based text format. This duality gives you advantages in cross-network data pipelines and is explained by Avro's history.
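Why the binary format is so compact can be shown with a minimal sketch of Avro's binary encoding, written from the rules in the Avro specification: `int` and `long` values are zig-zag encoded and then written as variable-length integers, strings are a length prefix followed by UTF-8 bytes, and a record is simply its field values concatenated in schema order. This is not the `avro` or `fastavro` library API; the helper functions and the `SensorReading` schema are hypothetical, and floating-point types are left out.

```python
import json


def encode_long(n: int) -> bytes:
    """Avro int/long: zig-zag encoding, then base-128 varint (low 7 bits first)."""
    z = (n << 1) ^ (n >> 63)  # zig-zag: 0->0, -1->1, 1->2, -2->3, ...
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # more bytes follow: set continuation bit
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Avro string: byte length as a long, followed by the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


# Hypothetical schema for a sensor reading (Avro schemas are plain JSON).
SCHEMA = {
    "type": "record",
    "name": "SensorReading",
    "fields": [
        {"name": "machine_id", "type": "string"},
        {"name": "timestamp", "type": "long"},
        {"name": "temperature_mc", "type": "long"},  # milli-degrees Celsius
    ],
}


def encode_record(record: dict, schema: dict = SCHEMA) -> bytes:
    """Avro record: field values in schema order; no field names are written."""
    encoders = {"string": encode_string, "long": encode_long}
    return b"".join(encoders[f["type"]](record[f["name"]]) for f in schema["fields"])


reading = {"machine_id": "press-01", "timestamp": 1700000000000, "temperature_mc": 21500}
binary = encode_record(reading)
as_json = json.dumps(reading).encode()
print(len(binary), len(as_json))  # 18 79
```

The binary form is smaller mainly because field names are not repeated in every record and small numbers take only one or two bytes. The price is that a reader needs the writer's schema to decode the bytes, which is why Avro and a schema registry go hand in hand.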

Avro was started in 2009 as a subproject of Apache Hadoop. There, it was intended to provide both a serialization format for persisting data and a transfer format for communication between Hadoop nodes. To support this communication within a Hadoop cluster, Avro needed to be able to call functions in other address spaces. Because it serializes large amounts of data efficiently, Avro is now also widely used independently of Hadoop.

When you use Avro schemas with a Kafka schema registry (such as the Confluent Schema Registry), you get comprehensive, flexible schema evolution: you can add fields (with default values) and delete fields.

Even renaming fields is allowed within certain limits, for example via aliases. As long as these rules are followed, Avro schemas remain backward and forward compatible, which means that the schema versions used by reader and writer may differ. Alternatives to Avro include Google Protocol Buffers and Apache Thrift. However, Avro is a particularly popular choice, not least because its schemas are defined in JSON.
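The compatibility rules can be illustrated with a simplified sketch of Avro's schema resolution: a field that only the reader knows must carry a default, writer fields unknown to the reader are ignored, and reader-side aliases allow renames. The `resolve` helper and both schemas are hypothetical and work on already-decoded dictionaries; real Avro libraries apply these rules while decoding and also handle nested types and type promotion, which are omitted here.

```python
# Hypothetical old (writer) and new (reader) versions of the same schema.
WRITER = {
    "type": "record", "name": "SensorReading",
    "fields": [
        {"name": "machine_id", "type": "string"},
        {"name": "temp", "type": "long"},
        {"name": "debug_info", "type": "string"},
    ],
}
READER = {
    "type": "record", "name": "SensorReading",
    "fields": [
        {"name": "machine_id", "type": "string"},
        # Renamed field: the alias maps the old writer name to the new name.
        {"name": "temperature_mc", "type": "long", "aliases": ["temp"]},
        # Added field: compatible only because it declares a default.
        {"name": "site", "type": "string", "default": "unknown"},
    ],
}


def resolve(record: dict, writer: dict, reader: dict) -> dict:
    """Simplified Avro-style resolution for flat records (no type promotion)."""
    writer_names = {f["name"] for f in writer["fields"]}
    result = {}
    for f in reader["fields"]:
        candidates = [f["name"], *f.get("aliases", [])]
        source = next((n for n in candidates if n in writer_names), None)
        if source is not None:
            result[f["name"]] = record[source]
        elif "default" in f:
            result[f["name"]] = f["default"]
        else:
            raise ValueError(f"field {f['name']!r} missing in writer and has no default")
    # Writer fields unknown to the reader (here: debug_info) are ignored.
    return result


old_record = {"machine_id": "press-01", "temp": 21500, "debug_info": "fw 1.2"}
print(resolve(old_record, WRITER, READER))
# {'machine_id': 'press-01', 'temperature_mc': 21500, 'site': 'unknown'}
```

The same mechanism works in the other direction: an old reader simply ignores fields added by a newer writer, which is what makes forward compatibility possible.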

Apache Spark – High-performance Cluster Computations on static and streaming Data Sources

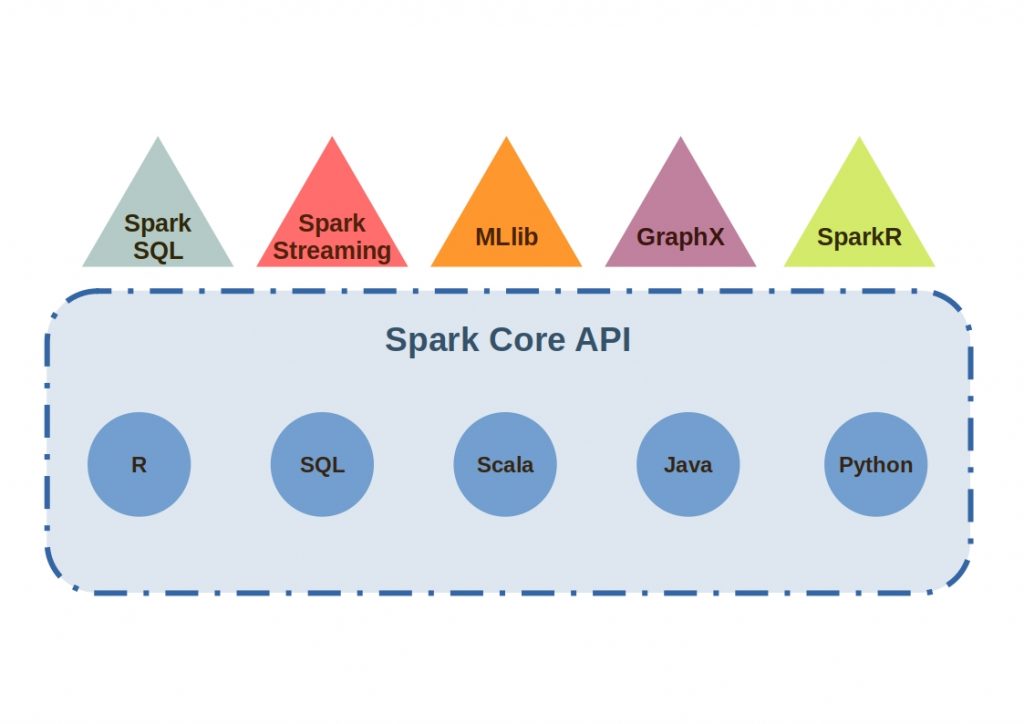

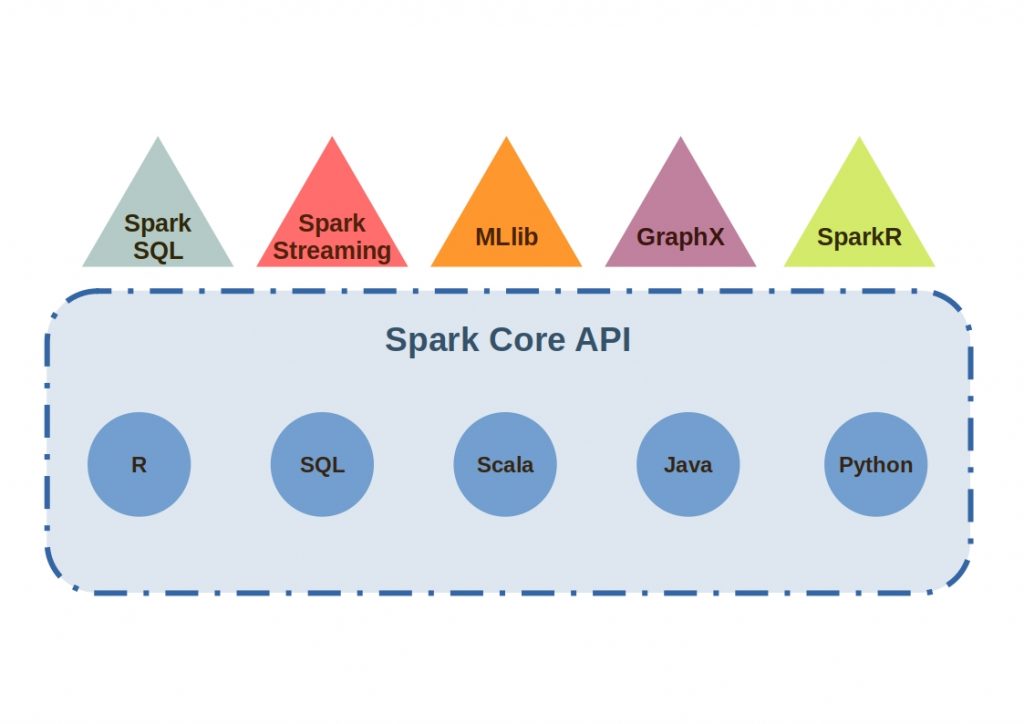

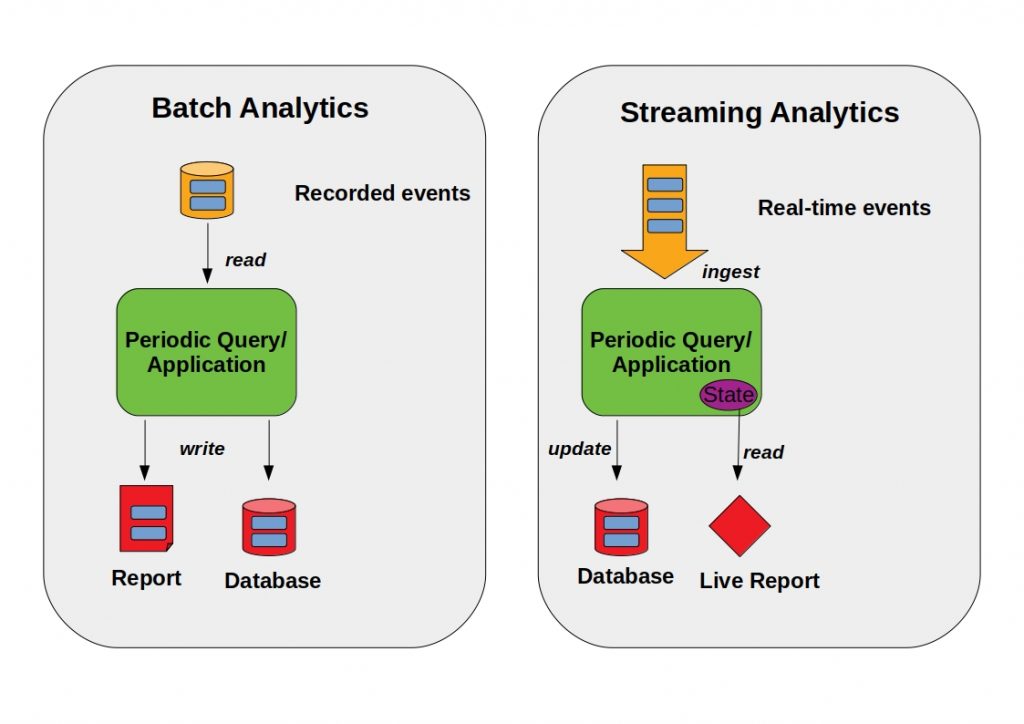

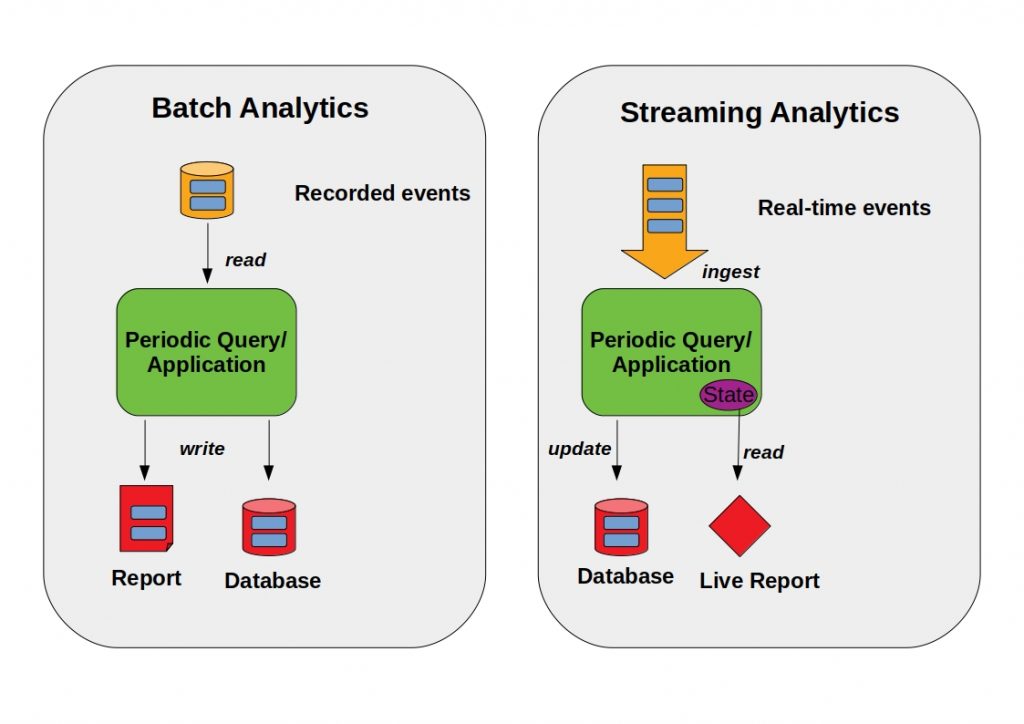

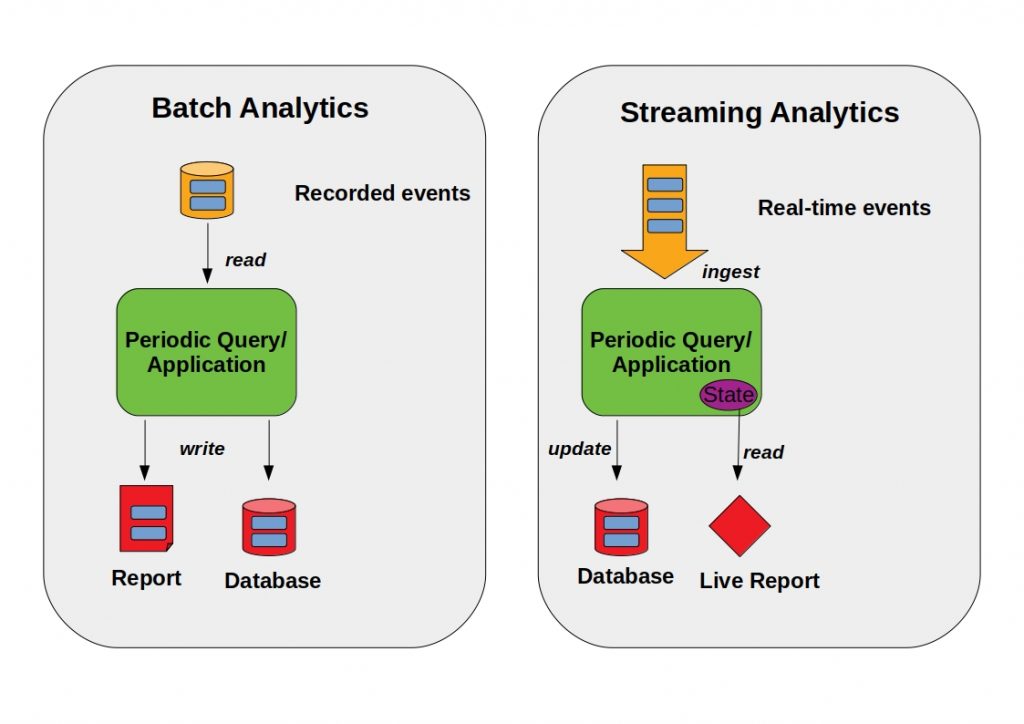

Apache Spark enables high-performance analysis algorithms on static as well as streaming data sources. The framework has become one of the most important unified analytics engines on the market today.

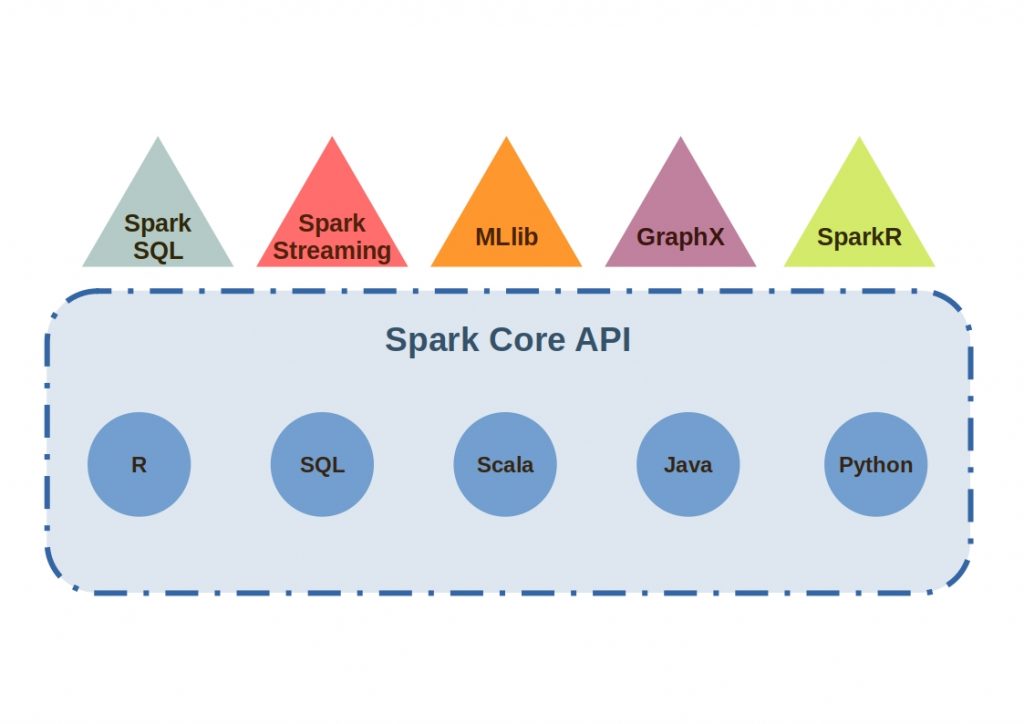

Spark includes libraries for various tasks, from SQL to machine learning, all of which are integrated via the Spark Core.

Spark distributes the individual tasks across executors on the nodes of a cluster, which prevents load peaks on any single machine. The work is coordinated by the driver program via the so-called SparkContext object, which connects to the cluster manager.

Spark Core is the underlying unified computing engine on which all Spark functions are built. It enables parallel processing even for very large data sets and keeps intermediate results in memory where possible, which makes processing very fast.
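The divide-and-combine pattern behind this can be sketched in plain Python. This is an analogy, not Spark code: a thread pool stands in for the executors, nothing is distributed across machines, and the functions `partition`, `local_stats`, `merge` and `run_job` are hypothetical stand-ins for Spark's partitioning and aggregation steps.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(data, num_slices):
    """Split data into num_slices nearly equal slices (cf. sc.parallelize)."""
    size, rest = divmod(len(data), num_slices)
    slices, start = [], 0
    for i in range(num_slices):
        end = start + size + (1 if i < rest else 0)
        slices.append(data[start:end])
        start = end
    return slices


def local_stats(rows):
    """Runs once per partition and returns a partial (count, sum, max)."""
    return len(rows), sum(rows), max(rows, default=float("-inf"))


def merge(a, b):
    """Combines two partial results; associative and commutative, so any merge order works."""
    return a[0] + b[0], a[1] + b[1], max(a[2], b[2])


def run_job(data, num_slices=4):
    """'Driver': splits the work, lets the pool ('executors') process it, merges."""
    with ThreadPoolExecutor(max_workers=num_slices) as pool:
        partials = list(pool.map(local_stats, partition(data, num_slices)))
    count, total, peak = reduce(merge, partials)
    return count, total / count, peak  # assumes non-empty data


readings = [float(x % 50) for x in range(1_000)]
print(run_job(readings))  # (1000, 24.5, 49.0)
```

Only the small partial results travel back to the driver, not the raw data. In PySpark, the splitting would be done by `sc.parallelize(readings, 4)` on a `SparkContext`, and the per-partition and merge steps correspond roughly to `RDD.aggregate`.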

Apache Flink – Continuous Analysis based on Data Streams

To obtain analysis results in real time, data streams can be analyzed continuously, without first persisting the data and without significantly reducing the flow rate. Apache Flink is an open source stream processing framework that can process and analyze high-volume data streams with low latency and high throughput.

The framework consumes data streams directly via its DataStream API, processes them, and passes the results on to various storage systems or, via custom APIs, to a UI.
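A typical continuous analysis is a windowed aggregation. The plain-Python generator below sketches a tumbling (fixed-size, non-overlapping) event-time window that emits per-machine averages as soon as a window closes, without storing the stream. It is a conceptual sketch, not Flink's DataStream API: it assumes events arrive in timestamp order (Flink uses watermarks to cope with out-of-order data), and the event layout and machine IDs are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str, float]  # hypothetical layout: (timestamp ms, machine id, value)


def tumbling_window_avg(events: Iterable[Event], window_ms: int) -> Iterator[tuple]:
    """Yield (window_start, machine, average) as soon as a window is complete.

    Assumes events arrive in timestamp order (no watermarks, no late data).
    """
    current = None                        # start of the window being filled
    acc = defaultdict(lambda: [0.0, 0])   # machine -> [sum, count]
    for ts, machine, value in events:
        start = ts - ts % window_ms
        if current is not None and start != current:
            # A new window began, so the previous one is final: emit and reset.
            for m, (s, n) in sorted(acc.items()):
                yield current, m, s / n
            acc.clear()
        current = start
        acc[machine][0] += value
        acc[machine][1] += 1
    for m, (s, n) in sorted(acc.items()):  # flush the last window at end of stream
        yield current, m, s / n


stream = [(0, "press-01", 20.0), (400, "press-01", 22.0), (900, "press-02", 30.0),
          (1100, "press-01", 25.0), (1500, "press-02", 31.0)]
for result in tumbling_window_avg(stream, window_ms=1000):
    print(result)
# (0, 'press-01', 21.0)
# (0, 'press-02', 30.0)
# (1000, 'press-01', 25.0)
# (1000, 'press-02', 31.0)
```

Because the function is a generator, it also works on an endless stream: only the state of the open window is kept in memory. In Flink itself, the same logic would be expressed roughly with `keyBy`, a tumbling event-time window assigner and an aggregate function, while Flink additionally handles state, fault tolerance and parallelism.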

Apache Mahout – Machine Learning with a Focus on Linear Algebra

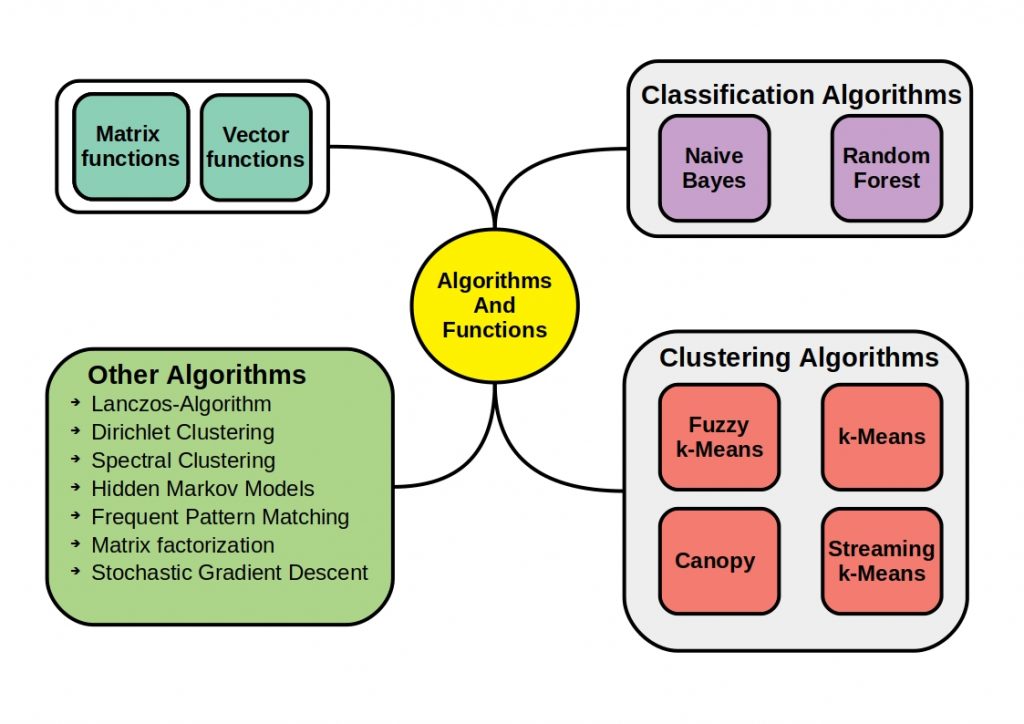

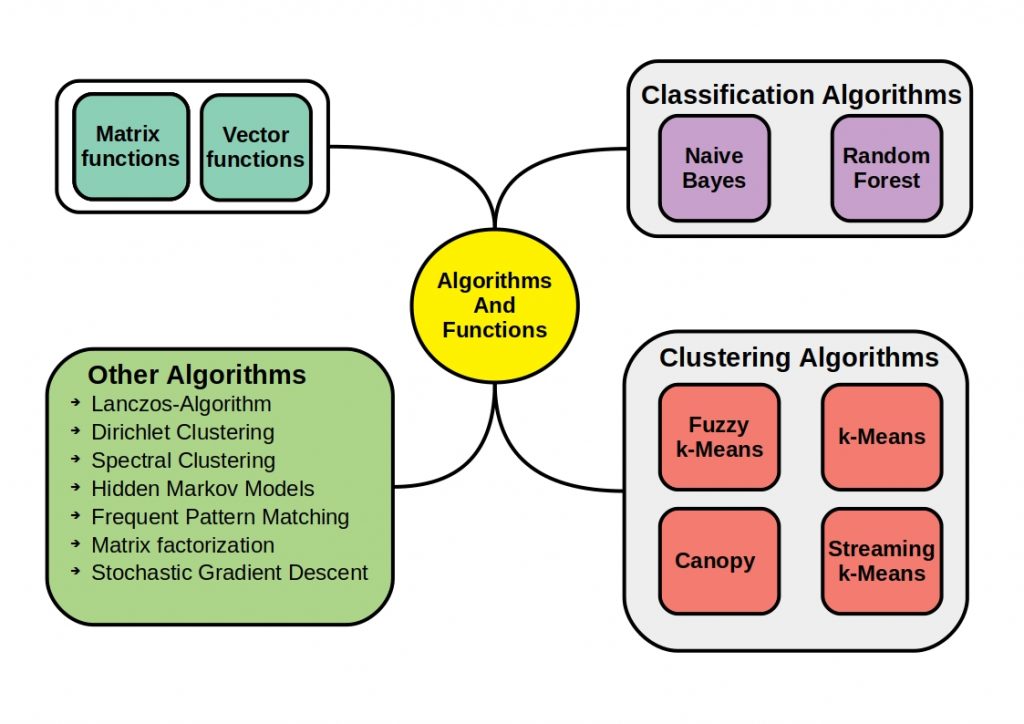

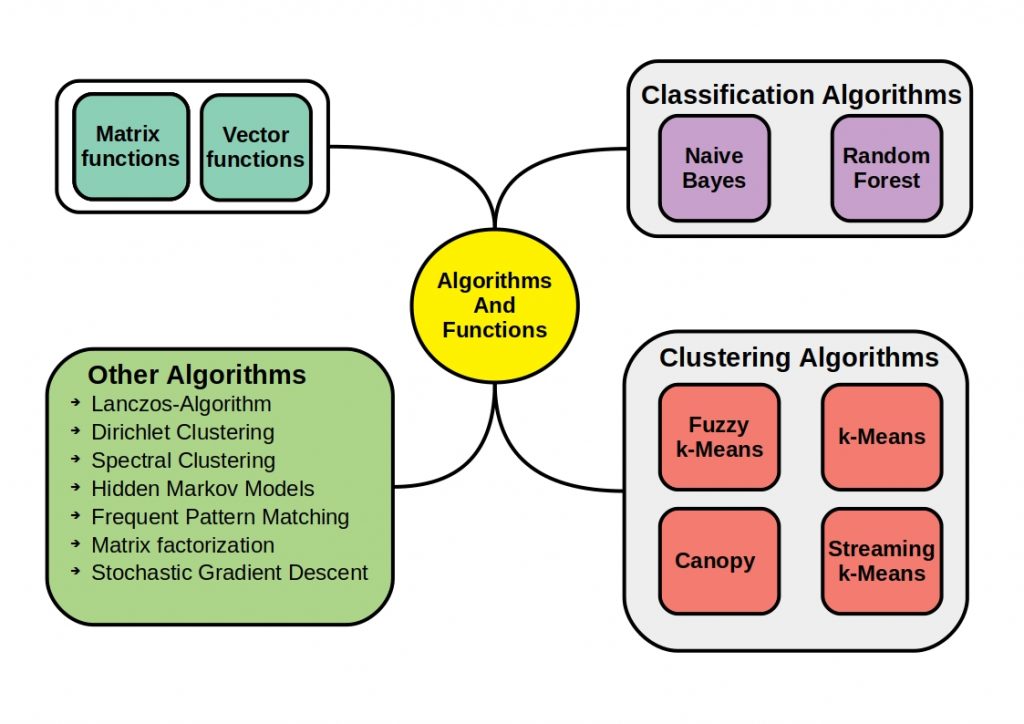

Apache Mahout is an open source machine learning project that builds implementations of scalable machine learning algorithms with a focus on linear algebra.

Mahout is suited to Big Data applications and statistical computing and provides an R-like domain-specific language (DSL).

The algorithms are scalable and cover a large share of common machine learning methods.
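A typical building block of distributed linear algebra is the product AᵀA of a tall matrix A, used for example in least squares or principal component analysis. Because AᵀA equals the sum of A_kᵀA_k over the horizontal row blocks A_k, each worker can process its own block, and only small n×n partial results need to be combined. The pure-Python sketch below shows only this decomposition and is not Mahout code; the helper names are hypothetical. In Mahout's Scala DSL (Samsara), the same expression is written roughly as `drmA.t %*% drmA` on a distributed row matrix.

```python
def gram_of_block(block):
    """Gram matrix of one row block: block^T * block (n x n for n columns)."""
    cols = len(block[0])
    return [[sum(row[i] * row[j] for row in block) for j in range(cols)]
            for i in range(cols)]


def add(x, y):
    """Element-wise sum of two equally sized matrices."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


def distributed_ata(row_blocks):
    """A^T A = sum over k of A_k^T A_k, where A_k are row blocks of A.

    In a real system each block product would run on a different worker;
    here they are computed one after another.
    """
    partials = [gram_of_block(b) for b in row_blocks]
    result = partials[0]
    for p in partials[1:]:
        result = add(result, p)
    return result


A = [[1, 2], [3, 4], [5, 6]]
print(distributed_ata([A[:2], A[2:]]))  # [[35, 44], [44, 56]]
```

Splitting A into blocks does not change the result, which is exactly what makes the computation easy to parallelize.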

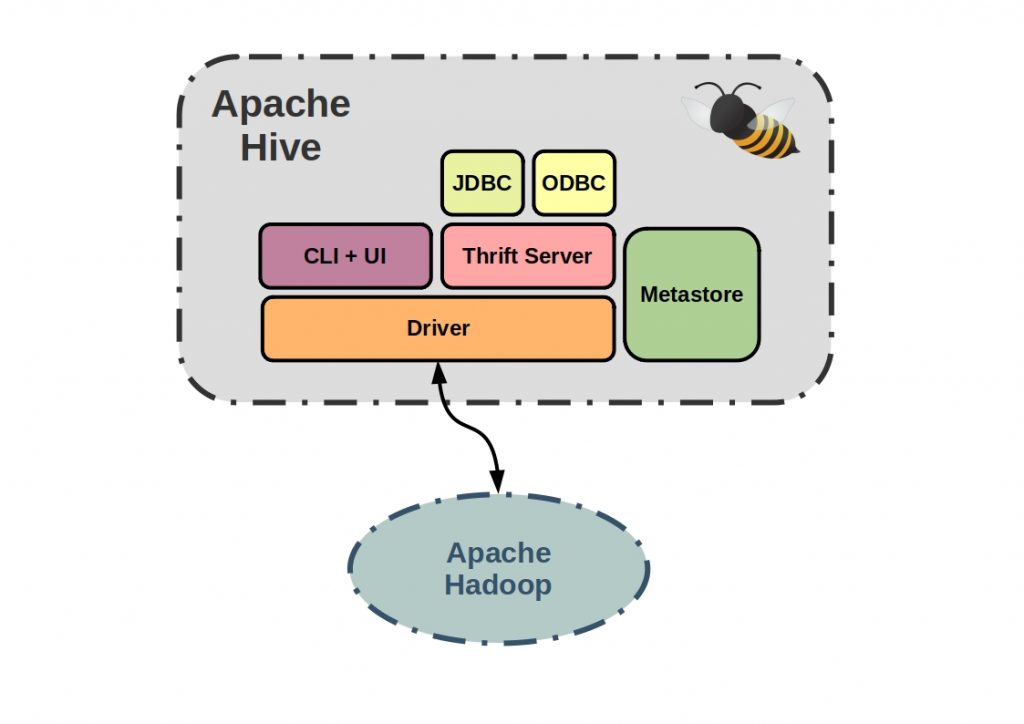

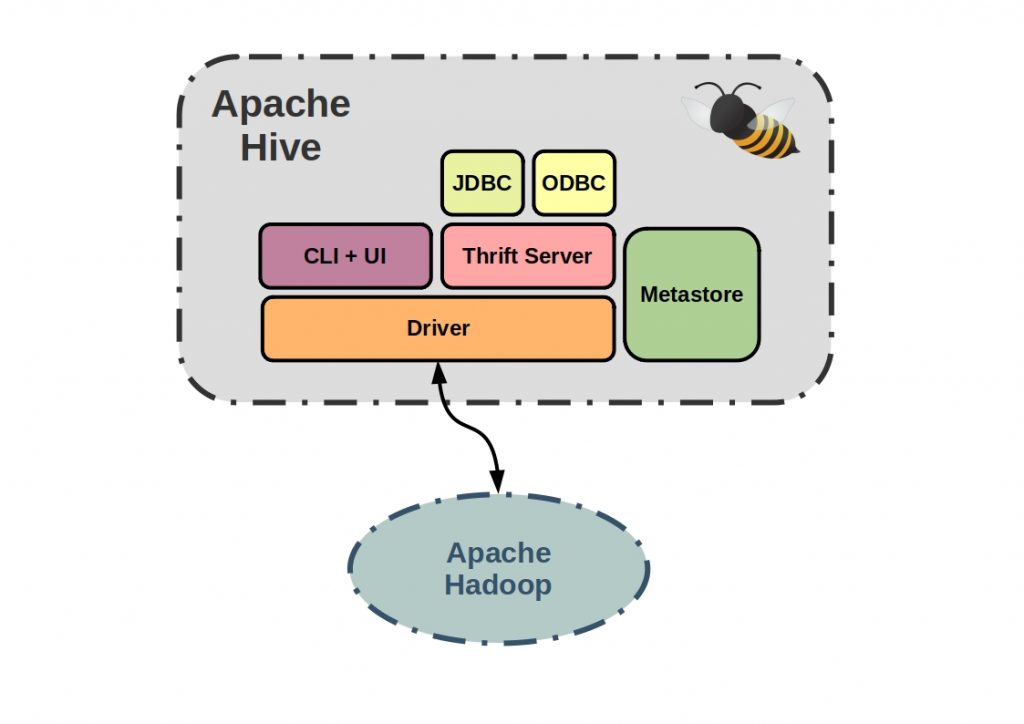

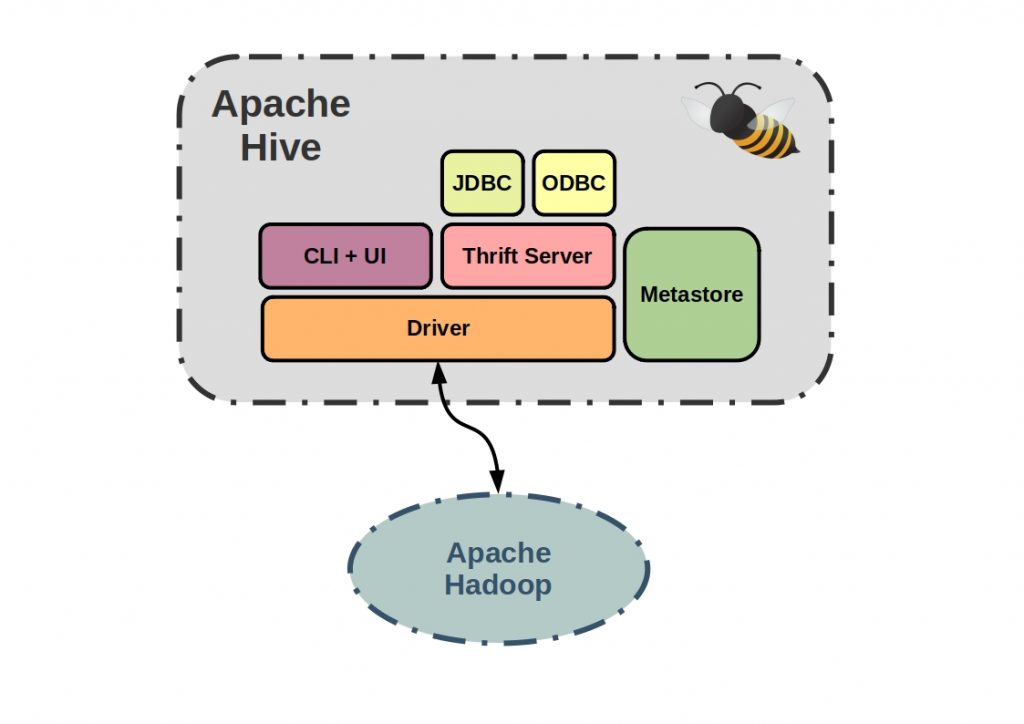

Apache Hive – Easy creation of data warehouse systems

Data warehouse systems provide centralized data storage, as well as sorting, pre-processing, translation and analysis of data.

Built on the Apache Hadoop Big Data framework, Apache Hive makes it easy to query and manage large data sets.

Hive automatically translates SQL-like queries (HiveQL) into MapReduce, Tez or Spark jobs. As a result, other Apache frameworks can be integrated modularly via Hive as execution engines, and the system can easily be adapted to your needs.
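To illustrate what such a translation means, the sketch below shows in plain Python how a simple aggregate query could be executed as map, shuffle and reduce phases. It is a conceptual model only: the table `production`, its columns and the helper functions are hypothetical, and Hive's actual query planner and optimizer are considerably more involved.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical rows of a table "production"; in Hive, the data would live in HDFS.
rows = [
    {"machine": "press-01", "parts": 120},
    {"machine": "press-02", "parts": 80},
    {"machine": "press-01", "parts": 100},
    {"machine": "lathe-07", "parts": 45},
]

# HiveQL: SELECT machine, SUM(parts) FROM production GROUP BY machine;


def map_phase(split):
    """Mapper: emit (group key, value) for every row of its input split."""
    return [(r["machine"], r["parts"]) for r in split]


def shuffle(mapped):
    """Shuffle: bring all values with the same key to the same reducer."""
    groups = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: apply the aggregate function (here SUM) per key."""
    return {key: sum(values) for key, values in sorted(groups.items())}


splits = [rows[:2], rows[2:]]  # two input splits -> two mappers
mapped = chain.from_iterable(map_phase(s) for s in splits)
print(reduce_phase(shuffle(mapped)))
# {'lathe-07': 45, 'press-01': 220, 'press-02': 80}
```

The user only writes the one-line query; generating and scheduling the corresponding phases on the chosen execution engine is Hive's job.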

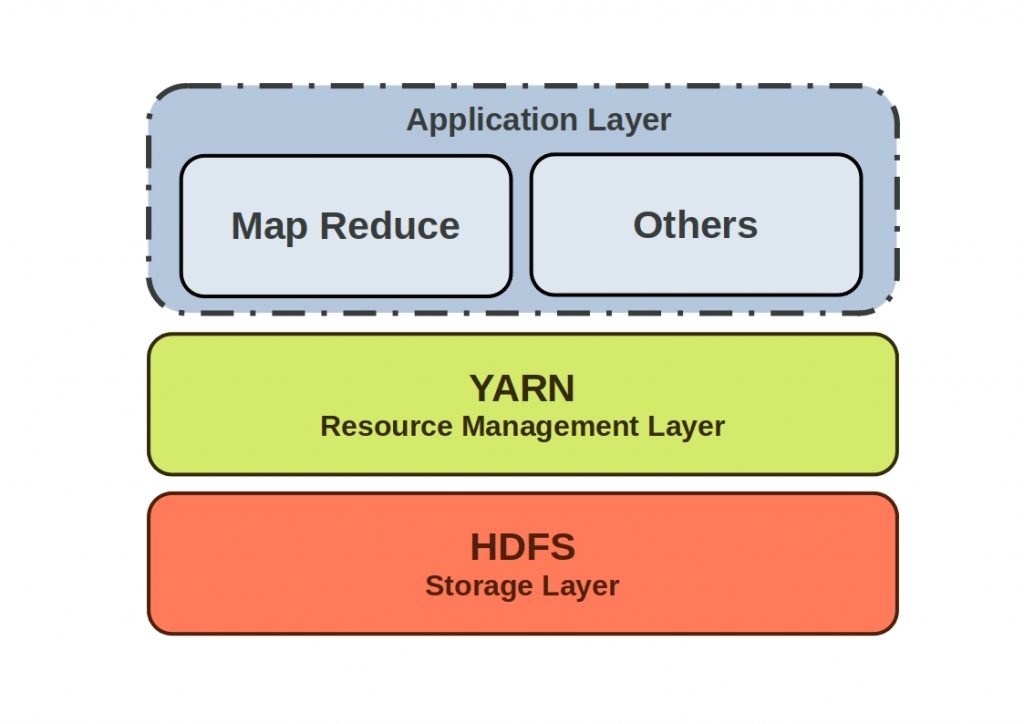

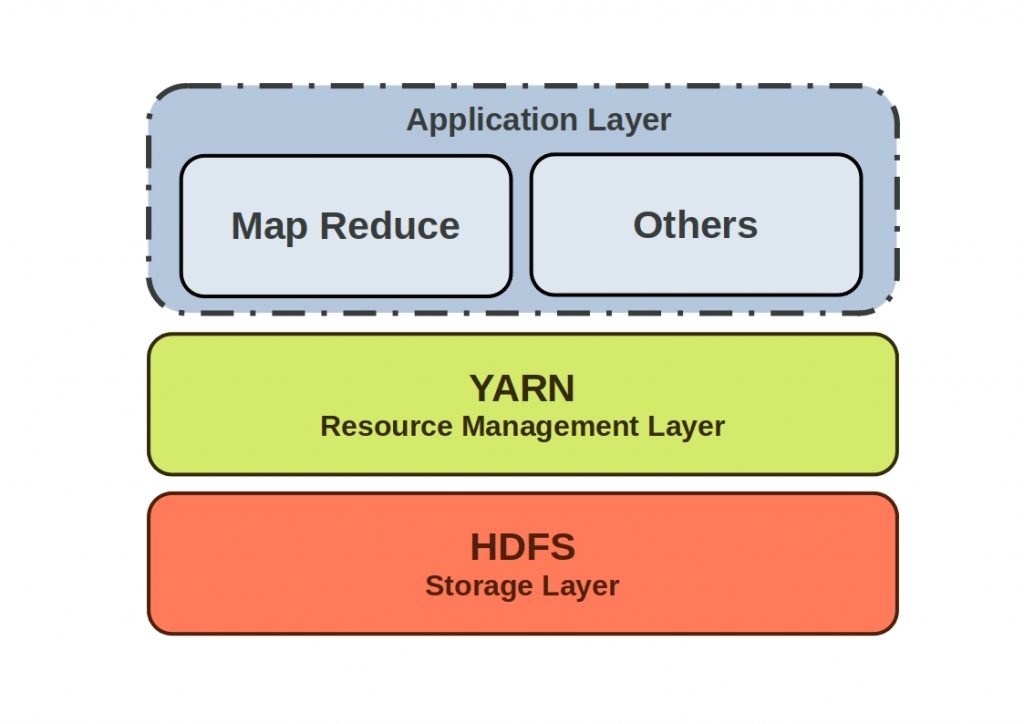

Apache Hadoop – Will there soon be no way around this Apache open source Big Data framework?

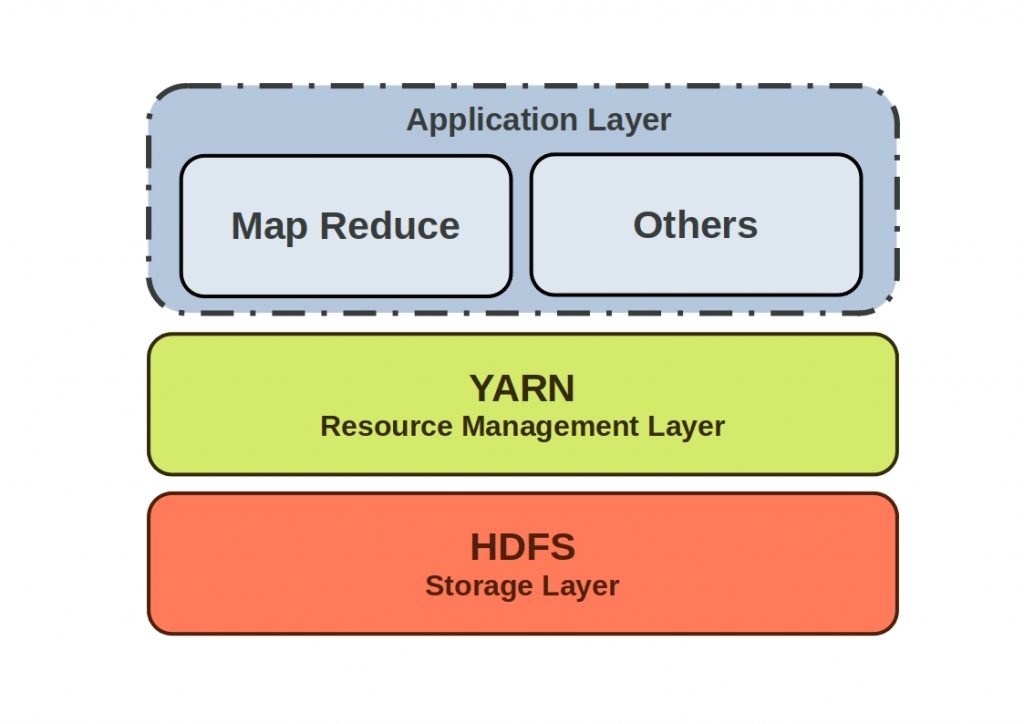

Apache Hadoop makes computationally intensive processing of large data sets possible by parallelizing it across computer clusters. However, Hadoop has clearly lost its status as the only Big Data solution: many newer technologies solve individual, smaller tasks better than the all-in-one Hadoop stack.

Today, this fine-grained landscape makes it possible to build Big Data solutions that are tailored to specific use cases. But Hadoop is not dead either. It still has its strengths and will remain the first choice for certain use cases in the foreseeable future.

With the Hadoop Ozone project, now continued as Apache Ozone, an alternative to the Hadoop Distributed File System (HDFS) has been developed.

Ozone still runs on a cluster, but it is an object store for Big Data applications. It is designed to scale much further than HDFS and, in particular, to handle large numbers of small files. This has long been a weakness of Hadoop, because the HDFS NameNode keeps the metadata of every file in memory. Object stores are typically used for data storage in the cloud; with Ozone, you can now operate one on your own infrastructure.

This object store can be accessed by established Big Data solutions such as Hive or Spark without modification.

What is the future significance of Apache Open Source projects?

Many Apache open source projects are regularly improved and extended with support for additional programming languages. As a result, many of them can already be used in your Python projects, for example via PySpark or PyFlink. Because Python is easy to learn, it makes automation pipelines and Big Data solutions more accessible. If you want to learn Python, we have compiled an overview of the individual frameworks and libraries here.

This makes it easier for you to get an overview of the individual functionalities and to get started in a more targeted way. The choice of open source solutions is now enormous and can be overwhelming.

Above all, Apache provides an ecosystem: standardized data pipelines can be assembled in a fully modular way. Especially for processing data streams, there is hardly any way around Apache frameworks today. Thanks to its high performance with continuous data volumes, Kafka has become the new center of many application architectures and will likely grow in importance over the next few years.

Accordingly, digital solutions for this area will also become more important. Innovation will give rise to many new projects, while established projects like Hadoop will continue to evolve. For young and small companies in particular, Apache open source projects are essential to their business.