ERP vs MES vs PLM vs ALM – These terms are being mentioned more and more often in connection with Industry 4.0. But what is behind these systems and what are the differences?

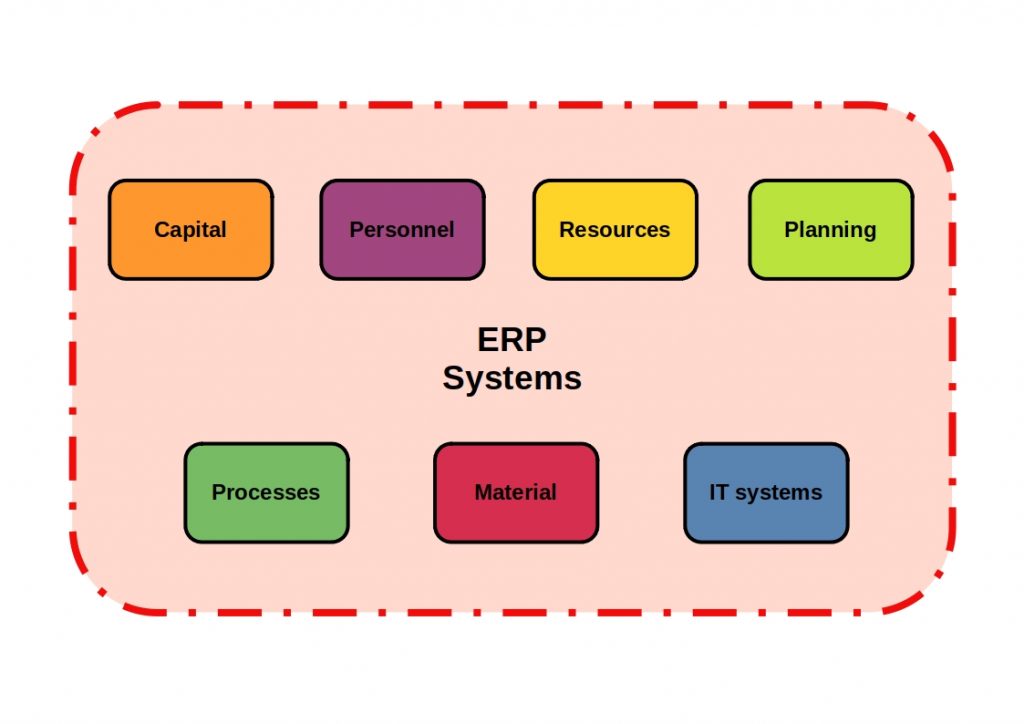

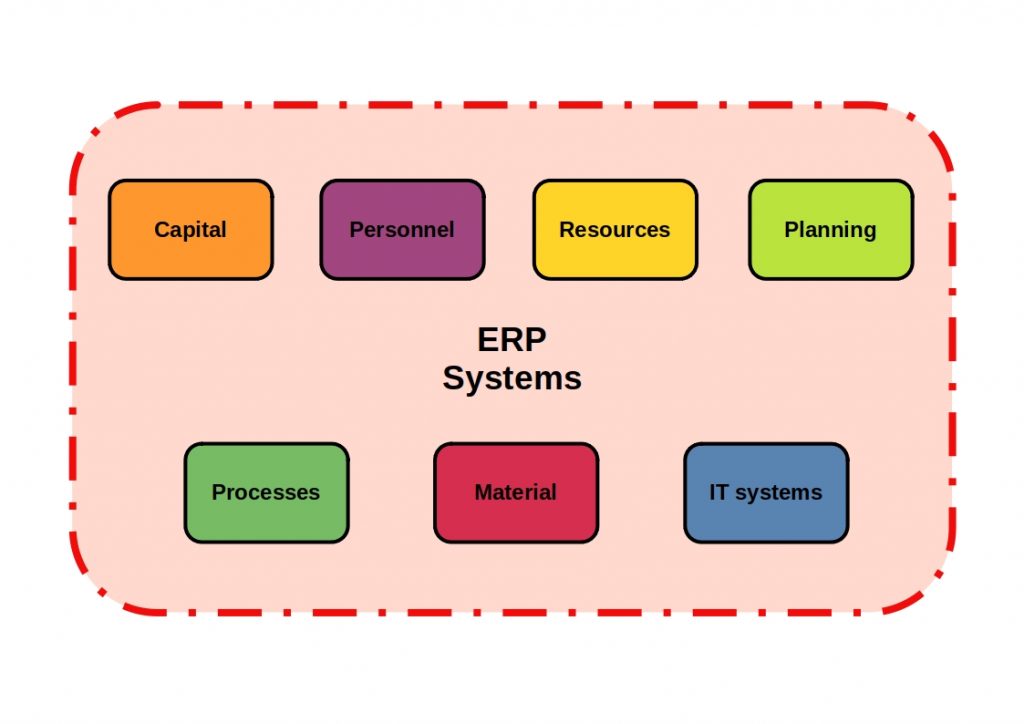

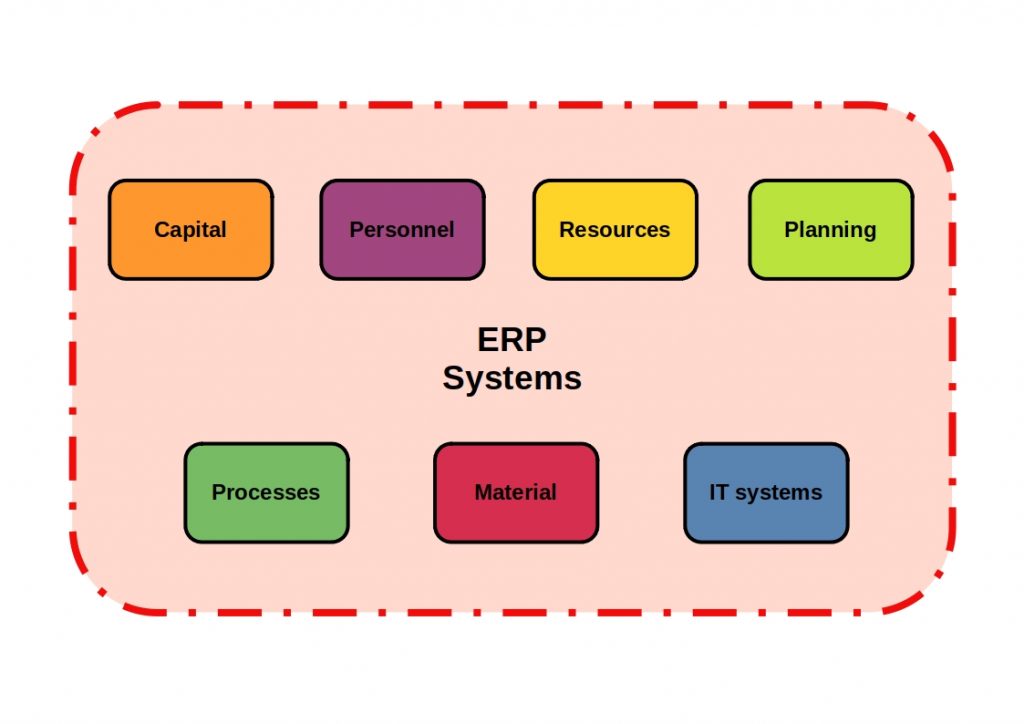

To stay competitive in today’s world, you need to increase the efficiency of your business processes. It is important that you optimally plan, control and manage your operational resources (capital, personnel…).

Your goal should be to achieve consistently high quality together with high productivity and short lead times.

Many of your business processes create ever larger amounts of data and increase in complexity. You need to reduce this complexity and increase your flexibility.

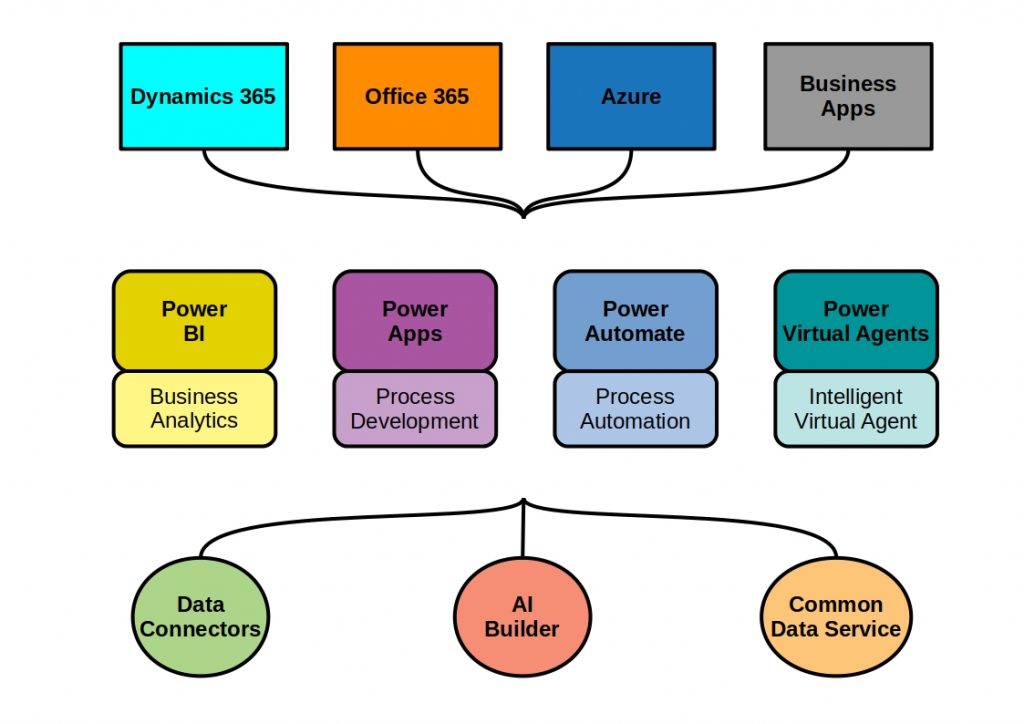

Many software solutions are available to your company for the optimal use of resources.

What is an ERP?

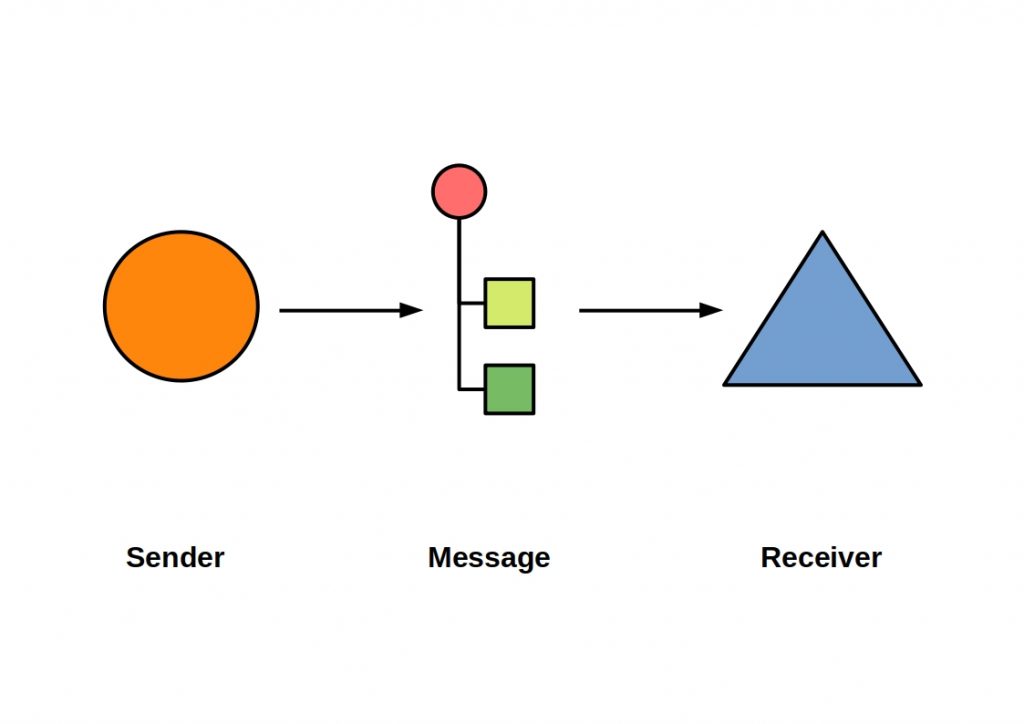

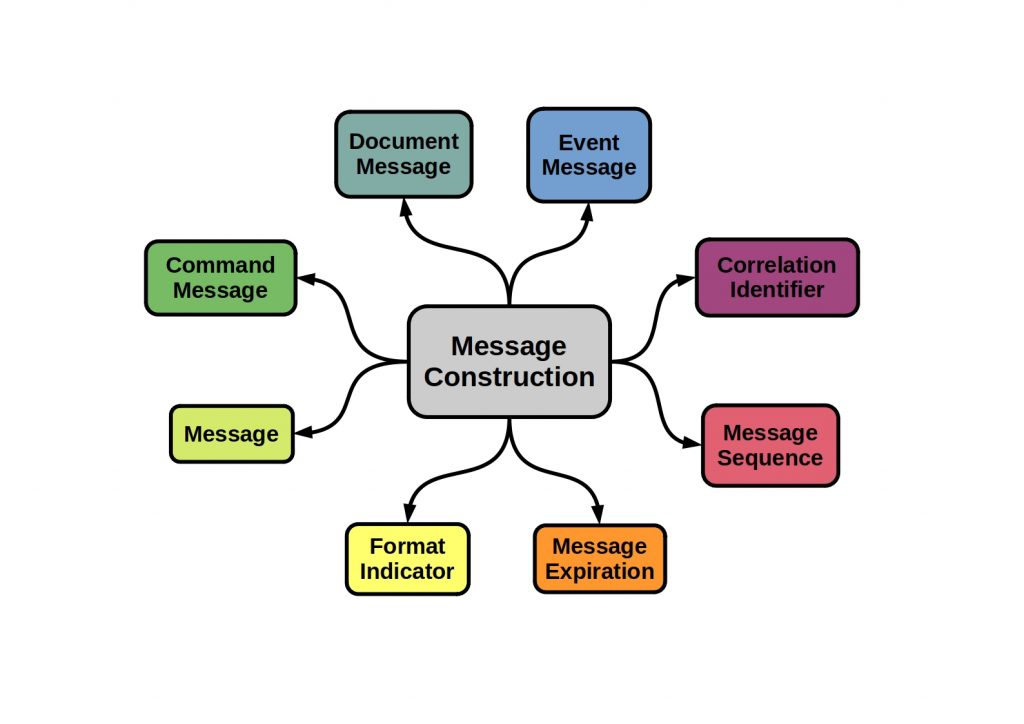

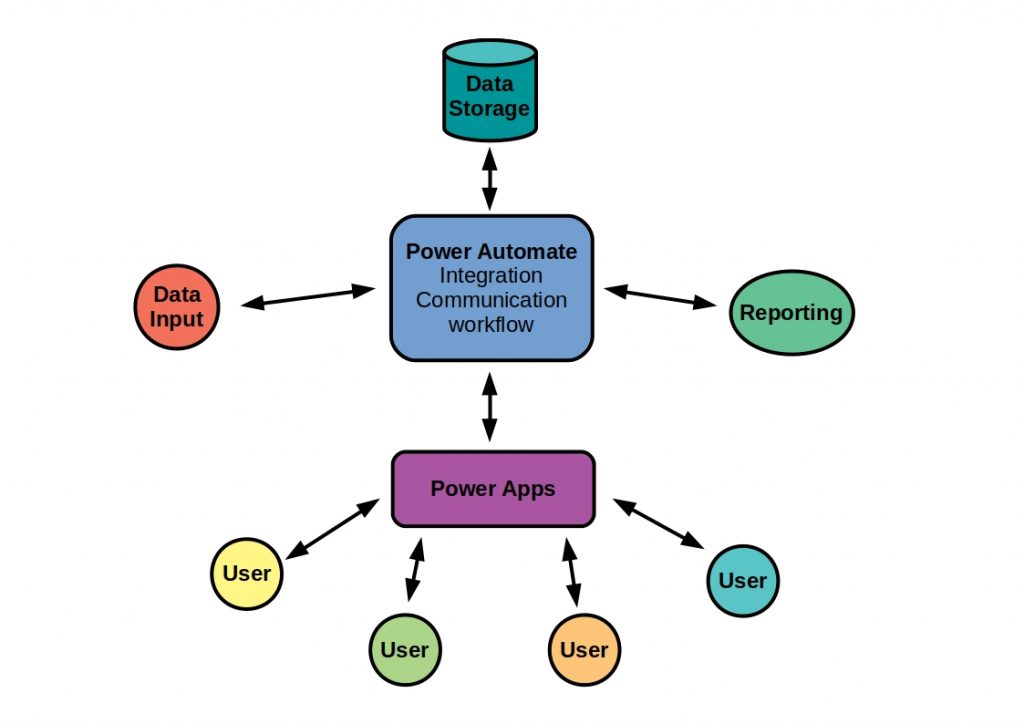

Basically, an ERP (Enterprise Resource Planning) system is an IT-supported system of software solutions that communicate with each other. Your data is stored centrally and should represent your company in its entirety through quickly available information.

The information from your business processes is documented and can be used to optimize them.

The trend is towards web-based applications.

This means that you access the system interface via your browser, so you can also use it beyond the boundaries of your company. Another advantage is that you don’t have to install anything locally, which makes you largely hardware-independent.

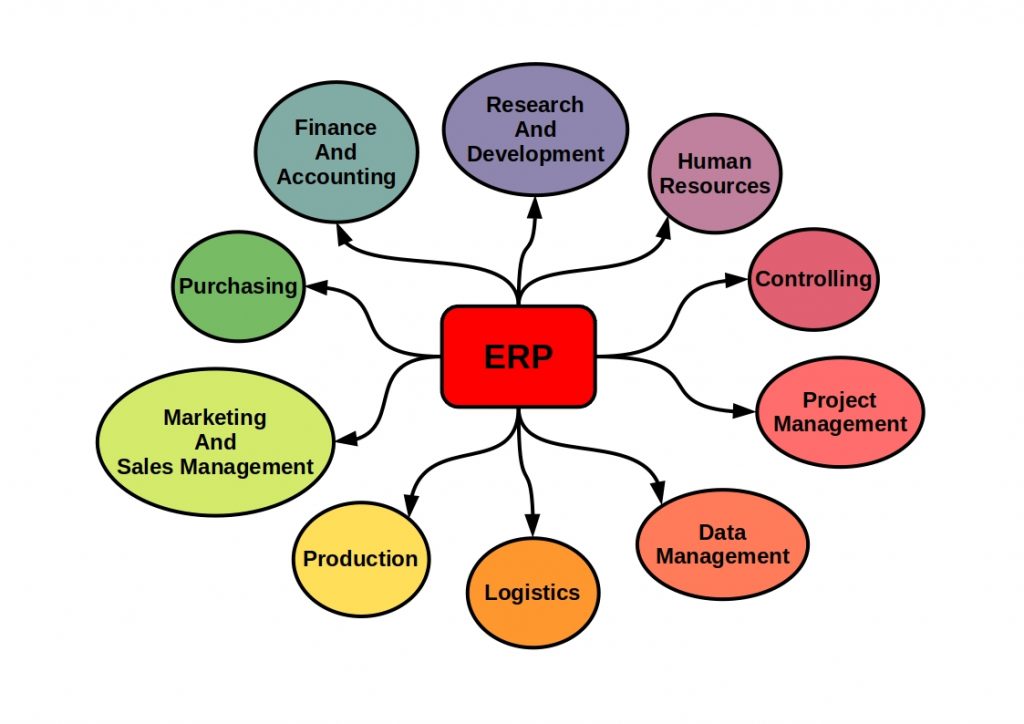

What are ERP Subsystems?

You can use ERP systems in all areas of your business. They provide you with complete solutions for all necessary subsystems.

Complex systems are divided into so-called application modules, which you can combine with each other as you wish. These fulfill various tasks for the provision and further processing of information. In this way, you can put together your ERP system according to your requirements and adapt it to the size of your company.
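The modular structure described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the module names (`Inventory`, `Accounting`), the `ErpSystem` class and all of its methods are hypothetical and do not correspond to any real ERP product.

```python
from typing import Protocol


class ApplicationModule(Protocol):
    """Hypothetical interface that every application module implements."""

    name: str

    def handle(self, record: dict) -> str: ...


class Inventory:
    name = "inventory"

    def handle(self, record: dict) -> str:
        return f"stock updated for {record['item']}"


class Accounting:
    name = "accounting"

    def handle(self, record: dict) -> str:
        return f"invoice booked for {record['item']}"


class ErpSystem:
    """Central system assembled from the modules a company needs."""

    def __init__(self) -> None:
        self._modules: dict[str, ApplicationModule] = {}

    def install(self, module: ApplicationModule) -> None:
        # Modules can be added at any time as the company grows.
        self._modules[module.name] = module

    def process(self, record: dict) -> list[str]:
        # Every installed module works on the same central record.
        return [module.handle(record) for module in self._modules.values()]


# A small company starts with a single module and adds more later.
erp = ErpSystem()
erp.install(Inventory())
first_result = erp.process({"item": "gearbox"})

erp.install(Accounting())
second_result = erp.process({"item": "gearbox"})
```

The point of the sketch is that the central system does not need to know which modules exist in advance; each company installs only what it requires.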

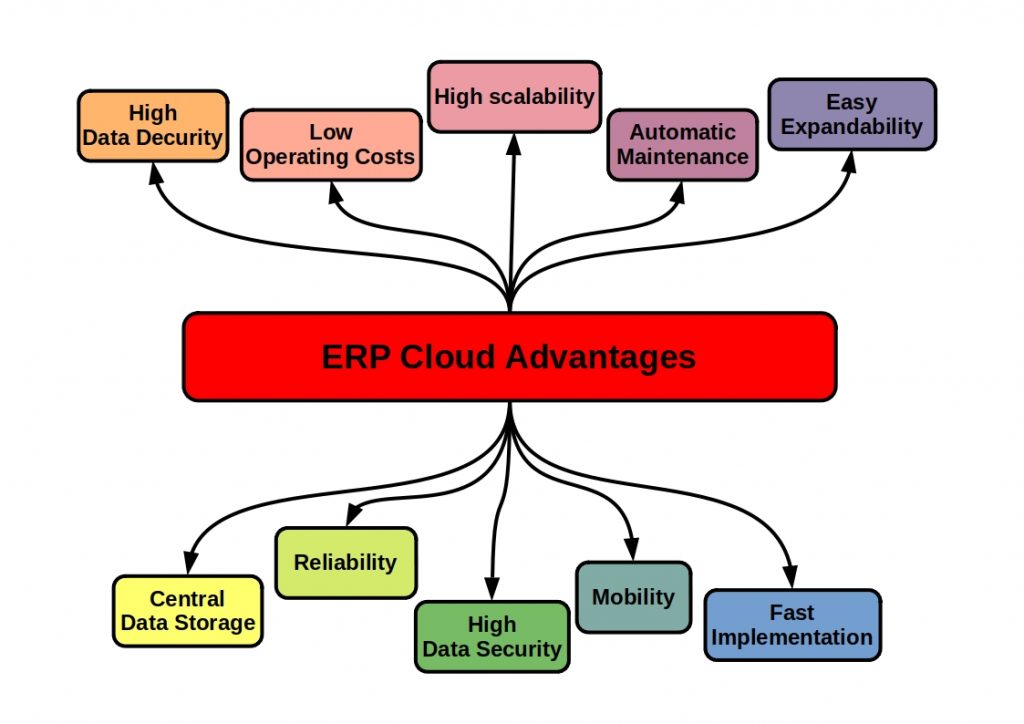

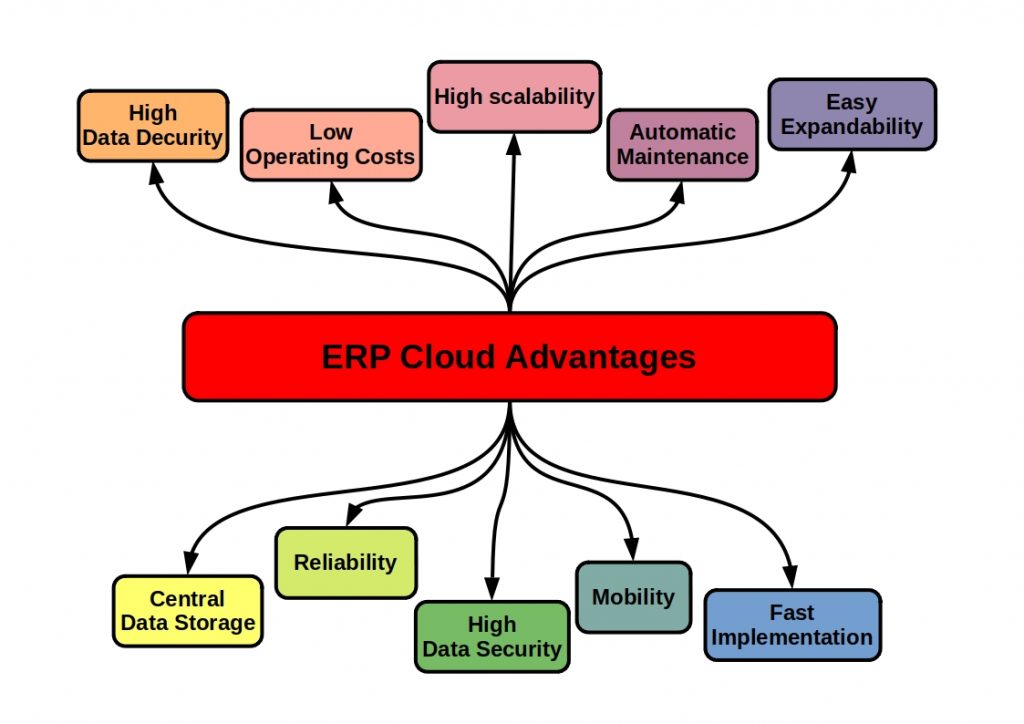

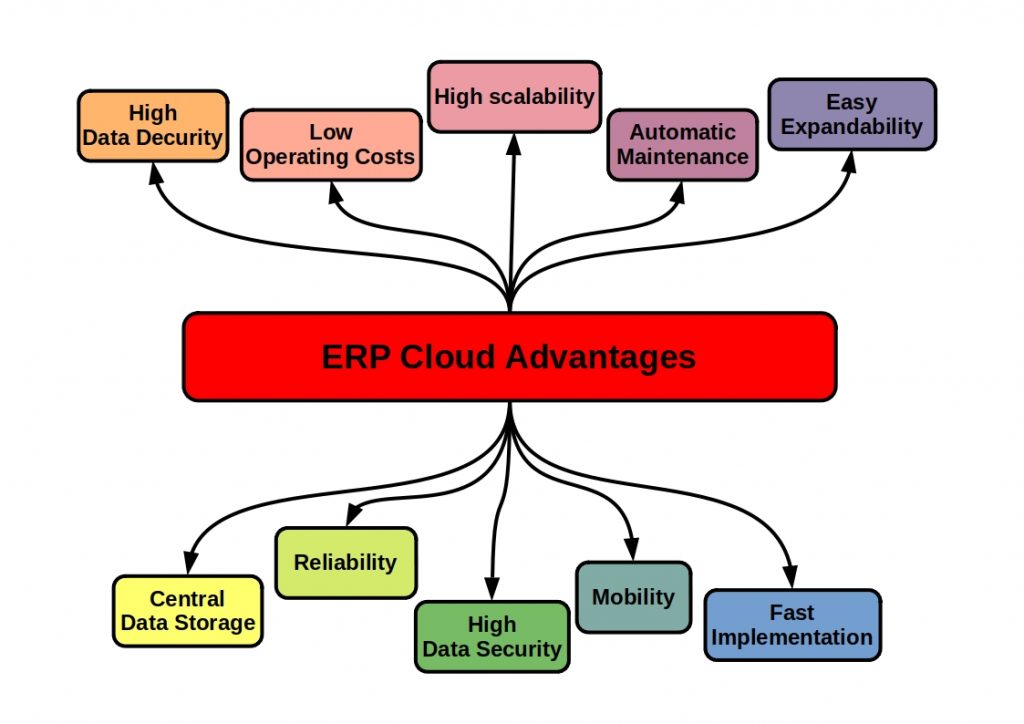

What are the Advantages of Cloud ERP?

ERPs can also be purchased as a complete Software-as-a-Service (SaaS) solution.

These are completely independent of industry and hardware. As a user, you can access a sophisticated ERP software package online and thus from anywhere, which gives you full flexibility in where you work. However, Cloud ERP solutions are still quite new and not yet fully mature, so you should weigh up carefully in advance whether you want to use a cloud application.

What is an MES?

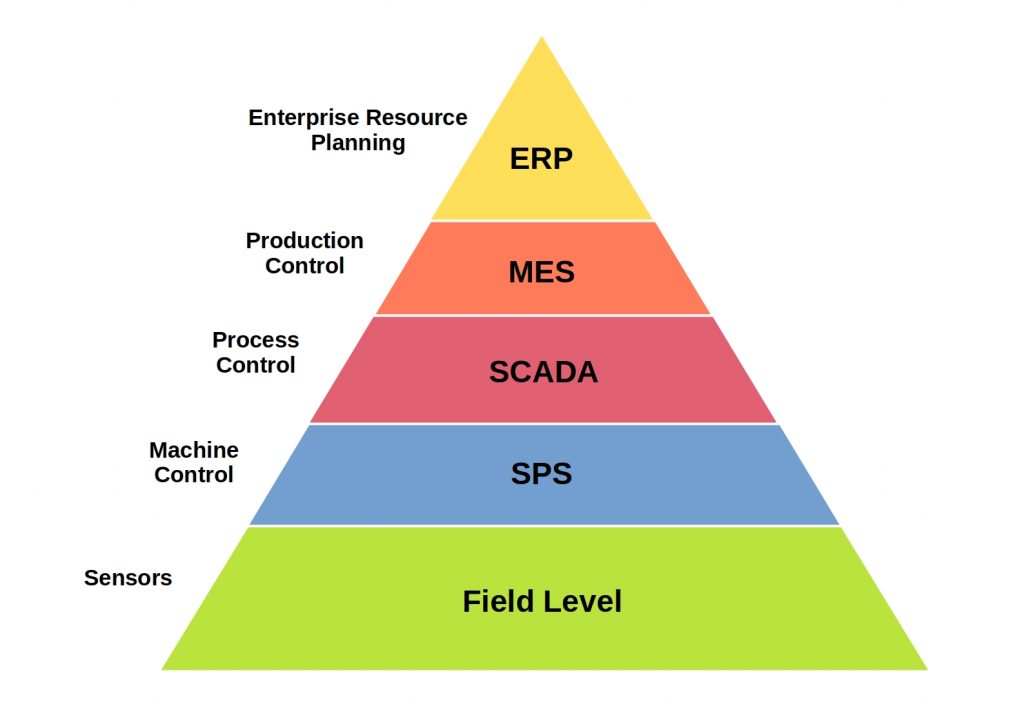

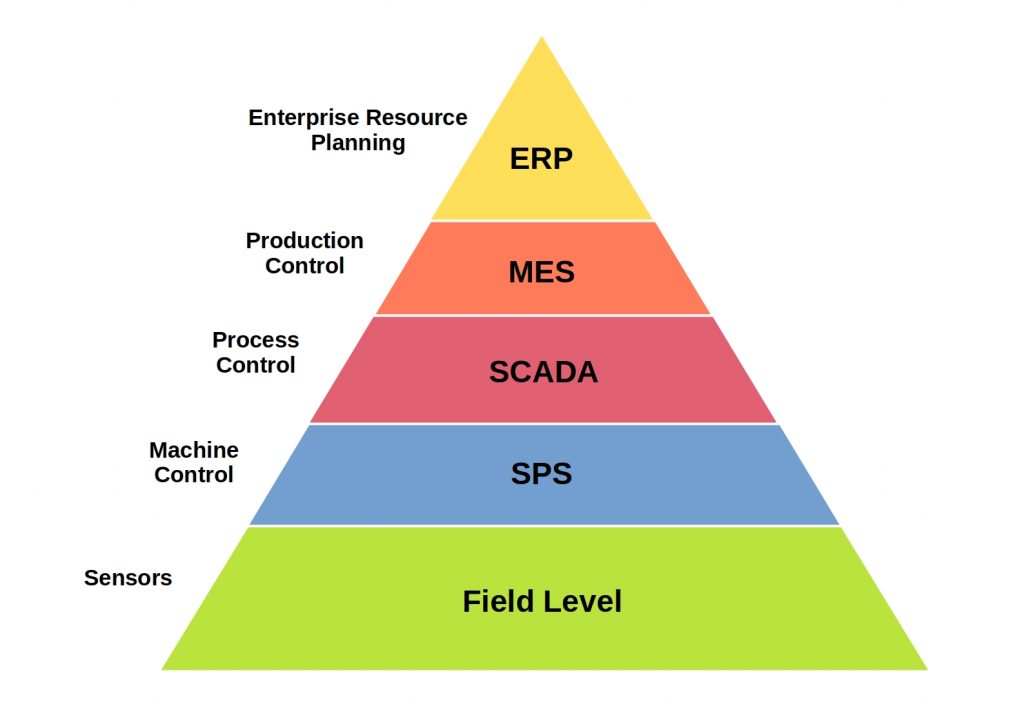

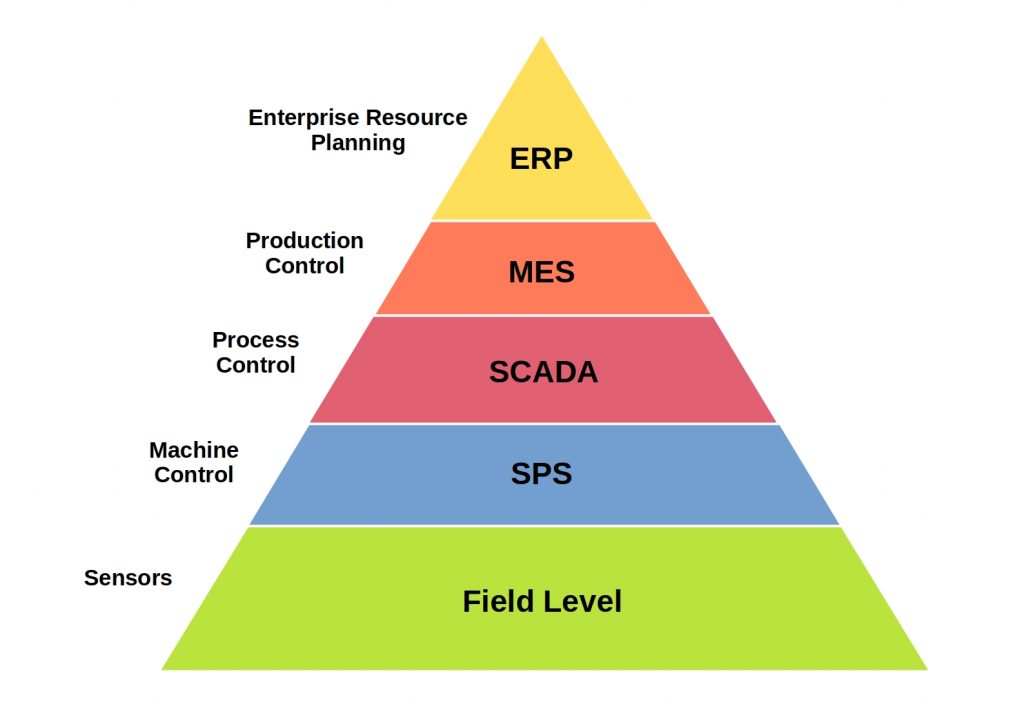

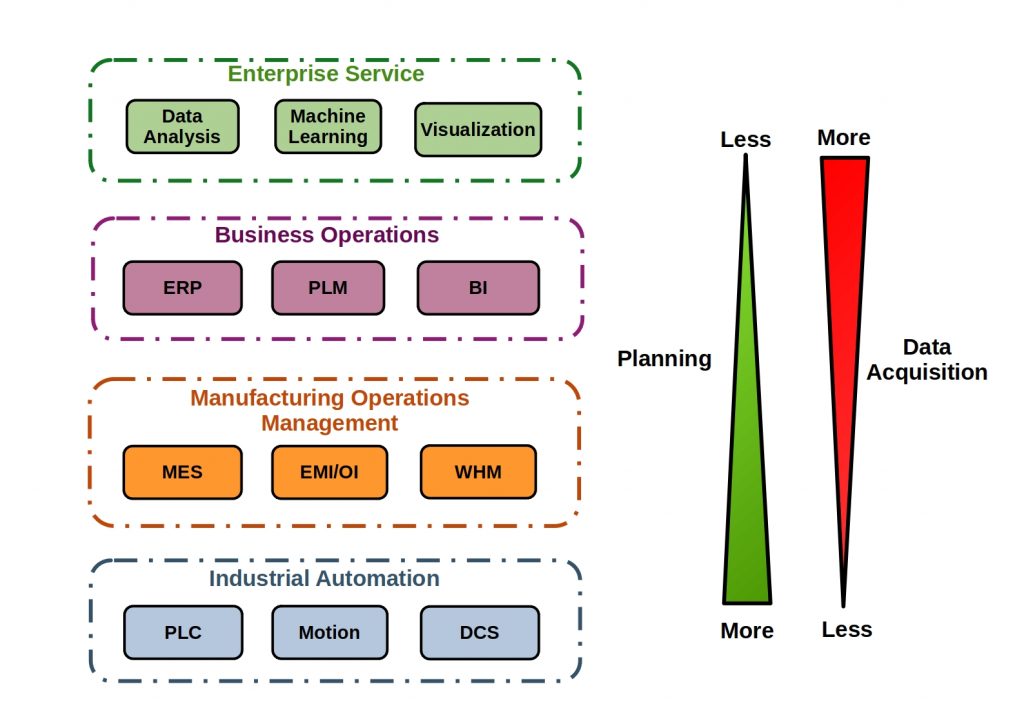

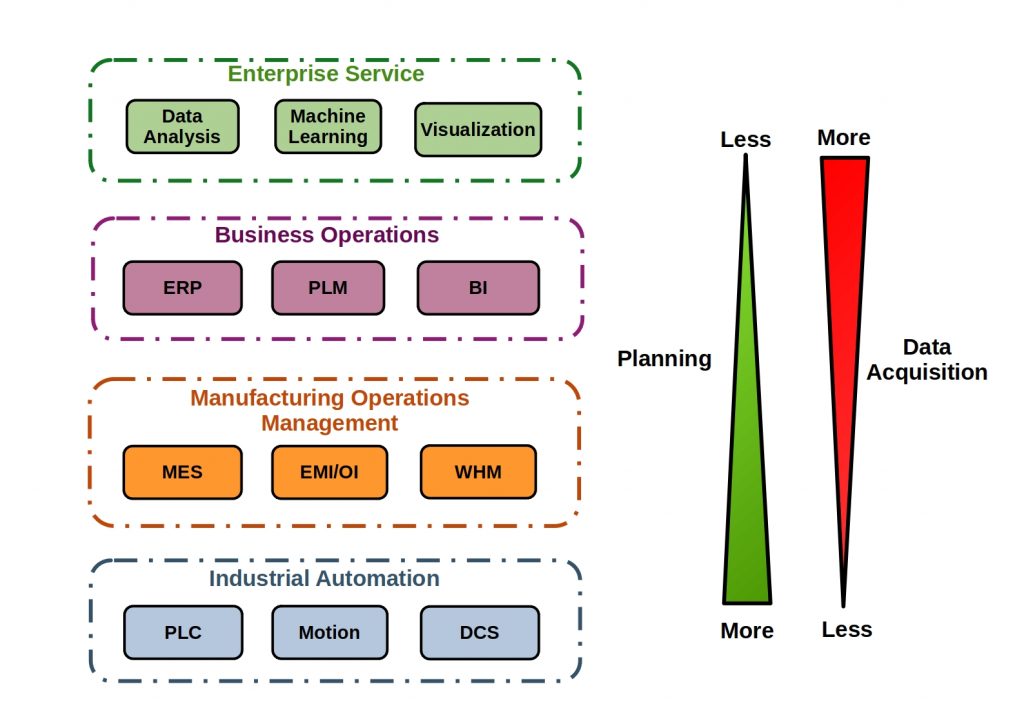

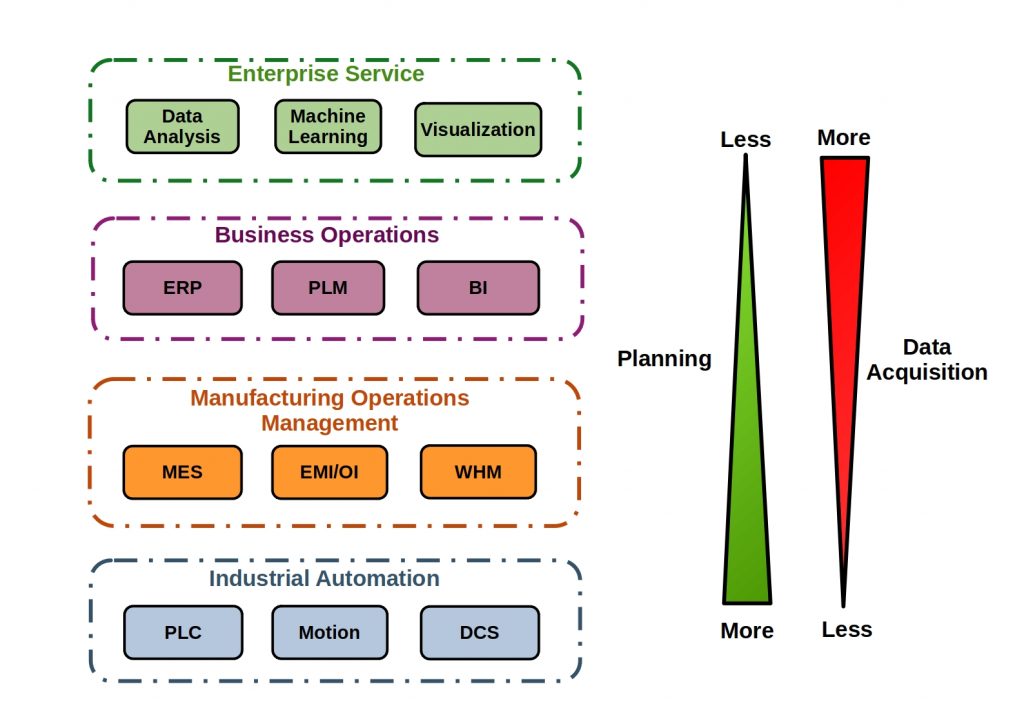

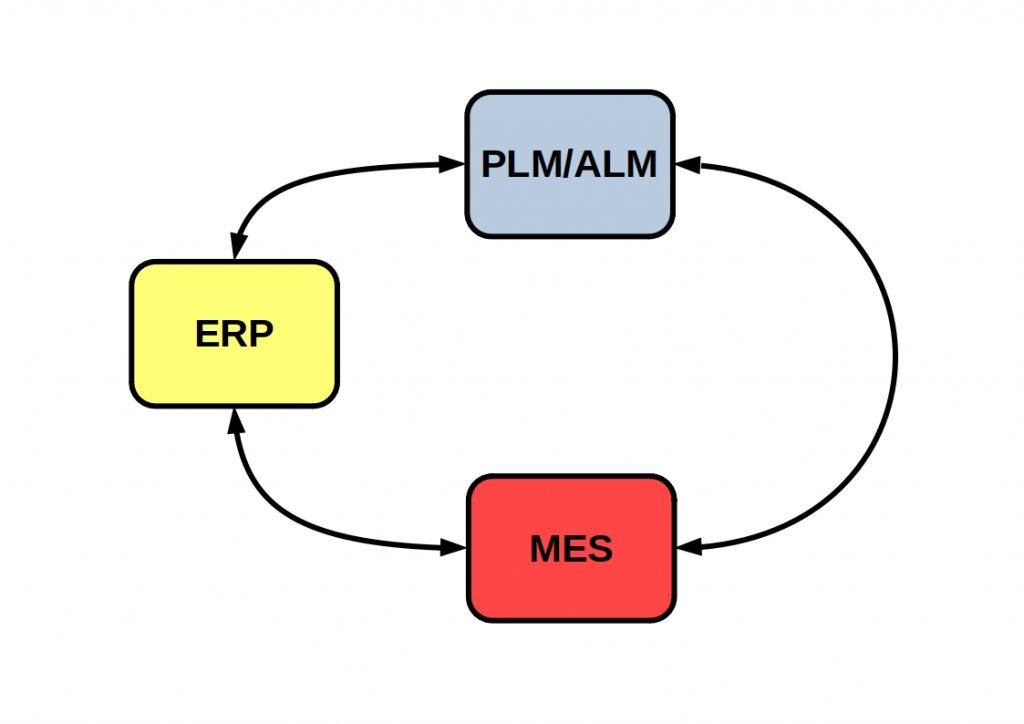

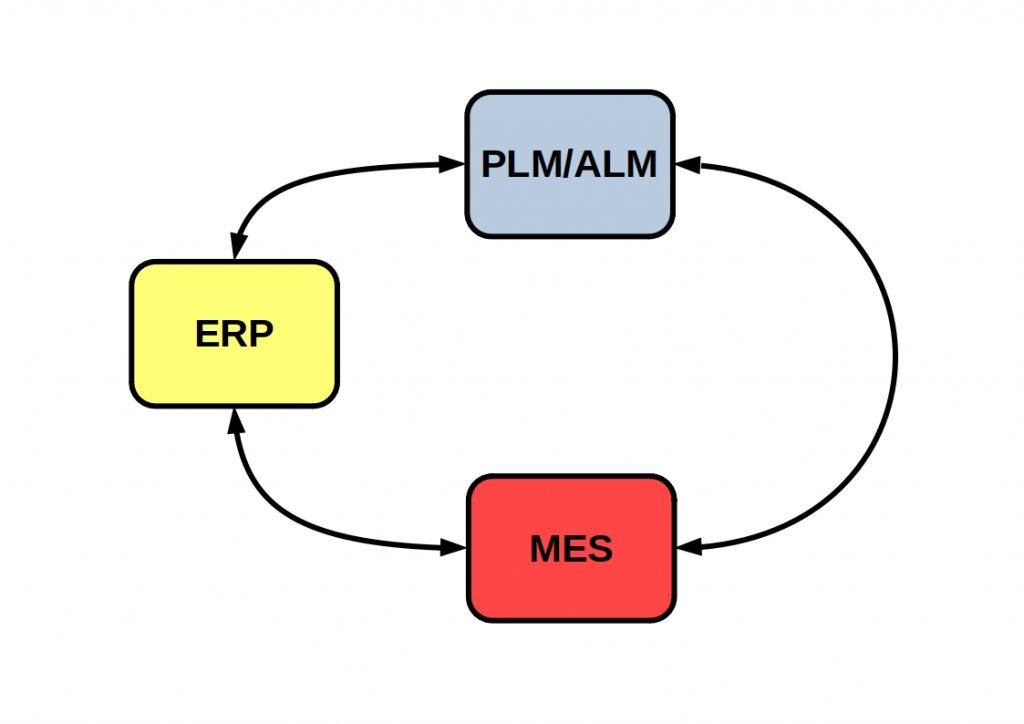

The MES (Manufacturing Execution System) is the operational, process-related part of a multi-layer overall system. It is responsible for real-time production management and control. You can use MES data to optimize manufacturing processes and detect errors during the production process.

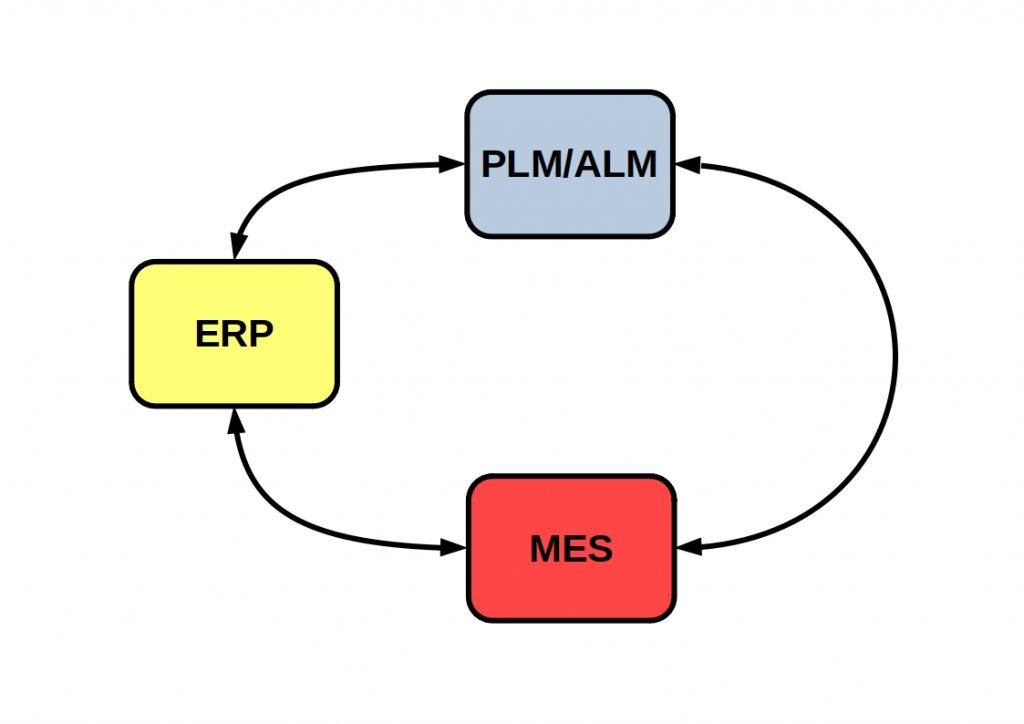

The MES is linked to the ERP system. The ERP system accesses your MES data to plan production and then feeds the resulting plan back to your production control system for implementation.

In Industry 4.0, these individual components are becoming ever more tightly integrated.

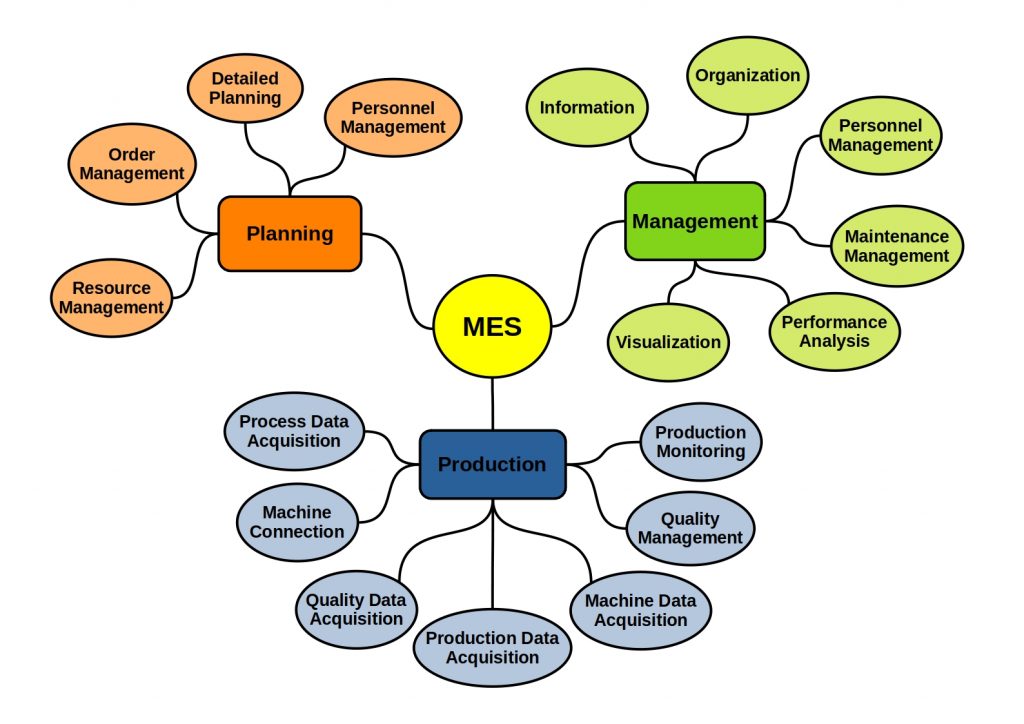

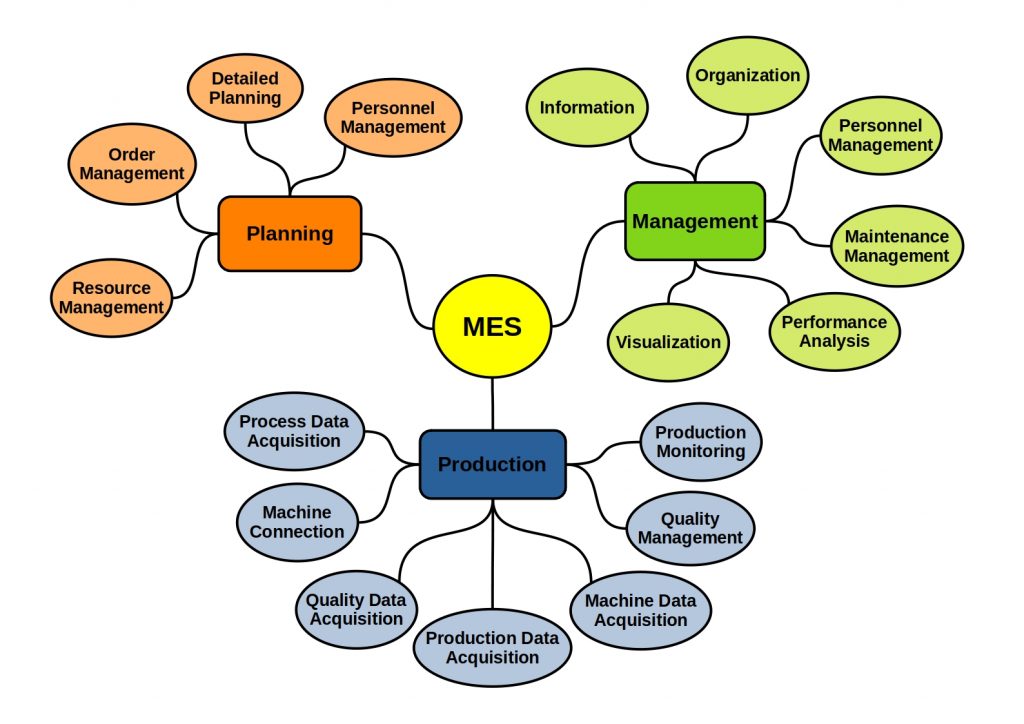

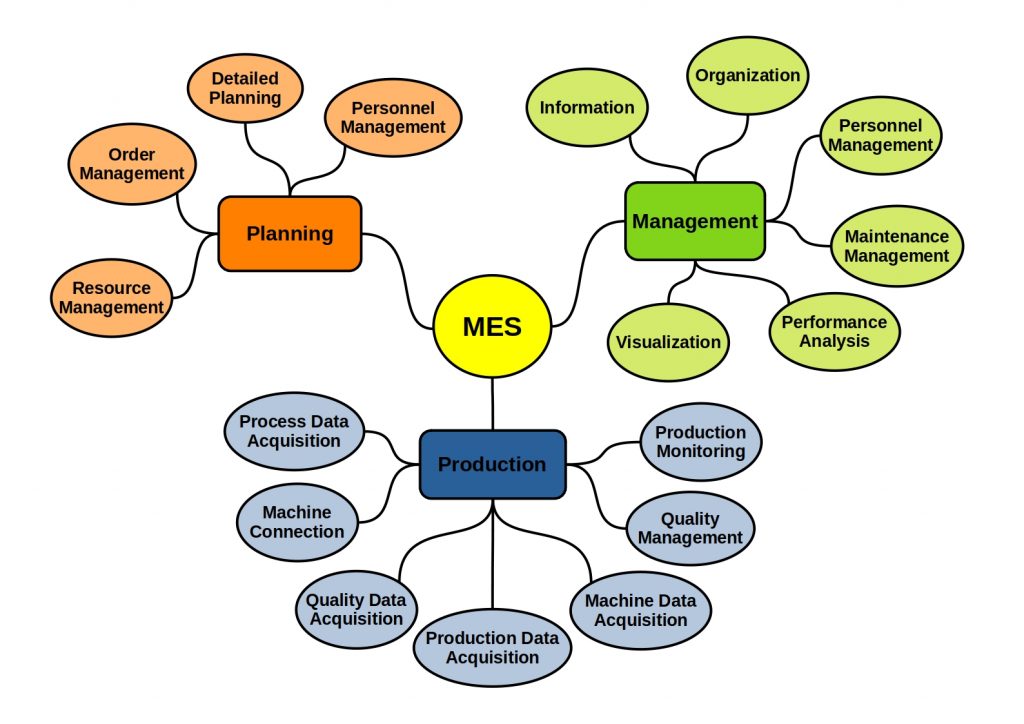

What does the MES include?

MES is usually a multi-layer overall system. It processes your production data into Key Performance Indicators (KPI) and enforces the fulfillment of an existing production plan.
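To make the step from production data to a KPI concrete, here is a minimal sketch. The article does not name a specific KPI, so Overall Equipment Effectiveness (OEE), a widely used manufacturing KPI defined as availability × performance × quality, is used as an assumed example. The `ShiftData` fields and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    """Hypothetical production data recorded for one shift."""

    planned_minutes: float      # planned production time
    downtime_minutes: float     # unplanned stops
    ideal_cycle_seconds: float  # ideal time to produce one unit
    total_units: int            # all units produced
    good_units: int             # units that passed quality checks


def oee(shift: ShiftData) -> float:
    """Return OEE = availability * performance * quality."""
    run_minutes = shift.planned_minutes - shift.downtime_minutes
    if shift.planned_minutes <= 0 or run_minutes <= 0 or shift.total_units <= 0:
        return 0.0  # nothing was produced, so no meaningful KPI
    availability = run_minutes / shift.planned_minutes
    performance = (shift.ideal_cycle_seconds * shift.total_units) / (run_minutes * 60)
    quality = shift.good_units / shift.total_units
    return availability * performance * quality


# Example shift: 480 planned minutes, 60 minutes of downtime,
# 756 units produced at an ideal cycle of 30 s, 720 of them good.
shift = ShiftData(480, 60, 30, 756, 720)
shift_oee = oee(shift)
print(f"OEE: {shift_oee:.1%}")
```

A real MES would compute such figures continuously from machine signals; the sketch only shows the arithmetic behind one KPI.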


What is a PLM?

In addition to MES and ERP, the Product Lifecycle Management (PLM) system plays a fundamental role in the digitalization of your company.

For your company to remain internationally competitive in today’s world, you need to optimize your business models so that you can act proactively.

As a manufacturing company, you need to be able to analyze large amounts of data quickly. This way you can recognize deviations from the plan early on and make the right decisions.
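As a rough illustration of recognizing deviations from the plan, the following sketch compares planned and actual quantities per production order and flags every order outside a tolerance. The function name, the 5 % default tolerance and the sample order IDs are assumptions made for this example, not part of any specific product.

```python
def find_deviations(planned: dict, actual: dict, tolerance: float = 0.05) -> dict:
    """Return the relative deviation for every order outside the tolerance.

    planned and actual map an order ID to a quantity. Orders missing
    from actual are treated as not yet produced (quantity 0).
    """
    deviations = {}
    for order_id, planned_qty in planned.items():
        if planned_qty == 0:
            continue  # avoid division by zero for empty plan entries
        actual_qty = actual.get(order_id, 0)
        relative = (actual_qty - planned_qty) / planned_qty
        if abs(relative) > tolerance:
            deviations[order_id] = relative
    return deviations


# Hypothetical plan and reported quantities.
planned = {"PO-1": 100, "PO-2": 200, "PO-3": 50}
actual = {"PO-1": 98, "PO-2": 170}

flagged = find_deviations(planned, actual)
for order_id, relative in flagged.items():
    print(f"{order_id}: {relative:+.0%} versus plan")
```

Here `PO-1` stays within tolerance, while `PO-2` (behind plan) and `PO-3` (not started) are flagged for a decision.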

Many software solutions help you in all business areas and even exchange data with each other. In this way, you can create information chains within a company and act more quickly.

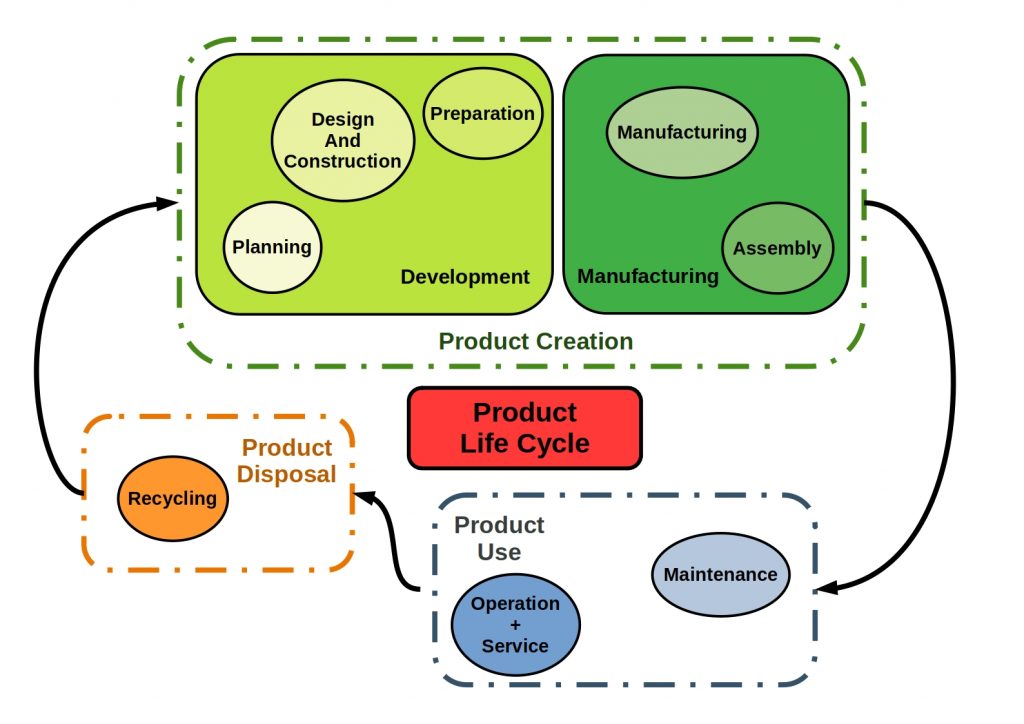

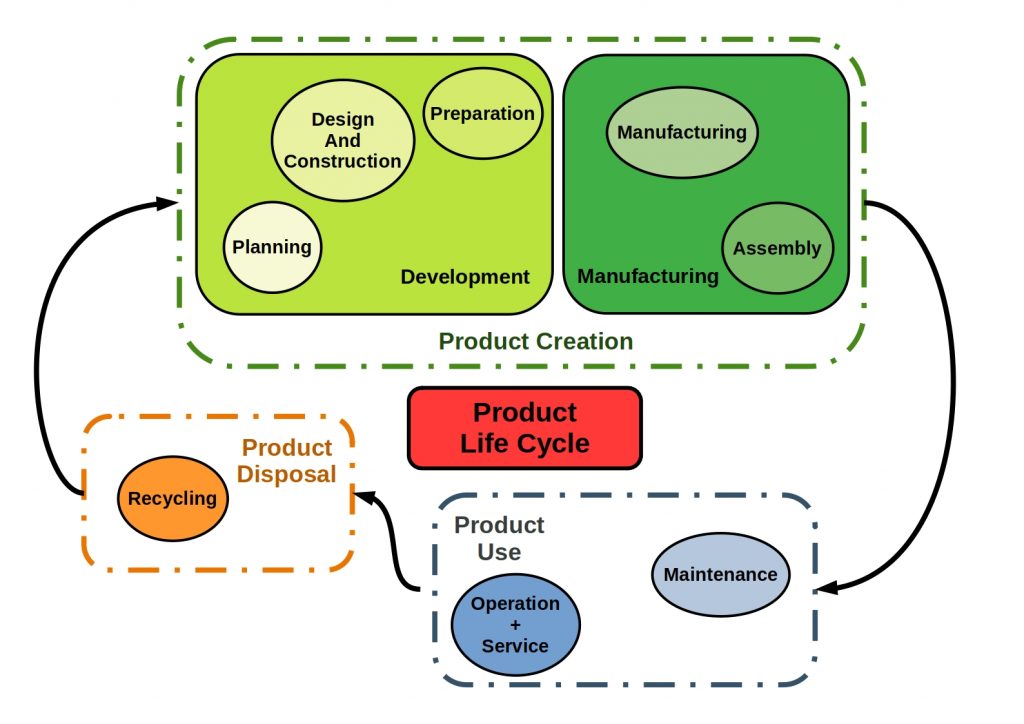

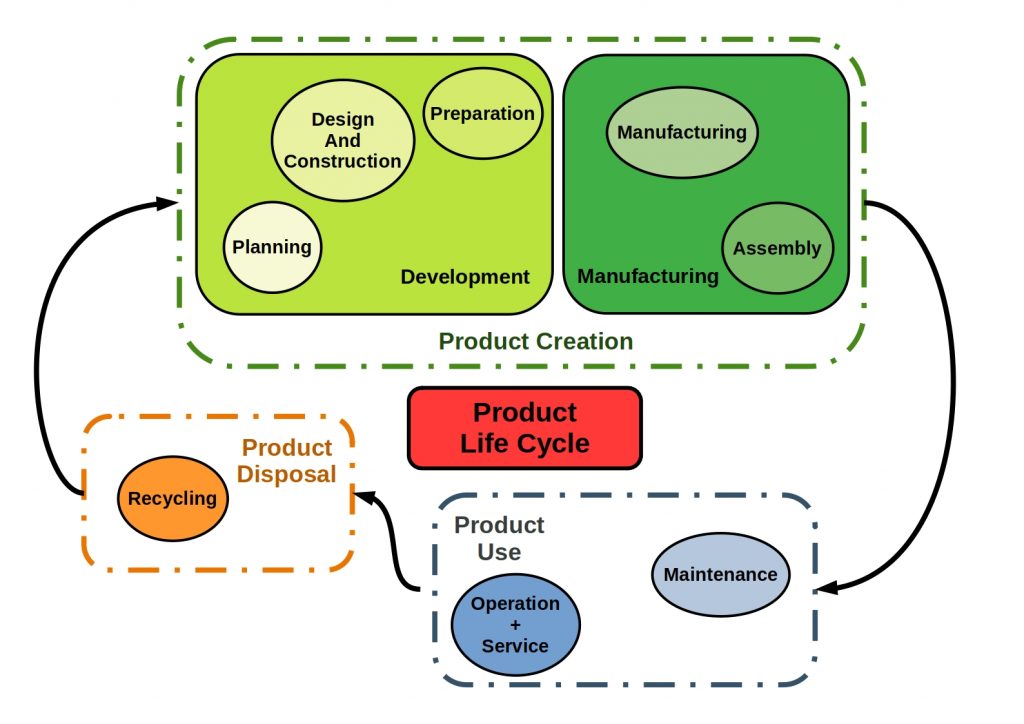

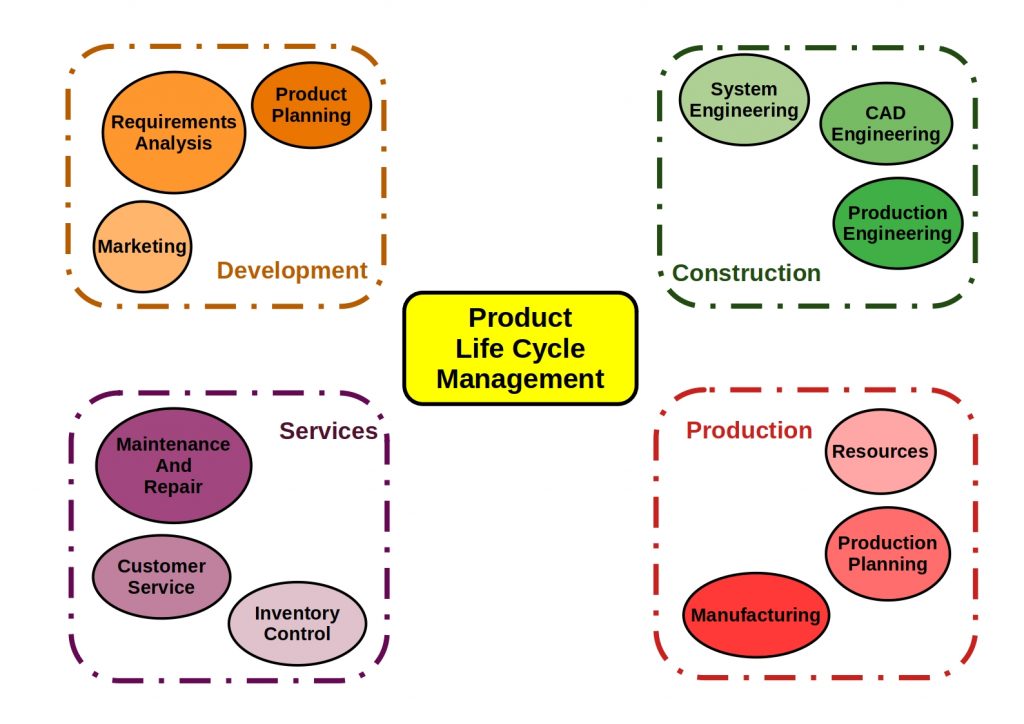

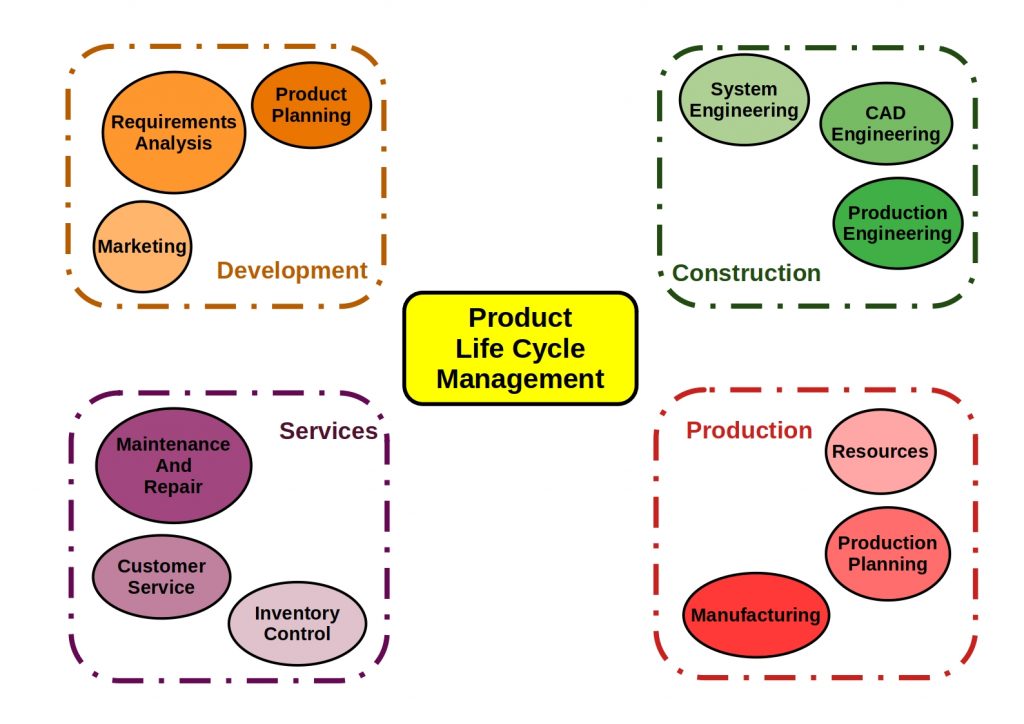

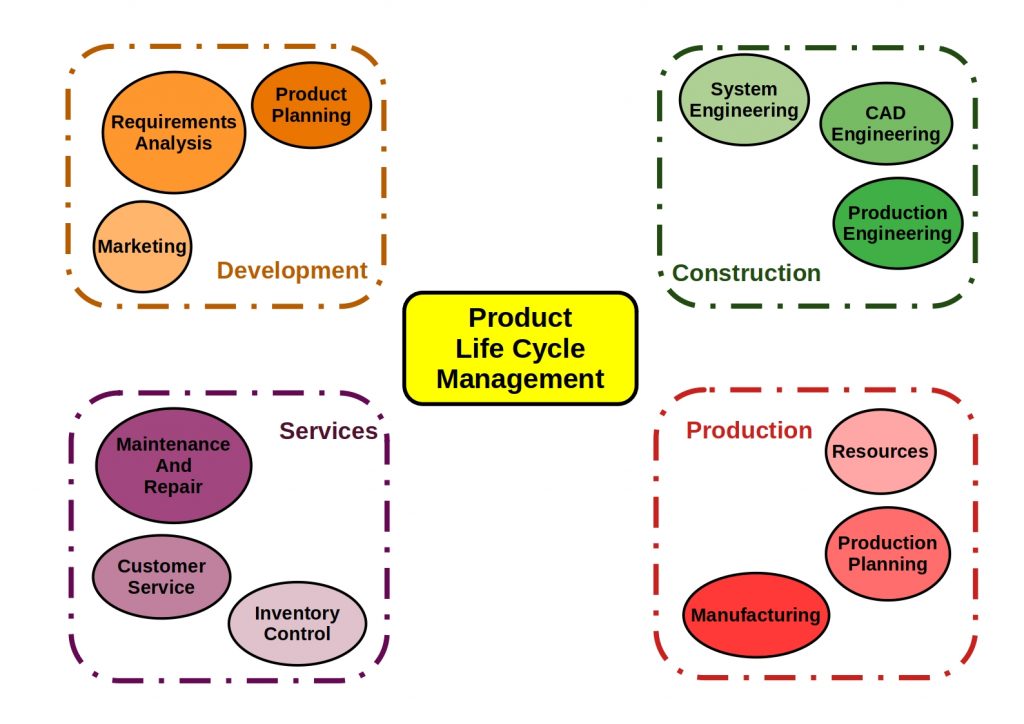

PLM is a management approach for seamlessly integrating all information that accumulates during the life cycle of a product.

The core components of PLM are the data and information related to the product lifecycle.

A large amount of product-related and time-dependent data is generated along the product life cycle. The PLM enterprise concept is based on coordinated methods, processes and organizational structures and usually makes use of IT systems. PLM tools link design, implementation and production and provide feedback from manufacturing.

The goal of a PLM system is the central management of information and corresponding user groups. One advantage here is that you can control the process of editing and distribution throughout the company.

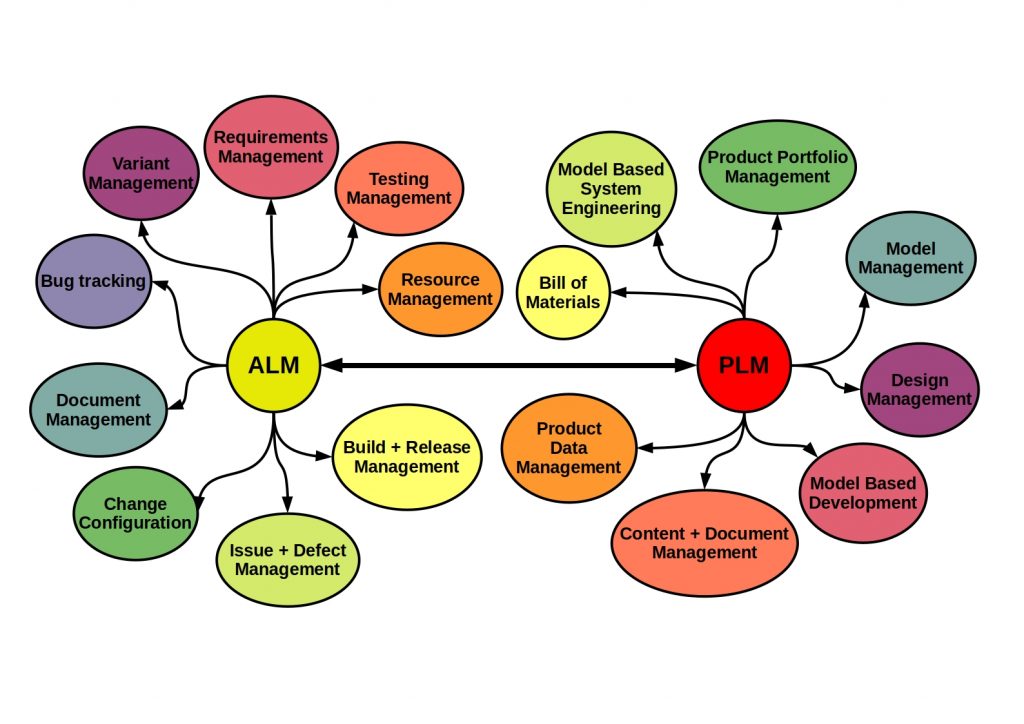

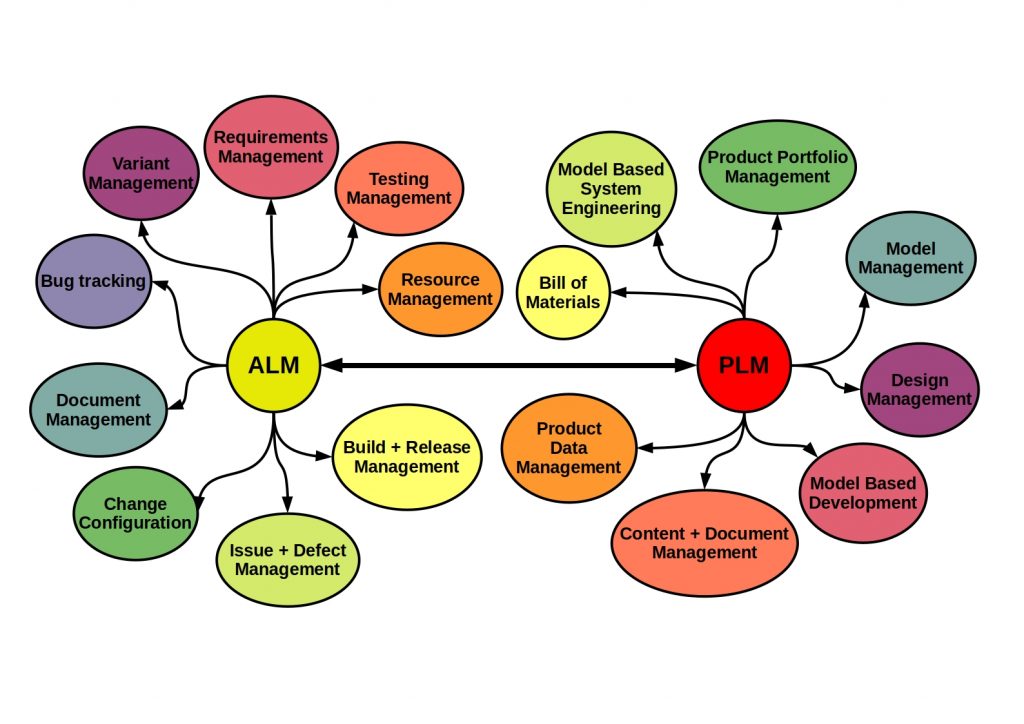

Application Lifecycle Management (ALM) vs PLM System

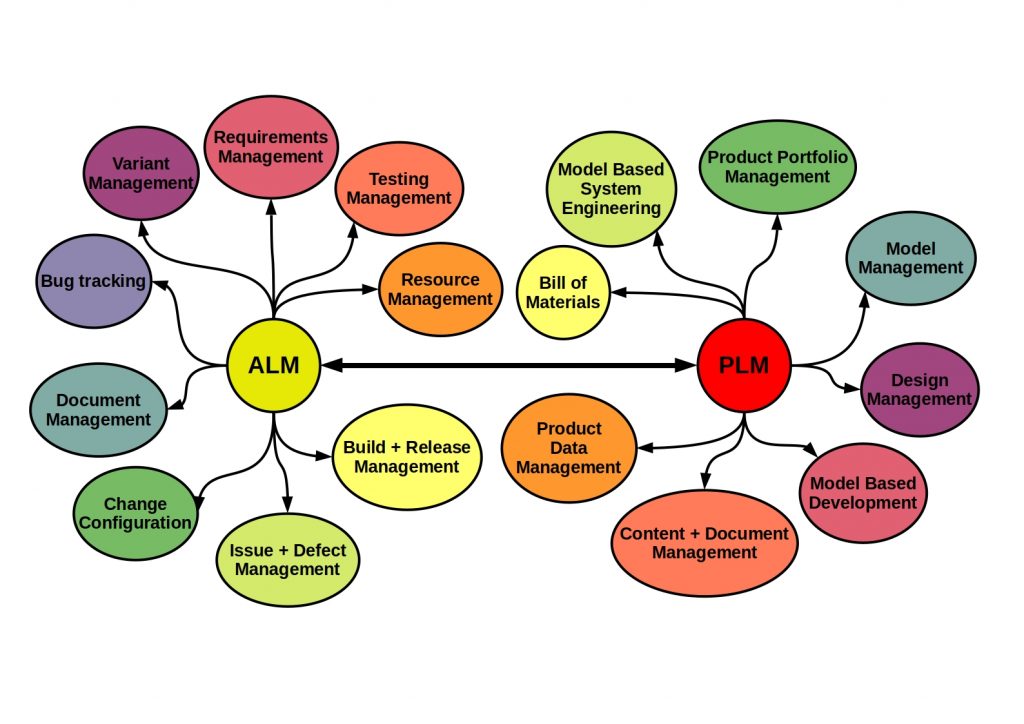

More and more products and systems now contain a software component. However, since hardware and software have historically been developed and managed differently, you must also differentiate between their management systems.

With PLM you are looking at a physical product, with ALM you are looking at a software product. There are nevertheless similarities between the two systems: both track a product over its entire lifecycle. Since both product types are increasingly merging today, you can also link both systems on an IT basis at the overall product level.

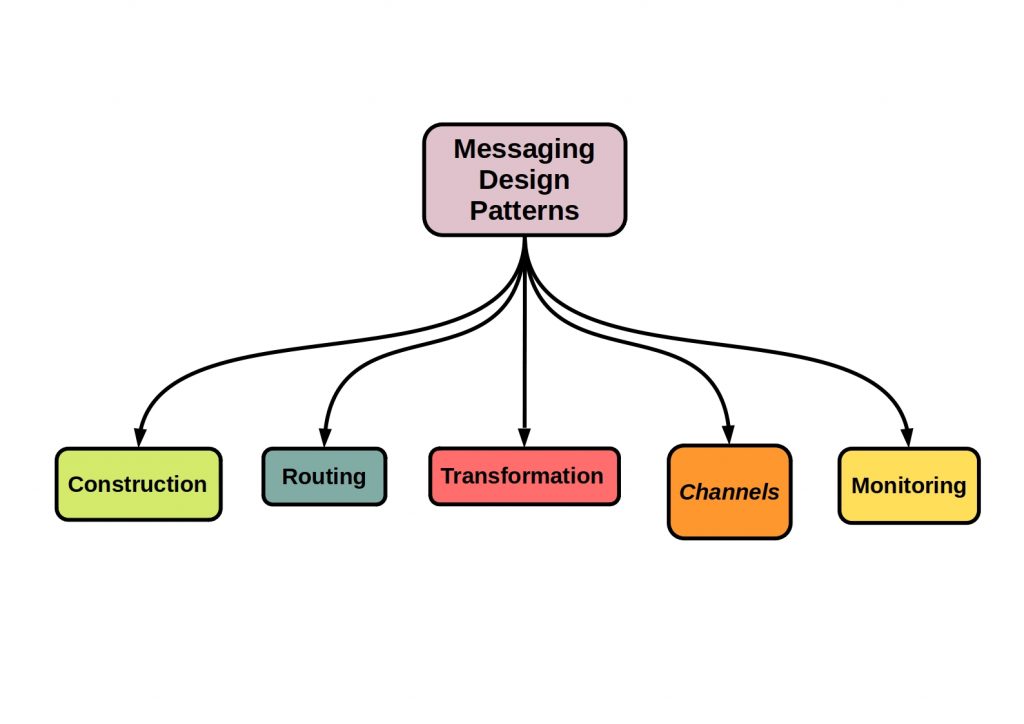

ERP vs MES vs PLM vs ALM – What does the future hold?

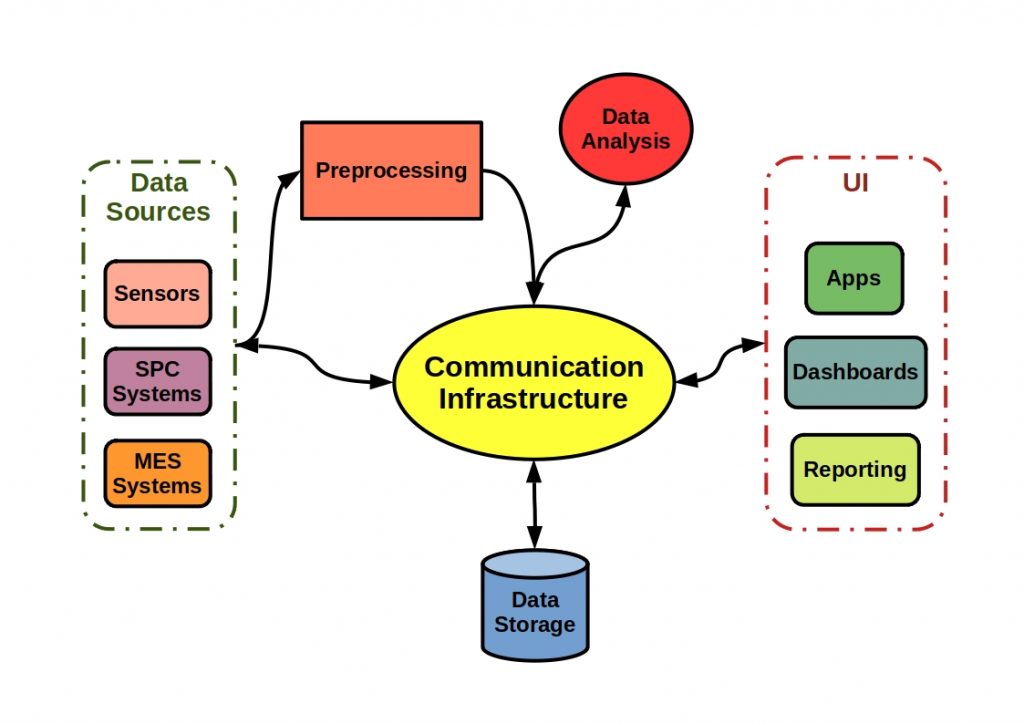

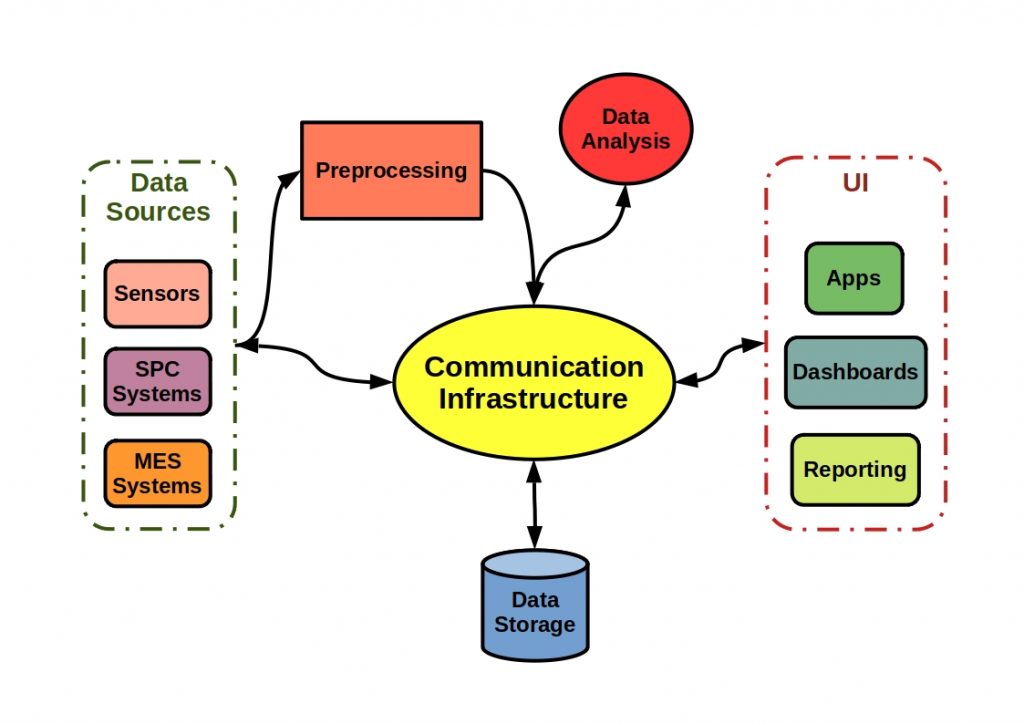

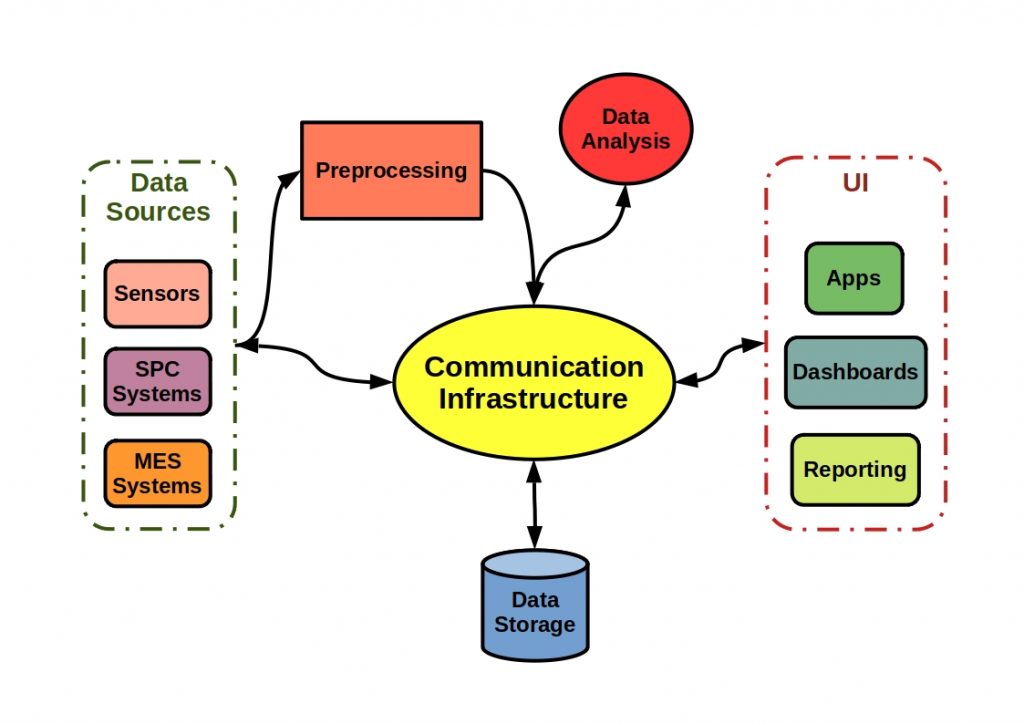

When people talk about Industry 4.0, they are referring to a new level of technological progress. The basis of this innovation is the Internet of Things (IoT). The software solutions of various company levels are networked to form cyber-physical systems and exchange information with each other in real time. In this way, production planning can take place in management and be implemented directly in production. As production becomes more complex in the future, mastering this complexity and the technologies behind it will require the corresponding know-how.

The software solutions presented here are systems optimized for individual business areas. Each software system is therefore an expert in its own field. This gives a company’s overall solution a decisive degree of modularity. On the other hand, this modularity always leads to increased complexity. The individual systems currently still operate very autonomously, so in the future it will become increasingly important to create bidirectional data pipelines, so-called data streams, between them.
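One common way to build such data streams is a publish/subscribe pattern, sketched below. This is an assumption chosen for illustration, not something the systems discussed here prescribe: the `DataStream` class, the topic names and the message fields are all hypothetical, and a real installation would use a message broker rather than an in-memory dictionary.

```python
from collections import defaultdict
from typing import Callable


class DataStream:
    """Minimal in-memory publish/subscribe hub (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the message to every system that subscribed to the topic.
        for handler in self._subscribers.get(topic, []):
            handler(message)


stream = DataStream()
erp_inbox: list[dict] = []
mes_inbox: list[dict] = []

# Two directions: the MES receives production orders from the ERP,
# and the ERP receives completion reports from the MES.
stream.subscribe("production_orders", mes_inbox.append)
stream.subscribe("completion_reports", erp_inbox.append)

stream.publish("production_orders", {"order": "PO-1", "quantity": 100})
stream.publish("completion_reports", {"order": "PO-1", "good_units": 97})
```

Because each system only knows the topics it uses, further systems such as PLM or ALM could be attached without changing the existing ones.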

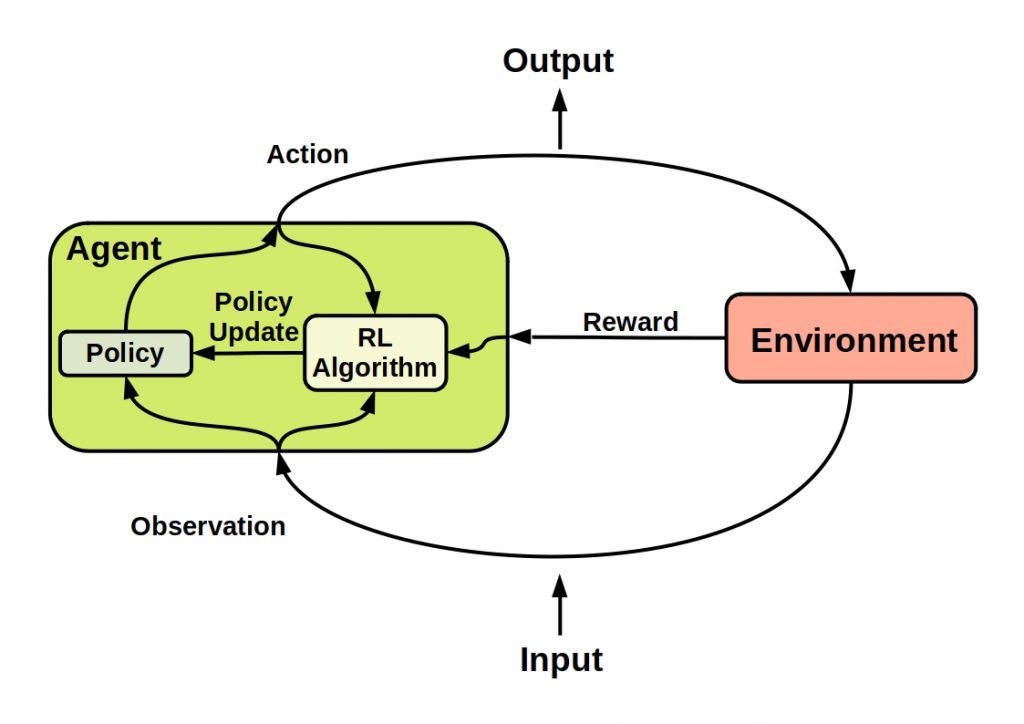

A decision made at the management level should be implemented in production and at the same time remain controllable at all levels. Ideally, the system should be able to carry out its own analyses. AI algorithms can help you reach sound decisions despite increasing complexity. This allows you to optimize your individual production steps and shorten life cycles.

The MES, for example, plays an important role here due to its proximity to production. It allows you to make important decisions quickly and implement production plans. In your company of the future, software solutions from various divisions are networked with each other, so you can form information chains, and the MES is part of this network.