NumPy vs Pandas – Every branch of science and business now accumulates ever larger amounts of data that must be analyzed and managed efficiently, so learning a programming language has become indispensable across disciplines.

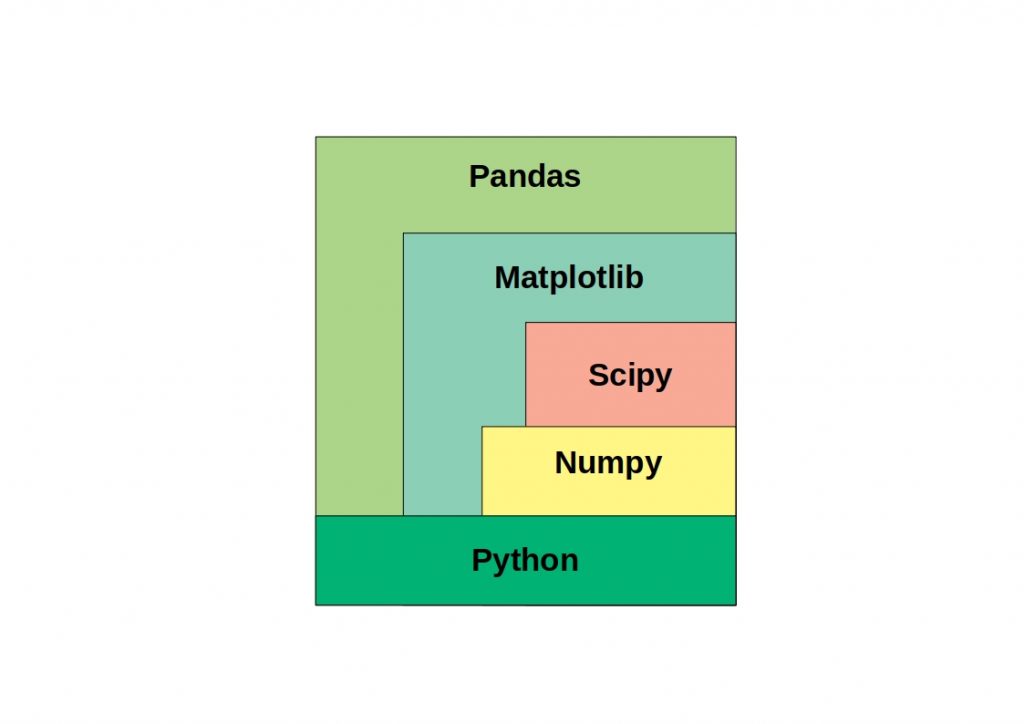

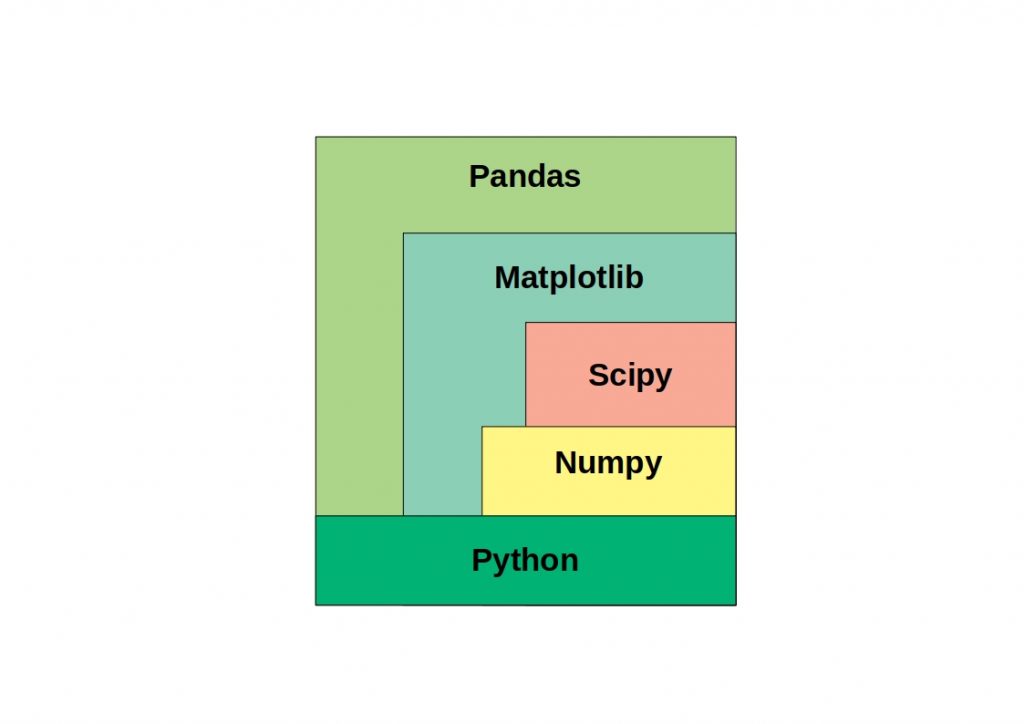

For many, Python is their first programming language in the classical sense, thanks to its beginner-friendliness and its strength in mathematics. Through its modular system of powerful mathematical libraries, Python gives access to ready-made, optimized computational tools.

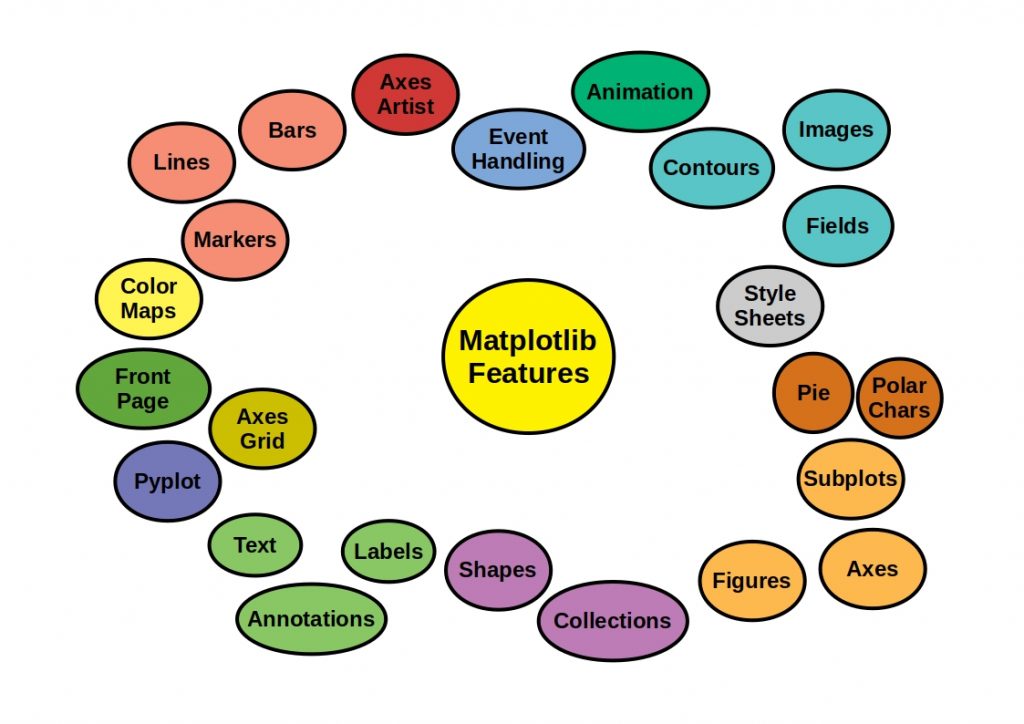

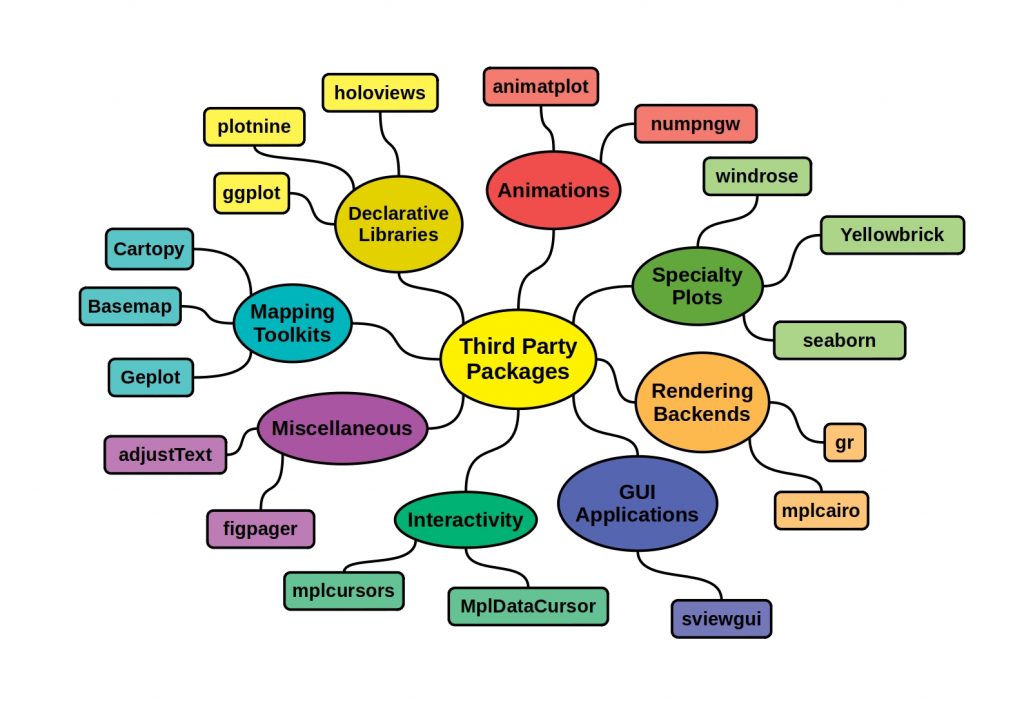

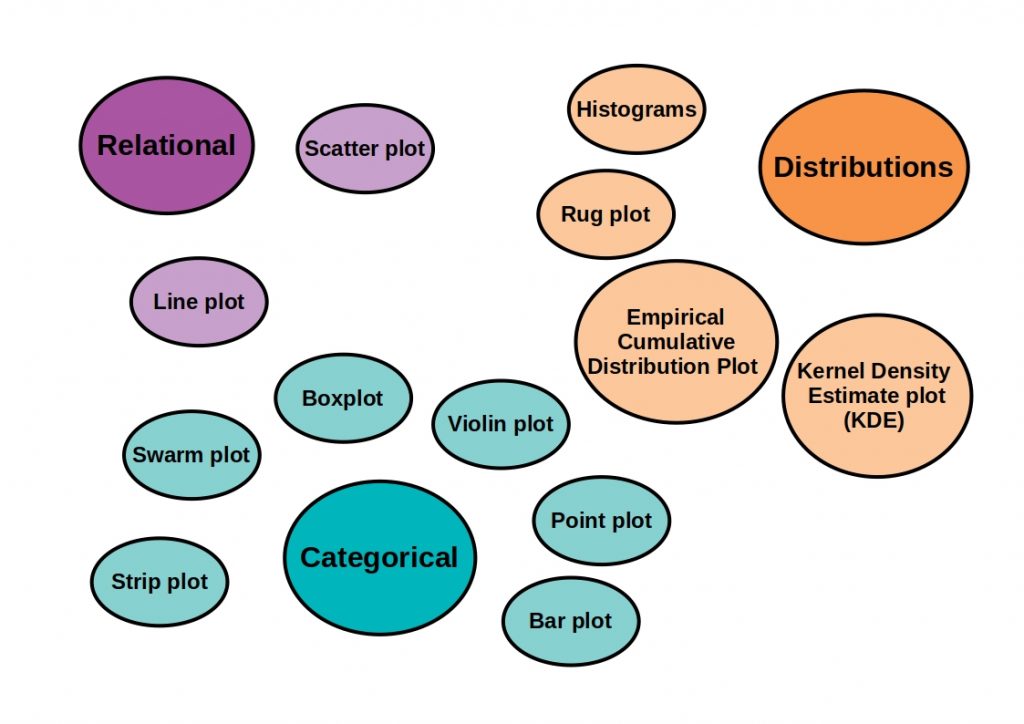

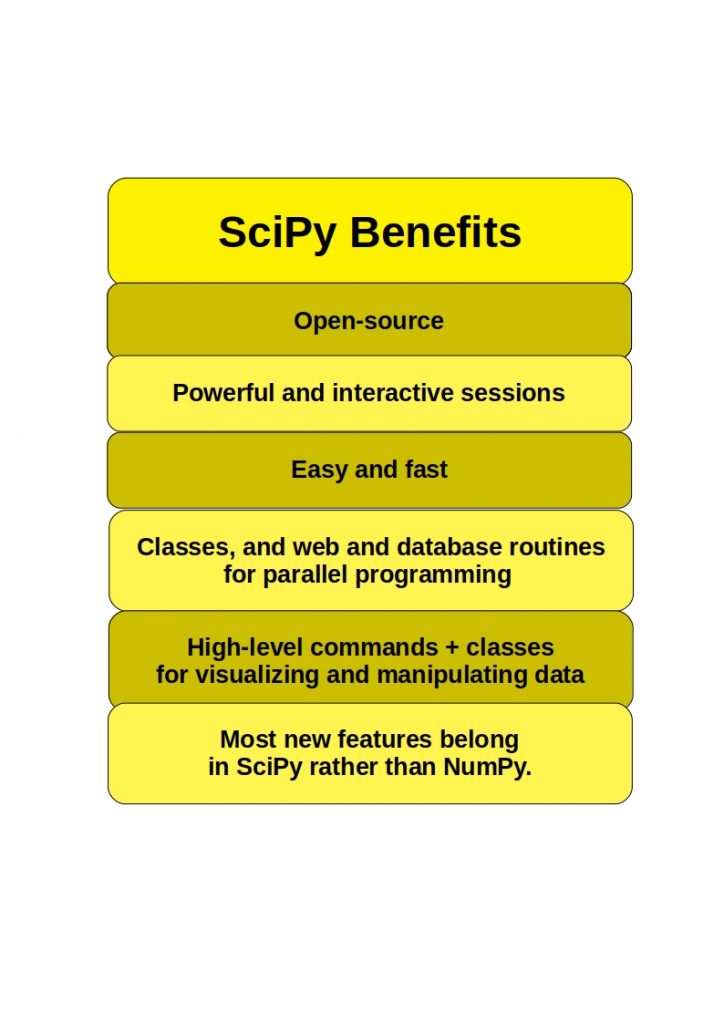

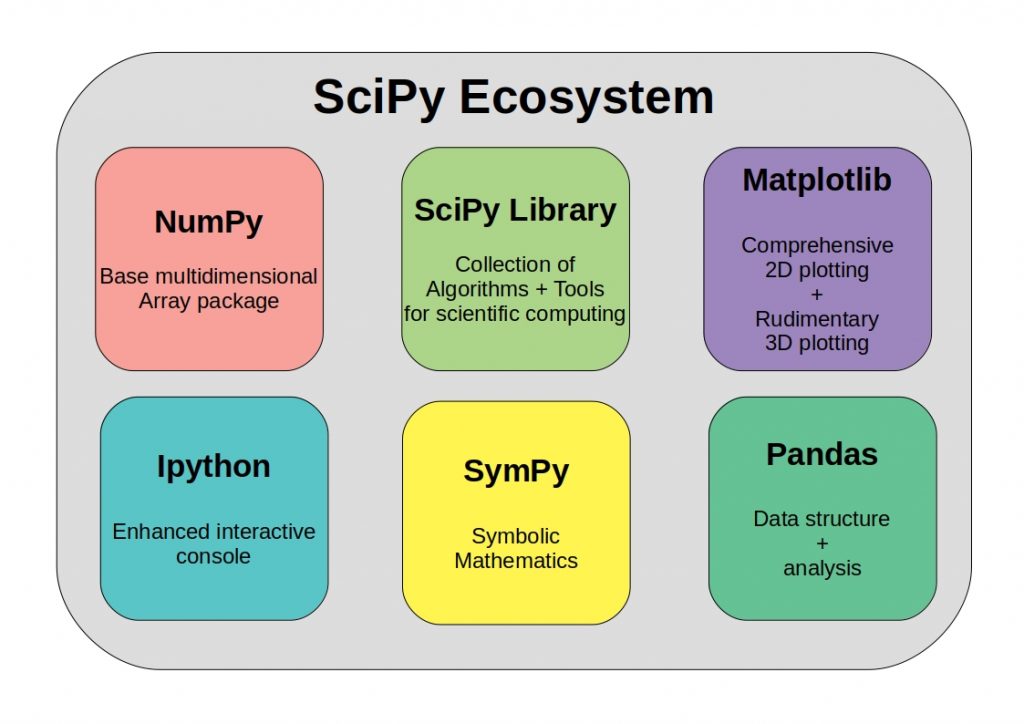

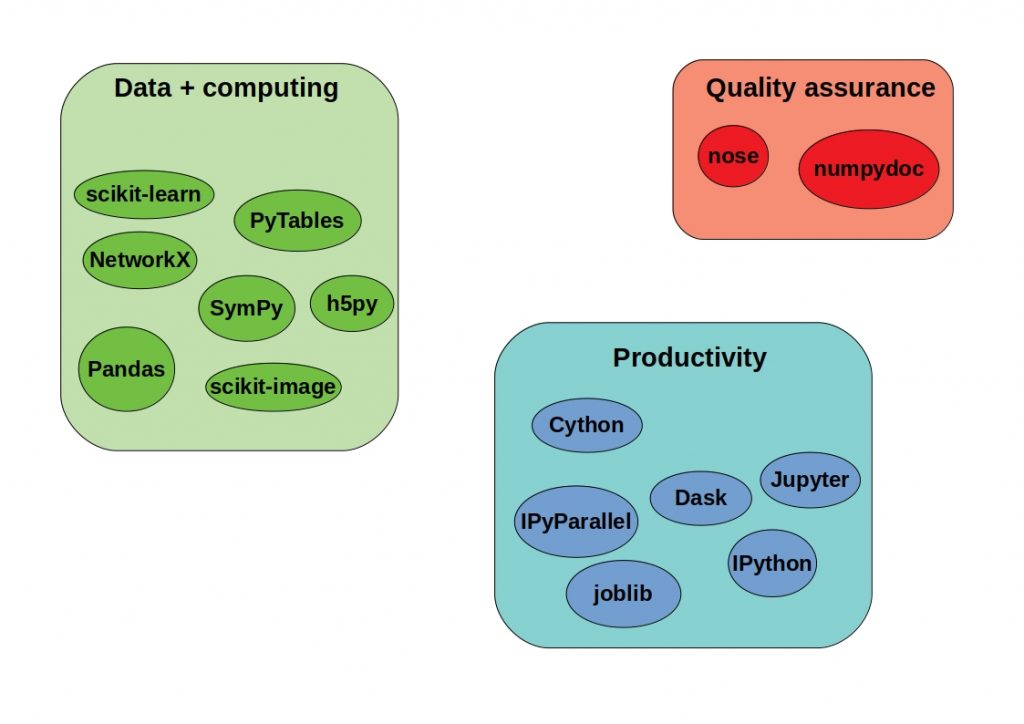

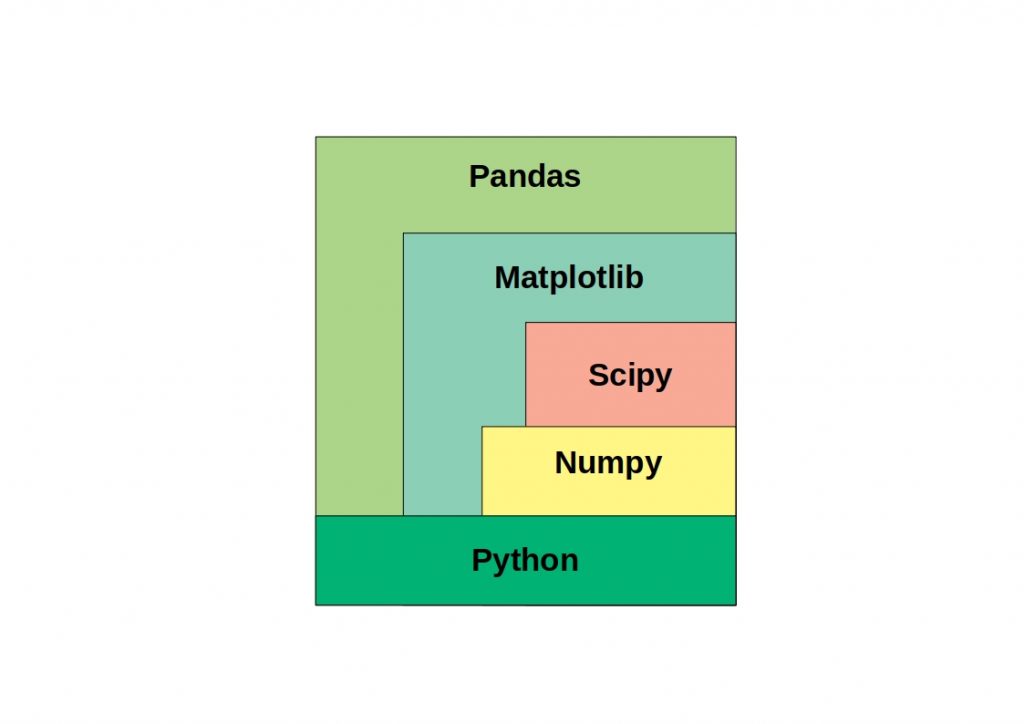

However, this range of choices can quickly become overwhelming. Which library or framework suits my purposes? Will this tool save me work, or will I run into its limits? Here you can learn more about SciPy and why you should definitely prefer it over MATLAB, and here we compared the two Python visualization libraries matplotlib and seaborn. These Python libraries work well together and combine into a very capable data science toolkit. NumPy and Pandas are perhaps two of the best-known Python libraries. But what are the differences between them? We will get to the bottom of this question in this article.

What actually is NumPy?

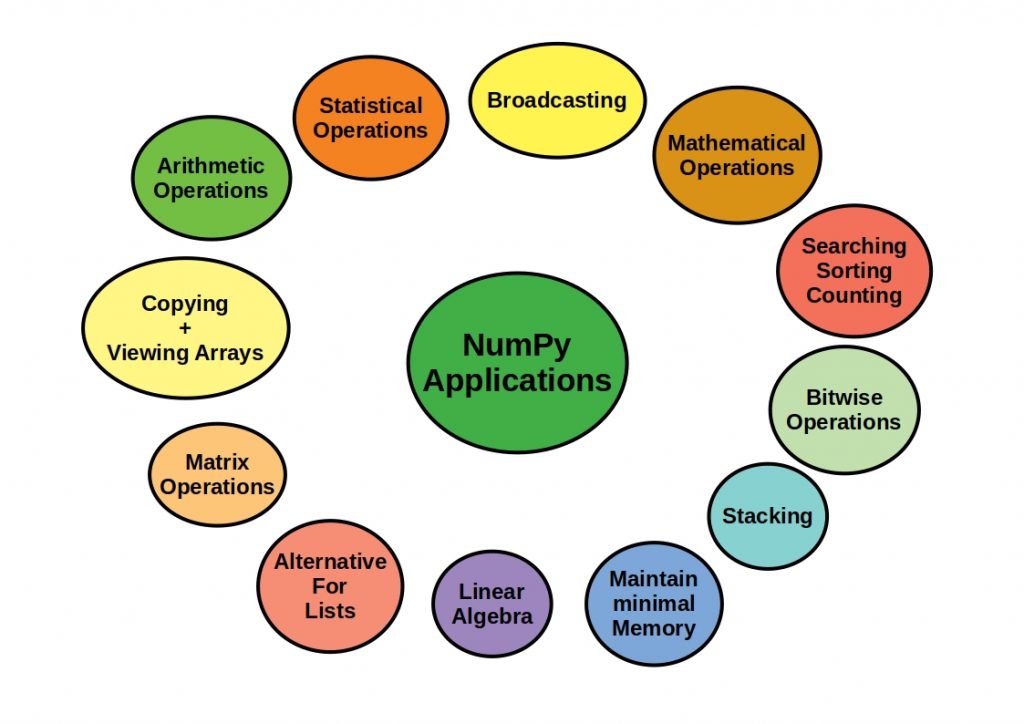

NumPy stands for “Numerical Python” and is an open source Python library for array-based calculations. It was first released in 1995 as Numeric, making it the first implementation of a Python matrix package, and rereleased as NumPy in 2006. This library is intended to allow easy handling of vectors, matrices, or large multidimensional arrays in general.
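As a minimal sketch of what array-based calculation looks like in practice, the following creates a vector, a matrix, and a three-dimensional array and applies two basic operations. The variable names and values are purely illustrative.

```python
import numpy as np

# Illustrative data: a vector, a diagonal matrix, and a 3-D array of zeros
vector = np.array([1.0, 2.0, 3.0])
matrix = np.array([[1.0, 0.0, 0.0],
                   [0.0, 2.0, 0.0],
                   [0.0, 0.0, 3.0]])
cube = np.zeros((2, 3, 4))

# Arithmetic is applied element by element, without an explicit loop
scaled = vector * 2

# The @ operator computes the matrix-vector product
product = matrix @ vector

print(scaled)     # [2. 4. 6.]
print(product)    # [1. 4. 9.]
print(cube.ndim)  # 3
```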

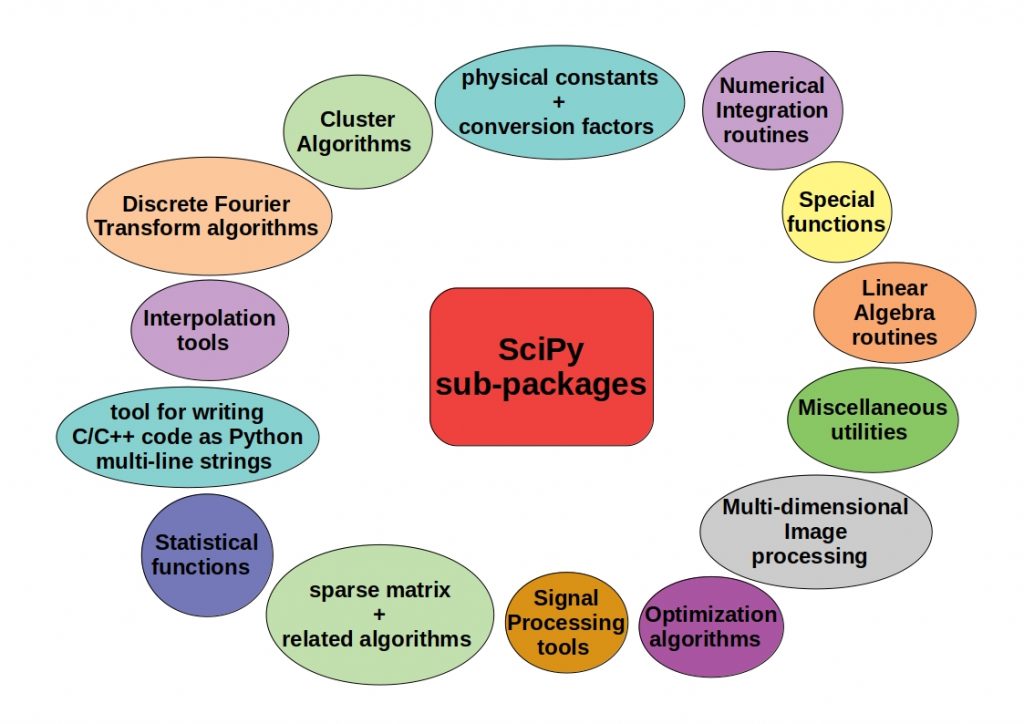

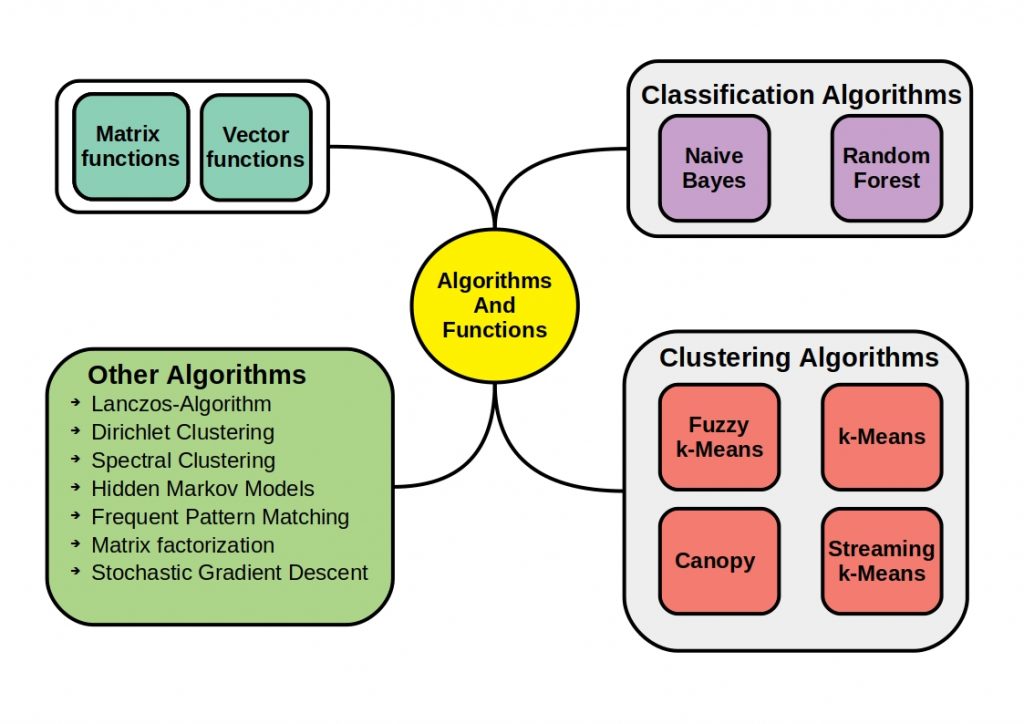

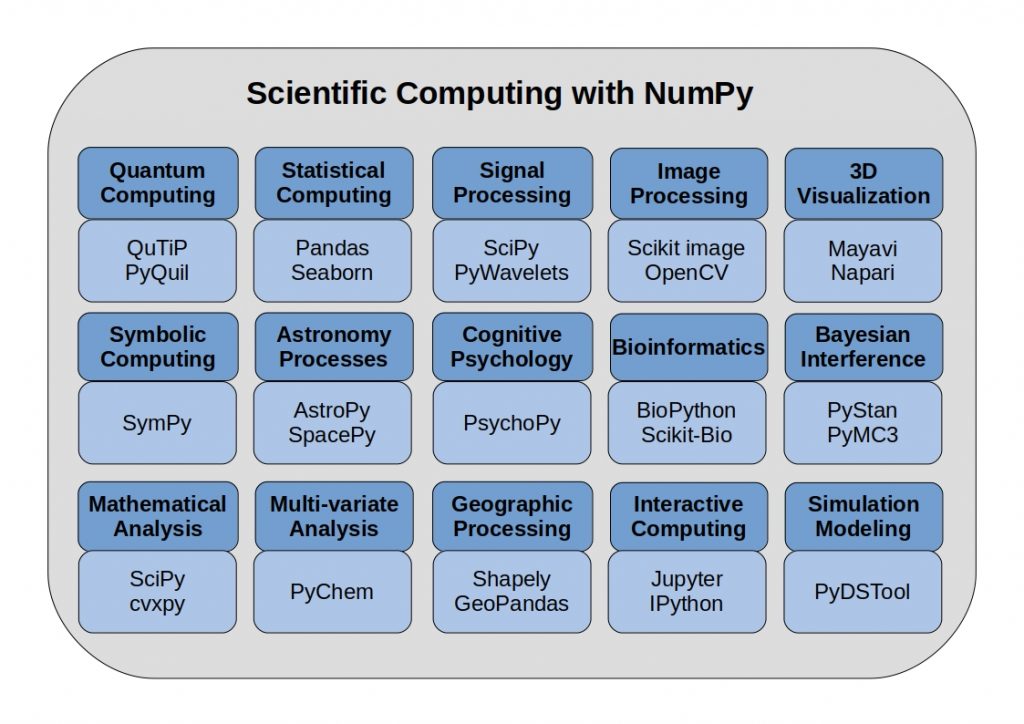

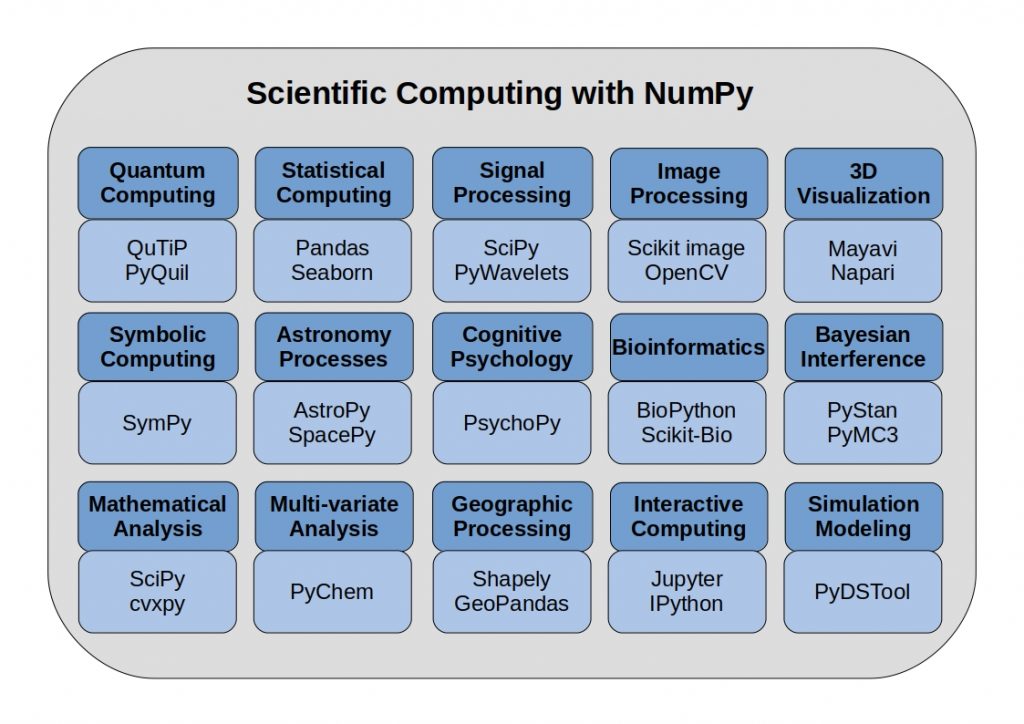

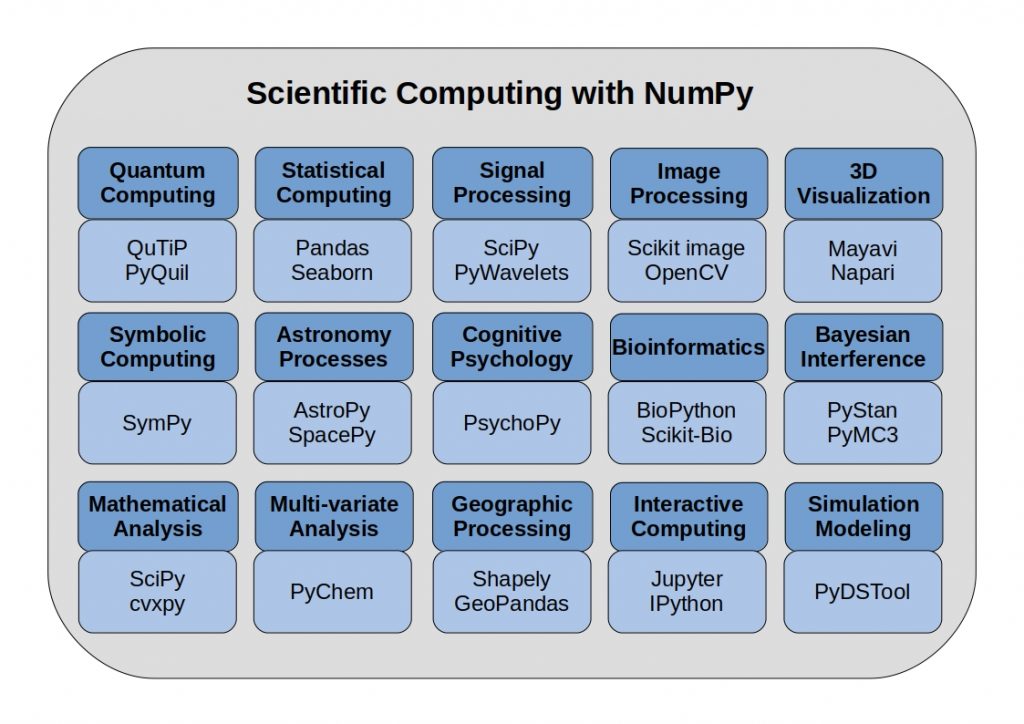

For performance reasons, its core is written in C, a low-level language close to the machine. NumPy is compatible with a wide variety of Python libraries, some of which build on it and add further useful functions such as minimization, regression, and Fourier transforms.
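NumPy itself already ships basic versions of two of these functions, a least-squares polynomial fit and a fast Fourier transform. The sketch below uses only those two, on made-up sample data; dedicated libraries such as SciPy go considerably further.

```python
import numpy as np

# Made-up sample data: points lying exactly on the line y = 2x + 1
x = np.arange(10, dtype=float)
y = 2.0 * x + 1.0

# Linear regression as a least-squares fit of a degree-1 polynomial
slope, intercept = np.polyfit(x, y, deg=1)

# Fourier transform of a sine wave with 5 full cycles over 64 samples
n = 64
t = np.arange(n) / n
signal = np.sin(2 * np.pi * 5 * t)
spectrum = np.abs(np.fft.rfft(signal))

# The strongest frequency component sits in bin 5
dominant_bin = int(np.argmax(spectrum))

print(round(slope, 6), round(intercept, 6))
print(dominant_bin)
```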

Python and Science

As mentioned earlier, Python is one of the most intensively used programming languages for data processing and analysis in scientific research across all disciplines. What is very interesting here is that, at the data level, the approaches to solving problems are similar across disciplines. As a result, exchanging ideas has become indispensable and increasingly brings the sciences together.

This is only a side note, but it underlines the importance of this programming language and its libraries, many of which are open source and developed further by a community.

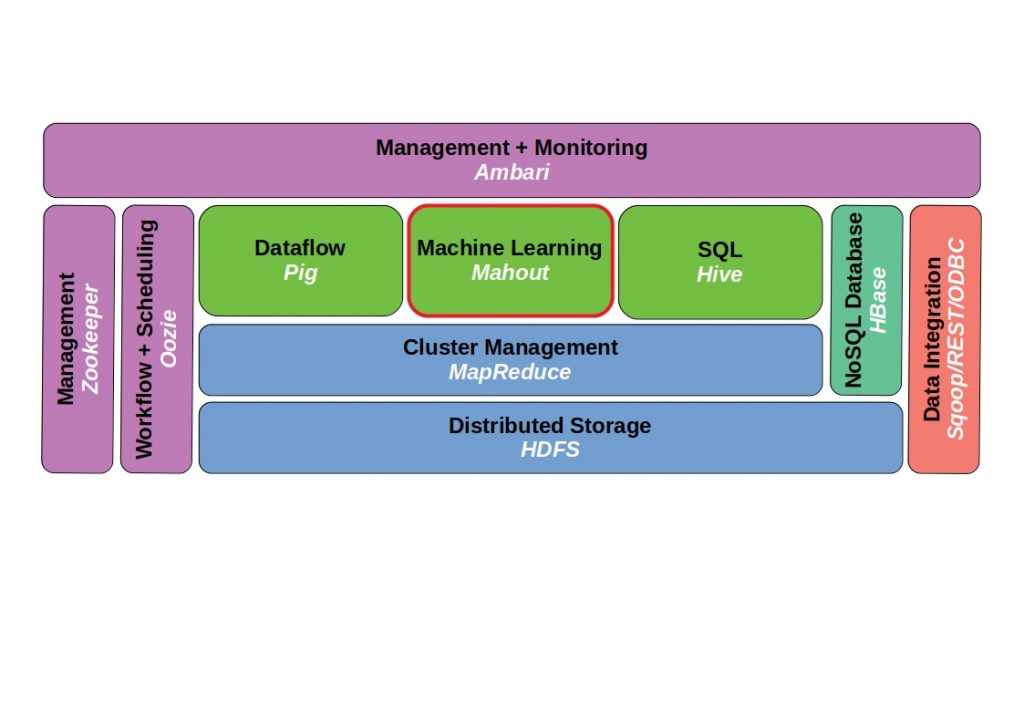

NumPy was developed specifically for scientific calculations and forms the basis for many specific frameworks and libraries.

The elementary NumPy data structure

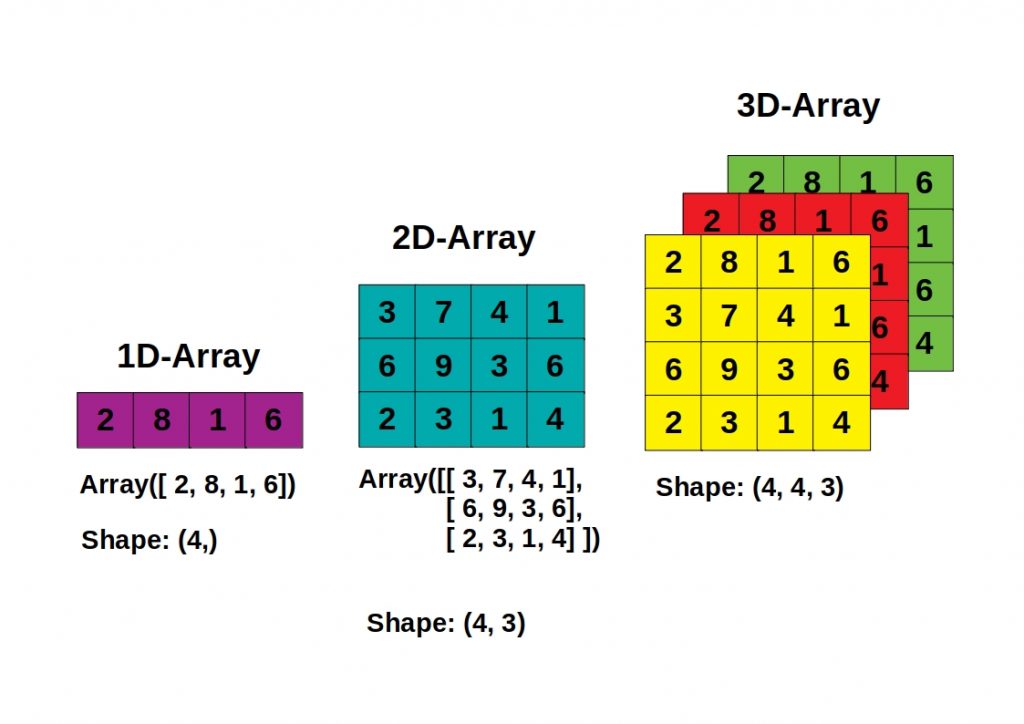

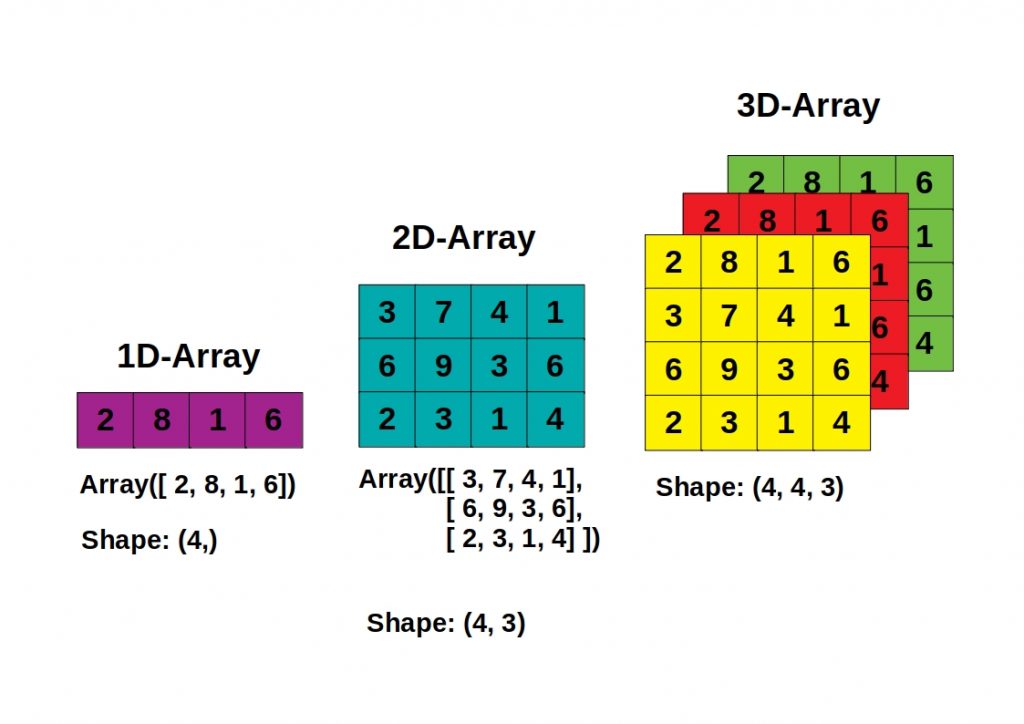

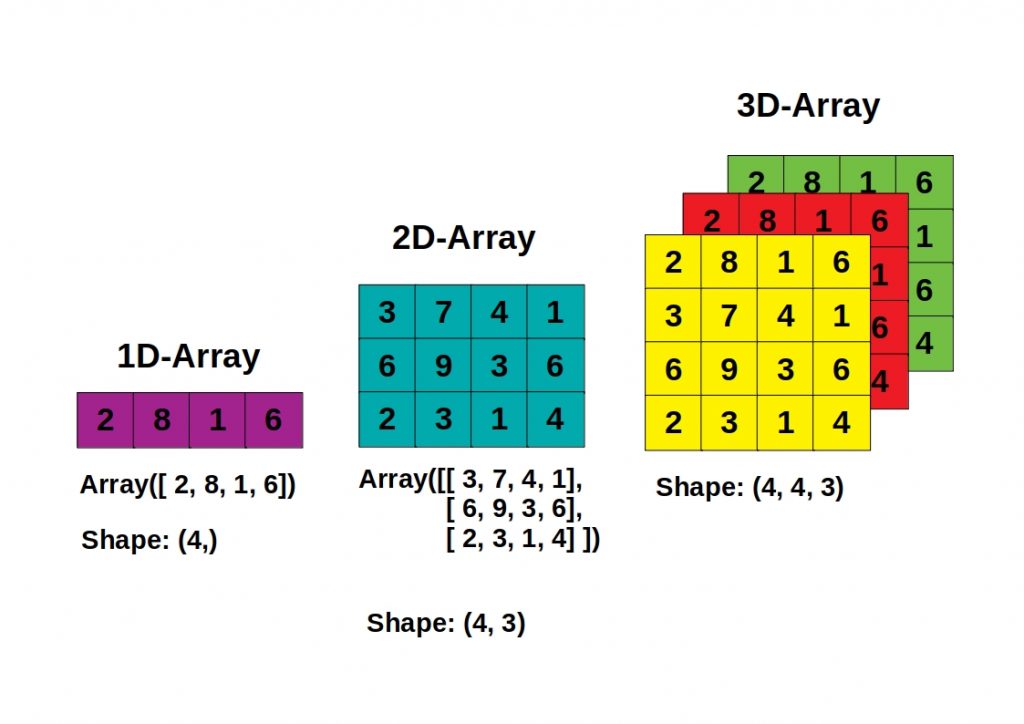

The core functionality of NumPy is based on the “ndarray” data structure.

Such an array can only hold elements of the same data type. It always consists of a pointer to a contiguous memory area together with the metadata describing the data stored there. This allows the data to be accessed very efficiently and manipulated as needed.

For example, the shape can be changed via so-called reshaping, smaller subarrays can be created from a given larger array, and arrays can be split or merged.
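The metadata mentioned above can be inspected directly on any array. The following sketch, with an illustrative array `a`, shows the element type, the shape, the byte strides, and the contiguity flag, and how NumPy picks one common type when the input values differ.

```python
import numpy as np

# Six 32-bit integers arranged as 2 rows and 3 columns
a = np.arange(6, dtype=np.int32).reshape(2, 3)

print(a.dtype)                  # int32
print(a.shape)                  # (2, 3)
print(a.strides)                # (12, 4): bytes to step to the next row / column
print(a.flags["C_CONTIGUOUS"])  # True

# Mixing an int and a float yields one common element type
mixed = np.array([1, 2.5])
print(mixed.dtype)              # float64
```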
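These manipulations might look as follows. This is a small sketch with arbitrary example values, not an exhaustive tour of the available functions.

```python
import numpy as np

a = np.arange(12)            # the numbers 0 to 11 in one dimension

# Reshaping: same data, viewed as 3 rows and 4 columns
grid = a.reshape(3, 4)

# Subarray: rows 0-1 and columns 1-2 of the larger array
sub = grid[:2, 1:3]

# Splitting into two halves along the column axis
left, right = np.split(grid, 2, axis=1)

# Merging the halves back together
merged = np.concatenate([left, right], axis=1)

print(sub)                           # [[1 2]
                                     #  [5 6]]
print(left.shape, right.shape)       # (3, 2) (3, 2)
print(np.array_equal(merged, grid))  # True
```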

What is Pandas?

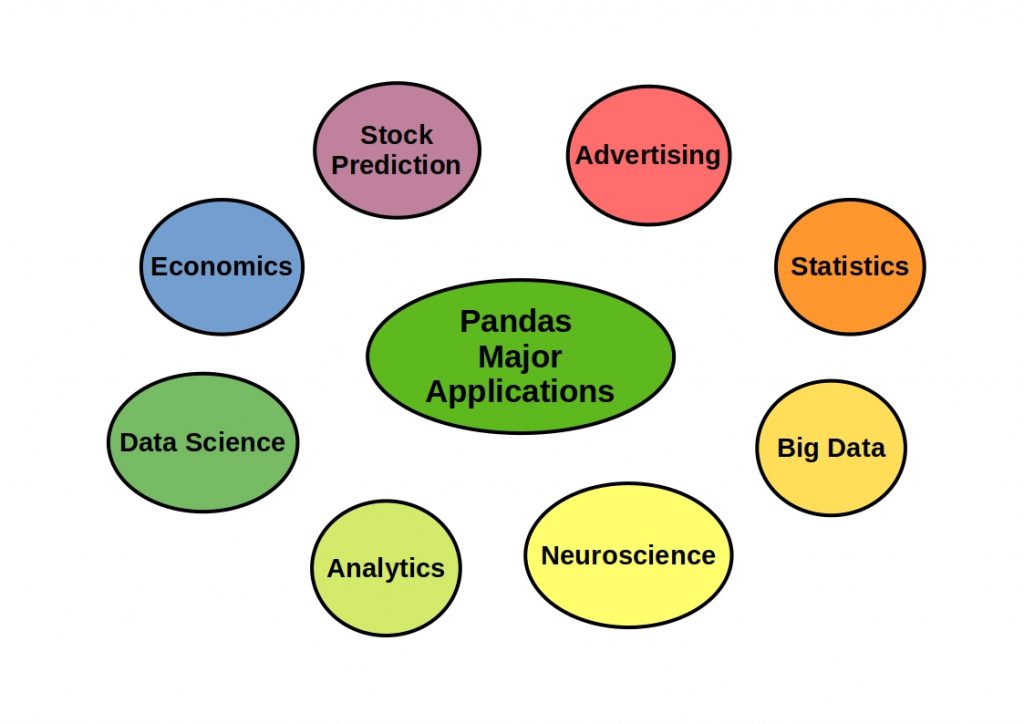

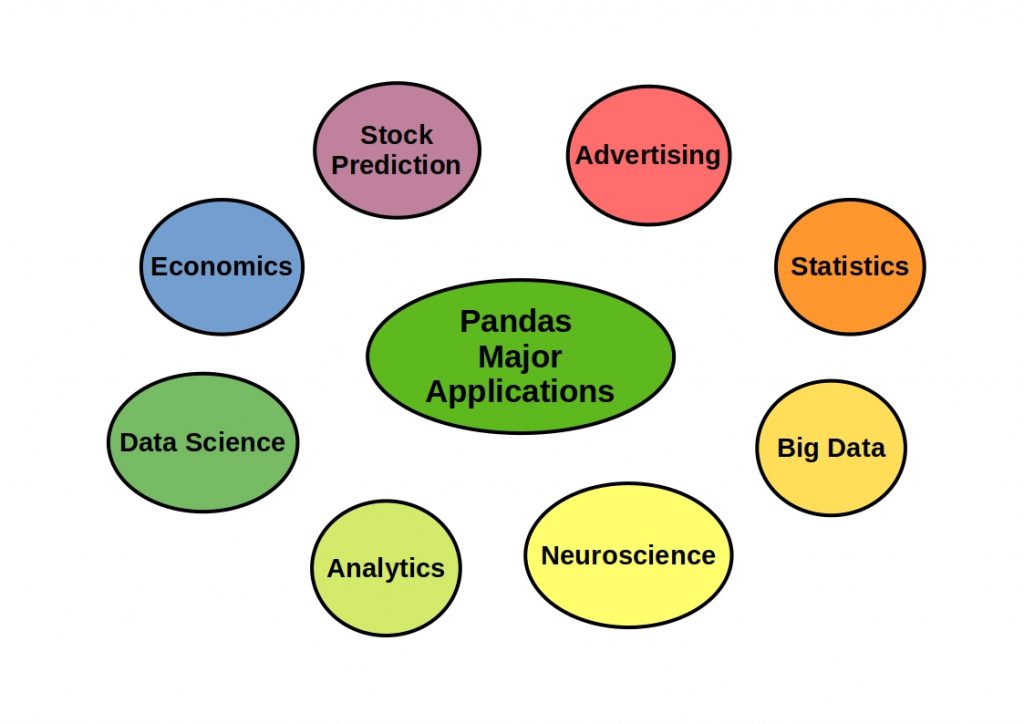

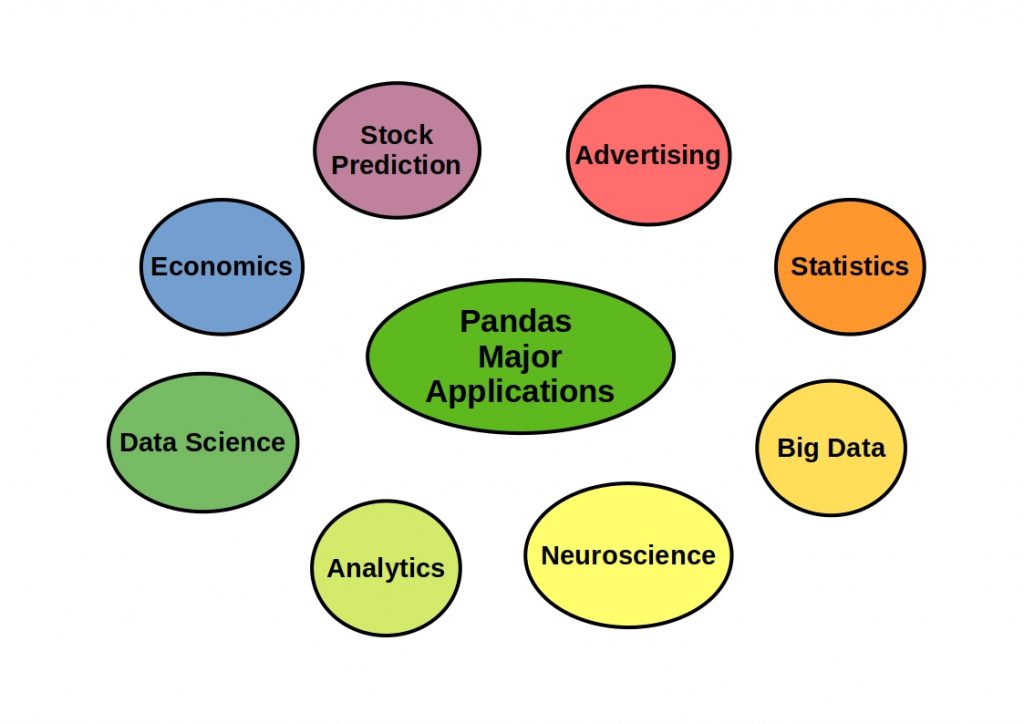

Pandas is an open source library for data analysis and manipulation in Python. It was first released in 2008 by Wes McKinney and is written in Python, Cython, and C. Pandas is used in almost all fields and is popular across industries worldwide.

The name Pandas is derived from Panel Data.

Its strength lies in the processing and analysis of tabular data and time series.

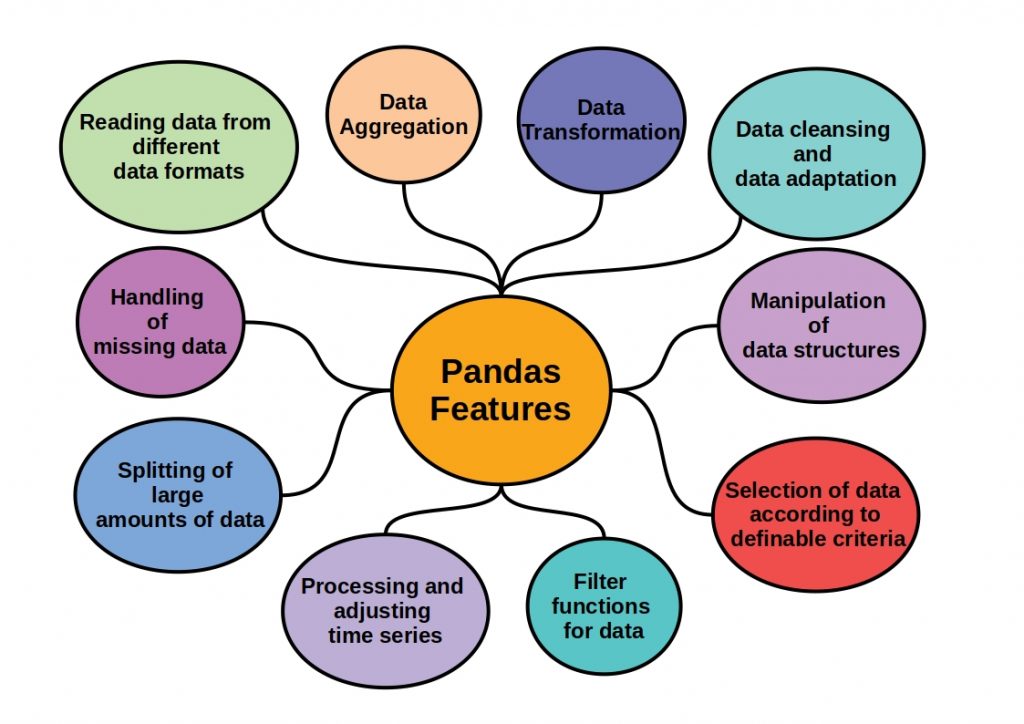

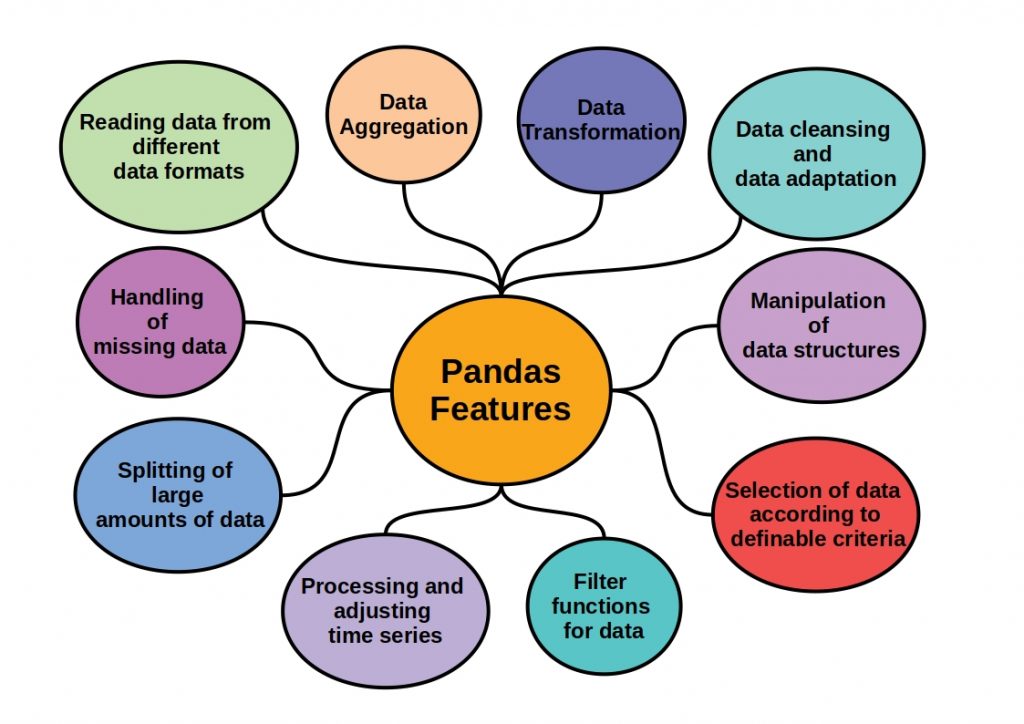

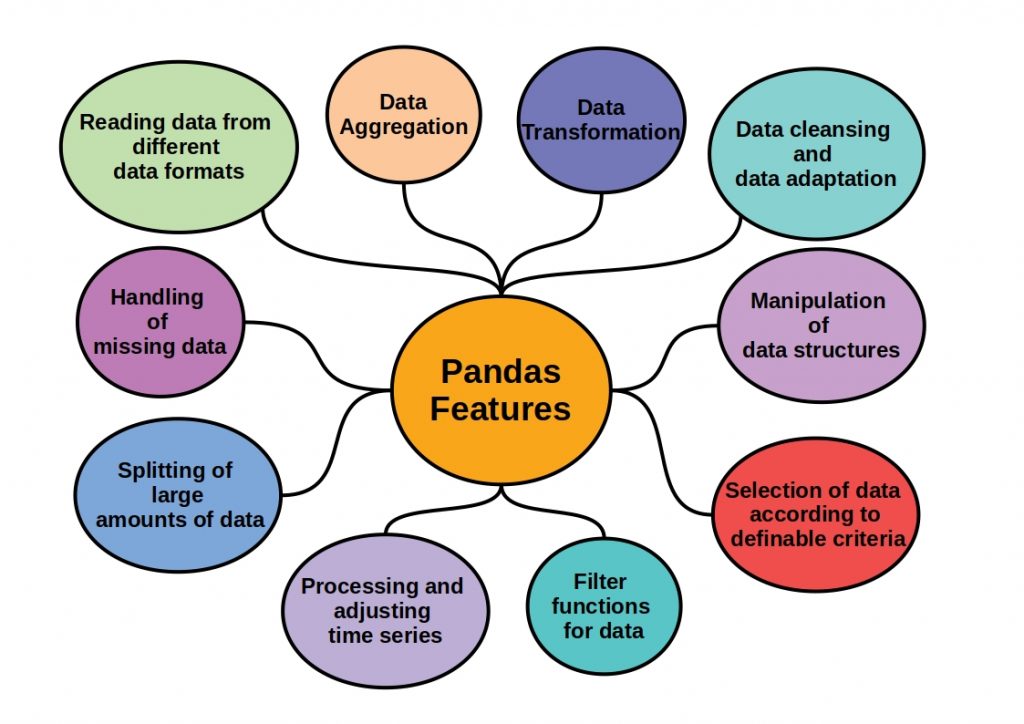

Pandas offers a large number of operations, especially for data pre-processing. In addition to high-performance filter functions, it can transform, manipulate, aggregate, and clean very large data sets with more than 500,000 rows.
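To make these operations concrete, here is a minimal sketch on a tiny, made-up table. The column names `city` and `temp_c` are assumptions for illustration only; the same calls apply to much larger data sets.

```python
import numpy as np
import pandas as pd

# Made-up example data with one missing measurement
df = pd.DataFrame({
    "city": ["Berlin", "Berlin", "Munich", "Munich", "Hamburg"],
    "temp_c": [21.0, np.nan, 25.0, 23.0, 19.0],
})

# Clean: drop rows where the temperature is missing
cleaned = df.dropna(subset=["temp_c"])

# Filter: keep only rows warmer than 20 degrees Celsius
warm = cleaned[cleaned["temp_c"] > 20]

# Transform: add a derived column in Fahrenheit
warm = warm.assign(temp_f=warm["temp_c"] * 9 / 5 + 32)

# Aggregate: mean temperature per city
mean_by_city = cleaned.groupby("city")["temp_c"].mean()

print(warm)
print(mean_by_city)
```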

Pandas' fundamental data structures

As a basis for the individual functions and tools that Pandas provides, the library defines its own data objects. These objects are one- or two-dimensional, and older Pandas versions also included a three-dimensional object.

The one-dimensional Series object corresponds to a data structure with two arrays: one array as the index and one array holding the actual data. A Series can hold any data type (integers, strings, floating point numbers, Python objects), and, unlike a plain NumPy ndarray, its values are addressed by index labels rather than only by position.
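The two-array structure of a Series can be seen in a short sketch like this one, where the labels `a`, `b`, `c` are arbitrary examples.

```python
import pandas as pd

# A Series pairs an index array with a data array
s = pd.Series([10, 20, 30], index=["a", "b", "c"])

print(list(s.index))   # ['a', 'b', 'c']
print(s.to_numpy())    # [10 20 30]
print(s["b"])          # 20, looked up by label rather than by position

# Values of different Python types are stored with the generic object dtype
mixed = pd.Series([1, "two", 3.0])
print(mixed.dtype)     # object
```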

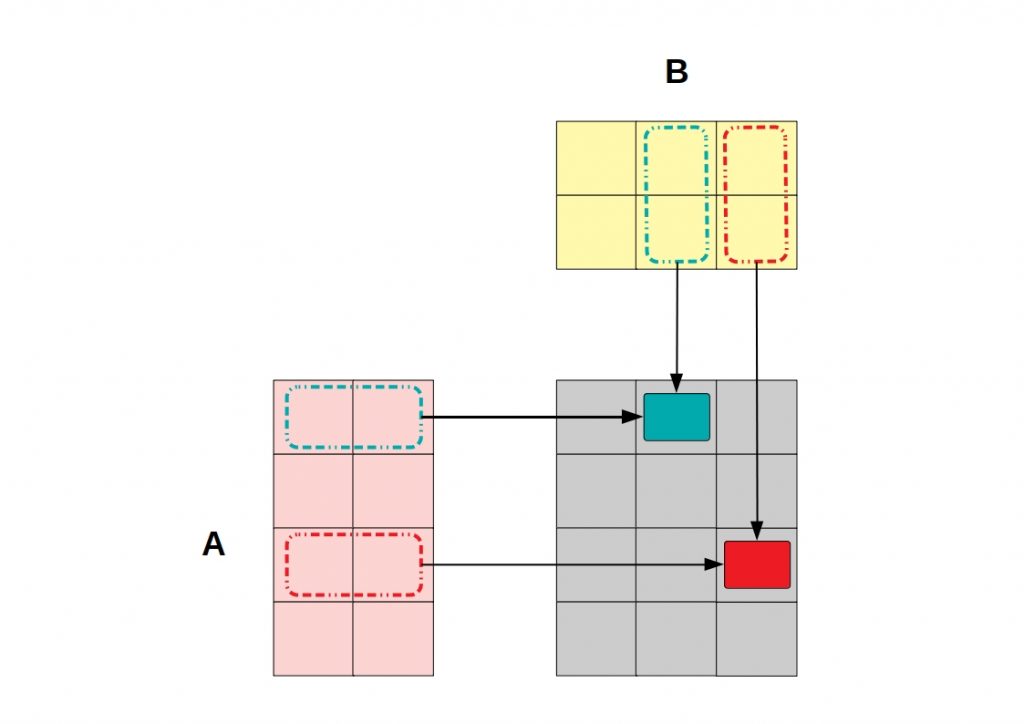

The two-dimensional DataFrame object contains an ordered collection of columns. Each column can have a different data type, and each value is uniquely identified by a row index and a column index.

The eponymous Panel object was a three-dimensional dataset consisting of DataFrames. Its major axis corresponded to the row index of each DataFrame and its minor axis to the columns of each DataFrame. Note that Panel was deprecated in Pandas 0.20 and removed in Pandas 0.25; current versions represent higher-dimensional data with a DataFrame that has a hierarchical index (MultiIndex).
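A small sketch of such a DataFrame, using invented column names and row labels, shows both the per-column data types and the lookup of a single value by row and column.

```python
import pandas as pd

# Invented example table with explicit row labels
df = pd.DataFrame(
    {
        "item": ["apple", "pear"],
        "quantity": [3, 5],
        "price": [0.5, 0.8],
    },
    index=["r1", "r2"],
)

# Each column carries its own data type
print(df.dtypes)

# A single value is addressed by row label and column label
value = df.loc["r2", "quantity"]
print(value)  # 5
```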

NumPy vs Pandas – Conclusion

Both libraries have similarities, which stem from the fact that Pandas is based on NumPy. But is it an either-or question? No, clearly not. Pandas builds on NumPy but adds so many features of its own that both libraries clearly deserve to exist side by side. They simply serve different purposes, and each should be used for what it does best.

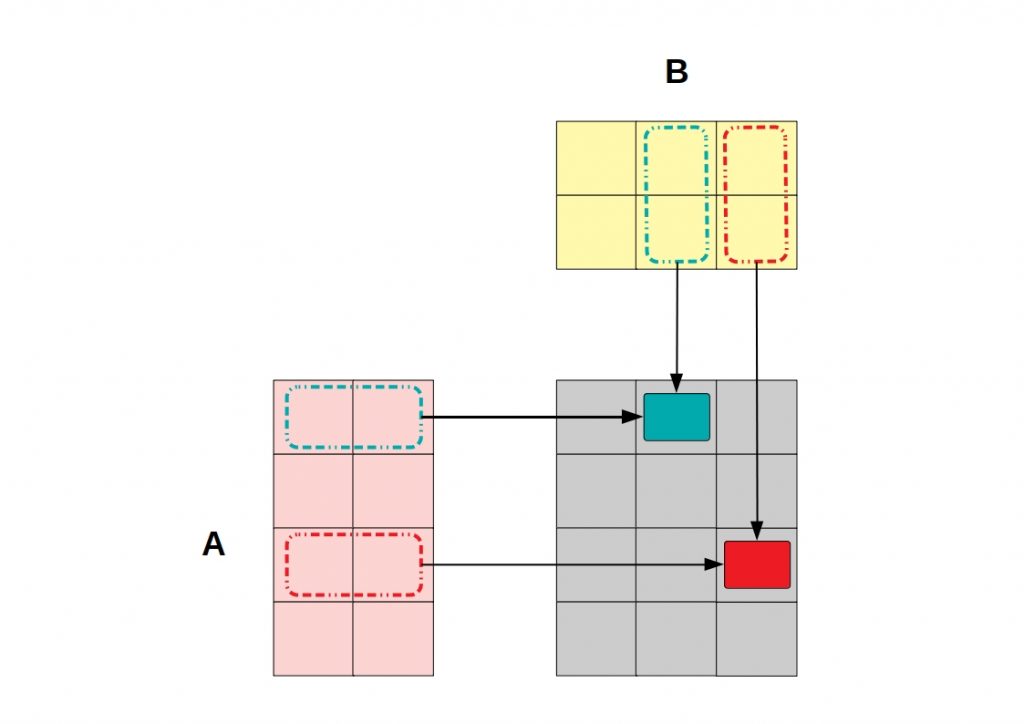

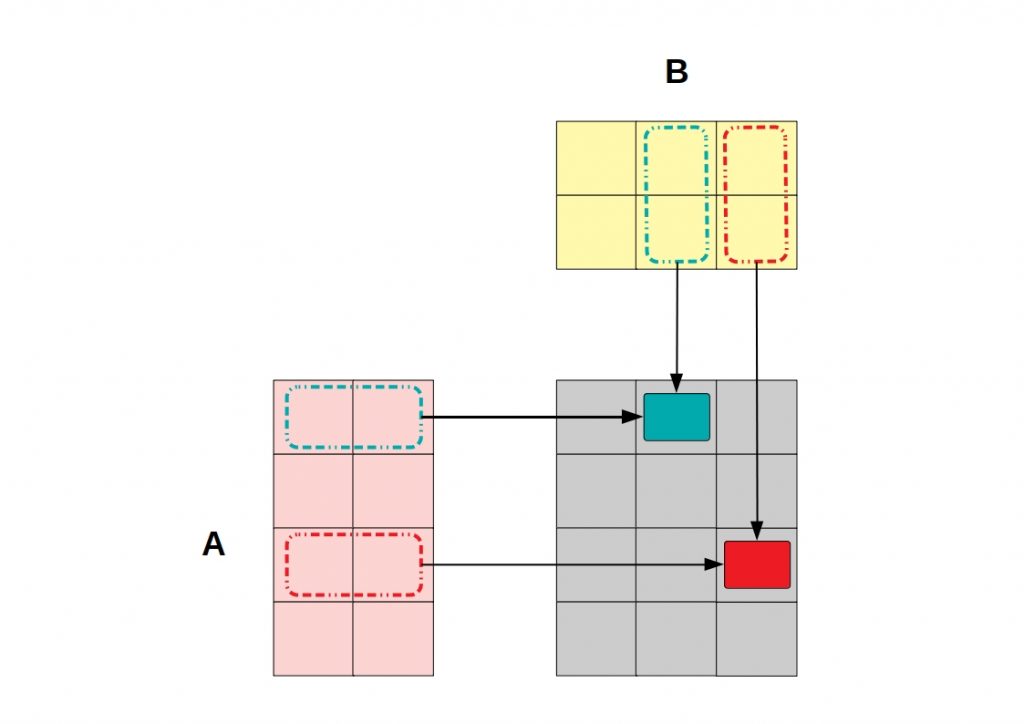

One of the main differences between the two open source libraries is the data structure they use. Pandas supports analysis and manipulation of tabular data, while NumPy works mainly with numerical data in arrays that can have any number of dimensions. The two forms can easily be converted into each other.
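The conversion in both directions can be sketched as follows. The column names `x` and `y` are chosen only for illustration.

```python
import numpy as np
import pandas as pd

arr = np.array([[1.0, 2.0],
                [3.0, 4.0]])

# NumPy array -> DataFrame, adding column labels
df = pd.DataFrame(arr, columns=["x", "y"])

# DataFrame -> NumPy array, dropping the labels again
back = df.to_numpy()

print(df)
print(np.array_equal(arr, back))  # True
```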

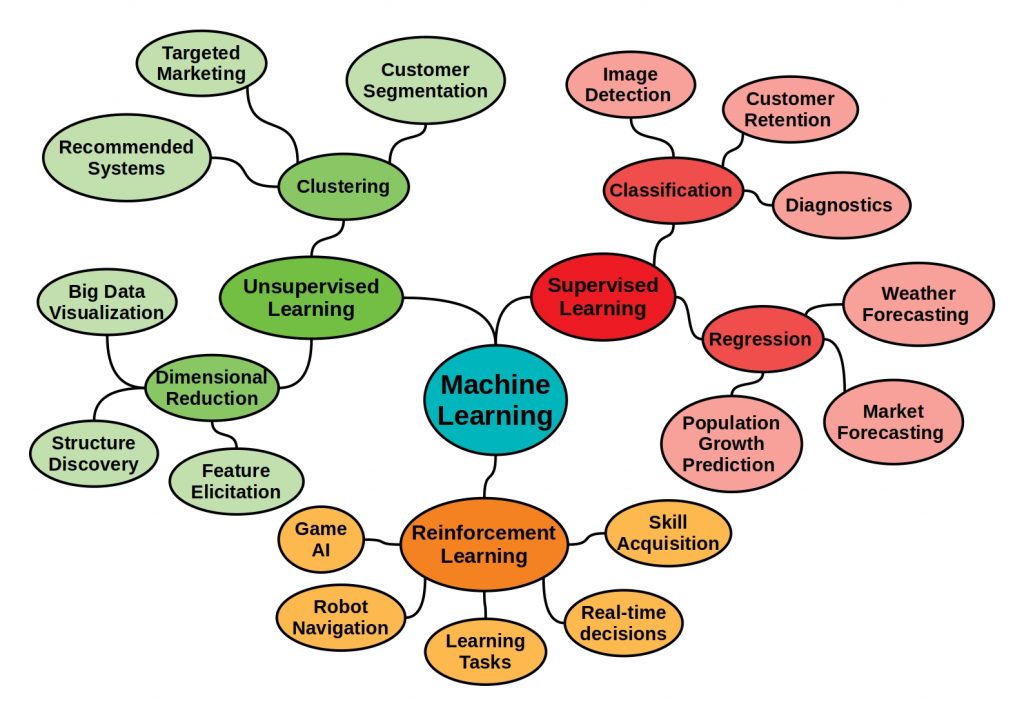

Pandas is generally considered the more performant choice for very large data sets (500K rows and more). Data preprocessing and reading from external data sources are also easier with Pandas, and the result can then be passed as a NumPy array to complex machine learning or deep learning algorithms. If you want to know more about machine learning methods and their fields of application, take a look at our article on the topic.
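The workflow of reading external data with Pandas, preprocessing it, and handing it on as NumPy arrays could look roughly like this. To keep the sketch self-contained, an in-memory string stands in for an external CSV file, and the column names `feature_a`, `feature_b`, and `label` are hypothetical.

```python
import io
import pandas as pd

# An in-memory stand-in for an external CSV file (hypothetical columns)
csv_text = io.StringIO(
    "feature_a,feature_b,label\n"
    "1.0,2.0,0\n"
    "3.0,,1\n"
    "5.0,6.0,1\n"
)

# Read the data and drop the incomplete second row
df = pd.read_csv(csv_text)
df = df.dropna()

# Hand features and labels on as plain NumPy arrays
X = df[["feature_a", "feature_b"]].to_numpy()
y = df["label"].to_numpy()

print(X.shape)  # (2, 2)
print(y)        # [0 1]
```

Arrays like `X` and `y` are the form that most machine learning libraries expect as input.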