Is Hadoop dead? In the IT sector in particular, technologies and software architectures do not have a long shelf life. As new technical insights are gained, the requirements and use cases for the systems also change. As young as the term “big data” is, it too is undergoing constant change. The increased acceptance of open source projects in the business community has led to greater diversification and thus to many mutually beneficial competitive situations.

Apache Hadoop has been considered the one all-purpose solution for over a decade: a Big Data ecosystem in which Hadoop works together with many other extensions. In recent years, however, more and more people have claimed that the demands on data processing have changed, and they see Hadoop as an outdated concept.

A few years ago, the primary goal was to efficiently handle ever-increasing data volumes, but today iterative real-time analyses on dynamic data sets are required. Data management systems must not be self-contained, but must remain manipulable and monitorable at all times.

So is Hadoop dead, or still indispensable?

What is Hadoop?

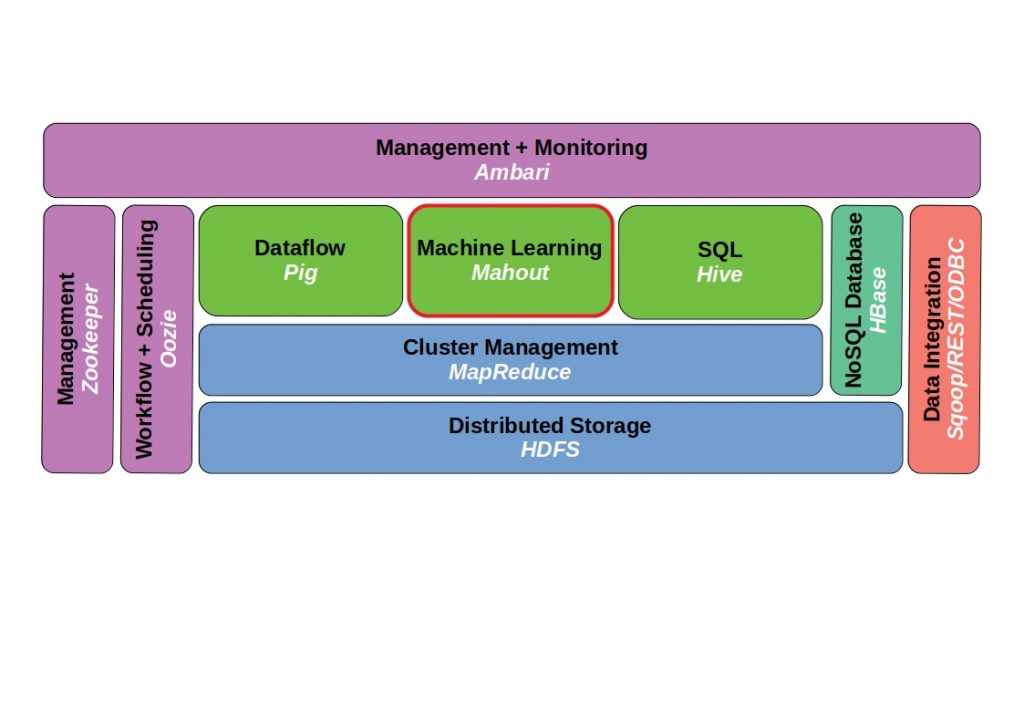

Hadoop is a Java-based open source Big Data framework for scalable, distributed software. It was originally based on Google’s MapReduce algorithm and enables computationally intensive processing of large data sets by parallelizing the work across computer clusters, i.e. large numbers of networked computers, using multiple components that work together.

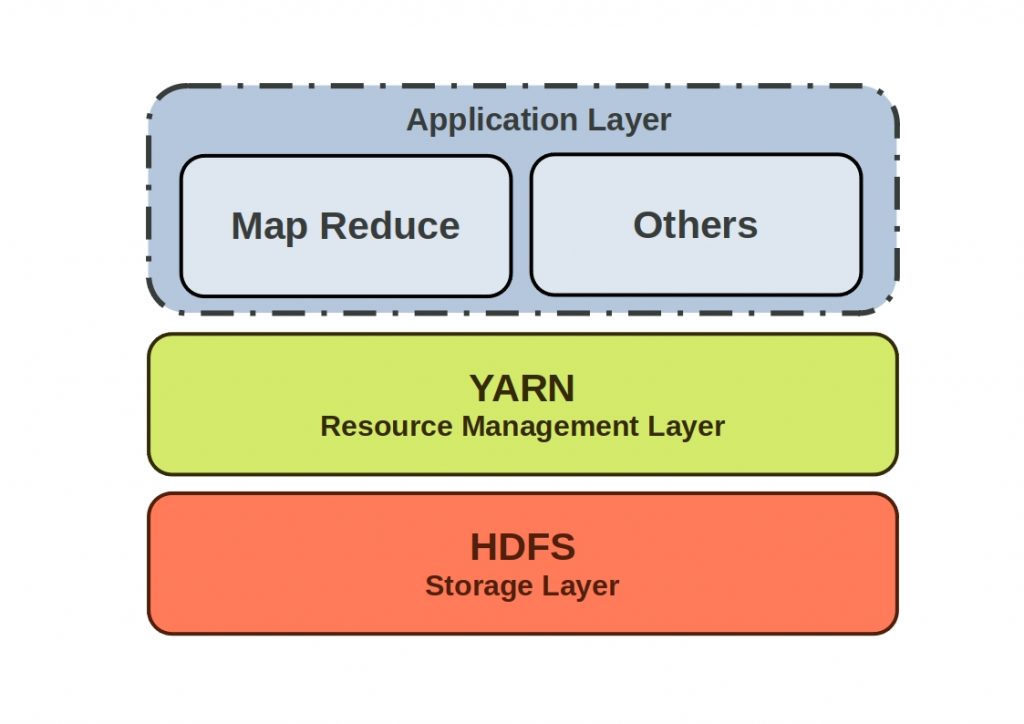

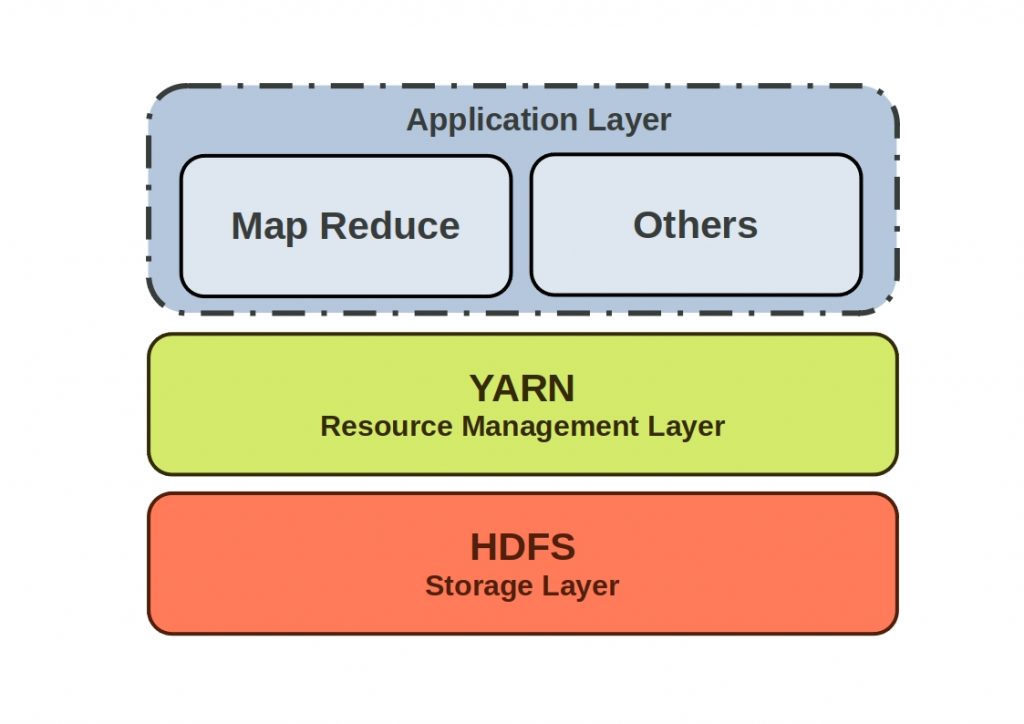

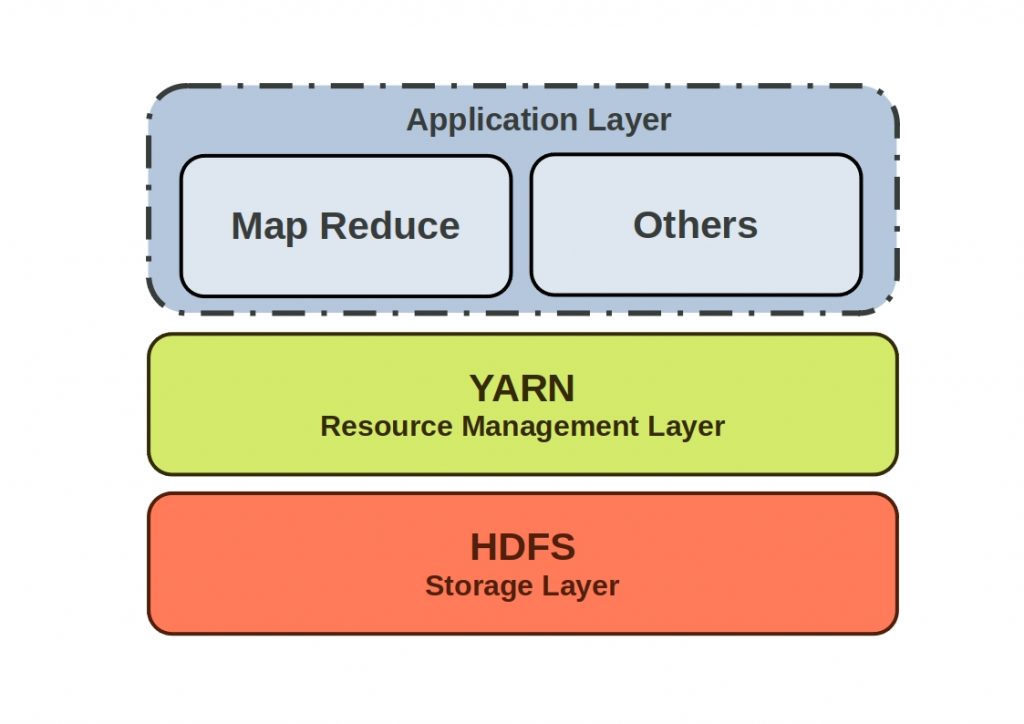

The Hadoop ecosystem is composed of the Hadoop Common, an interface for all other components. It connects Hadoop to the file system of the computers and contains the libraries.In the Hadoop Distributed File System

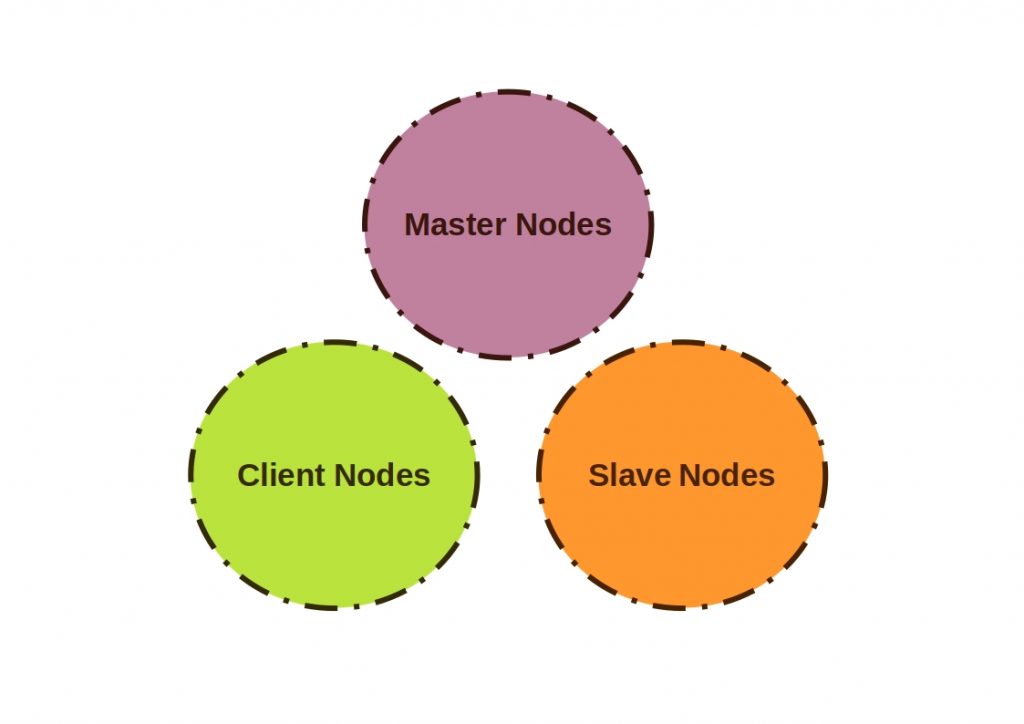

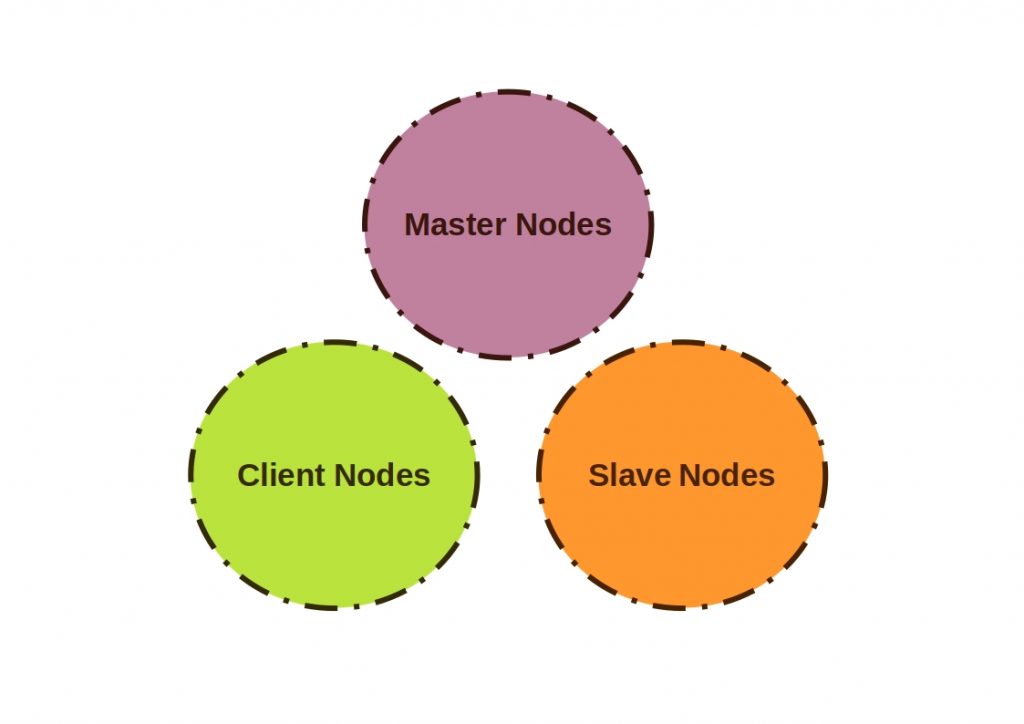

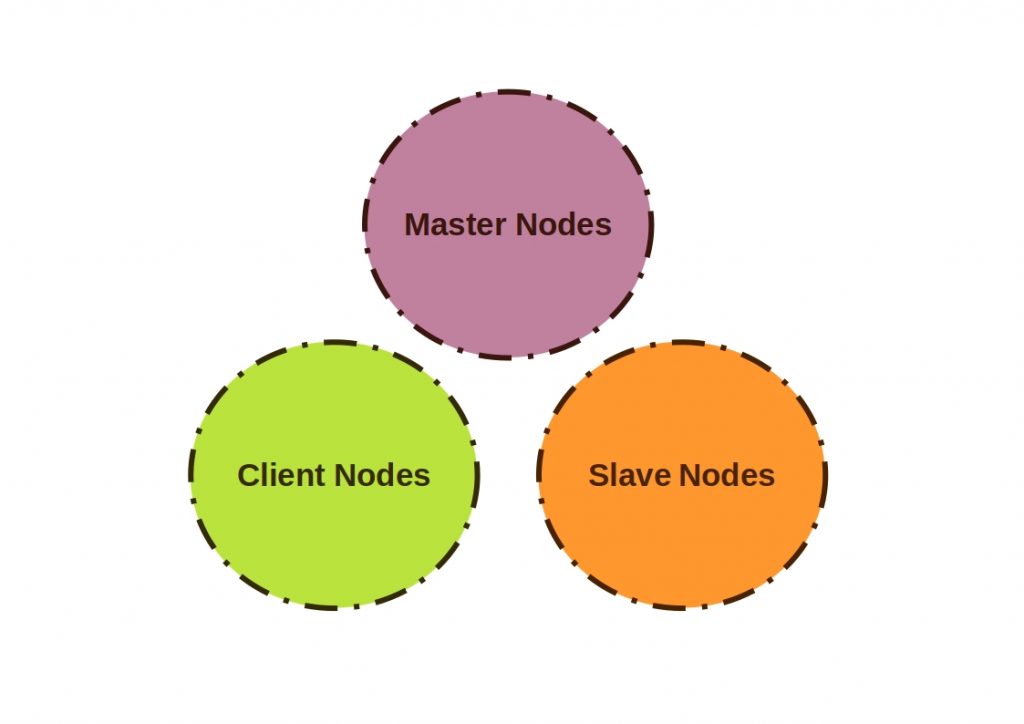

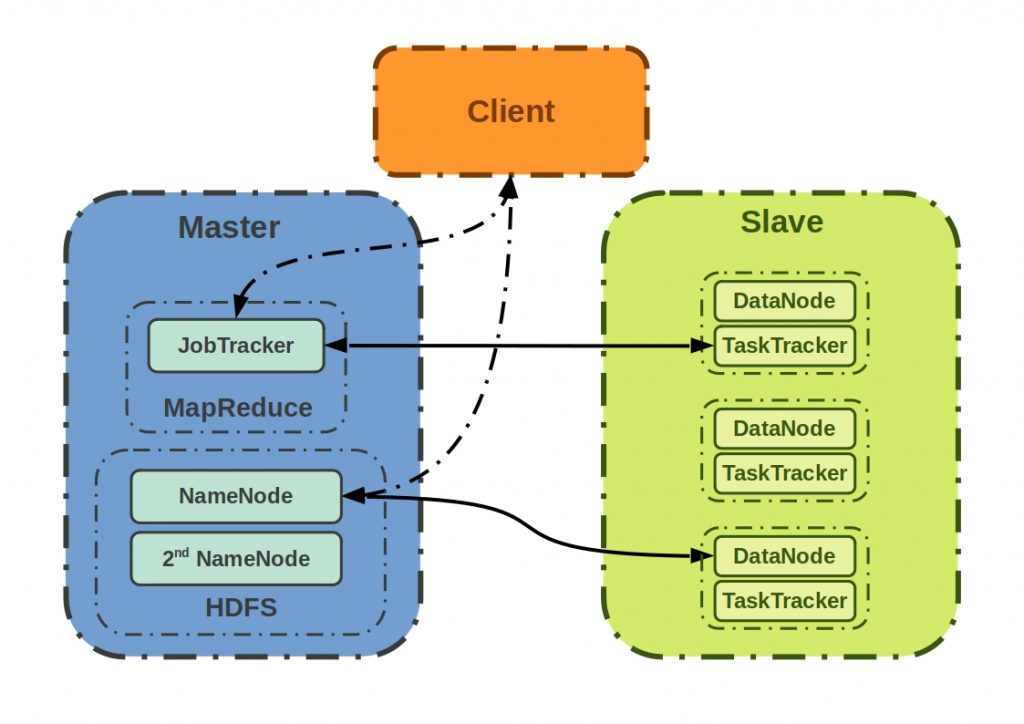

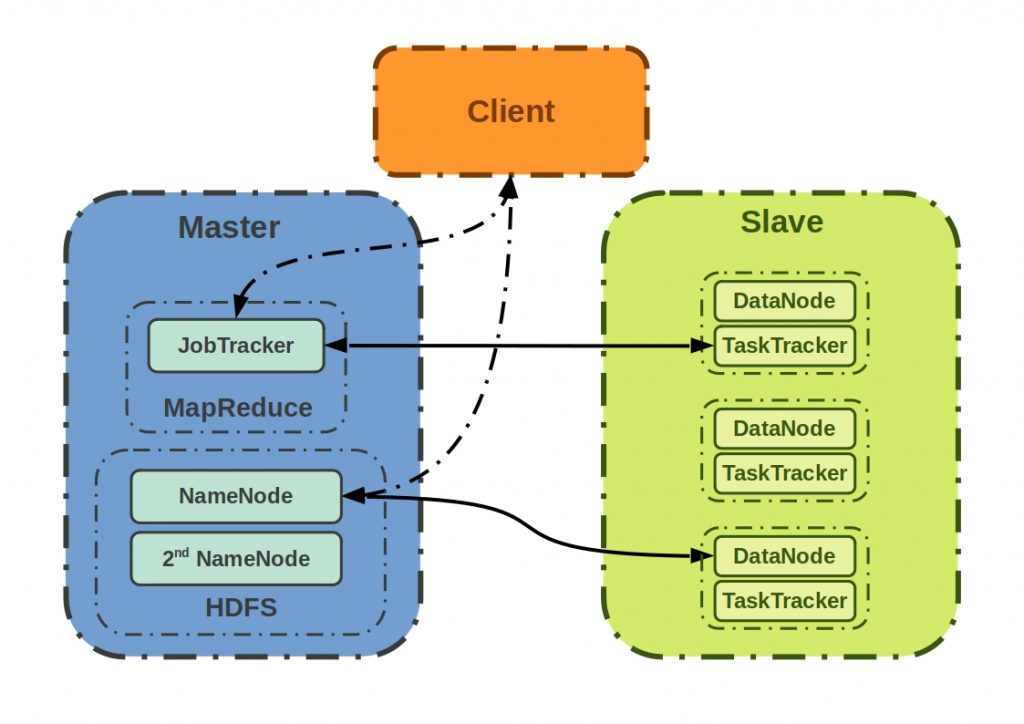

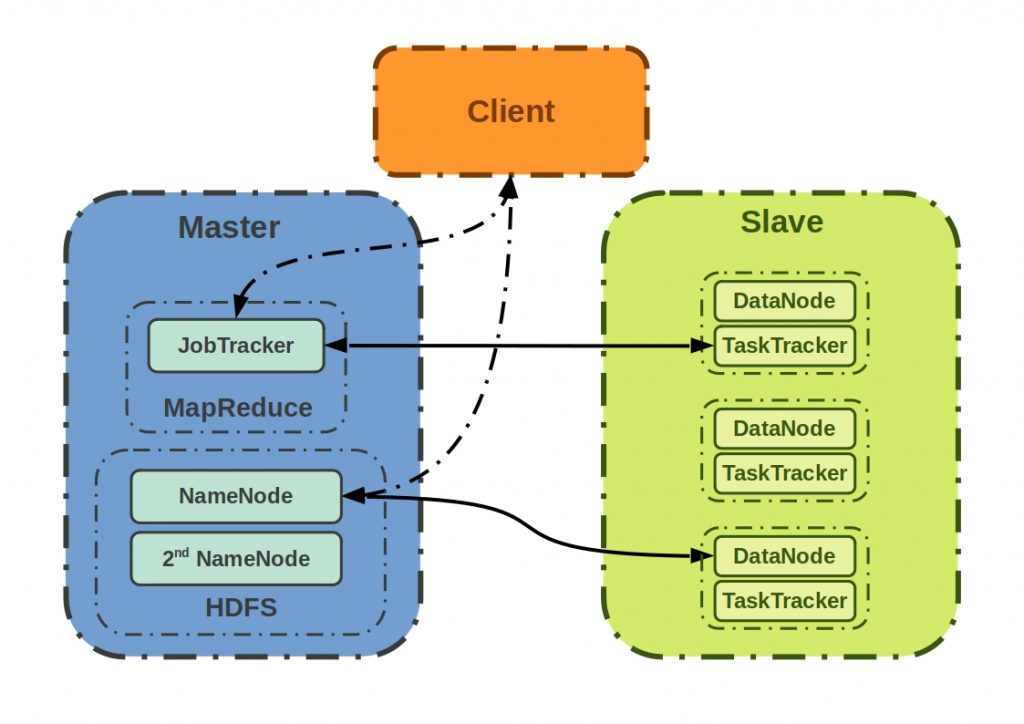

( HDFS ) very large amounts of data are stored. This is organized as a server cluster with master and slave nodes. The resources are controlled via the Yet Another Resource Negotiator (YARN) component. This resource manager distributes the individual tasks to the available resources, such as CPU and memory.

What is the MapReduce algorithm?

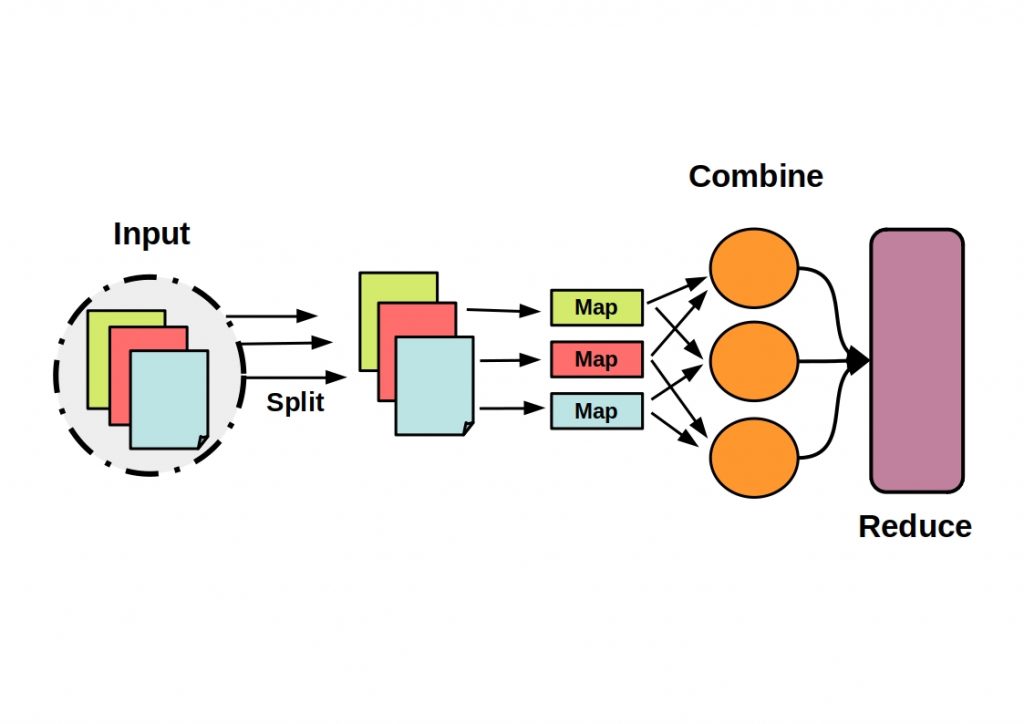

Google’s MapReduce programming model is still a core component of the Hadoop framework, even though it is currently being replaced by engines based on directed acyclic graphs (DAGs). So to understand how Hadoop works, we first need to understand what MapReduce is.

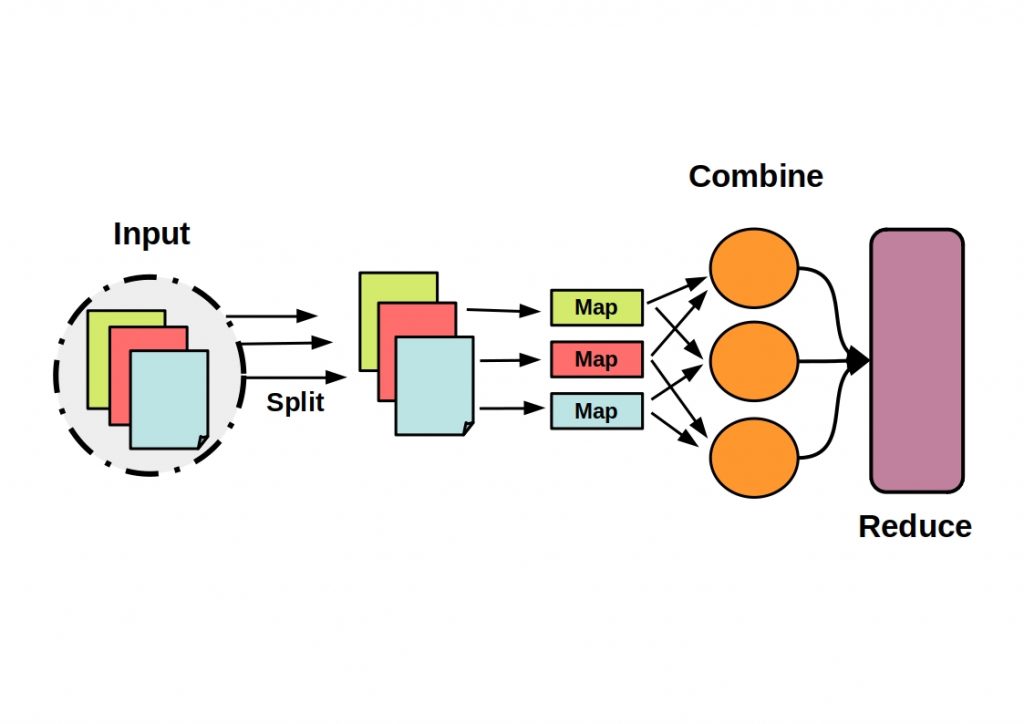

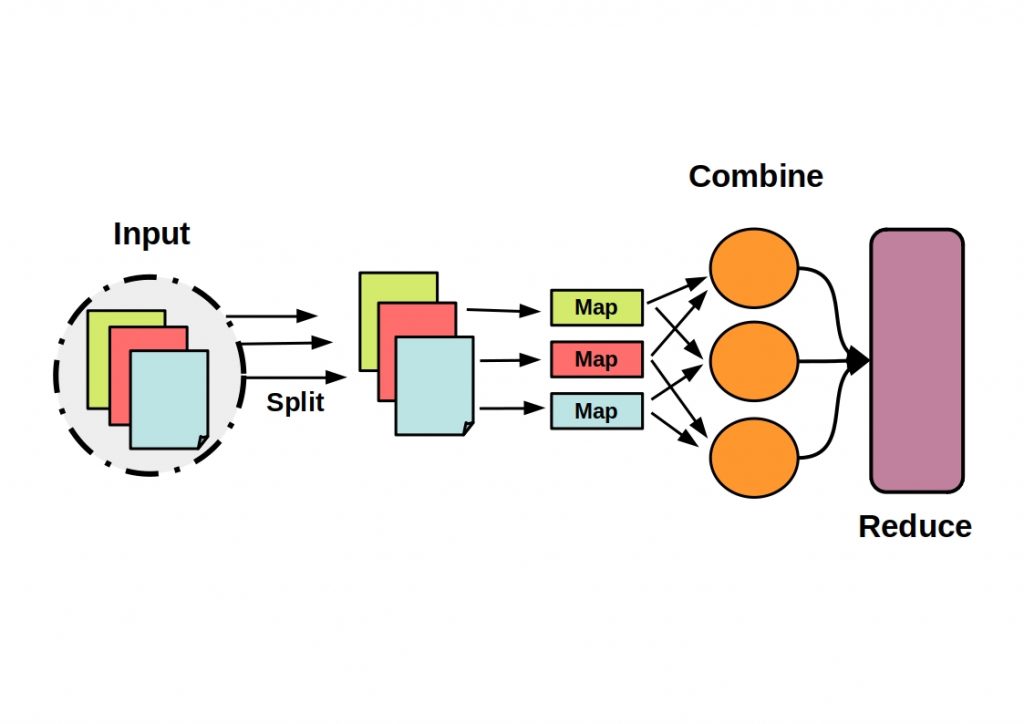

The Hadoop MapReduce framework provides configurable classes for the Map, Reduce and Combine phases. In the Map phase, a set of data is transformed into another set of data in which the individual elements are broken down into tuples (key/value pairs). In the Reduce phase, these tuples are then combined into smaller sets of tuples.
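To make the two phases more concrete, here is a minimal sketch of the classic word count example in plain Python. It does not use the Hadoop API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen purely for illustration, and the grouping step that the framework normally performs between Map and Reduce is simulated in memory.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: turn one input record into a list of (key, value) tuples."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all values by key (simulates the step between Map and Reduce)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values of one key into a single, smaller result."""
    return key, sum(values)


def word_count(lines):
    """Run Map, the simulated shuffle, and Reduce over a list of text lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big cluster", "data node"]))
```

In a real cluster, many Map tasks would run in parallel on different data blocks and the grouped tuples would be sent over the network to the Reduce tasks. The sketch only shows the data flow on a single machine.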

How a Hadoop cluster works

As mentioned earlier, Hadoop distributes storage and processing of large amounts of data in a balanced manner across compute clusters, or interconnected hardware.

These computers are connected to a dedicated server that acts as the master node. The master node organizes the storage of files and their metadata on the individual slave nodes. Within a cluster, data is stored on multiple computers called nodes. The files are partitioned into data blocks and distributed redundantly among the nodes.
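The idea of partitioning a file into blocks and storing each block redundantly can be sketched as follows. This is a simplified illustration, not how HDFS is implemented. The helper names `split_into_blocks` and `place_replicas` are hypothetical, the block size and replication factor are tiny example values, and the round-robin placement is an assumption made for readability (HDFS uses its own placement policy).

```python
def split_into_blocks(data, block_size):
    """Partition the raw bytes of a file into fixed-size blocks."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks, nodes, replication):
    """Assign each block to `replication` distinct nodes (simple round-robin)."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            nodes[(block_id + offset) % len(nodes)]
            for offset in range(replication)
        ]
    return placement


if __name__ == "__main__":
    blocks = split_into_blocks(b"abcdefghij", block_size=4)
    placement = place_replicas(len(blocks), ["n1", "n2", "n3", "n4"], replication=2)
    for block_id, holders in placement.items():
        print(block_id, blocks[block_id], holders)
```

Because every block lives on more than one node, the loss of a single node does not lose data. The metadata kept by the master corresponds roughly to the `placement` mapping in this sketch.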

The NameNode and ResourceManager run on the master node. The NameNode manages the data stored in the Hadoop Distributed File System (HDFS), while the ResourceManager coordinates the parallel computations on that data, for example MapReduce jobs.

The client nodes are responsible for loading the data into the cluster. The slave nodes, in turn, are responsible for receiving and storing the data from the client nodes.

How does communication within a cluster work?

In the classic MapReduce architecture, internal communication, i.e. the process of job execution, is organized via so-called JobTrackers and TaskTrackers.

The client submits a MapReduce job to the JobTracker on the master to process a particular file. The JobTracker then determines the DataNodes that store the blocks for that file by querying the NameNode. The NameNode manages the HDFS file system metadata, so it keeps track of all the files that are divided into blocks. The DataNodes store and retrieve these blocks. Tasks are then assigned to different TaskTrackers based on the information received from the NameNode. In the process, the status of each task, NameNode and DataNode is monitored.
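The sequence described above can be sketched as a small simulation. The classes and methods below (`NameNode.get_block_locations`, `JobTracker.submit_job`) are hypothetical stand-ins, not Hadoop's real API. The scheduling rule is an assumption for illustration: prefer the least-loaded TaskTracker on a node that already holds the block, and otherwise fall back to the least-loaded TaskTracker overall.

```python
class NameNode:
    """Keeps the metadata: which blocks belong to a file and where they live."""

    def __init__(self, block_locations):
        # file name -> list of (block_id, [names of DataNodes holding a replica])
        self._block_locations = block_locations

    def get_block_locations(self, filename):
        return self._block_locations[filename]


class JobTracker:
    """Accepts jobs and assigns one task per block to a TaskTracker."""

    def __init__(self, name_node, task_trackers):
        self.name_node = name_node
        self.task_trackers = list(task_trackers)  # nodes running a TaskTracker
        self.status = {}  # block_id -> task status, for monitoring

    def submit_job(self, filename):
        load = {tracker: 0 for tracker in self.task_trackers}
        assignments = {}
        for block_id, replicas in self.name_node.get_block_locations(filename):
            # Prefer trackers on nodes that already store the block.
            local = [node for node in replicas if node in load]
            candidates = local or self.task_trackers
            chosen = min(candidates, key=lambda node: load[node])
            load[chosen] += 1
            assignments[block_id] = chosen
            self.status[block_id] = "ASSIGNED"
        return assignments


if __name__ == "__main__":
    name_node = NameNode({
        "logs.txt": [
            ("blk_1", ["node1", "node2"]),
            ("blk_2", ["node2", "node3"]),
            ("blk_3", ["node4"]),  # no TaskTracker runs on node4
        ]
    })
    tracker = JobTracker(name_node, ["node1", "node2", "node3"])
    print(tracker.submit_job("logs.txt"))
```

Running the computation on a node that already holds the block avoids moving the data over the network, which is why the JobTracker asks the NameNode for block locations before assigning tasks.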

A Secondary NameNode communicates with the NameNode at periodic intervals to take a snapshot of the HDFS metadata, in other words a backup. This information can then be used in the event of a NameNode failure.

In principle, both single-node clusters and multi-node clusters can be implemented with Hadoop. In the case of a single node, the cluster is implemented on one machine only. All processes then run on a Java virtual machine instance.

In the case of a multi-node cluster, the master-slave architecture already discussed is implemented across several computers.

Is Hadoop dead?

So is Hadoop dead? Apache Hadoop has clearly lost its status as the sole Big Data solution. Many technologies have since emerged that can solve smaller tasks better than the one big solution Hadoop. Today, this small-scale approach enables Big Data management solutions that can be optimally tailored to specific use cases. However, Hadoop is not dead either. The system still has its strengths and will continue to be the first choice for special use cases in the foreseeable future.

So how is Hadoop evolving?

With the Hadoop Ozone project, an alternative to the Hadoop Distributed File System (HDFS) has now been developed.

It is still deployed on a cluster, but acts as an object store for Big Data applications. This is much more scalable than standard file systems and is intended to optimize the handling of small files, a previous Hadoop weakness. Object stores are typically used as a data storage method in the cloud. Through Ozone, they can now be managed locally.

This object store can be accessed by established Big Data solutions such as Hive or Spark without modification. If you want to know more about the Hadoop-compatible frameworks, read our articles on Hive and Spark.

Ozone is built on a block storage layer called Hadoop Distributed Data Store (HDDS) and is designed to scale to billions of objects. The blocks are organized internally using unique namespaces in many independent volumes.

However, one disadvantage of these local object stores is that they are not yet implemented in the core, but must be separated from the traditional file systems by containerized environments such as Kubernetes and YARN. As a result, there are always two sources of truth.