Data Mining vs Big Data Analytics – Both data disciplines, but what makes them different? In this article, we introduce you to both fields and explain the key differences.

Data science is an interdisciplinary field that has attracted more and more attention in recent decades. Many companies see it as the key to becoming an Industry 4.0 company. The hope is that valuable information can be found in the company’s own data and used to massively increase profitability. Terms such as big data, data mining, data analytics and machine learning are thrown into the ring. Many people do not realize that these terms describe different disciplines. If you want to build a house, you need the right tools and you have to know how to use them.

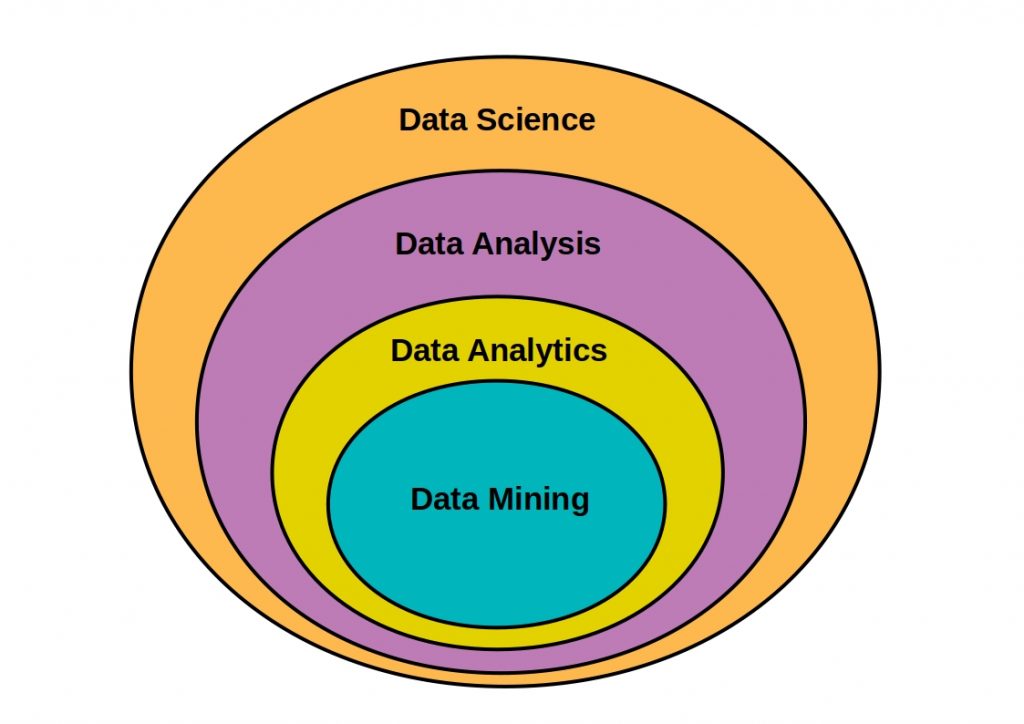

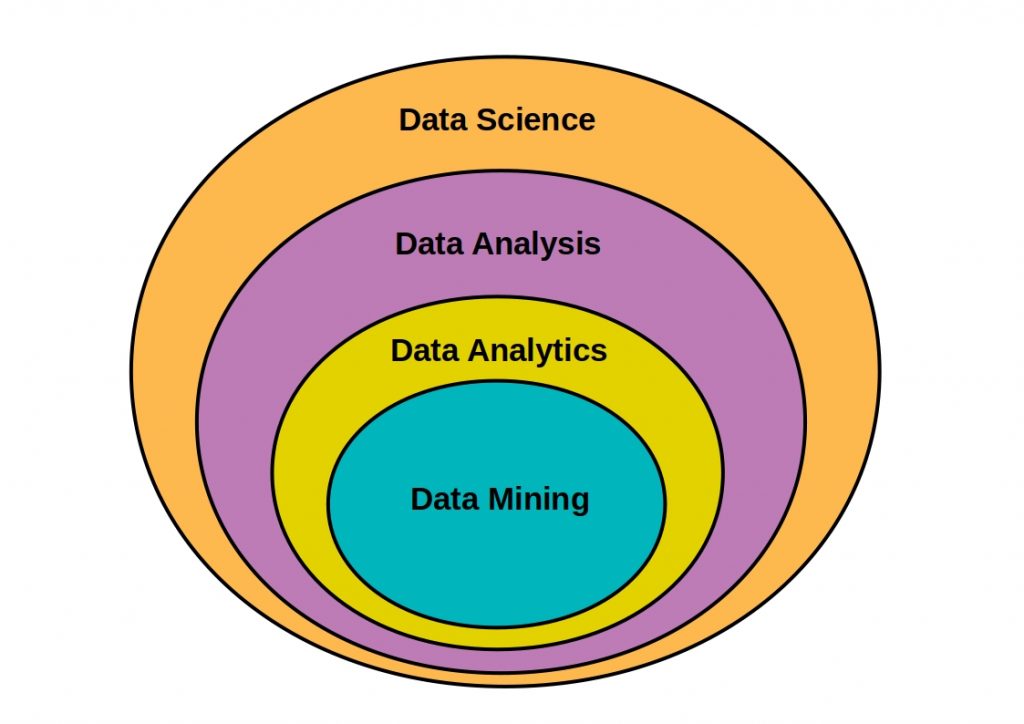

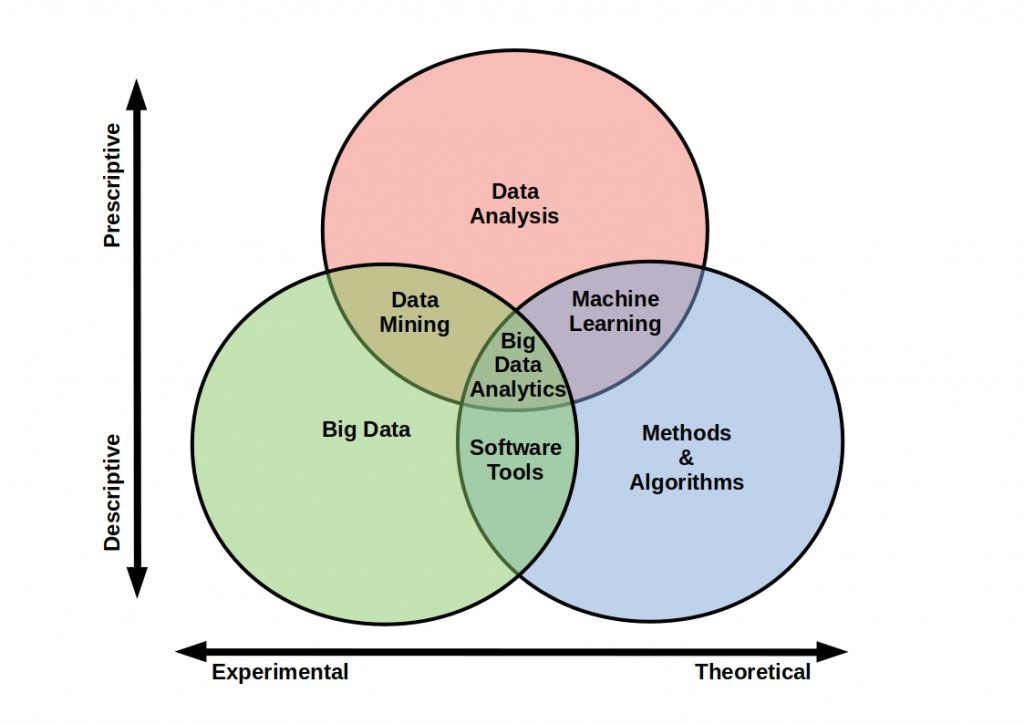

Map of Data Disciplines

First of all, you should think of the individual disciplines as being layered inside one another like an onion. The fields overlap, and when you talk about one discipline, you are also talking about the layers beneath it.

Since data analytics sits above data mining in the layer model, mining is a subdiscipline of analytics. We will therefore describe the broader discipline first.

Data Mining vs Big Data Analytics – What is Analytics?

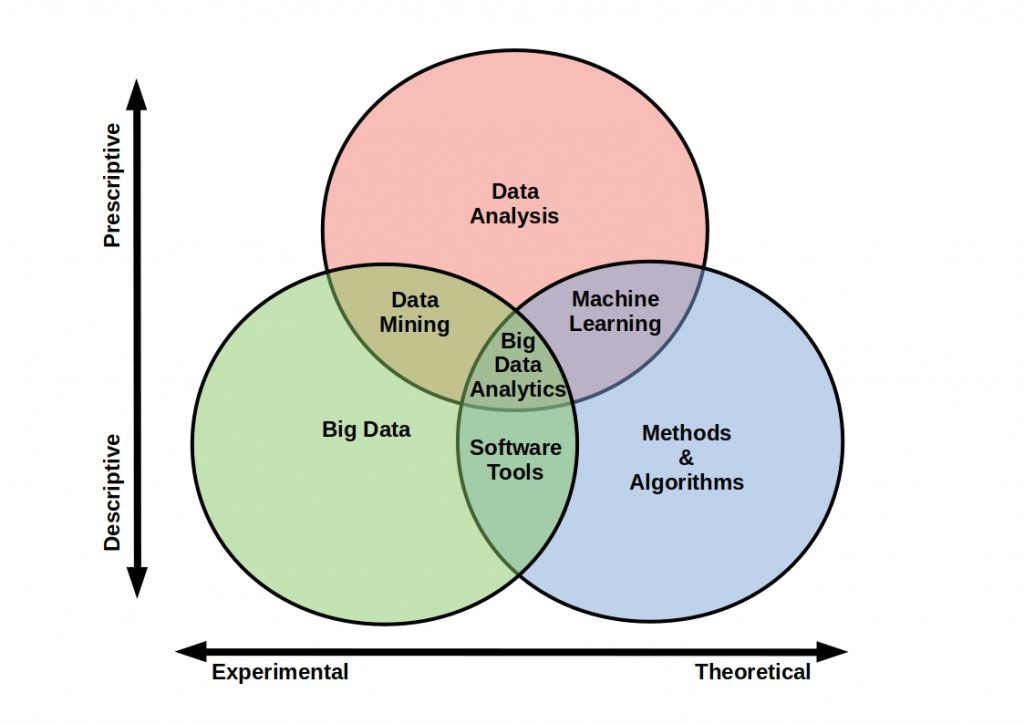

Big data analytics, as a subfield of data analysis, describes the use of data analysis tools without special data processing. In data analytics, you use queries and data aggregation methods, but also data mining techniques and tools.

The goal of this discipline is to represent various dependencies between input variables. The following figure shows the individual overlaps in the use of the tools of the different disciplines.
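To make the idea of queries and data aggregation more concrete, here is a minimal sketch in plain Python. The sales records, the field names and the helper `aggregate_by` are invented for illustration; in practice you would probably use a database or a data frame library for the same step.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales records; the field names are assumptions for this example.
records = [
    {"region": "north", "product": "A", "revenue": 120.0},
    {"region": "north", "product": "B", "revenue": 80.0},
    {"region": "south", "product": "A", "revenue": 200.0},
    {"region": "south", "product": "B", "revenue": 50.0},
    {"region": "south", "product": "A", "revenue": 150.0},
]


def aggregate_by(rows, key, value):
    """Group rows by `key` and return count, total and mean of `value` per group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {
        name: {"count": len(vals), "total": sum(vals), "mean": mean(vals)}
        for name, vals in groups.items()
    }


# Query: keep only product A. Aggregation: summarize revenue per region.
product_a = [r for r in records if r["product"] == "A"]
summary = aggregate_by(product_a, key="region", value="revenue")

for region, stats in sorted(summary.items()):
    print(region, stats)
```

The filter plays the role of a query and the grouping plays the role of the aggregation. Both describe the data that is already there; neither searches for unknown patterns.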

Data Mining vs Big Data Analytics – What is Data Mining?

Data mining is a subset of data analytics. At its core, it is about identifying and discovering correlations in a large data set. It is especially useful when you know little about the available data.
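As a rough illustration of discovering correlations in data you know little about, the following sketch scans every pair of columns and reports the strongly correlated ones. The column names, the values and the threshold of 0.8 are assumptions chosen for the example, and the Pearson coefficient is only one of several possible measures.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long number sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no correlation information
    return cov / (sx * sy)


# Hypothetical daily measurements; names and values are invented.
columns = {
    "temperature": [18, 21, 25, 28, 31, 35],
    "ice_cream_sales": [110, 135, 170, 190, 220, 260],
    "umbrella_sales": [40, 38, 25, 20, 12, 5],
    "staff_on_shift": [4, 5, 4, 5, 4, 5],
}


def strong_correlations(cols, threshold=0.8):
    """Return all column pairs whose absolute correlation reaches the threshold."""
    found = []
    for a, b in combinations(cols, 2):
        r = pearson(cols[a], cols[b])
        if abs(r) >= threshold:
            found.append((a, b, round(r, 2)))
    return found


for a, b, r in strong_correlations(columns):
    print(f"{a} <-> {b}: r = {r}")
```

Note that a strong correlation found this way is only a candidate pattern. Whether it is meaningful still has to be judged by someone who knows the domain.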

But what does a typical data mining process look like and what are typical data mining tasks?

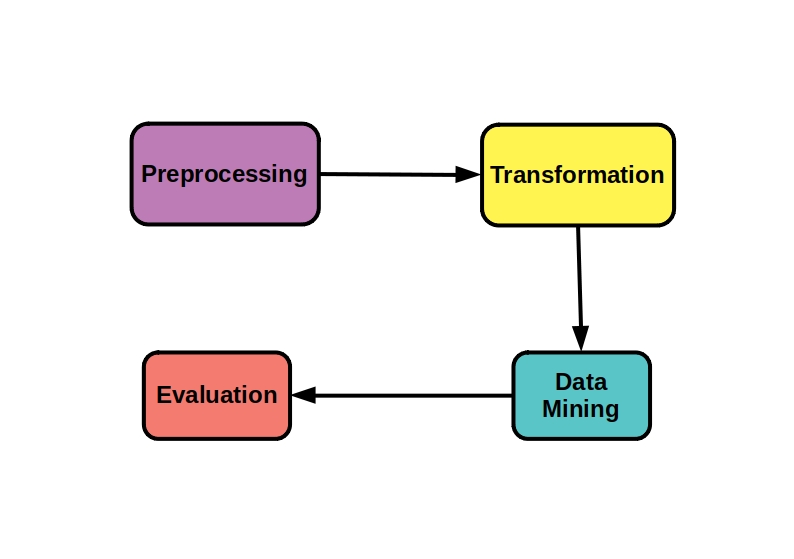

Data Mining Process

You can divide a typical data mining process into several sequential steps. In the preprocessing stage, your data is first cleaned. This involves integrating sources and removing inconsistencies. Then you can convert the data into the right format. After that, the actual analysis step, the data mining, takes place. Finally, your results have to be evaluated. Expert knowledge is required here to check the patterns found and whether your own objectives have been met.
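The steps above can be sketched as a small pipeline. Everything in this example is hypothetical: the two data sources, the function names `clean`, `transform`, `mine` and `evaluate`, and the deliberately trivial "pattern" (customers who spend more than average). It is meant to show the order of the steps, not a real mining algorithm.

```python
from statistics import mean

# Two hypothetical sources with inconsistent formatting.
crm_rows = [
    {"customer": " Alice ", "spend": "120.50"},
    {"customer": "BOB", "spend": "n/a"},  # invalid value
]
shop_rows = [
    {"customer": "alice", "spend": "120.50"},  # duplicate of the CRM record
    {"customer": "Carol", "spend": "310"},
    {"customer": "dave", "spend": "95"},
]


def clean(*sources):
    """Preprocessing: integrate sources, normalize names, drop bad rows and duplicates."""
    seen, cleaned = set(), []
    for row in (r for source in sources for r in source):
        name = row["customer"].strip().lower()
        try:
            spend = float(row["spend"])
        except ValueError:
            continue  # inconsistent value, remove the row
        if (name, spend) in seen:
            continue  # same record delivered by two sources
        seen.add((name, spend))
        cleaned.append({"customer": name, "spend": spend})
    return cleaned


def transform(rows):
    """Transformation: convert to the format the mining step expects."""
    return {r["customer"]: r["spend"] for r in rows}


def mine(spend_by_customer):
    """Mining: find customers whose spend lies above the average."""
    avg = mean(spend_by_customer.values())
    return sorted(name for name, s in spend_by_customer.items() if s > avg)


def evaluate(pattern, total, max_share=0.5):
    """Evaluation: placeholder check that a domain expert would replace."""
    return len(pattern) / total <= max_share


cleaned = clean(crm_rows, shop_rows)
table = transform(cleaned)
pattern = mine(table)
print(pattern, "plausible:", evaluate(pattern, total=len(table)))
```

In a real project the evaluation step is the part that cannot be automated this easily, because it depends on the objectives and on expert knowledge of the data.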

The term data mining covers a variety of techniques and algorithms to analyze a data set. In the following we will show you some typical methods.

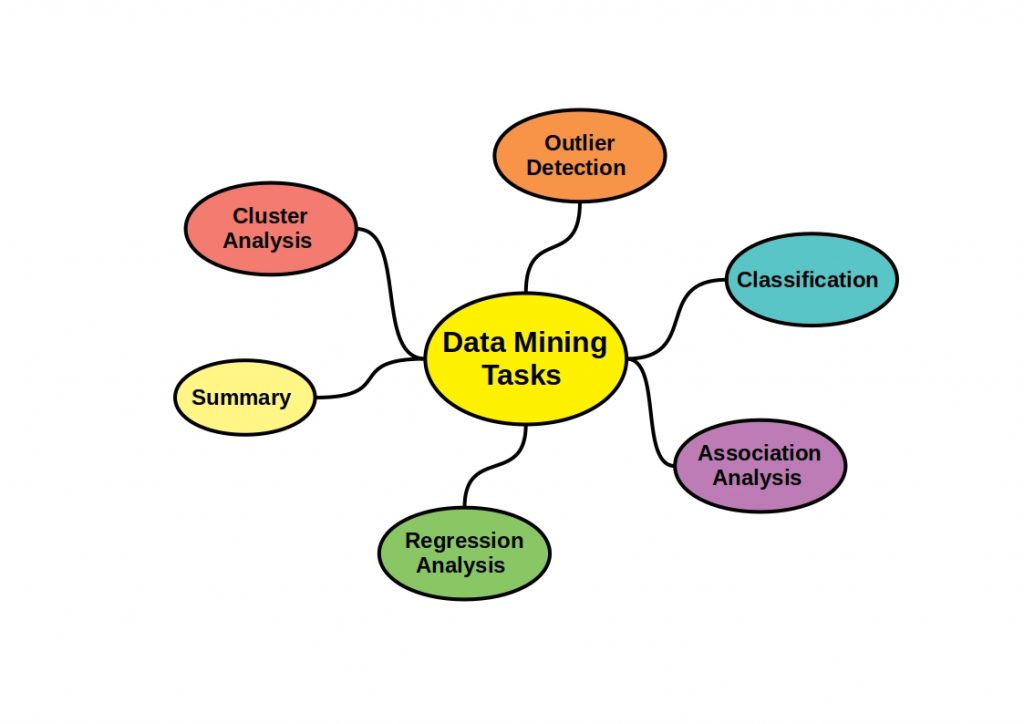

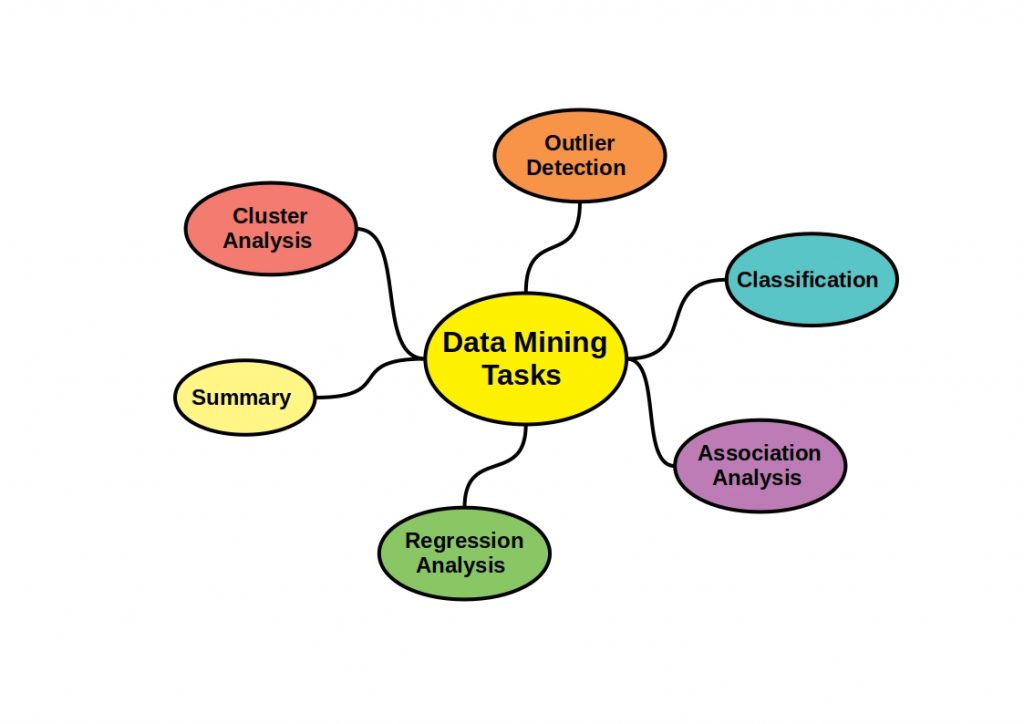

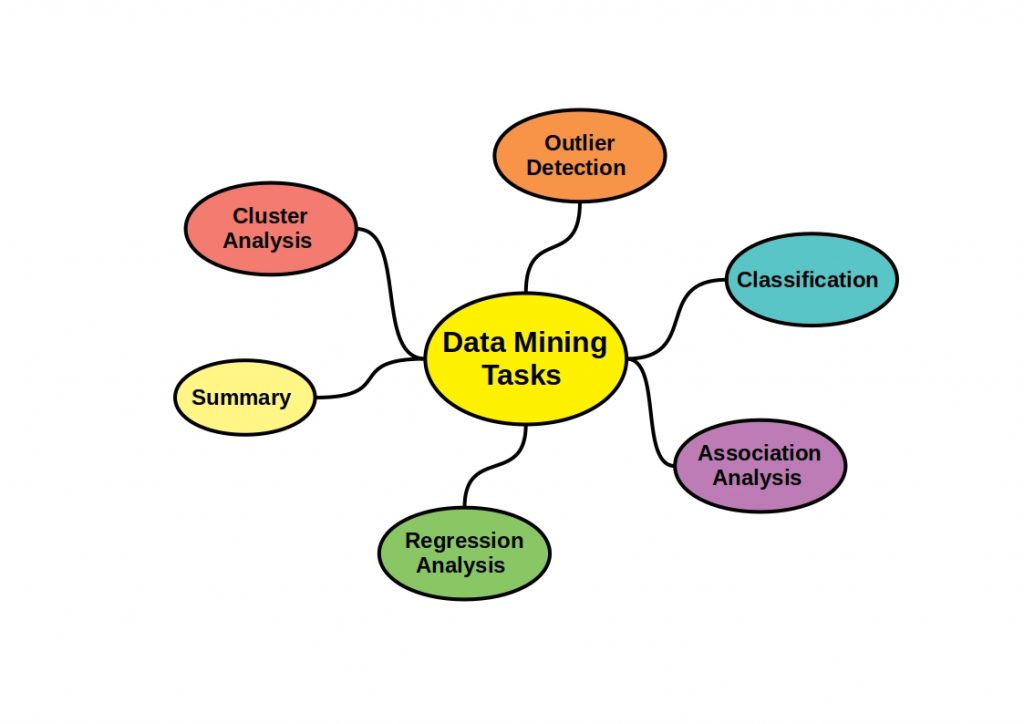

Data Mining Tasks

Besides identifying unusual data records with outlier detection, you can also group your objects based on similarities using cluster analysis. In a separate article, we have already summarized some popular clustering algorithms that you should know as a data scientist. While association analysis only identifies the relationships and dependencies in the data, regression analysis describes the relationship between dependent and independent variables. Through classification, you assign previously unassigned elements to existing classes. You can also summarize the data to reduce the data set to a more compact description without significant loss of information.
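Three of these tasks can be sketched in a few lines of plain Python. The methods chosen here are simple stand-ins picked for brevity: a z-score rule for outlier detection, ordinary least squares for regression, and a nearest-centroid rule for classification. The function names, the threshold and all data values are assumptions for this example.

```python
from math import dist
from statistics import mean, pstdev


def find_outliers(values, z_threshold=2.0):
    """Outlier detection: flag values more than z_threshold std devs from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > z_threshold]


def fit_line(xs, ys):
    """Regression: least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx


def classify(point, labelled_points):
    """Classification: assign the point to the class with the nearest centroid."""
    centroids = {
        label: tuple(mean(axis) for axis in zip(*pts))
        for label, pts in labelled_points.items()
    }
    return min(centroids, key=lambda label: dist(point, centroids[label]))


# Hypothetical example data.
outliers = find_outliers([10, 12, 11, 13, 12, 11, 50])
slope, intercept = fit_line([1, 2, 3, 4, 5], [3, 5, 7, 9, 11])
label = classify((7, 8), {"small": [(1, 1), (2, 1)], "large": [(8, 9), (9, 8)]})

print("outliers:", outliers)
print("line:", slope, intercept)
print("class:", label)
```

The z-score rule is sensitive to the very outliers it tries to find, so for real data a more robust method may be the better choice.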

Data Mining vs Big Data Analytics – Conclusion

Although the two disciplines are related, they are distinct. Data mining is more about identifying key relationships, patterns or trends in the data, while data analytics is more about deriving a data-driven model. On this path, data mining is an important step in making the data more usable. In the end, it’s not a case of one versus the other: both disciplines are part of an analytics pipeline.

In a separate article, we go further into the differences between the various data disciplines and clarify the difference between data analysis and data science.