In recent years, data science has gained access to increasingly powerful analysis methods thanks to increasingly powerful hardware. Google’s TensorFlow has long been the benchmark for building machine learning and deep learning models, and it still offers the most freedom today. But a wide range of options often creates a high barrier to entry.

PyTorch vs TensorFlow – With PyTorch, a slightly younger open source package that is also Python-based, Facebook wants to knock TensorFlow off its throne. PyTorch has been steadily gaining popularity for years thanks to its simplicity and features.

In this article, we will clarify what is in the package and whether it can really compete with TensorFlow.

What is PyTorch?

PyTorch is one of the most popular open source Python packages for scientific computing and for developing and training neural networks.

It was developed by Facebook in 2016 and is based on the Torch library written in Lua. At its core is a NumPy-like tensor library with rich GPU support that accelerates neural network training. The name PyTorch is also often used for this core library itself. More about this in the section “Libraries”.

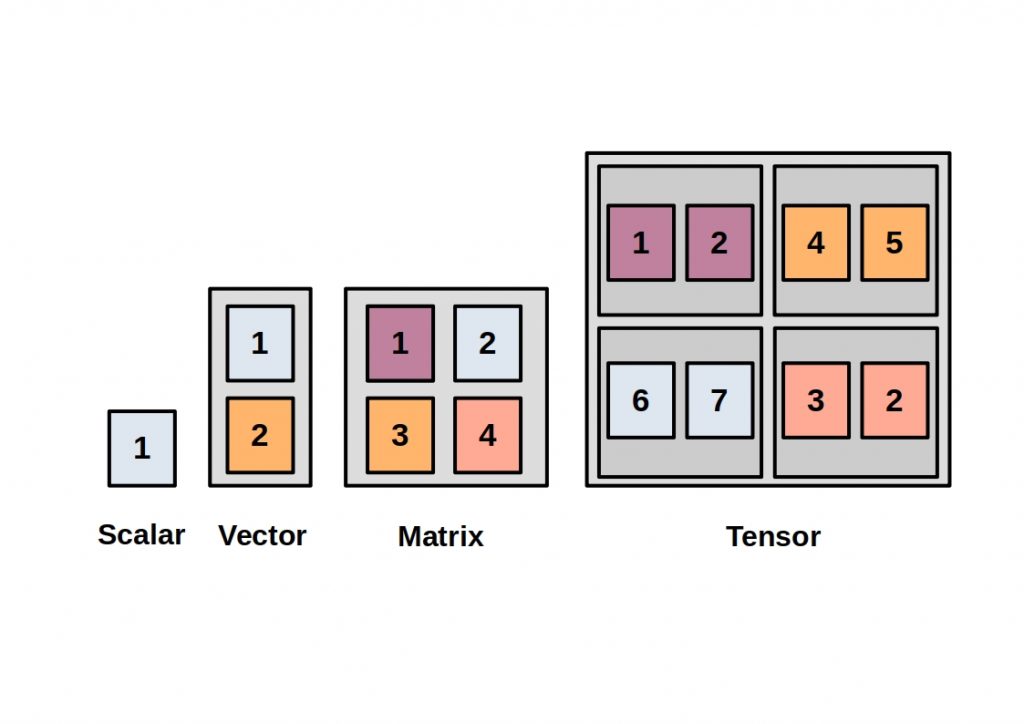

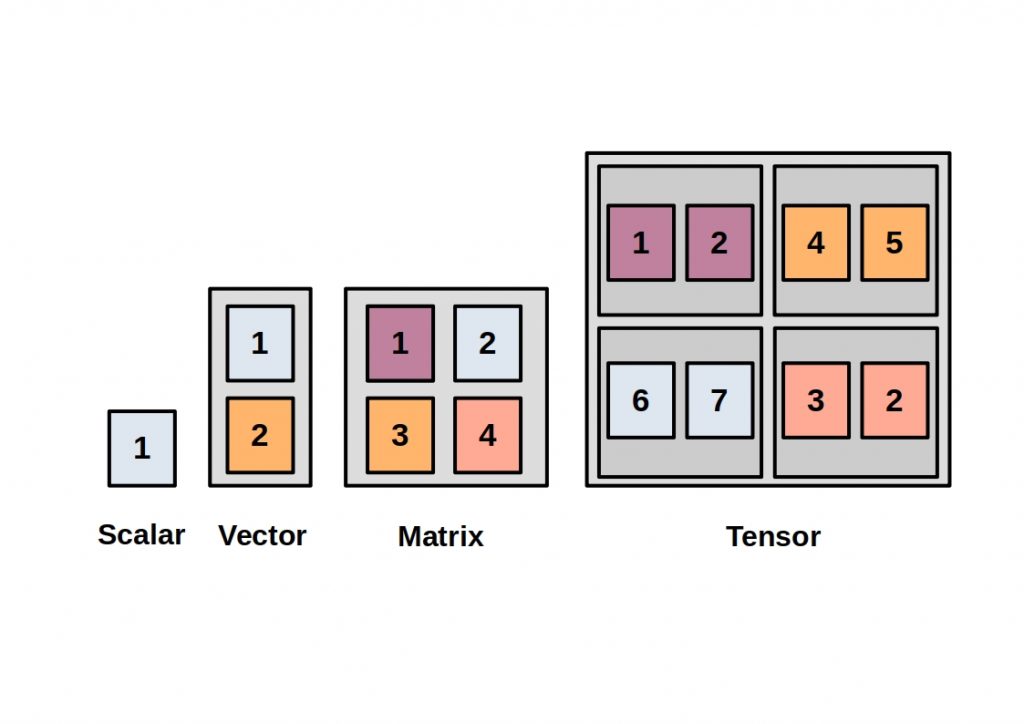

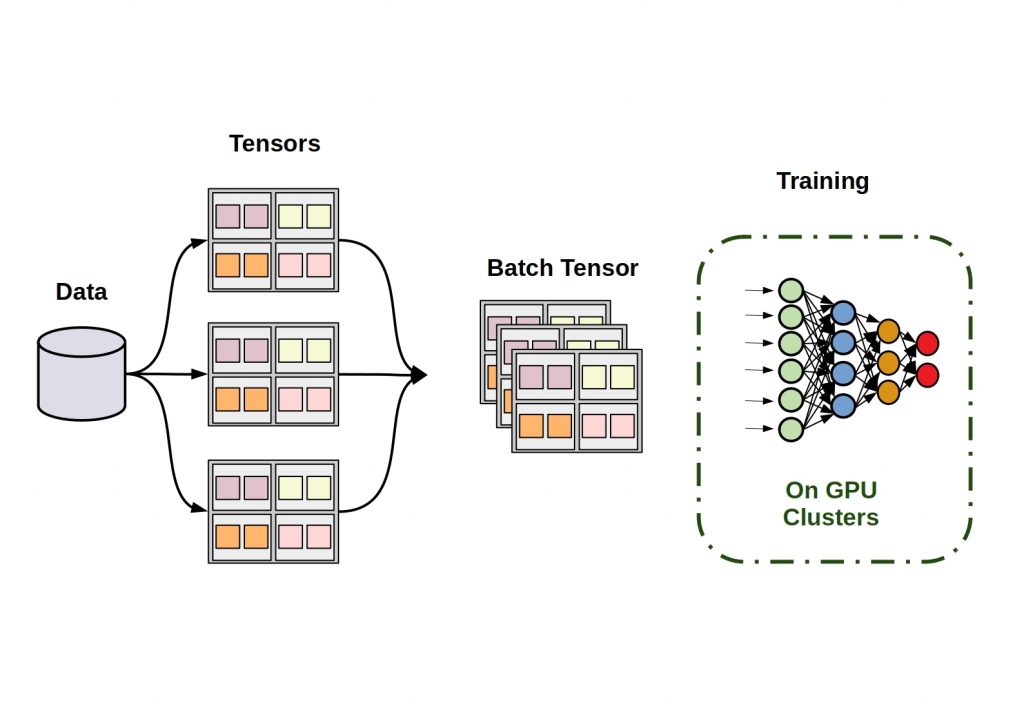

Tensors form the elementary data structures for PyTorch, just as they do for TensorFlow.

PyTorch vs TensorFlow – Tensors form the basis for both!

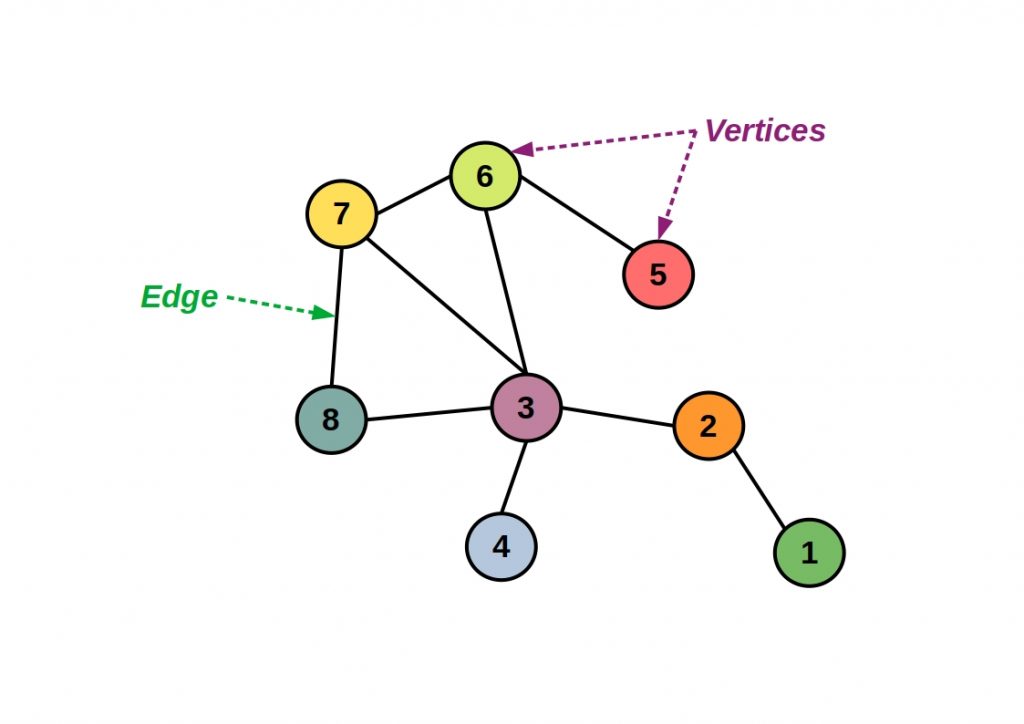

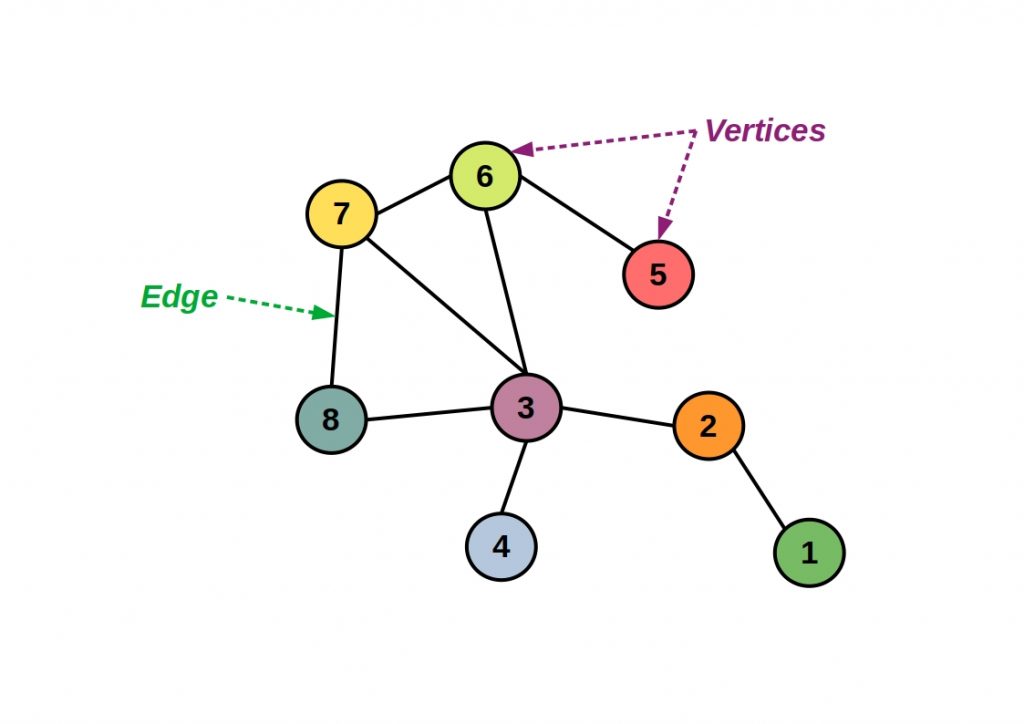

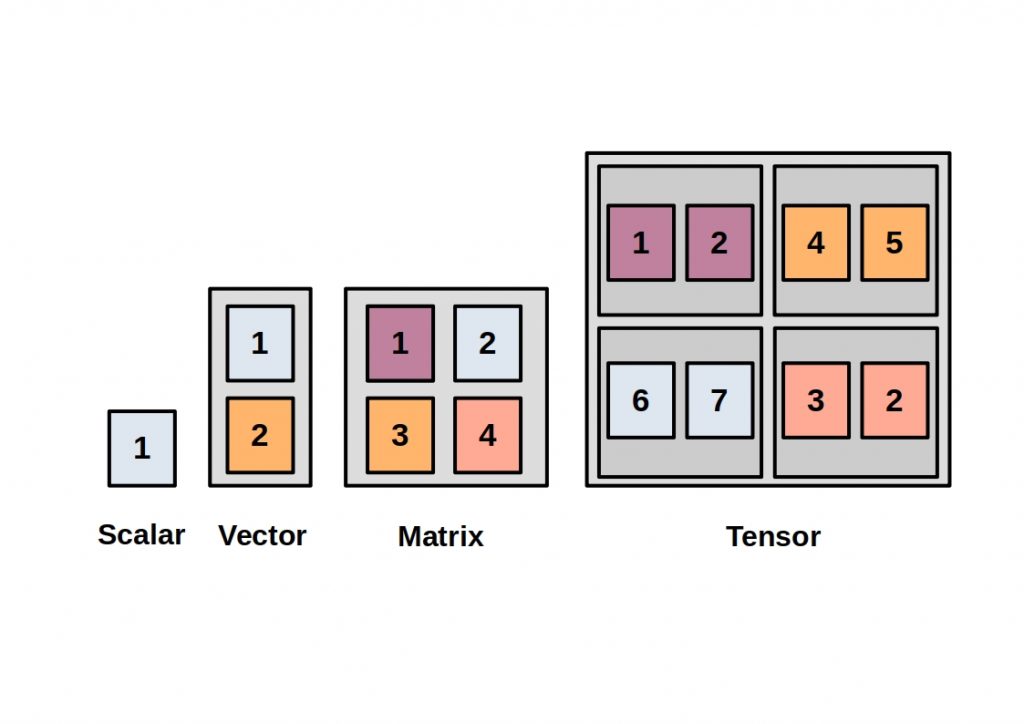

In mathematics, a tensor is a generalization of vectors and matrices, which makes it an elementary data structure for representing and processing data. PyTorch implements tensors as multidimensional arrays. A vector thus corresponds to a one-dimensional tensor.

In principle, a tensor can have any number of dimensions. Common cases, once a batch dimension is included, are three-dimensional tensors for time series, four-dimensional tensors for images, and five-dimensional tensors for videos.

PyTorch methods manipulate tensors for linear algebra operations. These operations can run at high performance when the tensor objects are moved into graphics card memory.
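To make the dimension counts concrete, here is a minimal sketch. The shapes and axis orderings are illustrative assumptions (a batch dimension comes first in each case), not something prescribed by PyTorch.

```python
import torch

# A vector is a one-dimensional tensor.
vector = torch.tensor([1.0, 2.0, 3.0])

# Illustrative shapes only; all sizes are made up for this example.
time_series = torch.zeros(32, 100, 8)    # (batch, time steps, features)
images = torch.zeros(32, 3, 64, 64)      # (batch, channels, height, width)
videos = torch.zeros(4, 16, 3, 64, 64)   # (batch, frames, channels, height, width)

for name, t in [("vector", vector), ("time_series", time_series),
                ("images", images), ("videos", videos)]:
    # ndim is the number of dimensions, shape the size along each one.
    print(name, t.ndim, tuple(t.shape))
```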
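As a rough sketch of what this looks like in practice, the snippet below places two small tensors on the GPU when one is available and falls back to the CPU otherwise. The matrix values are arbitrary example data.

```python
import torch

# Use the GPU if one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = torch.tensor([[1.0, 2.0], [3.0, 4.0]], device=device)  # created on the device
b = torch.eye(2).to(device)  # .to() moves an existing tensor to the device

product = a @ b                      # matrix multiplication
transposed = a.T                     # transpose
determinant = torch.linalg.det(a)    # determinant of a

# Move results back to the CPU before printing or converting them.
print(product.cpu())
print(determinant.item())
```

Tensors that take part in the same operation have to live on the same device, which is why both `a` and `b` are placed on `device` before they are multiplied.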

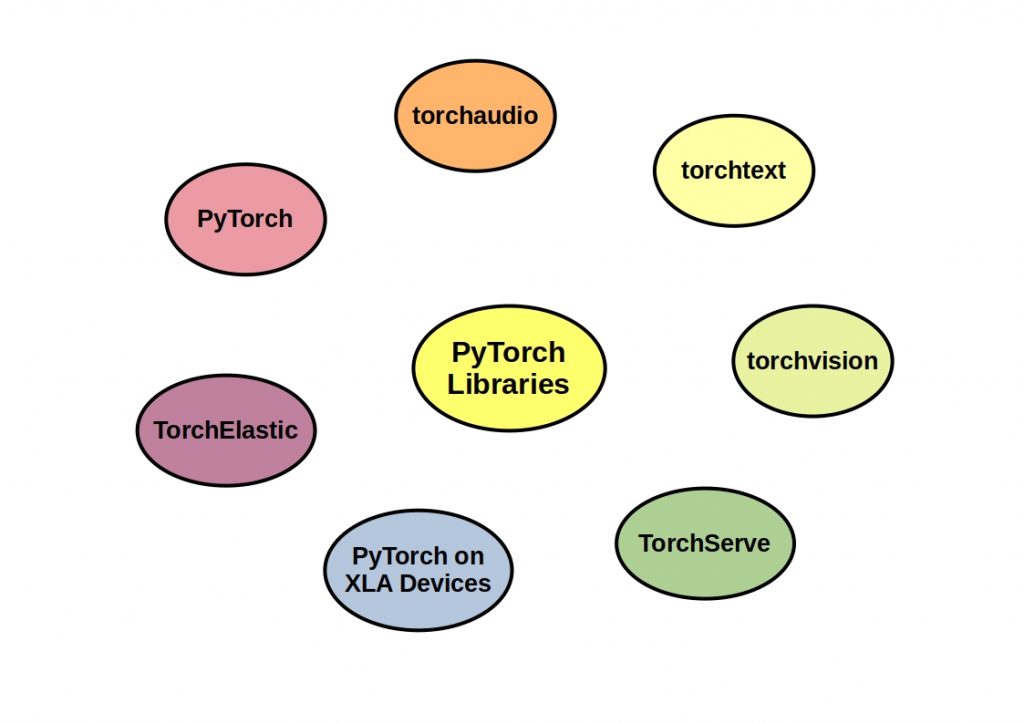

PyTorch Libraries

PyTorch offers the option of including specific libraries. This keeps a program lean, since it only references the code it needs.

The PyTorch library itself is an optimized tensor library for deep learning on both GPUs and CPUs.

By including another library, PyTorch can also compute on TPUs.

Depending on the data type, different libraries can be loaded that provide optimized methods and pre-built models for analysis. Besides the usual audio transformations, torchaudio also offers data sets for training. torchtext gives access to large language data sets, and torchvision supports image analysis.

With TorchElastic, training jobs can be managed and elastically distributed, for example, to shared capacities.

PyTorch features

By accelerating tensor computations on GPUs, PyTorch achieves high flexibility and high speed in deep learning algorithms. In addition, its Python base makes PyTorch compatible with powerful Python libraries such as NumPy and SciPy, and with the Cython programming language. Here we have collected the most important Python open source data management and analysis libraries.
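The NumPy compatibility mentioned above can be sketched in a few lines. This assumes NumPy is installed alongside PyTorch. The array contents are arbitrary example data.

```python
import numpy as np
import torch

array = np.arange(6, dtype=np.float32).reshape(2, 3)

# from_numpy wraps the array without copying; both share the same memory (CPU only).
tensor = torch.from_numpy(array)
tensor *= 2  # the in-place change is visible through the NumPy array as well

# .numpy() goes the other way, again without copying for CPU tensors.
back = tensor.numpy()

print(array)
print(back.sum())
```

Because the memory is shared, no data is duplicated when switching between the two libraries. Call `.clone()` on the tensor first if an independent copy is needed.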

Reverse-mode automatic differentiation allows developers to change network behavior at will with little delay or overhead, which considerably speeds up research iterations.
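A minimal sketch of reverse-mode automatic differentiation with PyTorch's autograd follows. The function and the input value are chosen purely for illustration.

```python
import torch

# requires_grad=True tells autograd to record operations on x.
x = torch.tensor(3.0, requires_grad=True)

# Forward pass: y = x^2 + 2x
y = x ** 2 + 2 * x

# Backward pass: walks the recorded operations in reverse to compute dy/dx.
y.backward()

# Analytically, dy/dx = 2x + 2, which is 8 at x = 3.
print(x.grad)
```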

Support for 8-bit quantization enables efficient deployment on servers and edge devices, and PyTorch Mobile can be used to develop for Android and iOS environments.

Other features include named tensors, neural network pruning, and parallel training of models via remote procedure calls.

PyTorch can access TorchServe, an open source server from Facebook, and is fully compatible with cloud provider Amazon Web Services (AWS). If you don’t know what AWS is, read our article on the subject.

PyTorch offers a hybrid frontend as an additional feature, which lets you choose between two modes: eager mode and graph mode. Eager mode primarily offers usability and flexibility, while graph mode offers better speed, optimization and functionality in a C++ runtime environment. The hybrid frontend also allows conversion between the two, so models can be developed in eager mode and then transferred to graph mode for production.

PyTorch models can be exported to ONNX (Open Neural Network Exchange) compatible platforms. ONNX is an open source project jointly developed by Microsoft, Amazon, and Facebook, among others, that enables the exchange of AI models between different tools.
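One way this transition has been exposed in PyTorch is TorchScript. The sketch below assumes that TorchScript is the graph mode meant here. `TinyNet` is a hypothetical example model, not part of PyTorch.

```python
import torch


class TinyNet(torch.nn.Module):
    """Hypothetical example model used only for this illustration."""

    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


# Develop and debug in eager mode as ordinary Python.
model = TinyNet().eval()

# Compile the module to TorchScript, a graph representation
# that can run without the Python interpreter.
scripted = torch.jit.script(model)

# Both versions should produce the same result for the same input.
x = torch.randn(3, 4)
same = torch.allclose(model(x), scripted(x))
print(same)
```

A scripted module can then be written to disk with `scripted.save(...)` and loaded from a C++ runtime, which is the production path described above.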

PyTorch vs TensorFlow

Duel of the Giants

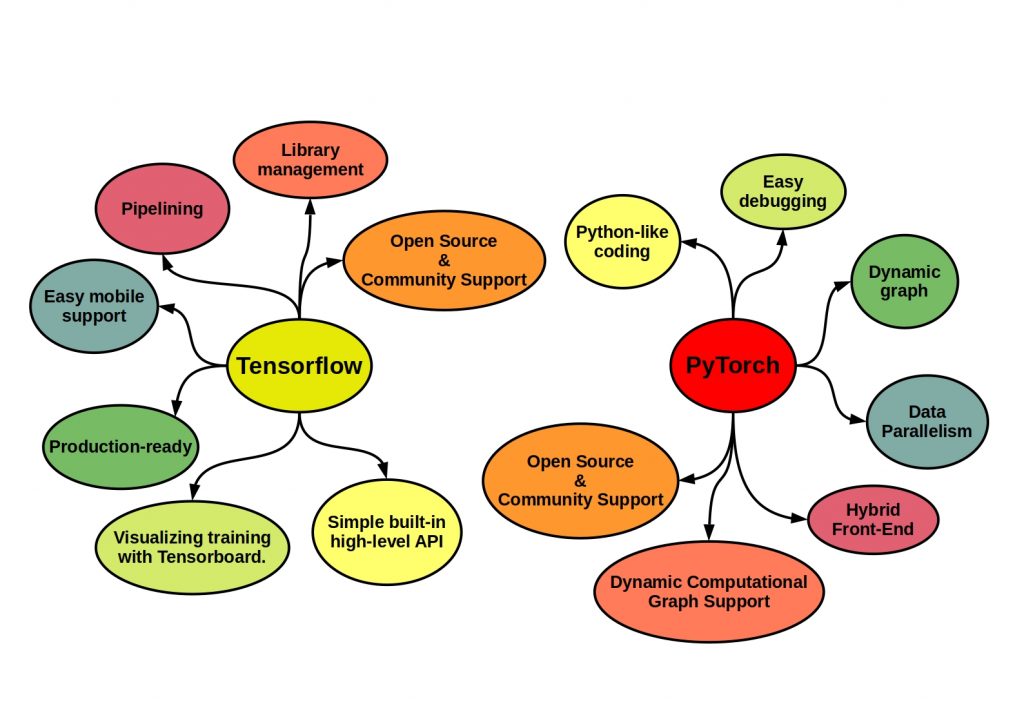

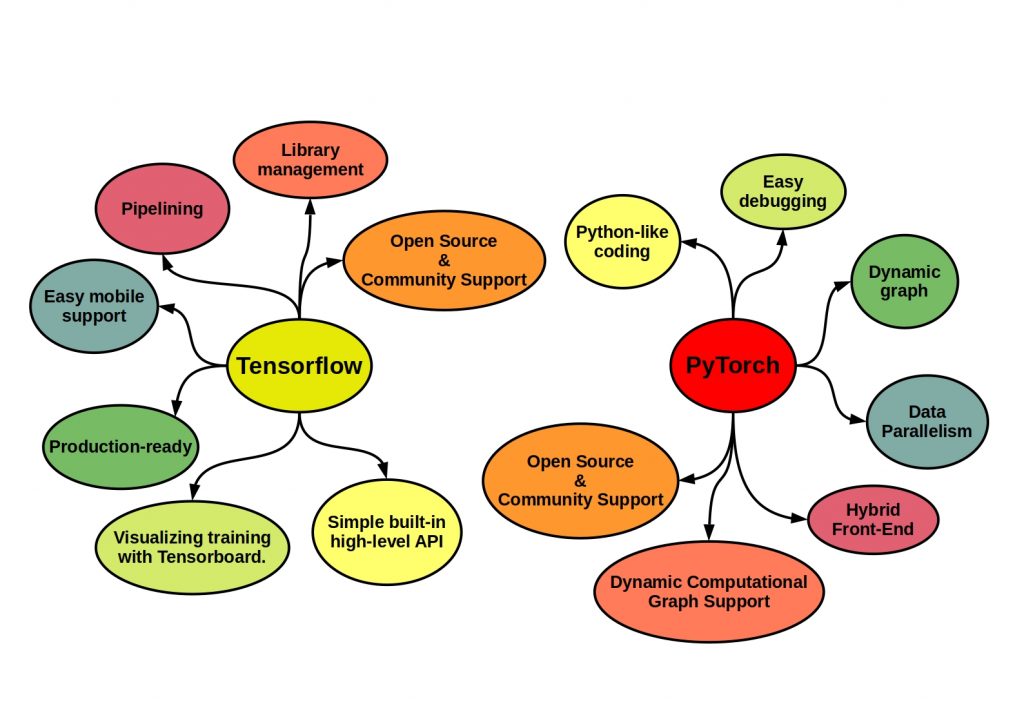

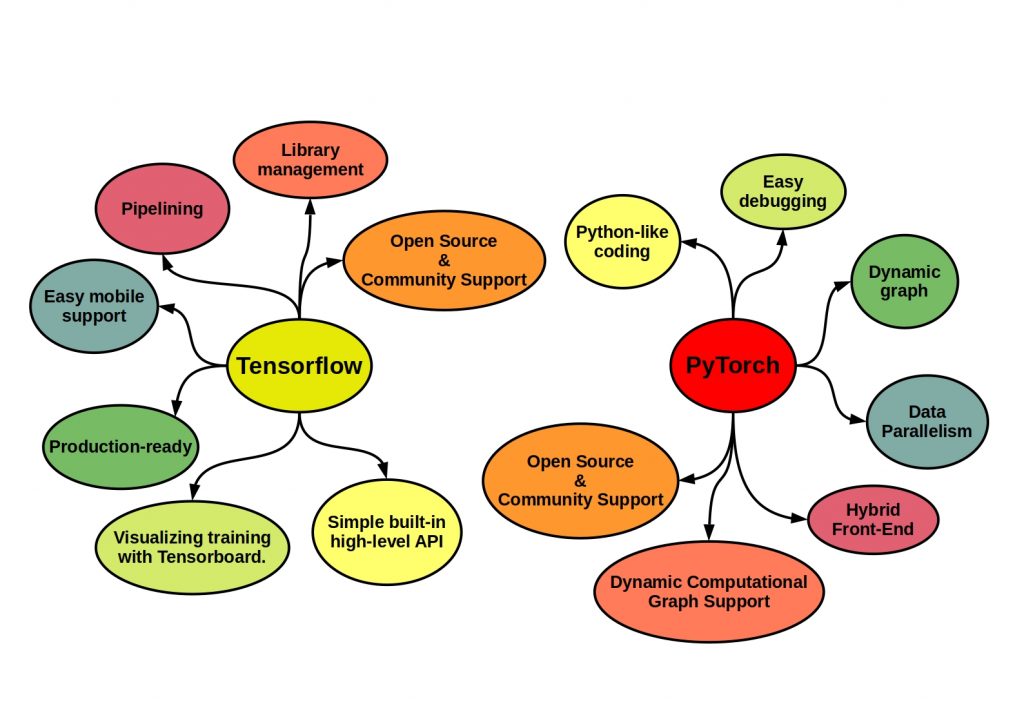

Just like the Facebook solution, TensorFlow works with the tensor data type. PyTorch scores with its simplicity and efficient memory usage. TensorFlow, on the other hand, is considered more scalable and thus better suited for production models. An essential difference was originally that PyTorch defines the graph structure during execution, while TensorFlow first defined the graph and then executed it. TensorFlow has since followed with its own eager mode, which became the default in TensorFlow 2.0.
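The idea of defining the graph during execution can be illustrated with ordinary Python control flow. In this sketch the function name, the threshold, and the inputs are made up for the example. The number of operations recorded depends on the data passed in.

```python
import torch


def double_until_large(x):
    # Plain Python control flow decides, on every call, how many
    # multiplications are recorded in the autograd graph.
    steps = 0
    while x.norm() < 10:
        x = x * 2
        steps += 1
    return x, steps


small = torch.tensor([1.0, 1.0], requires_grad=True)
large = torch.tensor([4.0, 4.0], requires_grad=True)

out_small, steps_small = double_until_large(small)  # loop runs 3 times
out_large, steps_large = double_until_large(large)  # loop runs once

# Gradients follow whichever graph was actually built for each input.
out_small.sum().backward()
out_large.sum().backward()

print(steps_small, small.grad)  # gradient is 2**3 = 8 per element
print(steps_large, large.grad)  # gradient is 2**1 = 2 per element
```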

PyTorch vs TensorFlow – Who is ahead now?

It remains an exciting head-to-head race. Despite its shorter history, PyTorch has already made up a lot of ground and is interesting in a business context precisely because of its user-friendliness. As is often the case, however, the question is less which solution will come out on top than the principle that competition is good for business. In the end, competitive pressure leads to new innovations and exciting new tools.