Data Warehousing – In today’s flood of data, it is becoming increasingly difficult to maintain a clear data management system. More and more data is recorded by different software systems, each of which becomes a separate data source.

A unified, centralized system can facilitate analysis and ensure that there is only one data truth, a single source of truth, in an organization.

What is a Data Warehouse System?

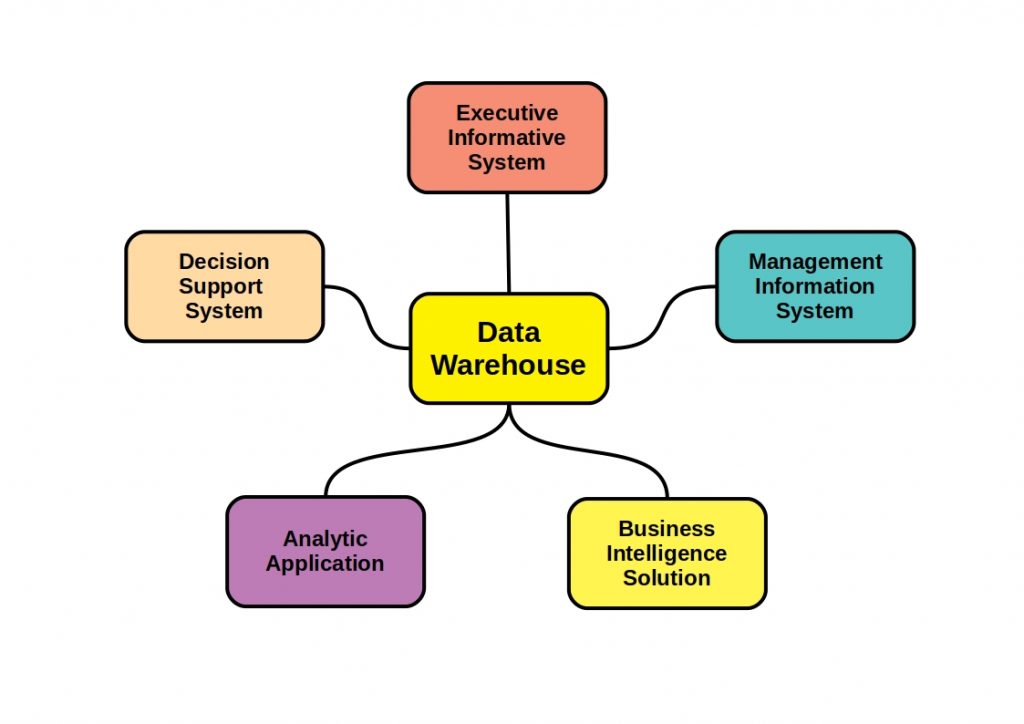

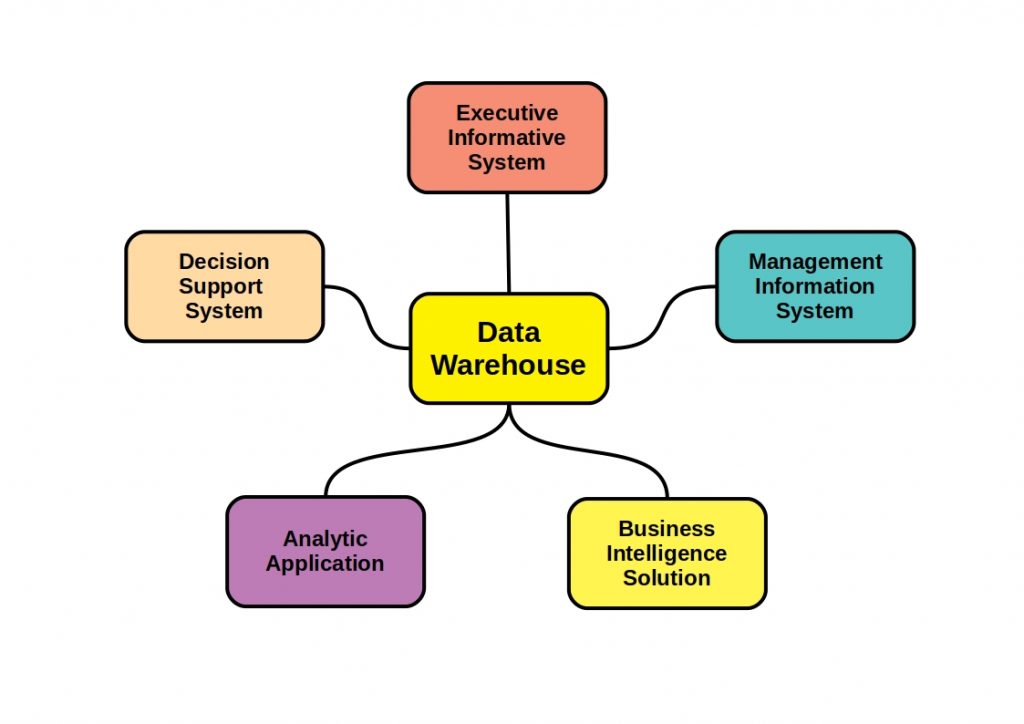

Data warehouse systems are built by integrating data from multiple heterogeneous sources. In addition to centralizing the data, they structure it, support analytical reporting, and support decision-making.

The system performs data cleansing, data integration, and data consolidation. Because it is kept separate from the operational systems, it does not require transaction processing or recovery mechanisms.

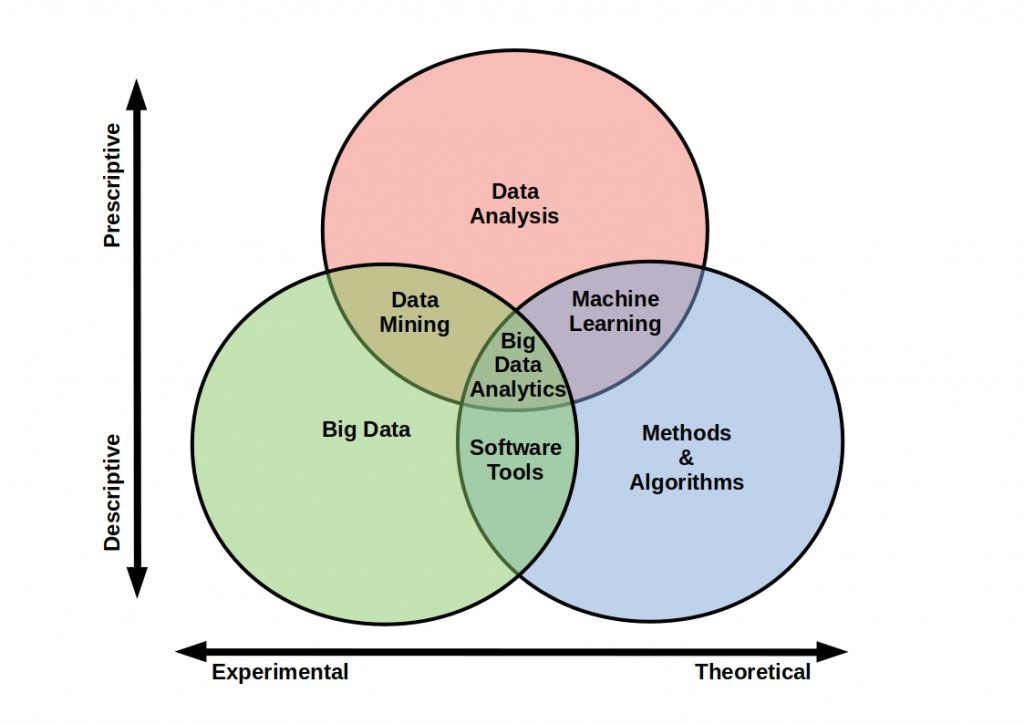

It is thus a powerful Big Data information system that centrally handles the data processing needed for analysis.

Data Warehousing Features

Data warehousing offers several features. Such an information system is subject oriented: it organizes data around subjects such as customers, products, or sales rather than around the current operations. It is also kept separate from the operational databases, so frequent changes in the operational database are not reflected in the data warehouse. The focus is therefore on modeling and analyzing data.

The system is also time variant: the collected data is associated with a specific period of time, and earlier data is not deleted when new data is added.
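To make the time-variant property concrete, here is a minimal sketch, assuming a hypothetical in-memory store (the class and field names are made up for illustration). Every row is stamped with the period it describes, and loading a new period appends rows instead of overwriting old ones, so earlier states remain queryable.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    """One warehouse row, tied to the period it describes (hypothetical schema)."""
    customer_id: int
    balance: float
    period_end: date


class TimeVariantStore:
    """Hypothetical append-only store: new loads never delete earlier rows."""

    def __init__(self) -> None:
        self._rows: list[Snapshot] = []

    def load(self, rows: list[Snapshot]) -> None:
        # Append only; rows for earlier periods stay untouched.
        self._rows.extend(rows)

    def as_of(self, customer_id: int, day: date) -> Optional[float]:
        # Most recent balance recorded on or before the requested day.
        known = [r for r in self._rows
                 if r.customer_id == customer_id and r.period_end <= day]
        if not known:
            return None
        return max(known, key=lambda r: r.period_end).balance

    def history(self, customer_id: int) -> list[Snapshot]:
        # All recorded periods for one customer, oldest first.
        return sorted((r for r in self._rows if r.customer_id == customer_id),
                      key=lambda r: r.period_end)


store = TimeVariantStore()
store.load([Snapshot(1, 100.0, date(2023, 1, 31))])
store.load([Snapshot(1, 250.0, date(2023, 2, 28))])  # new month; January row is kept
print(store.as_of(1, date(2023, 1, 31)))  # 100.0
print(len(store.history(1)))              # 2
```

Asking for the January balance still returns the January value after February has been loaded, which is exactly the history an operational table that overwrites rows would lose.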

What does a Data Warehouse structure look like?

The complexity of this system grows with the complexity of the business. Many distinct data sources, i.e. business processes, deliver cumulative and historical data.

Therefore, three basic architectures have been defined for structuring a data warehouse system: single tier, two tier, and three tier.

Two Tier vs Three Tier Data Warehouse Architecture

In the following, we take a closer look at the three tier architecture.

This structure, the most commonly used one, decouples the application logic from both the data and the user interface by moving it to a middle tier.

In the two tier architecture, the application logic resides either in the user interface on the client or in the database on the server.

Without a middle tier, this system is less scalable and less flexible, and integrating additional data sources is more difficult.

Three Tier Data Warehouse Architecture

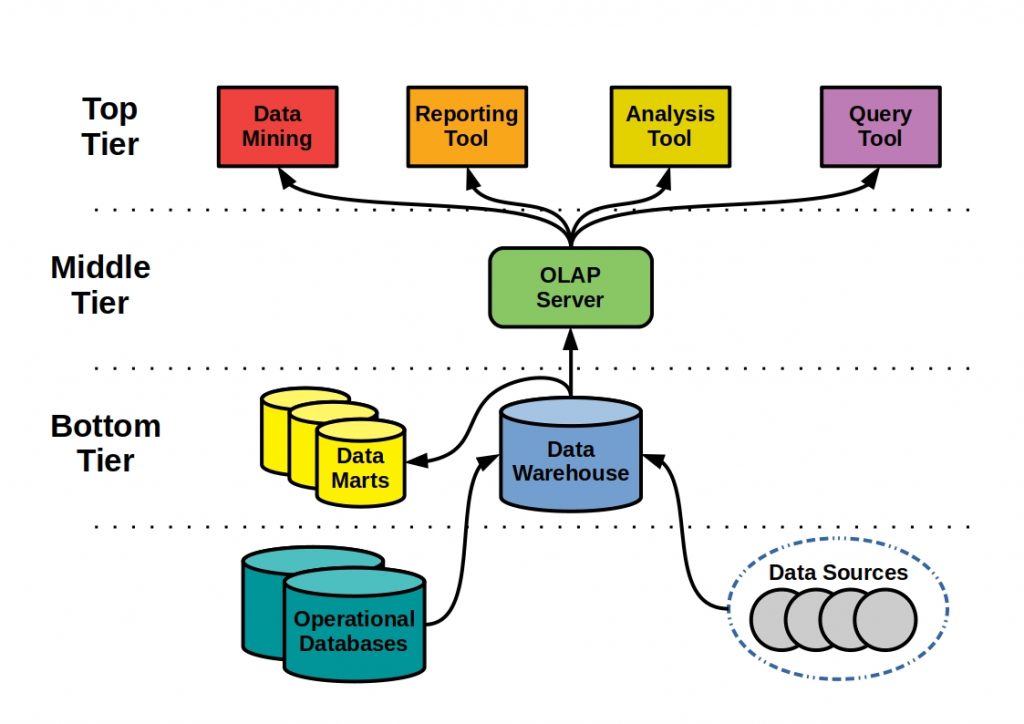

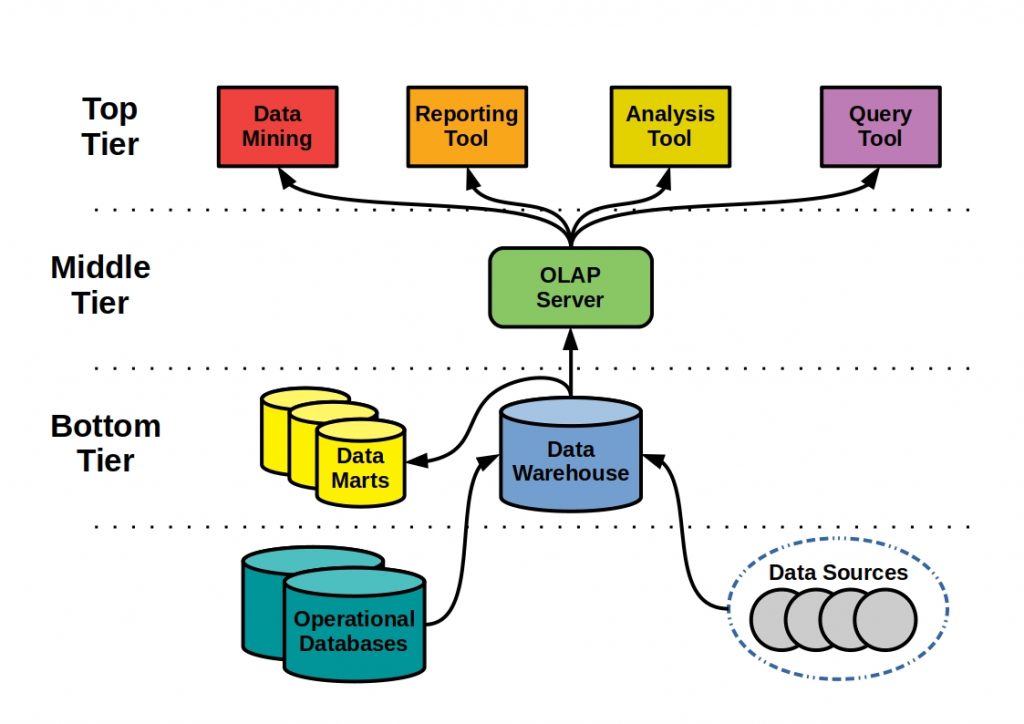

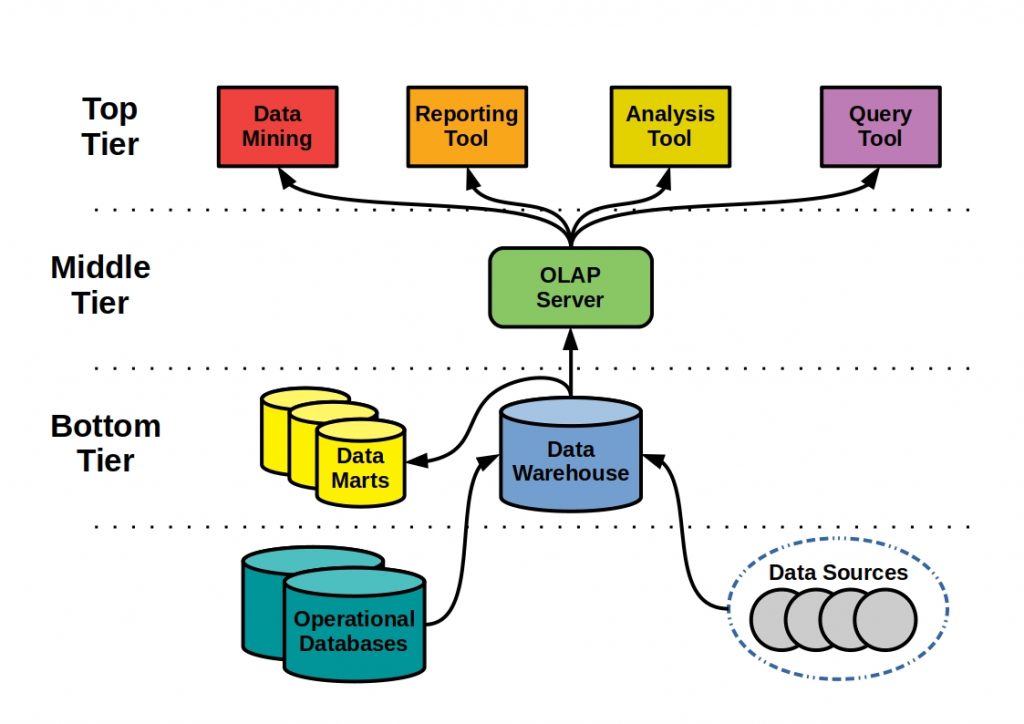

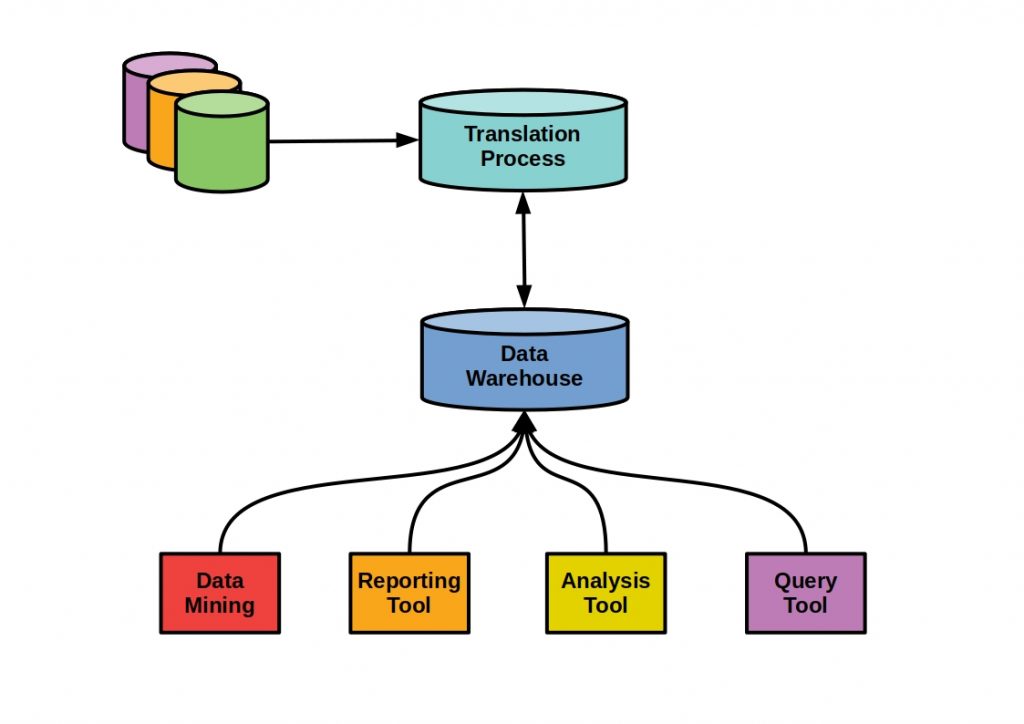

The three tier data warehouse architecture is the blueprint on which a data warehouse with three tiers is built. The figure below shows this structure with common components.

The individual components can vary depending on the project. As a rule, however, such variations do not alter the basic structure.

Bottom Tier

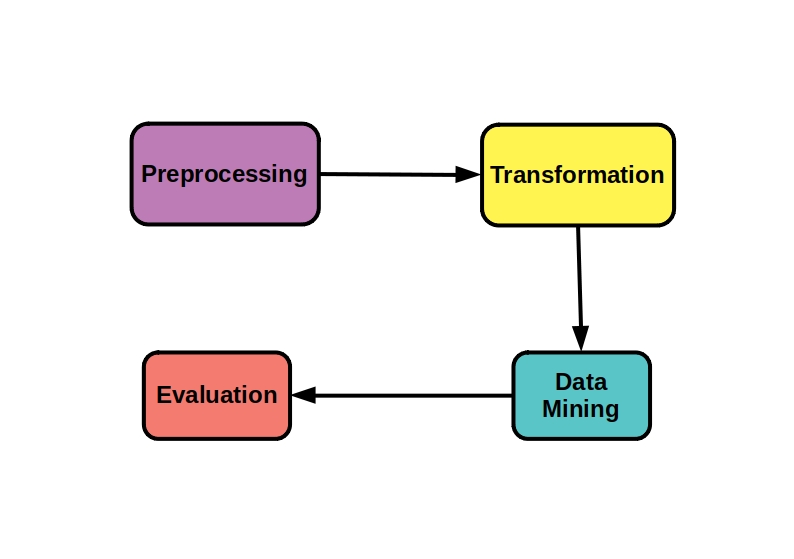

The bottom tier is the persistence layer, which is usually a database server. Here, data from various sources is prepared and stored using an ETL (extract, transform, and load) process. Back-end tools and other external resources can be used to feed data into this layer.

This persistence layer can be a relational or a multidimensional database system.
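As a minimal sketch of a relational bottom tier, assuming Python's built-in `sqlite3` module as a stand-in for a real warehouse database server, the following creates a star schema, one common relational design (not named in the article): a fact table of sales that references two dimension tables. All table and column names are hypothetical.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the warehouse server.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL,
        category   TEXT NOT NULL
    );
    CREATE TABLE dim_date (
        date_id INTEGER PRIMARY KEY,  -- e.g. 20230131
        year    INTEGER NOT NULL,
        month   INTEGER NOT NULL
    );
    -- Fact table: one row per measured event, keyed by the dimensions.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        date_id    INTEGER REFERENCES dim_date(date_id),
        amount     REAL NOT NULL
    );
""")

conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                 [(1, "Laptop", "Hardware"), (2, "License", "Software")])
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                 [(20230131, 2023, 1), (20230228, 2023, 2)])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)",
                 [(1, 20230131, 1200.0), (2, 20230131, 300.0),
                  (1, 20230228, 800.0)])

# Join facts to a dimension to answer an analytical question.
by_category = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales f JOIN dim_product p USING (product_id)
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(by_category)  # [('Hardware', 2000.0), ('Software', 300.0)]
```

A multidimensional persistence layer would instead store pre-arranged cube cells; the relational variant keeps the data in plain tables and computes aggregates with SQL.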

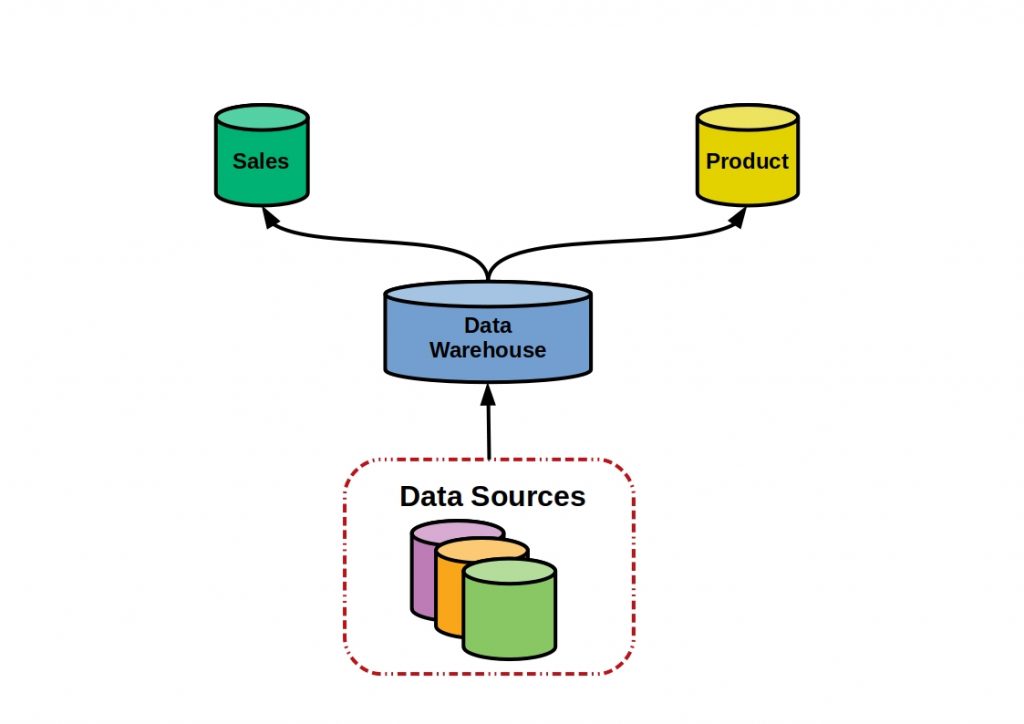

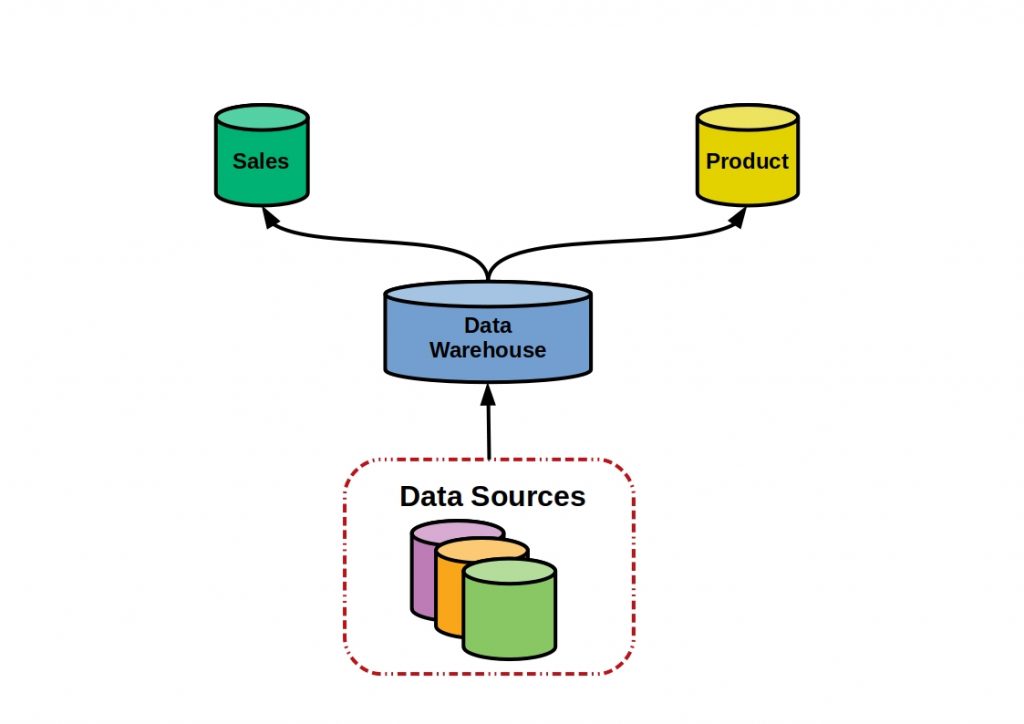

Load Data into Warehouse

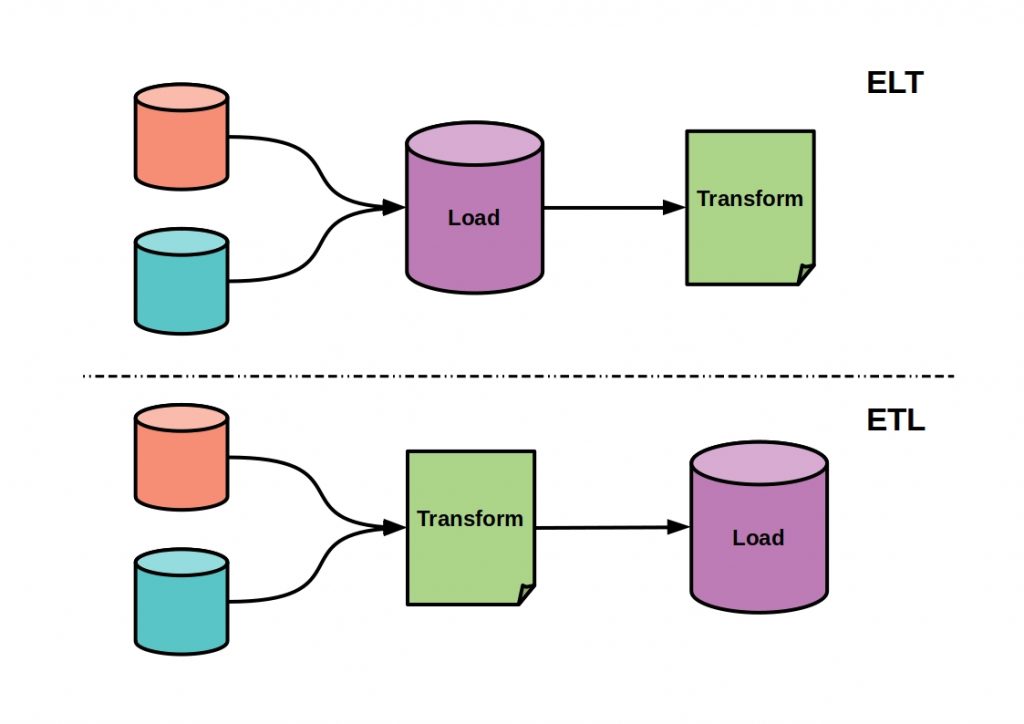

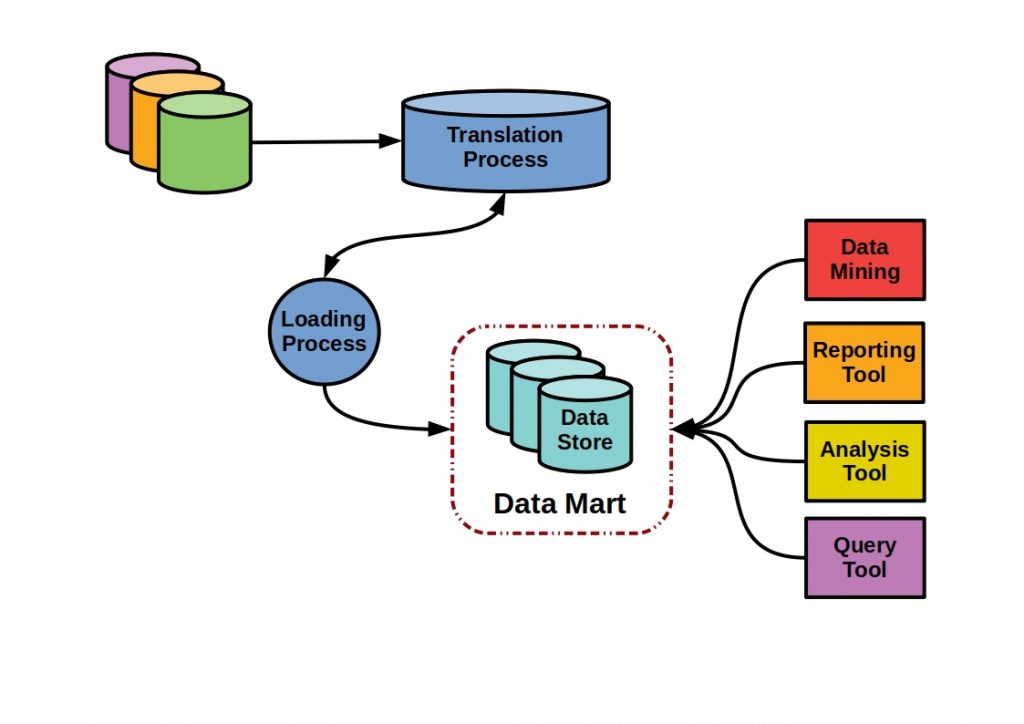

In addition to the different components and architectures, data can also be transmitted to the information system in different ways.

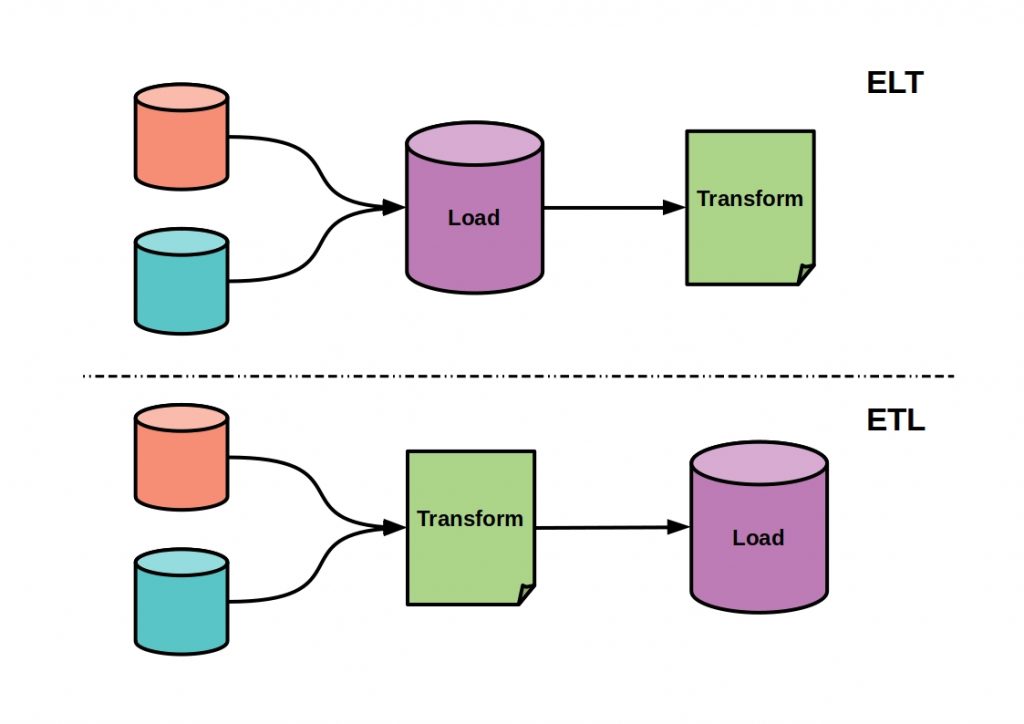

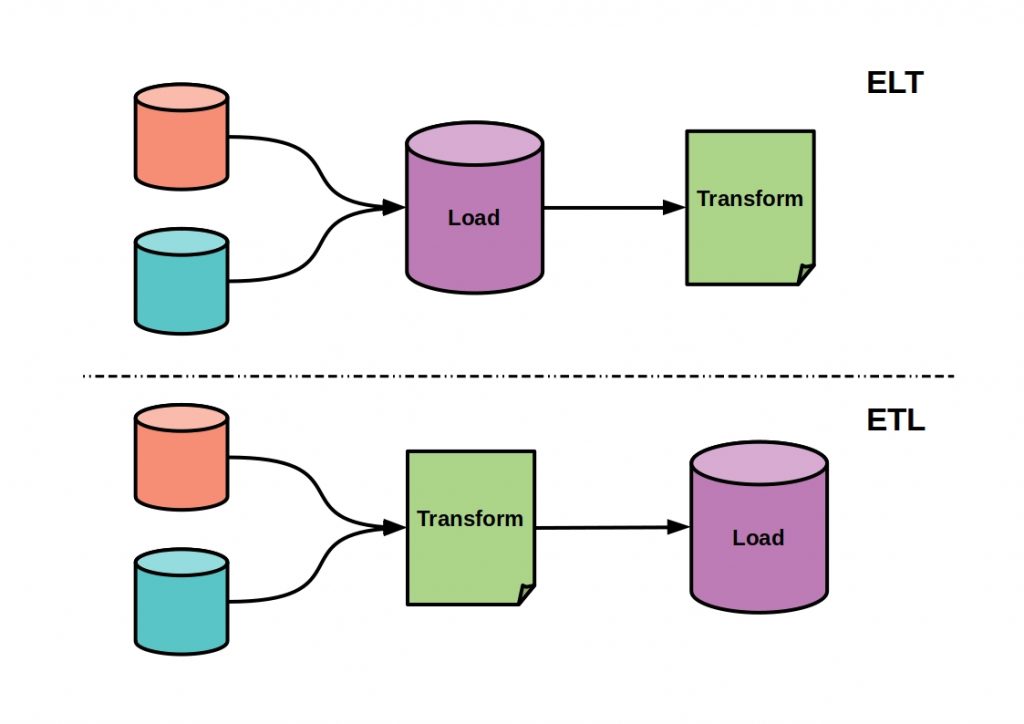

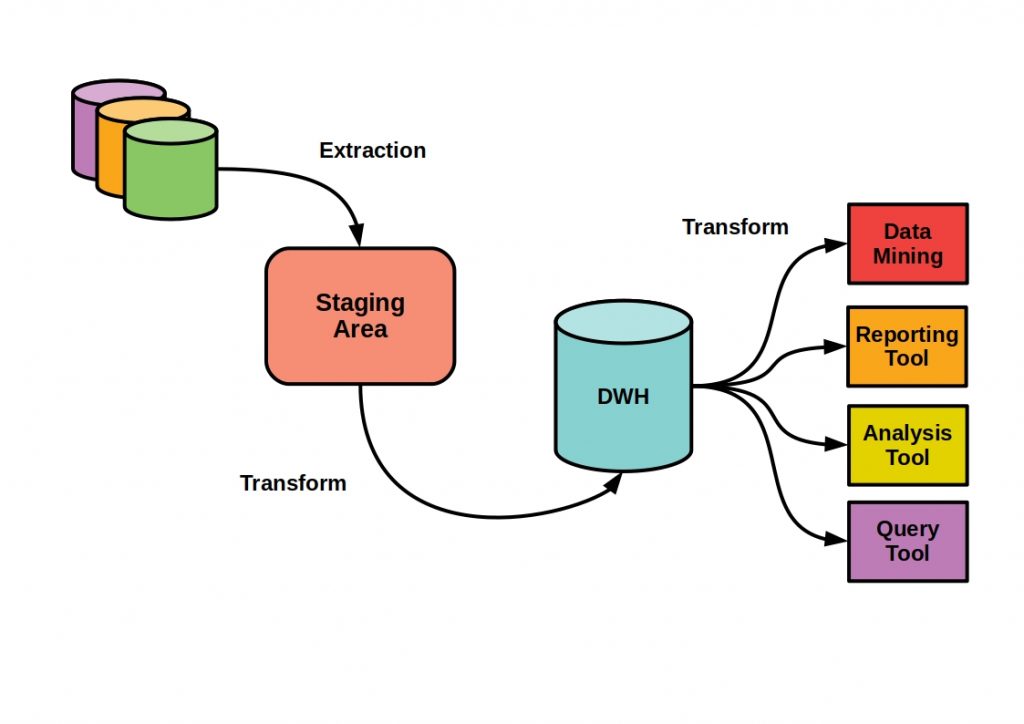

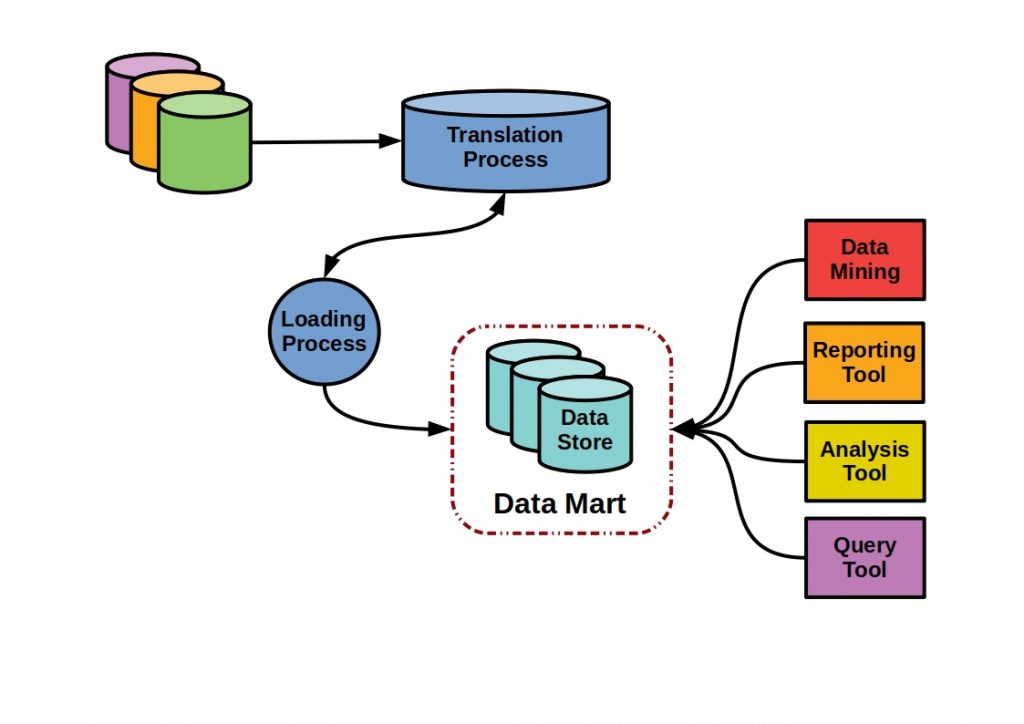

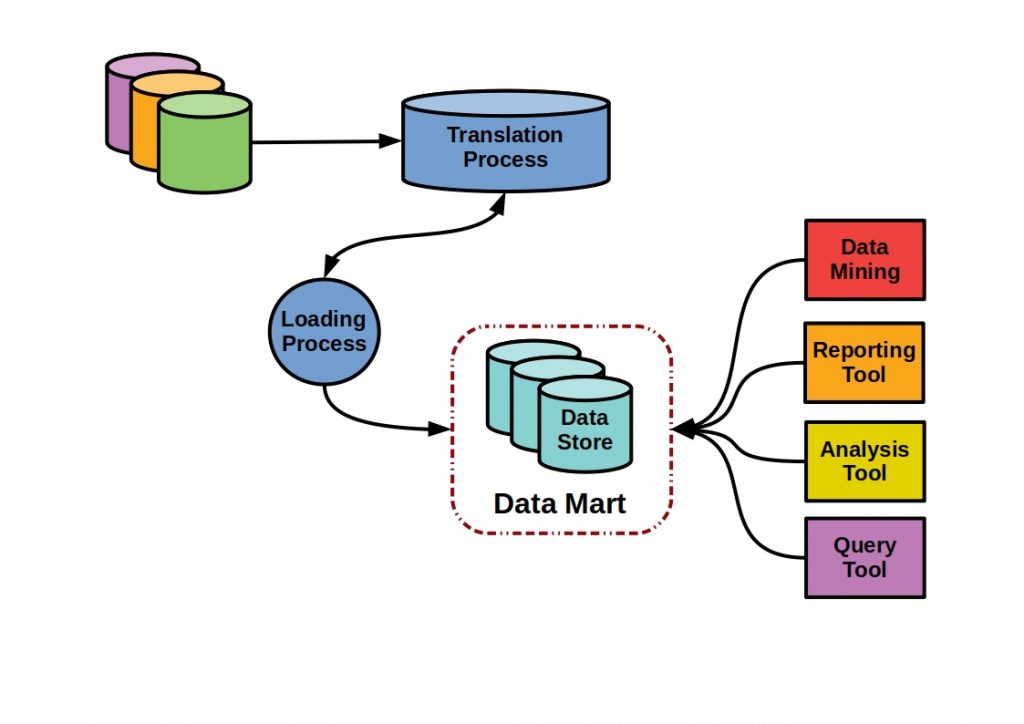

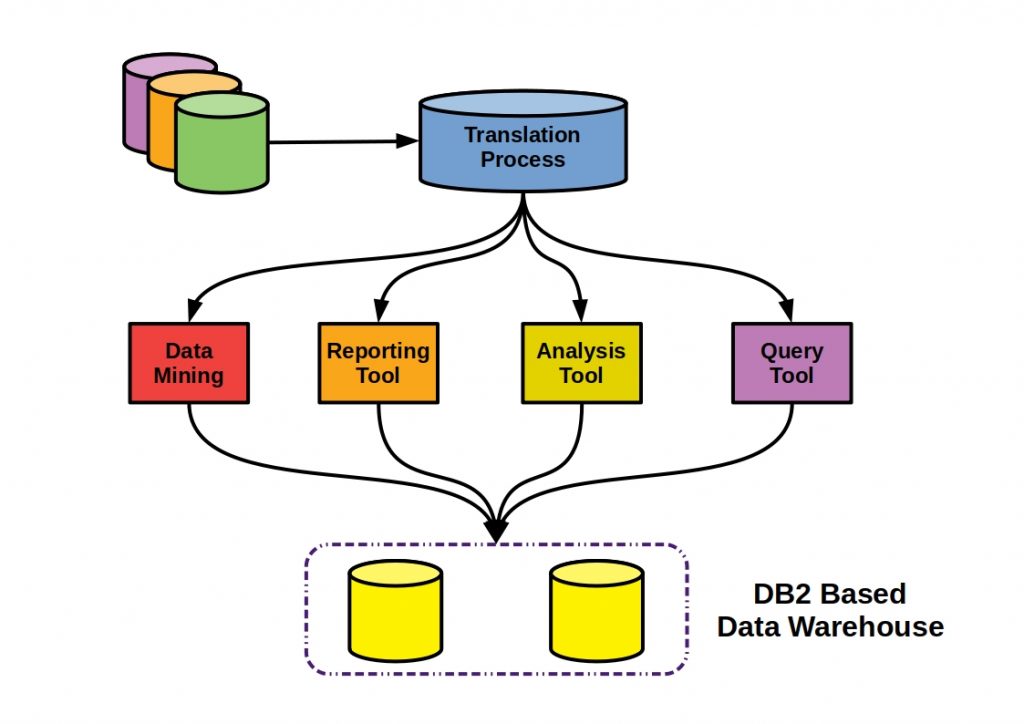

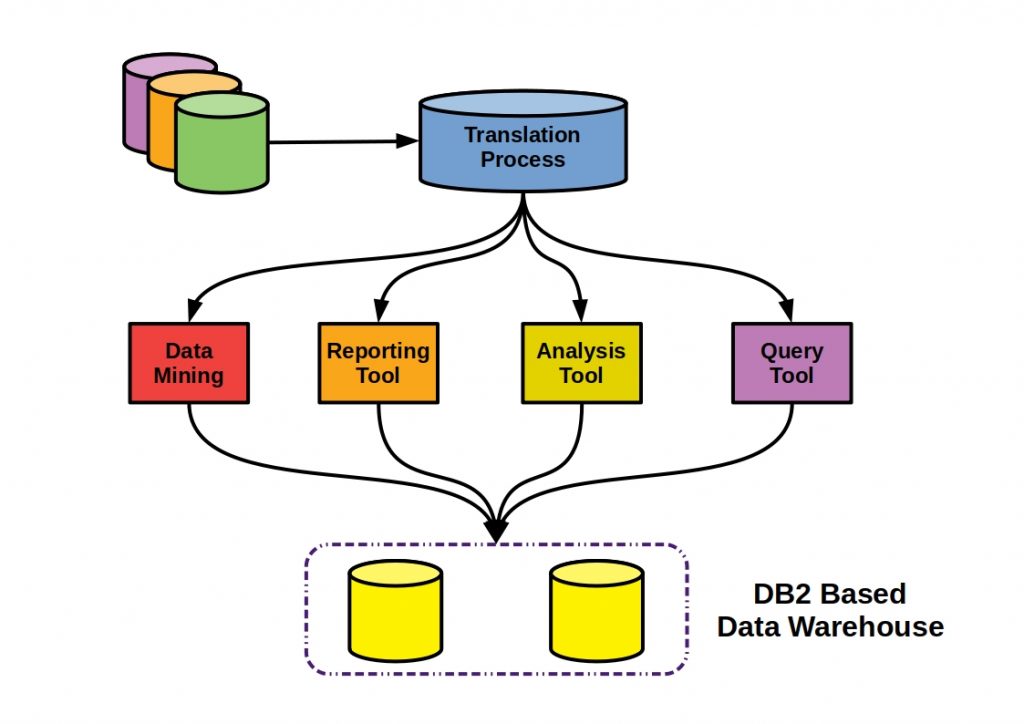

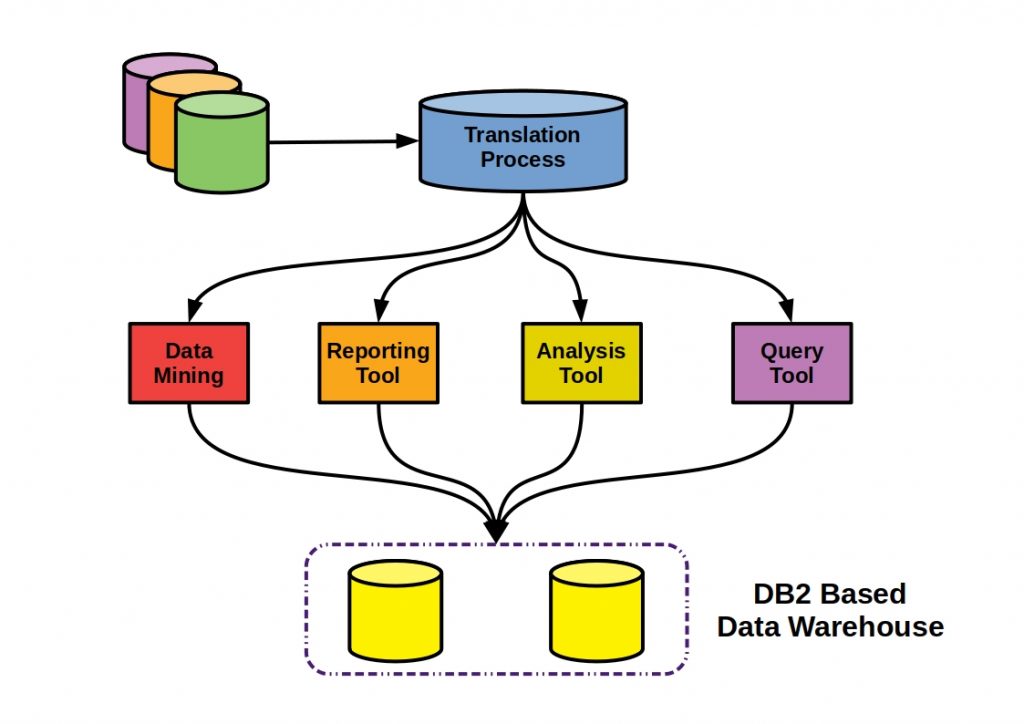

As shown in the figure, a basic distinction is made between two elementary processes: ELT and ETL.

What is ELT?

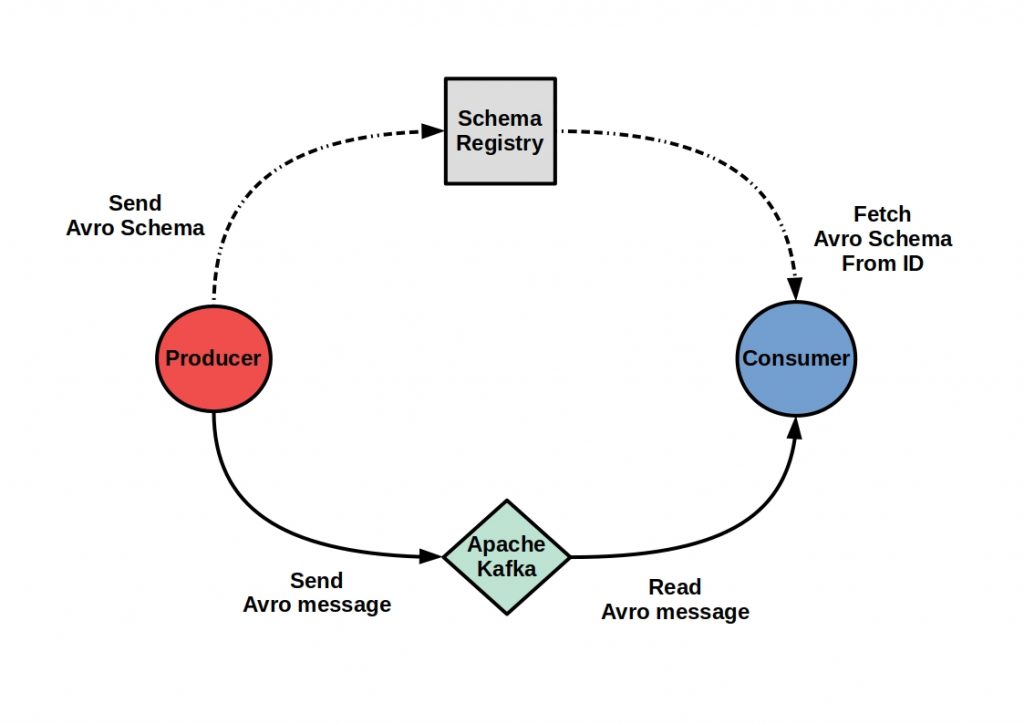

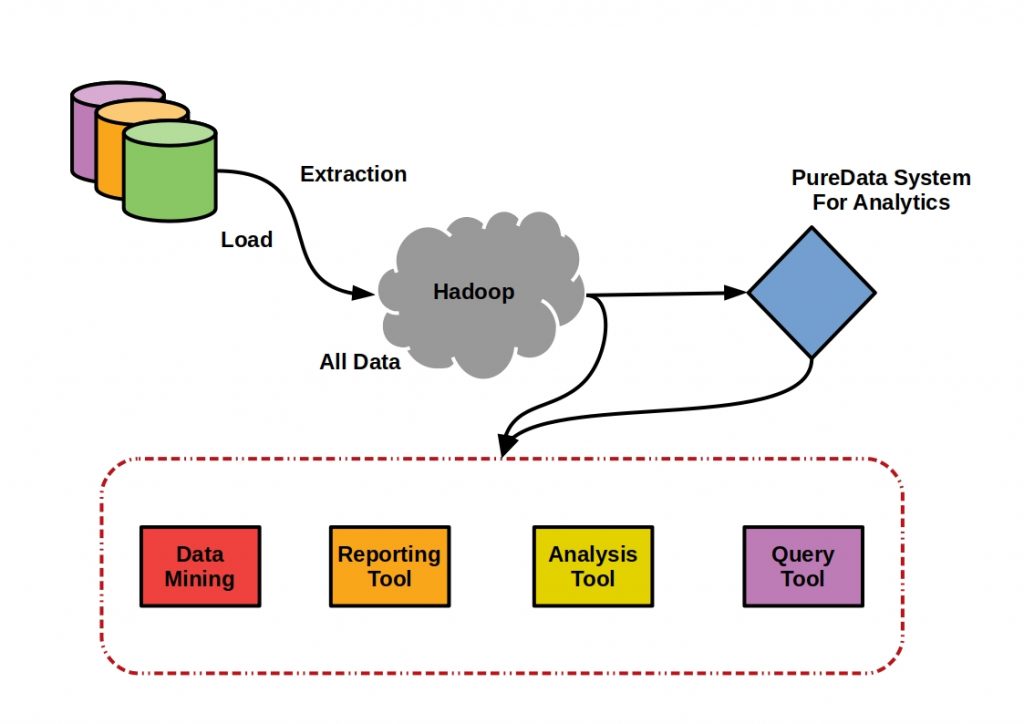

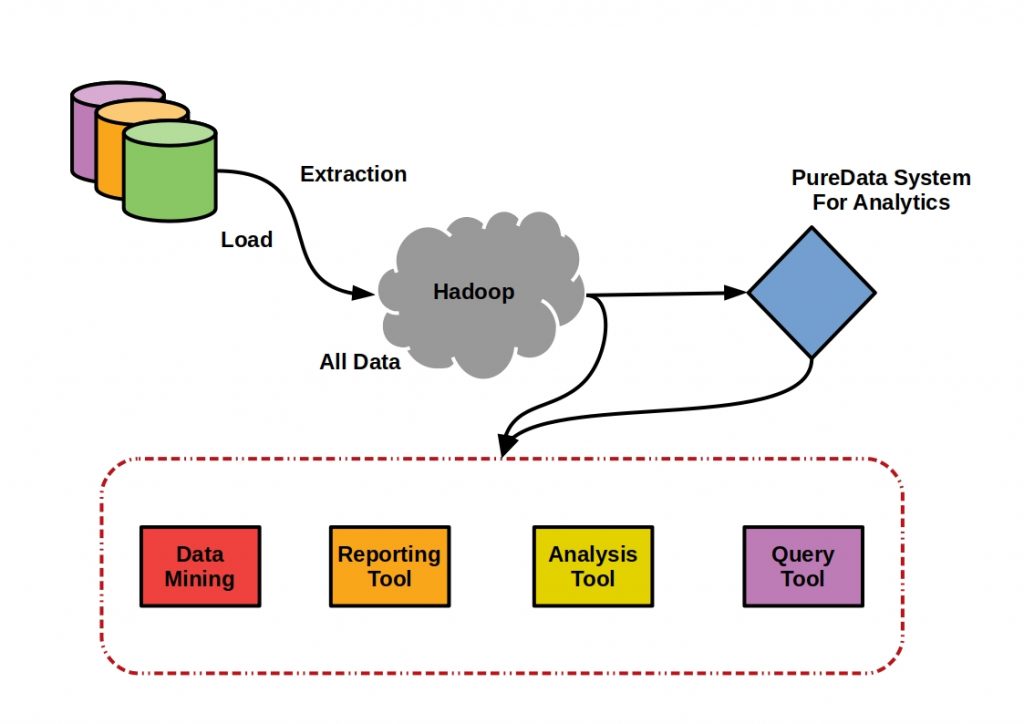

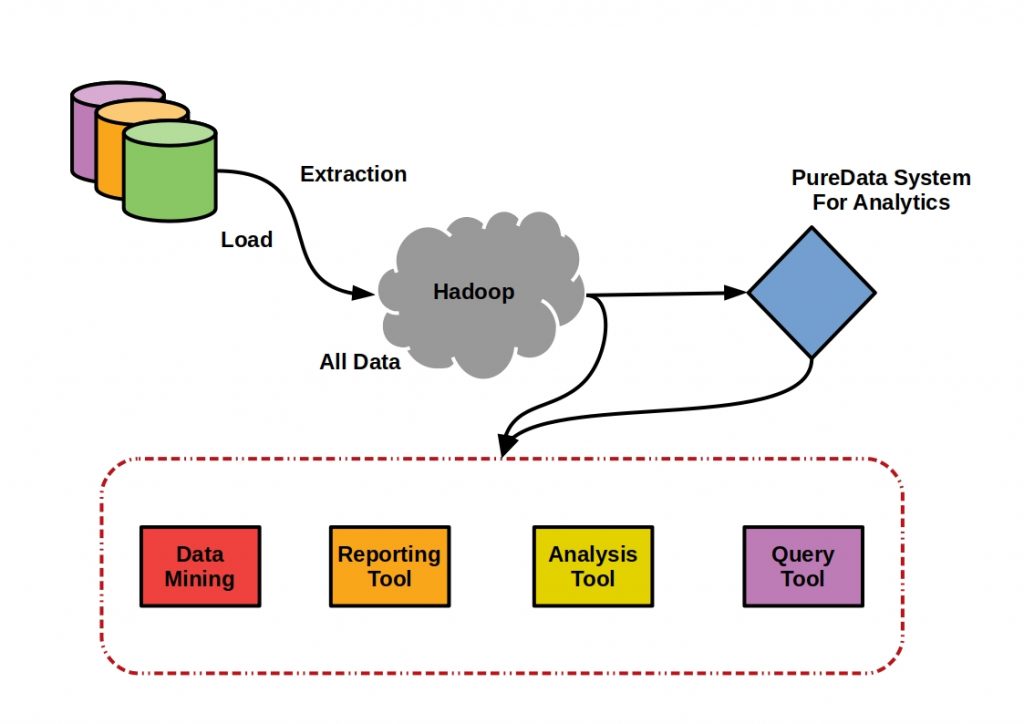

Extract, Load, and Transform, or ELT for short, extracts the data from the source system, loads it into the target system unchanged, and only transforms it there, inside the target.
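A minimal ELT sketch, assuming Python's built-in `sqlite3` as a stand-in for the target system (in the article's example that role is played by Hadoop). The raw records, table names, and cleansing rules are hypothetical. The point is the order of steps: the raw data is loaded as-is, and the transformation runs afterwards with the target's own engine.

```python
import sqlite3

# Hypothetical raw records as delivered by a source system.
source_records = [
    ("2023-01-31", "laptop ", "1200"),
    ("2023-01-31", "License", "300"),
    ("2023-02-28", "LAPTOP", "800"),
]

target = sqlite3.connect(":memory:")

# Extract + Load: copy the raw records into the target system unchanged.
target.execute("CREATE TABLE raw_sales (sold_on TEXT, product TEXT, amount TEXT)")
target.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", source_records)

# Transform: runs later, inside the target, using the target's own SQL engine.
target.execute("""
    CREATE TABLE sales AS
    SELECT sold_on,
           LOWER(TRIM(product)) AS product,
           CAST(amount AS REAL) AS amount
    FROM raw_sales
""")

totals = target.execute(
    "SELECT product, SUM(amount) FROM sales GROUP BY product ORDER BY product"
).fetchall()
raw_rows = target.execute("SELECT COUNT(*) FROM raw_sales").fetchone()[0]
print(totals)    # [('laptop', 2000.0), ('license', 300.0)]
print(raw_rows)  # 3 (the untransformed data remains available)
```

Because `raw_sales` is kept, applications and analysis tools can still read the untransformed data, as in the Hadoop example.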

The following figure shows such an example system. In this case, the Hadoop framework handles the central data management, while applications and analysis tools access the untransformed data.

What is ETL?

In Extract, Transform, and Load, or ETL for short, the data is first extracted from the sources into a staging area, then transformed there (reformatted and processed according to business rules), and only then loaded into the target database or data warehouse.
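For contrast, a minimal ETL sketch under the same assumptions (`sqlite3` as a stand-in warehouse, hypothetical records). Here the staging area is just a Python list, and the example business rule, dropping rows without a numeric amount, is made up for illustration. Only cleansed rows ever reach the warehouse.

```python
import sqlite3

# Hypothetical raw records as delivered by a source system.
source_records = [
    ("2023-01-31", "laptop ", "1200"),
    ("2023-01-31", "License", "300"),
    ("2023-02-28", "LAPTOP", "800"),
    ("2023-02-28", "Laptop", "n/a"),  # faulty record
]


def extract(records):
    # Extract: copy the source records into a staging area (here, a list).
    return list(records)


def transform(staged):
    # Transform: cleanse and reformat in the staging area, before loading.
    cleaned = []
    for sold_on, product, amount in staged:
        try:
            value = float(amount)
        except ValueError:
            continue  # example business rule: drop rows without a numeric amount
        cleaned.append((sold_on, product.strip().lower(), value))
    return cleaned


def load(rows, conn):
    # Load: only transformed rows reach the warehouse.
    conn.execute("CREATE TABLE IF NOT EXISTS sales "
                 "(sold_on TEXT, product TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(source_records)), warehouse)
totals = warehouse.execute(
    "SELECT product, SUM(amount) FROM sales GROUP BY product ORDER BY product"
).fetchall()
print(totals)  # [('laptop', 2000.0), ('license', 300.0)]
```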

Middle Tier

One or more OLAP (Online Analytical Processing) servers reside in the middle tier of the data warehouse. This technology can be used, for example, to create complex budget plans and to perform analyses cost-effectively. In the three tier data warehouse architecture, jobs are generated in the top tier and sent to the middle tier. There, the data in the bottom tier is accessed and the analyses are performed. The result is then sent to the top tier, where it is made available to the user, and/or forwarded to the bottom tier so that the analysis results are stored in the persistence layer.
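The request flow described above can be sketched as three small classes, one per tier. This is a hypothetical illustration, not a real OLAP server: `sqlite3` stands in for the bottom tier, and the job name `total_by_region` and all table names are made up. Note that the top tier never touches the database directly.

```python
import sqlite3


class BottomTier:
    """Persistence: owns the database (hypothetical minimal wrapper)."""

    def __init__(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
        self.conn.executemany("INSERT INTO sales VALUES (?, ?)",
                              [("EU", 100.0), ("EU", 50.0), ("US", 70.0)])
        self.conn.execute("CREATE TABLE results (job TEXT, region TEXT, total REAL)")

    def query(self, sql: str) -> list:
        return self.conn.execute(sql).fetchall()

    def store(self, job: str, rows: list) -> None:
        self.conn.executemany("INSERT INTO results VALUES (?, ?, ?)",
                              [(job, region, total) for region, total in rows])


class MiddleTier:
    """OLAP server: receives jobs from the top tier and runs them on the bottom tier."""

    JOBS = {"total_by_region":
            "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"}

    def __init__(self, storage: BottomTier) -> None:
        self.storage = storage

    def run(self, job: str, persist: bool = False) -> list:
        rows = self.storage.query(self.JOBS[job])  # KeyError for unknown jobs
        if persist:
            self.storage.store(job, rows)  # write the analysis result back
        return rows


class TopTier:
    """Client: talks only to the middle tier, never to the database."""

    def __init__(self, server: MiddleTier) -> None:
        self.server = server

    def report(self, job: str) -> dict:
        return dict(self.server.run(job, persist=True))


storage = BottomTier()
client = TopTier(MiddleTier(storage))
report = client.report("total_by_region")
print(report)                                         # {'EU': 150.0, 'US': 70.0}
print(storage.query("SELECT COUNT(*) FROM results"))  # [(2,)]
```

Swapping the storage engine would only require changes in `BottomTier` and the SQL in `MiddleTier`; the client code stays the same, which is the decoupling the three tier design aims for.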

What is an OLAP Server?

Three OLAP server models are commonly distinguished.

In Relational OLAP (ROLAP), operations on multidimensional data are mapped to standard relational operations. Multidimensional OLAP (MOLAP) implements the multidimensional operations directly. Hybrid OLAP (HOLAP) combines relational and multidimensional processing.

The choice of server model depends largely on how the data is stored in the bottom tier.
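The difference between ROLAP and MOLAP can be sketched with the same roll-up computed two ways. This is a simplified illustration with hypothetical data, not how a production OLAP server works: the ROLAP side expresses the roll-up as a relational `GROUP BY` (using `sqlite3`), while the MOLAP side stores values in a dense array indexed by dimension positions. HOLAP, which mixes both, is not shown.

```python
import sqlite3

# Hypothetical facts: (product, region, amount)
facts = [("Laptop", "EU", 1200.0), ("Laptop", "US", 800.0), ("License", "EU", 300.0)]

# ROLAP style: the roll-up is an ordinary relational GROUP BY.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", facts)
rolap = dict(conn.execute(
    "SELECT product, SUM(amount) FROM sales GROUP BY product ORDER BY product"
).fetchall())

# MOLAP style: values live in a dense array addressed by dimension positions.
products = sorted({p for p, _, _ in facts})
regions = sorted({r for _, r, _ in facts})
cube = [[0.0] * len(regions) for _ in products]
for p, r, amount in facts:
    cube[products.index(p)][regions.index(r)] += amount
molap = {p: sum(cube[i]) for i, p in enumerate(products)}

print(rolap)           # {'Laptop': 2000.0, 'License': 300.0}
print(rolap == molap)  # True
print(cube)            # [[1200.0, 800.0], [300.0, 0.0]]
```

The empty `License`/`US` cell still occupies space in the array, which hints at why the storage layout in the bottom tier influences the choice of model.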

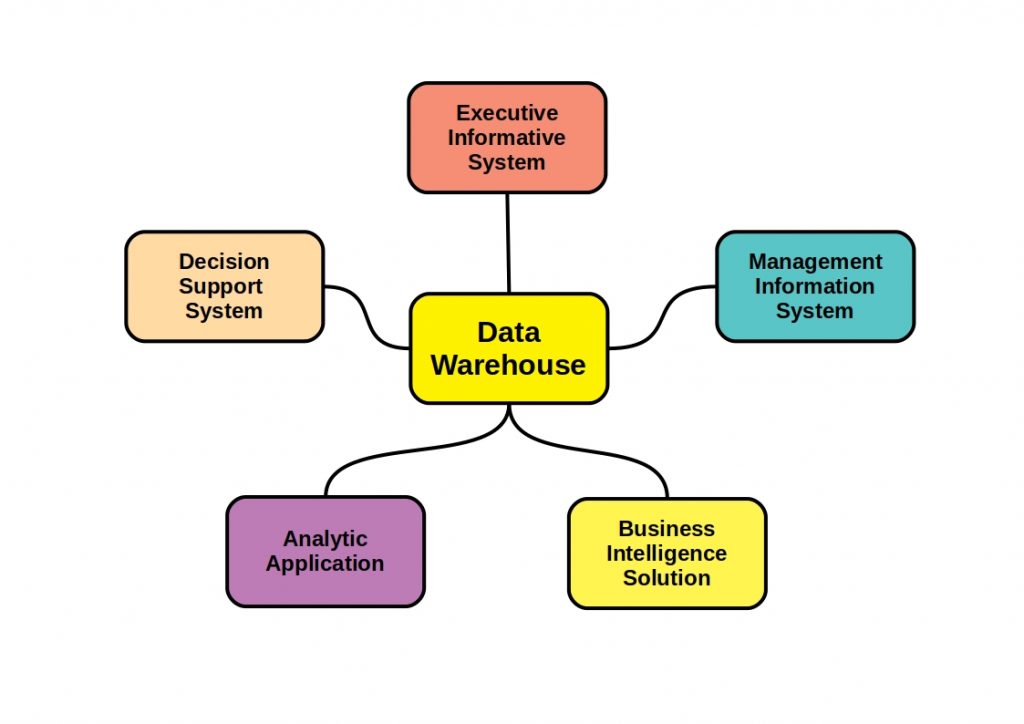

Top Tier

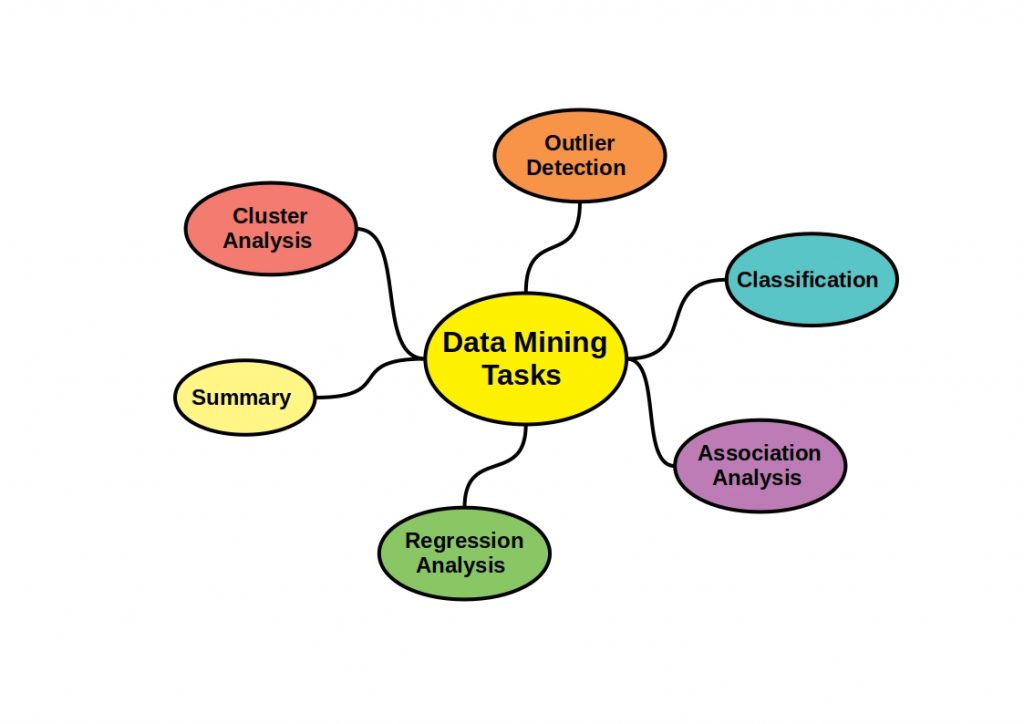

The top tier is the front-end client layer of the three tier data warehouse architecture. It contains query and reporting tools, analysis tools, and data mining tools, and thus provides the user interface. Here, users can run analyses and explore the data.

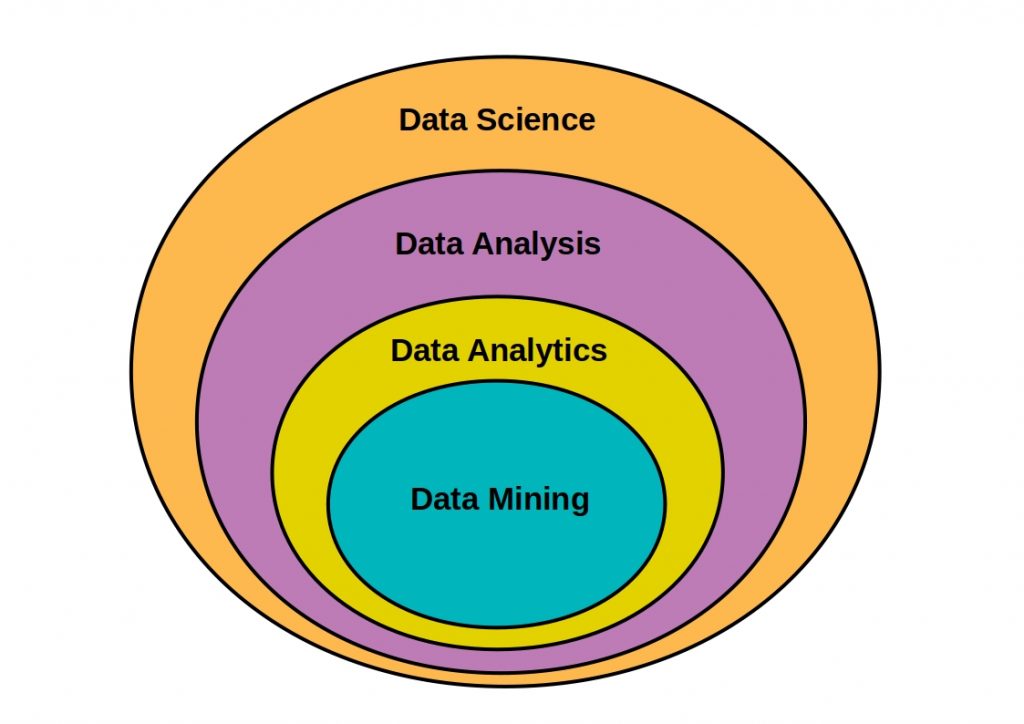

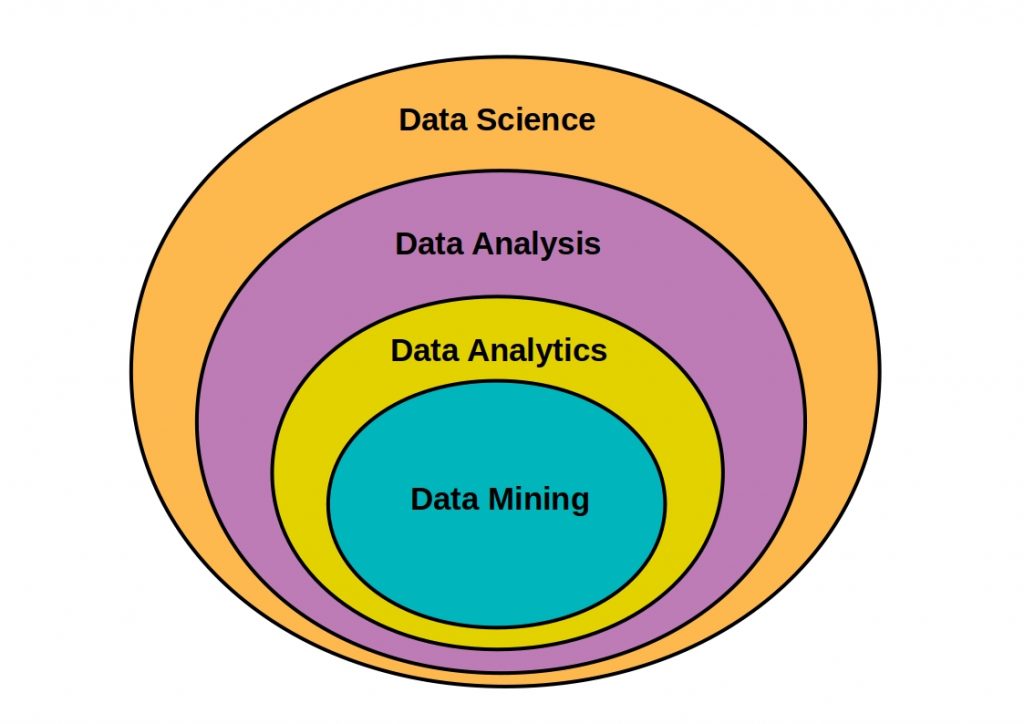

Terminologies

Some terms that often come up in connection with this system need to be clarified.

Metadata is a kind of roadmap to the data warehouse. It defines the warehouse objects and acts as a directory, which means that the decision support system locates the contents of the warehouse via the metadata.

The metadata is stored in the metadata repository, an integral directory that manages both business metadata, i.e. data ownership information, business definitions, and change policies, and operational metadata. Operational metadata describes the currency of the data (whether it is active, archived, or purged) and its lineage, i.e. the history of the data. The repository also holds the data used to map the operational environment to the warehouse, such as source databases and their contents, data extraction, data partitioning, cleansing and transformation rules, and data refresh and purging rules, as well as the algorithms for summarization, including dimension algorithms, data on granularity, and aggregation.
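A minimal sketch of how a metadata repository might organize these two kinds of metadata, assuming plain Python dataclasses. The structure, class names, and example entries are hypothetical; real repositories are usually database-backed catalogs with far richer schemas.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BusinessMetadata:
    owner: str          # data ownership information
    definition: str     # business definition
    change_policy: str  # change policy


@dataclass
class OperationalMetadata:
    currency: str  # "active", "archived" or "purged"
    lineage: list[str] = field(default_factory=list)  # history, oldest step first


@dataclass
class TableEntry:
    name: str
    business: BusinessMetadata
    operational: OperationalMetadata


class MetadataRepository:
    """Hypothetical directory that tools consult to locate warehouse objects."""

    def __init__(self) -> None:
        self._entries: dict[str, TableEntry] = {}

    def register(self, entry: TableEntry) -> None:
        self._entries[entry.name] = entry

    def find(self, name: str) -> TableEntry:
        return self._entries[name]

    def active_tables(self) -> list[str]:
        return sorted(name for name, e in self._entries.items()
                      if e.operational.currency == "active")


repo = MetadataRepository()
repo.register(TableEntry(
    "fact_sales",
    BusinessMetadata("Sales department", "One row per sold item", "Append only"),
    OperationalMetadata("active", ["extracted from orders DB", "cleansed", "loaded"]),
))
repo.register(TableEntry(
    "fact_sales_2019",
    BusinessMetadata("Sales department", "Sales before 2020", "Read only"),
    OperationalMetadata("archived"),
))
print(repo.active_tables())                            # ['fact_sales']
print(repo.find("fact_sales").operational.lineage[0])  # extracted from orders DB
```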

A data cube represents data in multiple dimensions, for example sales by product, region, and month. A data mart contains only the subset of data that is relevant to a specific group, such as a single department.
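Both terms can be illustrated with a small in-memory sketch, assuming hypothetical sales facts and made-up helper names (`roll_up`, `slice_cube`). Each cube cell is addressed by one value per dimension; a roll-up aggregates dimensions away, and a data mart is modeled here simply as the slice of the cube that one group needs.

```python
from collections import defaultdict

# Hypothetical fact rows: (product, region, month, amount)
facts = [
    ("Laptop", "EU", "2023-01", 1200.0),
    ("Laptop", "US", "2023-01", 800.0),
    ("License", "EU", "2023-01", 300.0),
    ("License", "EU", "2023-02", 150.0),
]
PRODUCT, REGION, MONTH = 0, 1, 2  # dimension positions in each cell key

# Data cube: each cell is addressed by one value per dimension.
cube = defaultdict(float)
for product, region, month, amount in facts:
    cube[(product, region, month)] += amount


def roll_up(cells, keep):
    """Aggregate away every dimension whose position is not in `keep`."""
    result = defaultdict(float)
    for key, value in cells.items():
        result[tuple(key[i] for i in keep)] += value
    return dict(result)


def slice_cube(cells, dim, value):
    """Fix one dimension to a single value."""
    return {key: v for key, v in cells.items() if key[dim] == value}


# Data mart: only the subset one group needs, here a hypothetical EU sales team.
eu_mart = slice_cube(cube, REGION, "EU")

print(roll_up(cube, keep=(PRODUCT,)))   # {('Laptop',): 2000.0, ('License',): 450.0}
print(roll_up(eu_mart, keep=(MONTH,)))  # {('2023-01',): 1500.0, ('2023-02',): 150.0}
```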

Data Warehouse Types and How They Work

All data warehouse systems follow the same basic structure explained in this article, but they can consist of different components and are classified into types accordingly.

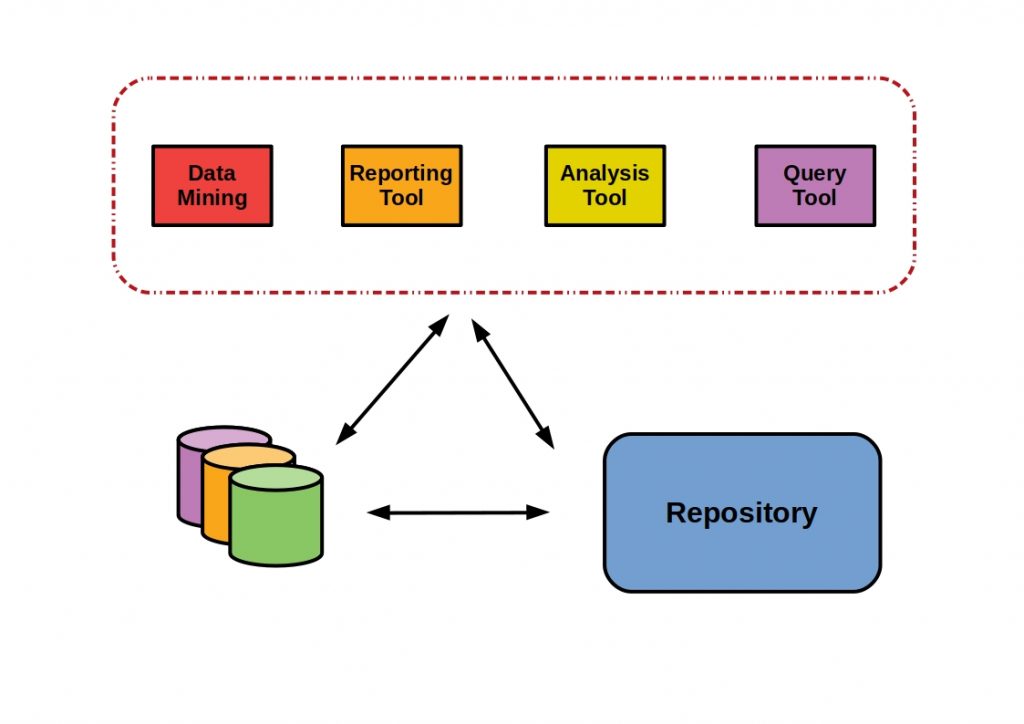

Host-Based mainframe warehouse

The Host-Based mainframe warehouse resides on a large-volume database.

In addition to this database, metadata is managed in a central metadata repository. This metadata stores, for example, the documentation of the data sources and the data translation rules.

In general, three phases run in this information system.

The unloading phase covers selection and scrubbing: the appropriate data types and data sources are determined, and the data is then corrected for errors.

In the subsequent transform phase, the data is translated into a suitable form. The rules for access and storage are also defined at this stage.

In the final Load phase, the preprocessed data set is moved into tables.

Host-Based LAN data warehouse

With this type, information can be extracted from a variety of sources, and multiple LAN-based warehouses are supported. Data can be provided either centrally or from the workgroup environment. The size of the warehouse depends on the platform.

Multi-Stage Data Warehouses

Here, the data is staged several times before being loaded into the data warehouse and finally distributed into department-specific data marts.
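A minimal sketch of the multi-stage idea, assuming hypothetical rows and two made-up staging steps (validation, then conforming values). After loading, the warehouse rows are distributed into one data mart per department.

```python
from collections import defaultdict

# Hypothetical raw source rows.
raw = [
    {"dept": " Sales ", "amount": "100"},
    {"dept": "finance", "amount": "40"},
    {"dept": "SALES", "amount": "oops"},
    {"dept": "Finance", "amount": "60"},
]


def is_valid(row):
    # Stage 1 rule: a row is usable only if its amount is numeric.
    try:
        float(row["amount"])
    except ValueError:
        return False
    return True


# Stage 1: validation only; reject unusable rows.
stage_1 = [row for row in raw if is_valid(row)]

# Stage 2: conform values to the warehouse's conventions.
stage_2 = [{"dept": row["dept"].strip().lower(), "amount": float(row["amount"])}
           for row in stage_1]

# Load into the (here: list-based) warehouse.
warehouse = list(stage_2)

# Distribute into department-specific data marts.
data_marts = defaultdict(list)
for row in warehouse:
    data_marts[row["dept"]].append(row)

mart_totals = {dept: sum(r["amount"] for r in rows) for dept, rows in data_marts.items()}
print(mart_totals)  # {'sales': 100.0, 'finance': 100.0}
```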

Stationary Data Warehouse

In a stationary data warehouse, the data in the sources is left unchanged, and users are given direct access to it.

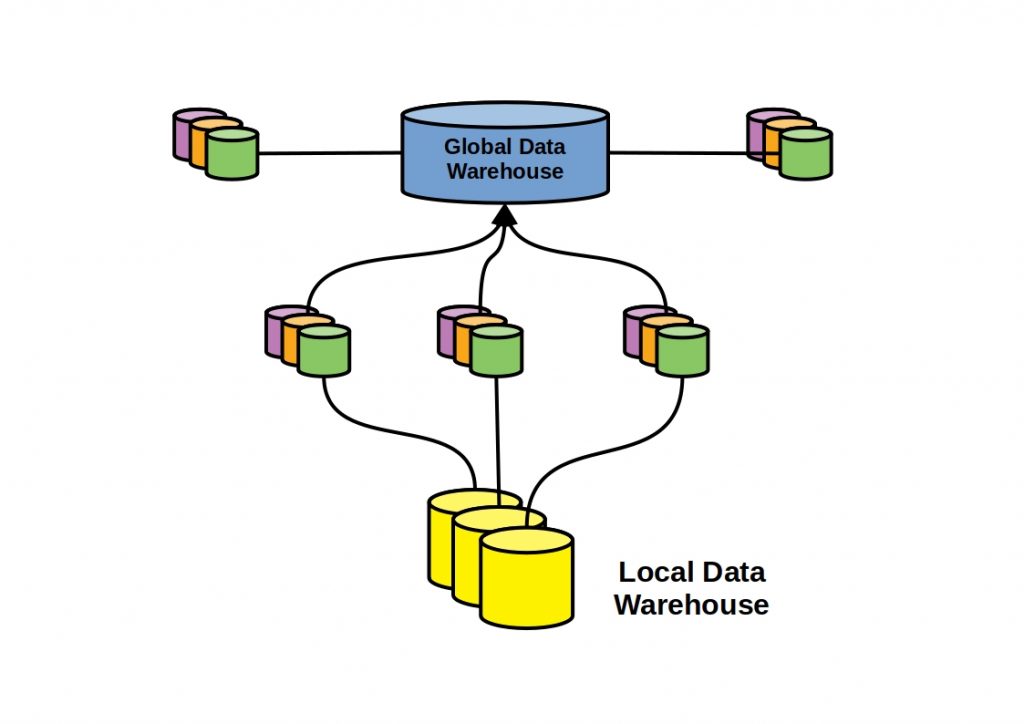

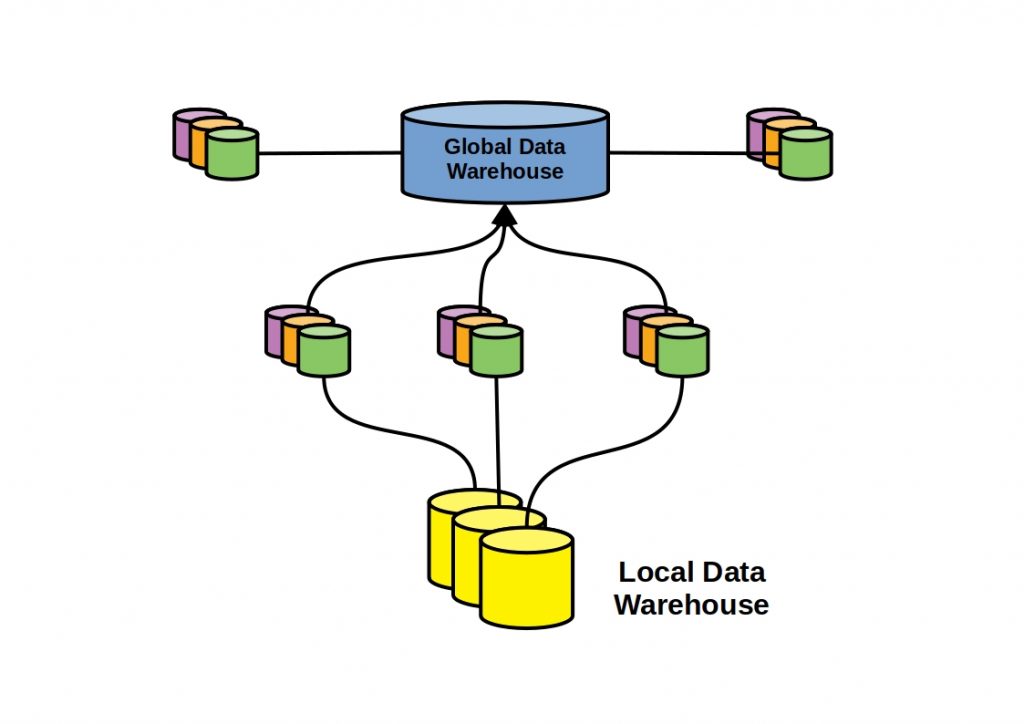

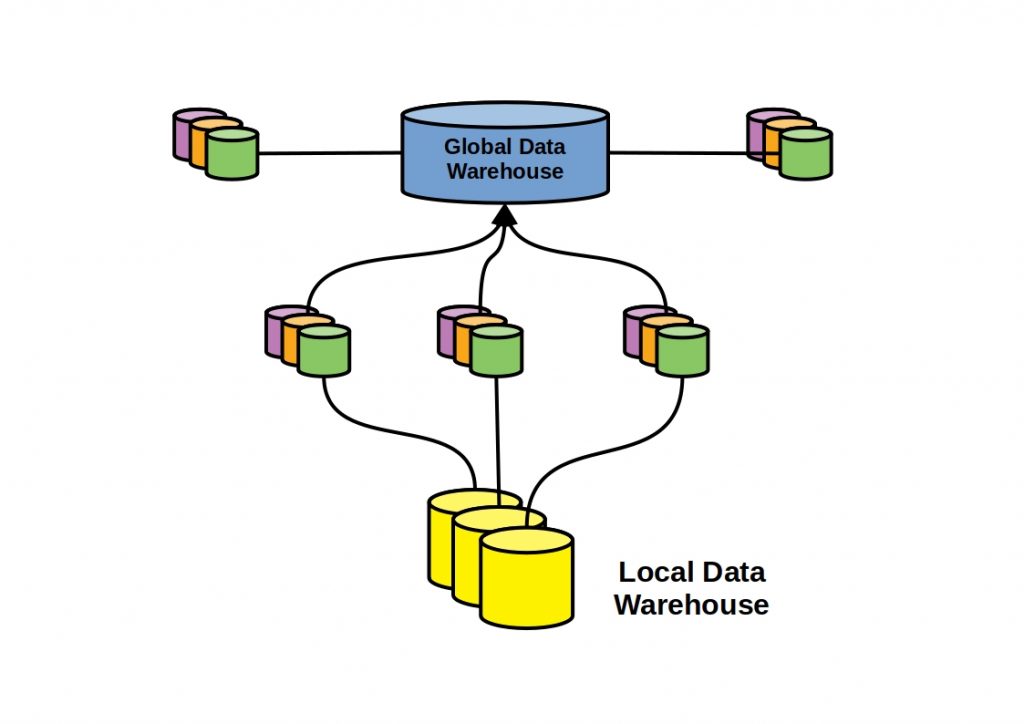

Distributed Data Warehouses

In a distributed warehouse system, the data is spread across several sites. For technological or business reasons, it can be split into local and global warehouses. Each local warehouse is integrated only within its own site but also holds historical data, and is therefore fully autonomous. Most of the operational processing takes place at the local level, while the global warehouse processes the data that is relevant to the company as a whole.
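A minimal sketch of the local/global split, assuming hypothetical classes and site names. Each local warehouse keeps its own history and works independently; the global warehouse only combines the company-wide aggregates that the sites expose.

```python
from __future__ import annotations


class LocalWarehouse:
    """Hypothetical site-level warehouse: autonomous, keeps its own history."""

    def __init__(self, site: str) -> None:
        self.site = site
        self.history: list[tuple[str, float]] = []  # (period, amount)

    def record(self, period: str, amount: float) -> None:
        # Local processing happens here, independent of other sites.
        self.history.append((period, amount))

    def summary(self) -> dict[str, float]:
        # Only this per-period aggregate is shared with the global level.
        totals: dict[str, float] = {}
        for period, amount in self.history:
            totals[period] = totals.get(period, 0.0) + amount
        return totals


class GlobalWarehouse:
    """Integrates only the company-wide view from the local summaries."""

    def __init__(self, sites: list[LocalWarehouse]) -> None:
        self.sites = sites

    def company_totals(self) -> dict[str, float]:
        combined: dict[str, float] = {}
        for site in self.sites:
            for period, total in site.summary().items():
                combined[period] = combined.get(period, 0.0) + total
        return combined


berlin, boston = LocalWarehouse("Berlin"), LocalWarehouse("Boston")
berlin.record("2023-01", 120.0)
berlin.record("2023-02", 80.0)
boston.record("2023-01", 200.0)
company = GlobalWarehouse([berlin, boston]).company_totals()
print(company)  # {'2023-01': 320.0, '2023-02': 80.0}
```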

Are data warehouses still promising?

Nowadays, data streams are on everyone’s lips, and it is precisely with iterative and highly dynamic data sources that large data warehouse systems reach their limits. Often, smaller tools that do not require a complete solution are more efficient. So are data warehouse systems dead after all? No, definitely not. Their basic principles, such as the pursuit of a single data truth, can be applied to any other software system. Moreover, in many cases a data warehouse system is still a high-performance overall solution and can coexist with data stream pipeline systems.

Apache Hive is free data warehouse software from the Apache Software Foundation. With it, you can implement high-performance big data systems with comparatively little effort. Here we have collected the most important information about Hive.