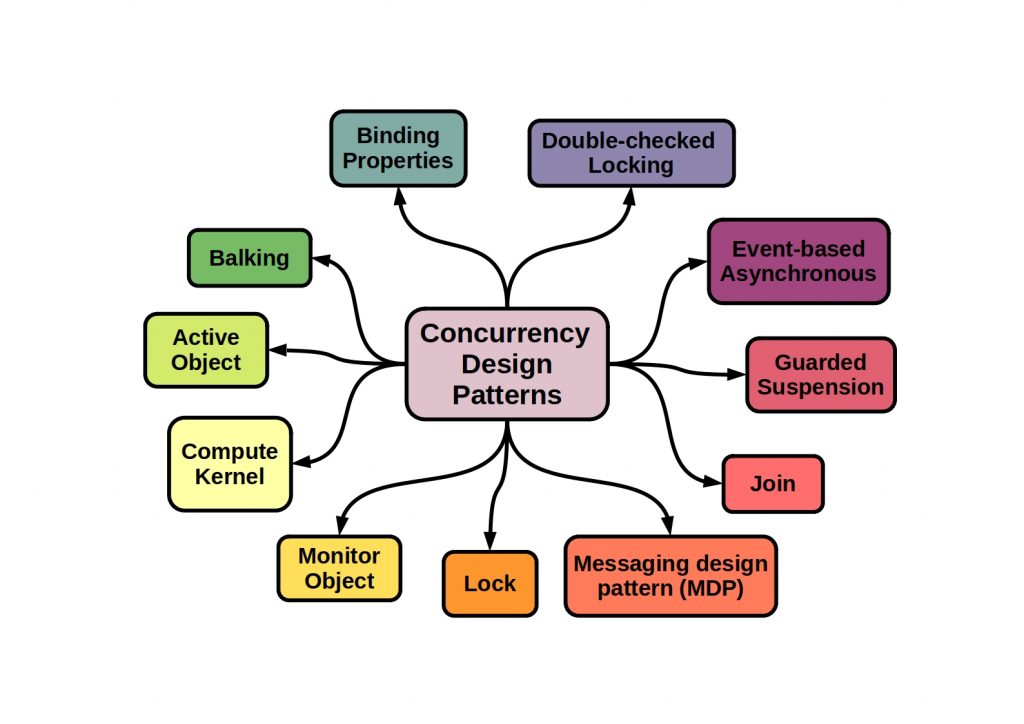

Messaging patterns: what are they, what are their strengths, and why should they be used only with caution? This article answers these questions.

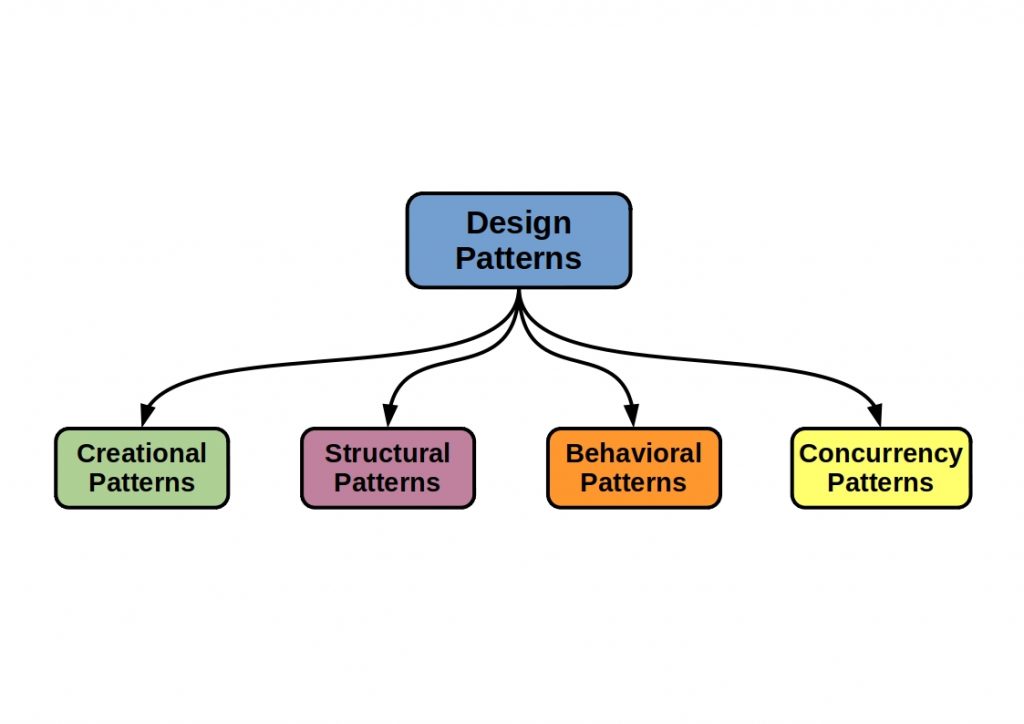

What are Design Patterns?

Design patterns are technology-independent, proven solutions to recurring problems in software development. They help produce a standardized and robust architecture.

If you’ve never heard of software design patterns, check out this article from us on the subject first.

Design patterns let developers draw on the experience of others by offering proven solutions to recurring tasks. Implementing a pattern one-to-one is not advisable, however; patterns should be used as a guide.

What is a message?

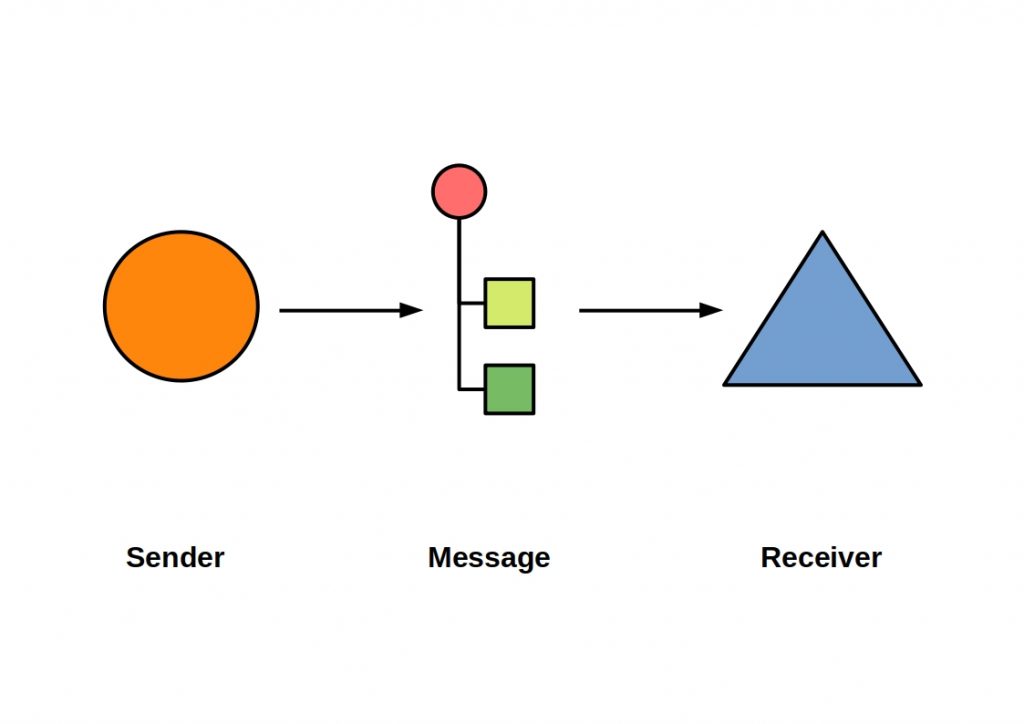

The most basic pattern is the message itself. Everyone uses the term as a matter of course, but what lies behind it?

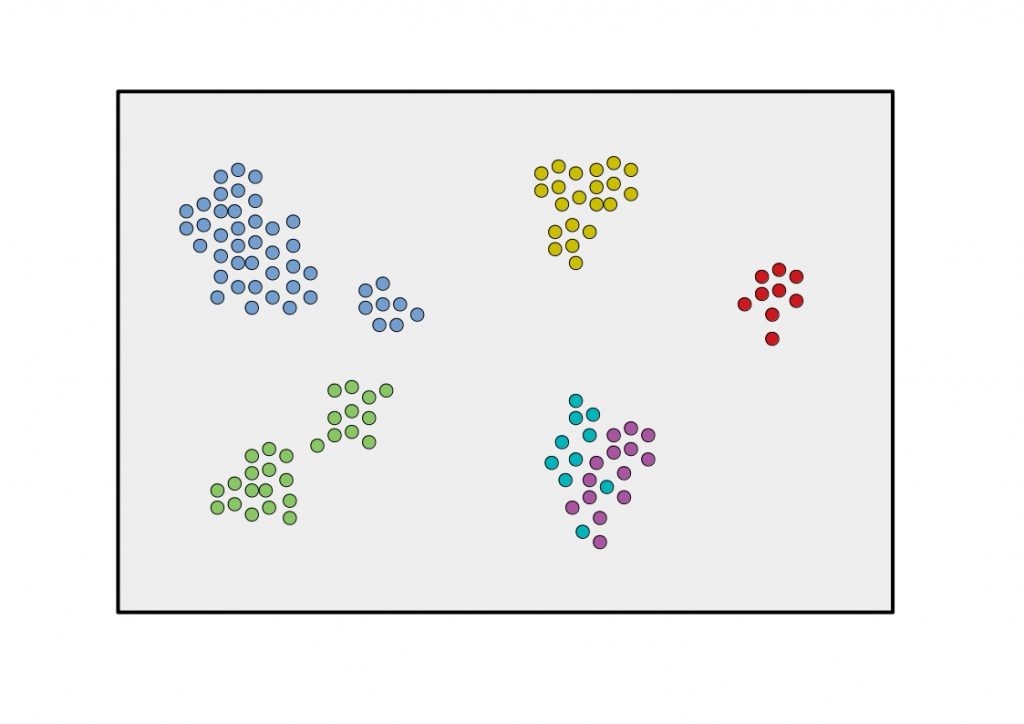

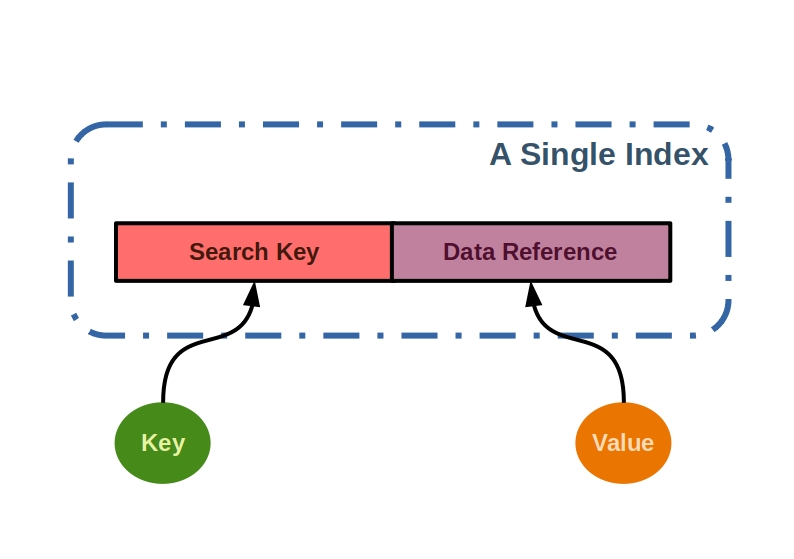

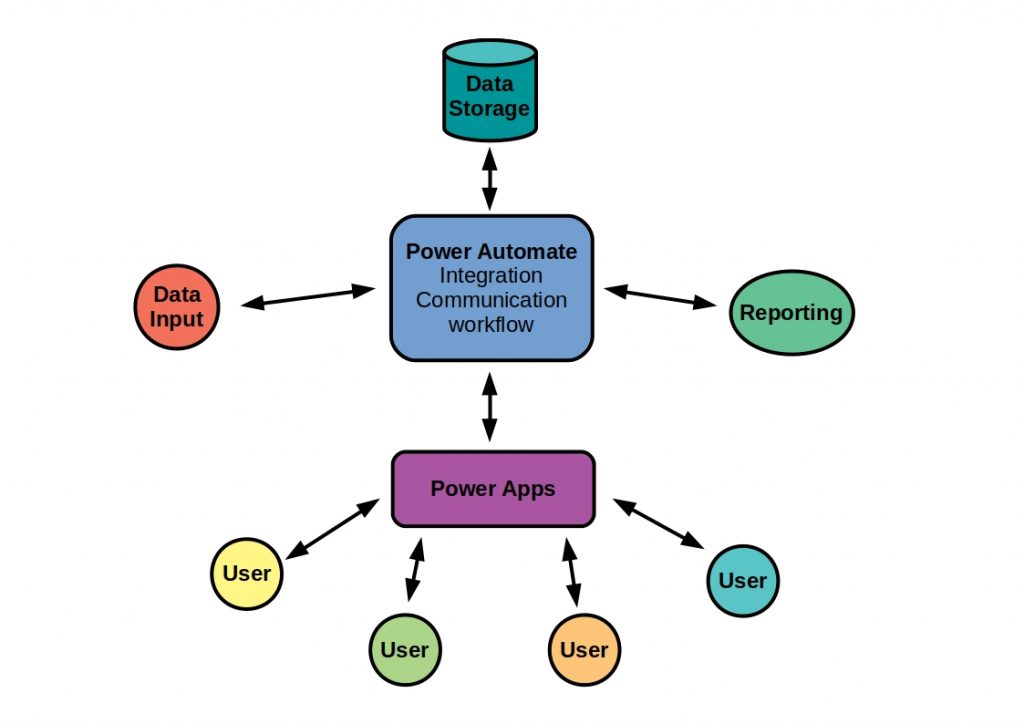

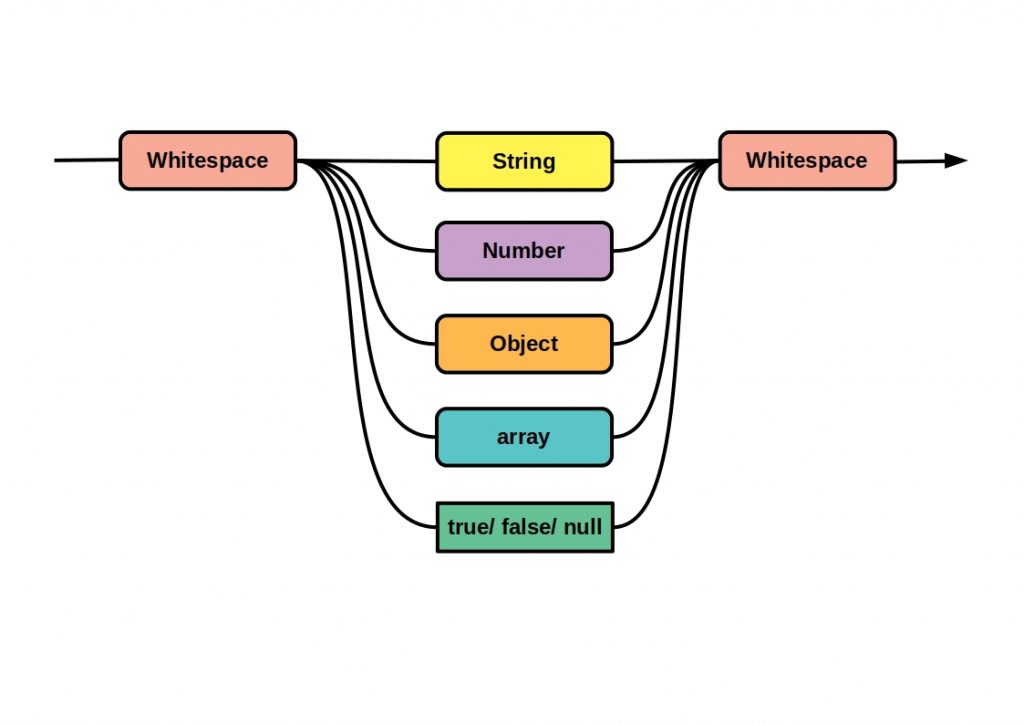

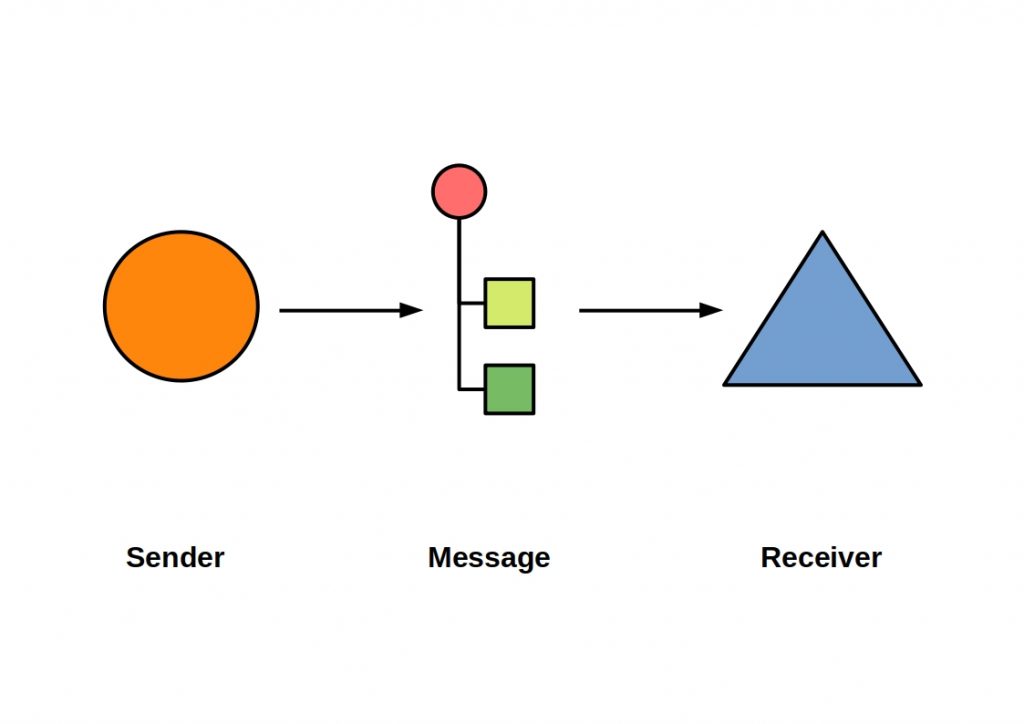

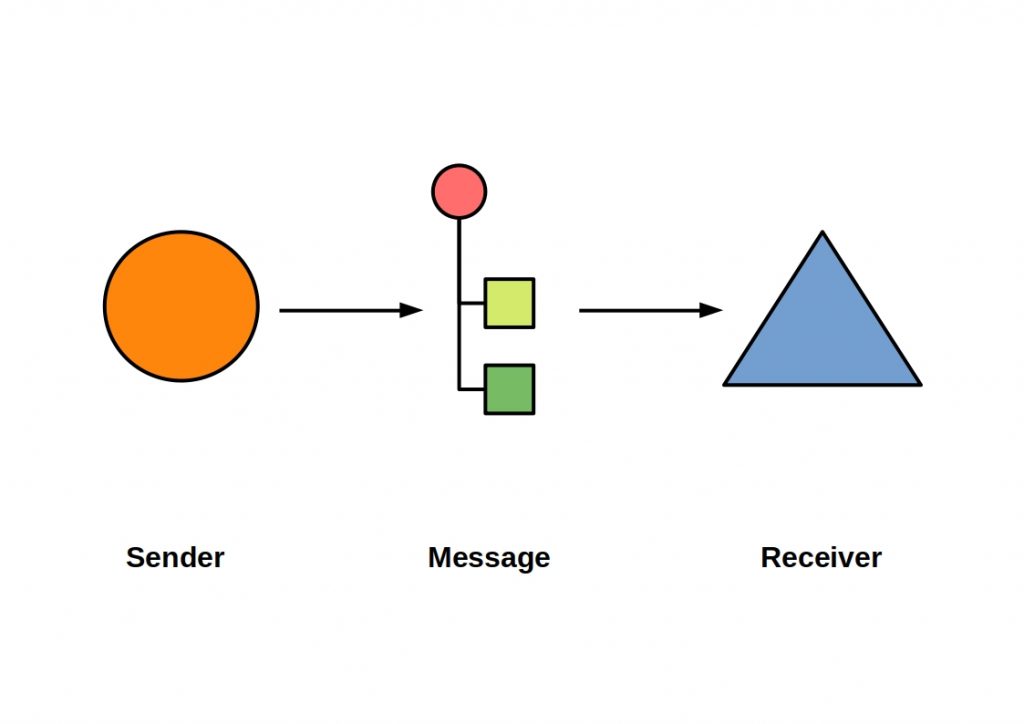

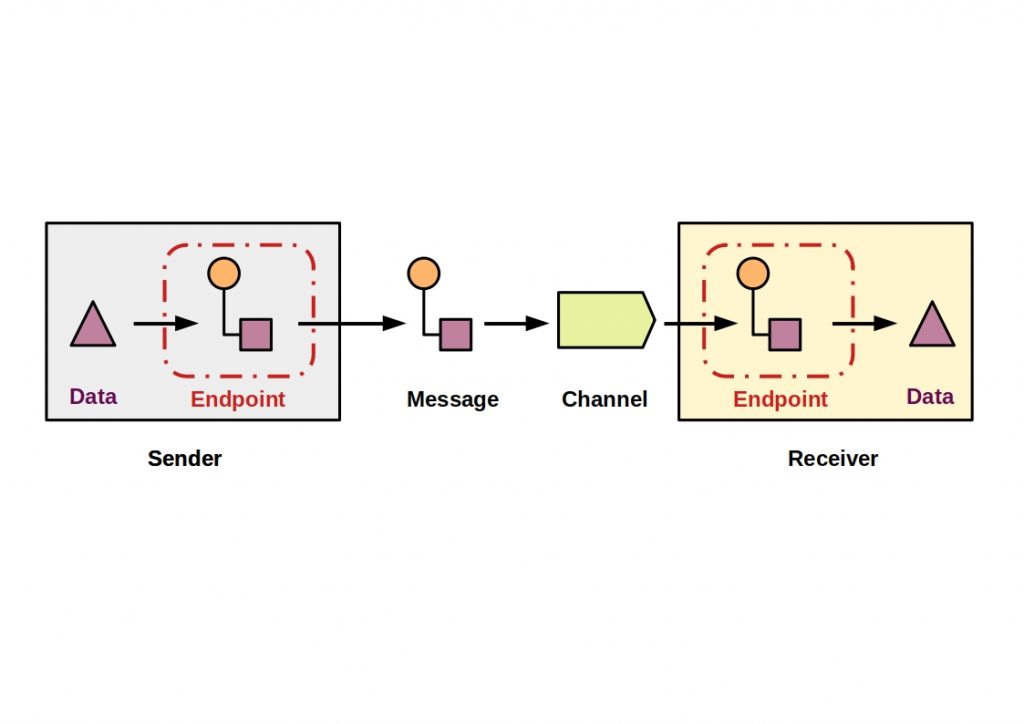

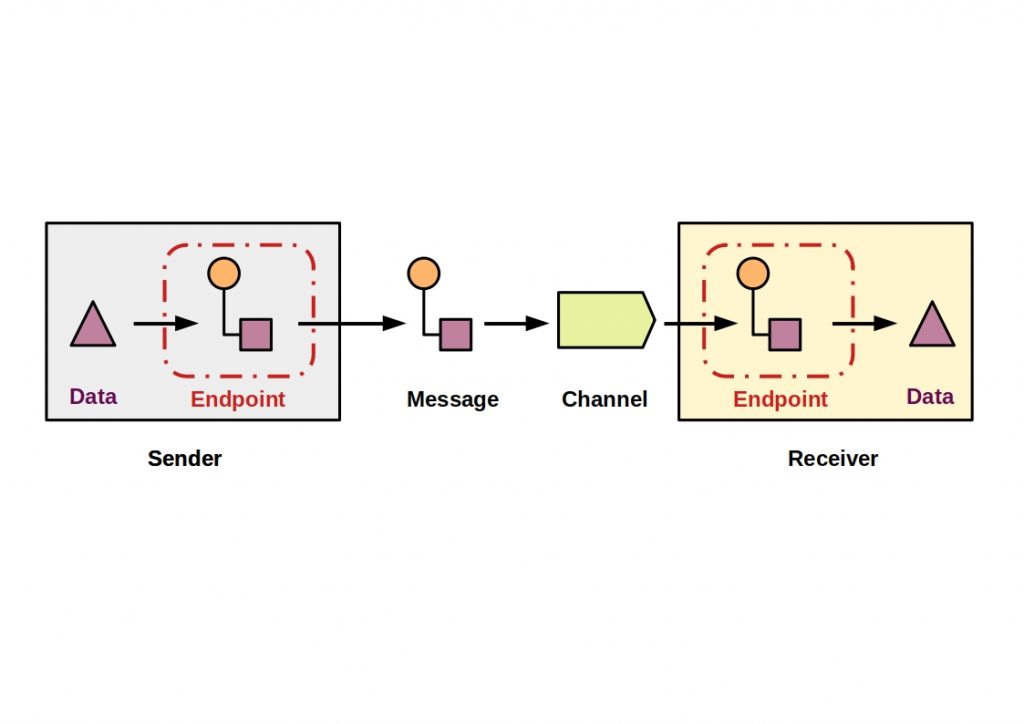

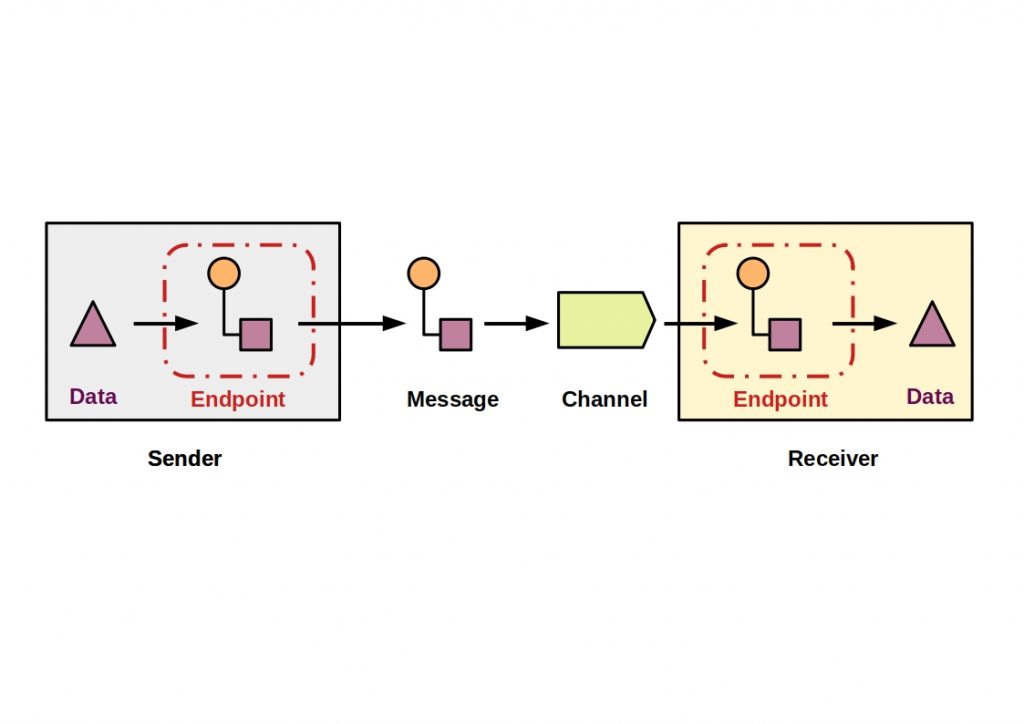

Data is packaged in messages and then transmitted from the sender to the receiver via a message channel. The following figure shows such a messaging system.

The communication is asynchronous, which means that both applications are decoupled from each other and therefore do not have to run simultaneously. The sender must build and send the message, while the receiver must read and unpack it.
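The following minimal sketch illustrates this flow. It assumes a message consists of metadata (headers) and a payload (body), and it uses an in-memory `queue.Queue` as a stand-in for a real message channel; the names `Message`, `send` and `receive` are hypothetical and not taken from any messaging product.

```python
import json
import queue
from dataclasses import dataclass, field


@dataclass
class Message:
    """A message: metadata in the headers, payload in the body."""
    body: bytes
    headers: dict = field(default_factory=dict)


# An in-memory FIFO queue stands in for the message channel (assumption).
channel = queue.Queue()


def send(data: dict) -> None:
    # Sender side: build the message by serializing the data, then hand it to the channel.
    msg = Message(body=json.dumps(data).encode("utf-8"),
                  headers={"content-type": "application/json"})
    channel.put(msg)


def receive() -> dict:
    # Receiver side: read the next message from the channel and unpack its body.
    msg = channel.get()
    return json.loads(msg.body.decode("utf-8"))


# The sender can finish before the receiver starts reading;
# the channel holds the message in between.
send({"order_id": 42, "item": "book"})
print(receive())  # {'order_id': 42, 'item': 'book'}
```

Because the channel buffers the message, sender and receiver never call each other directly, which is the decoupling described above.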

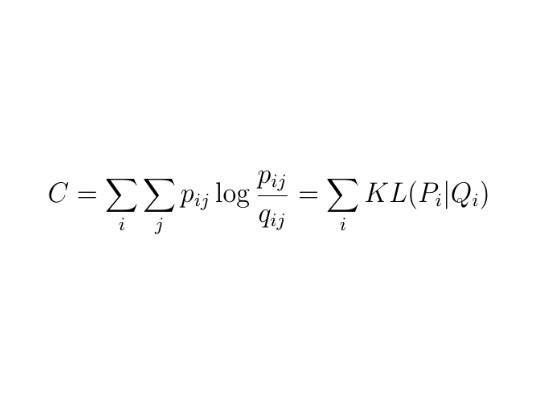

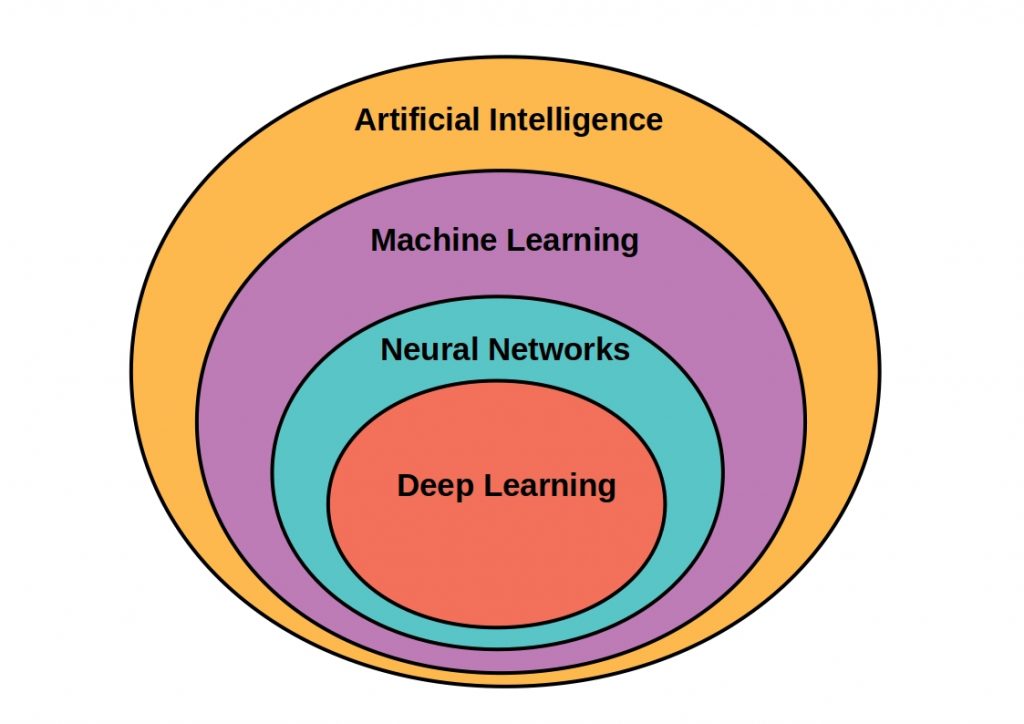

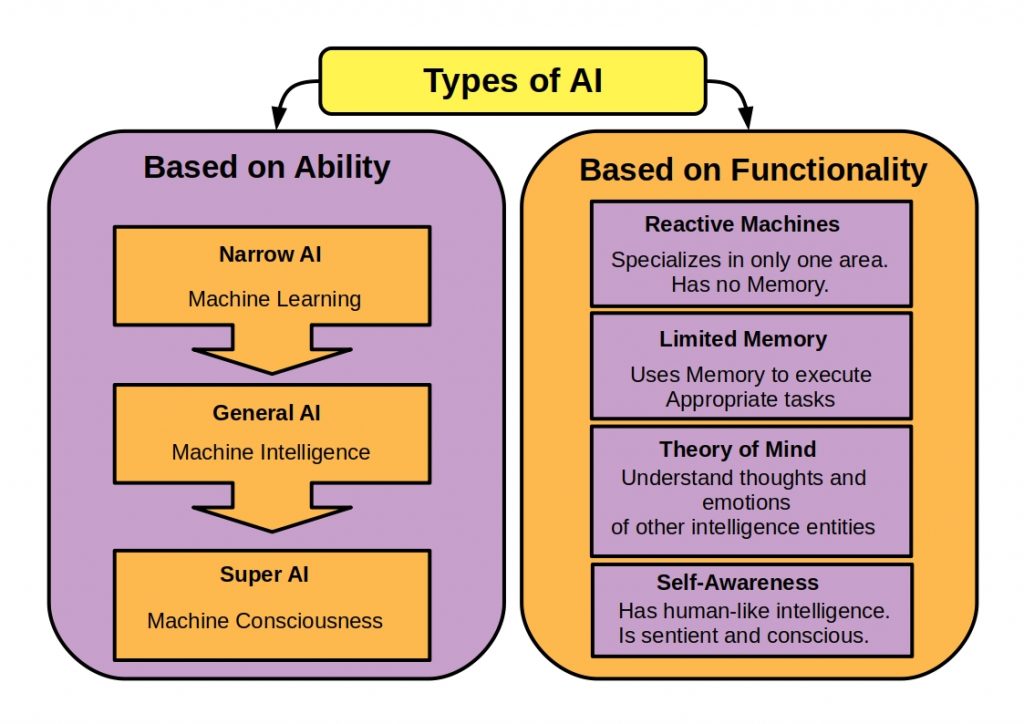

What are Messaging Patterns?

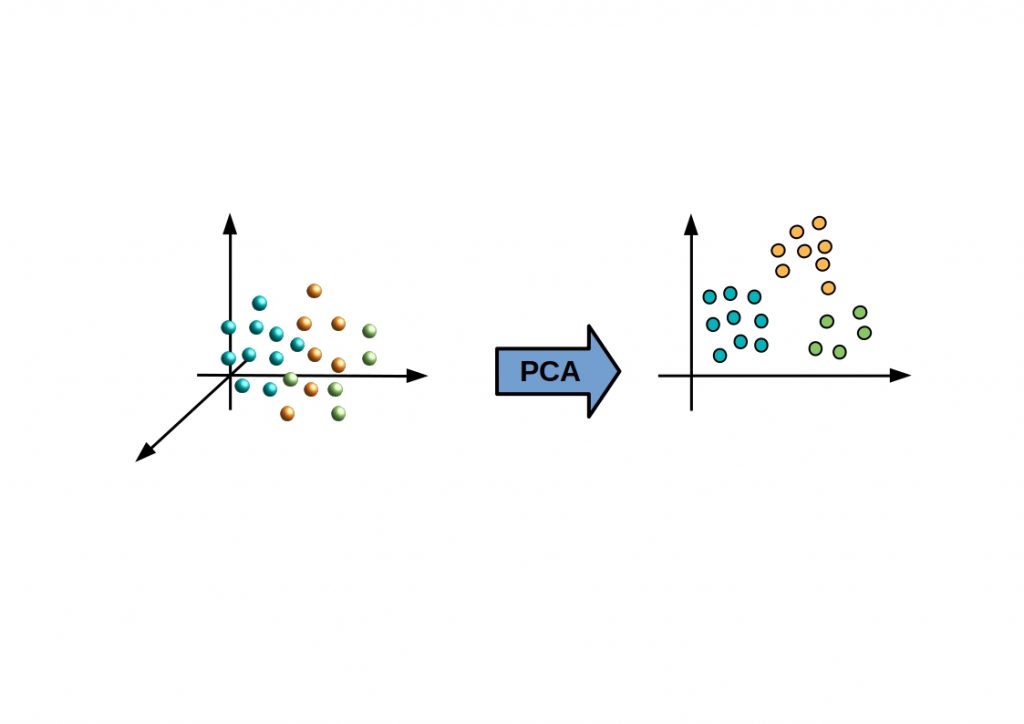

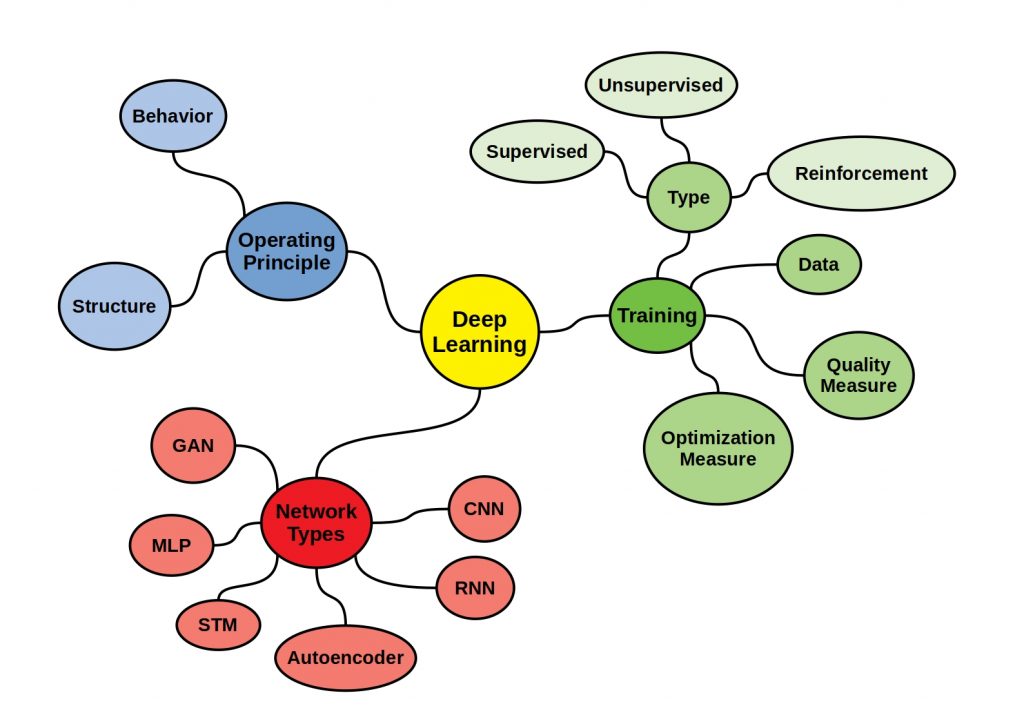

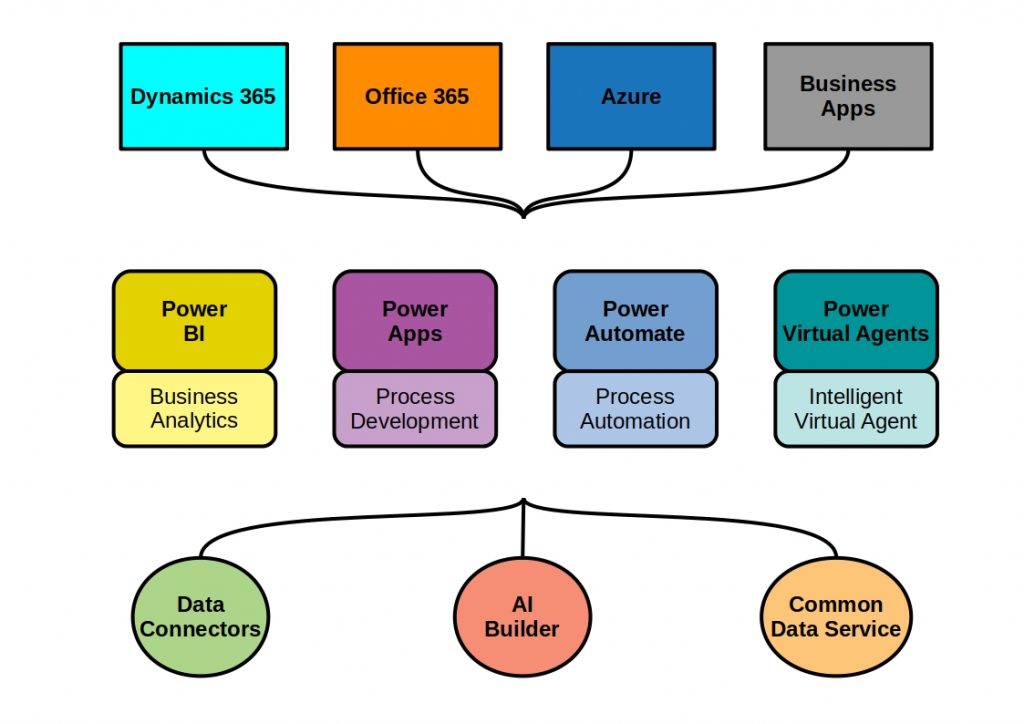

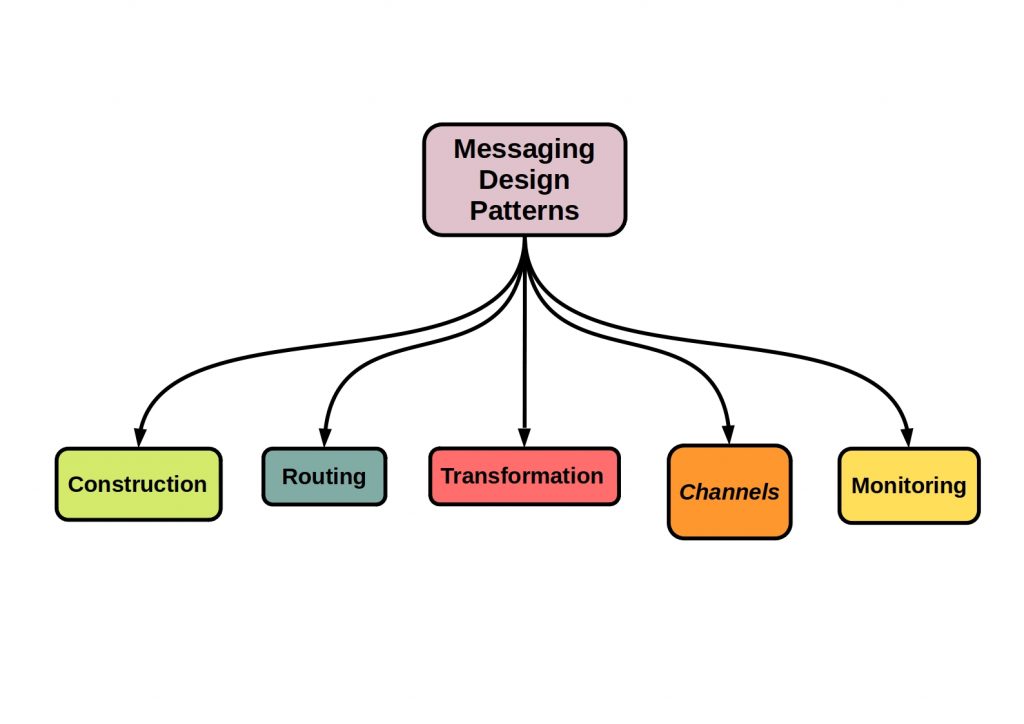

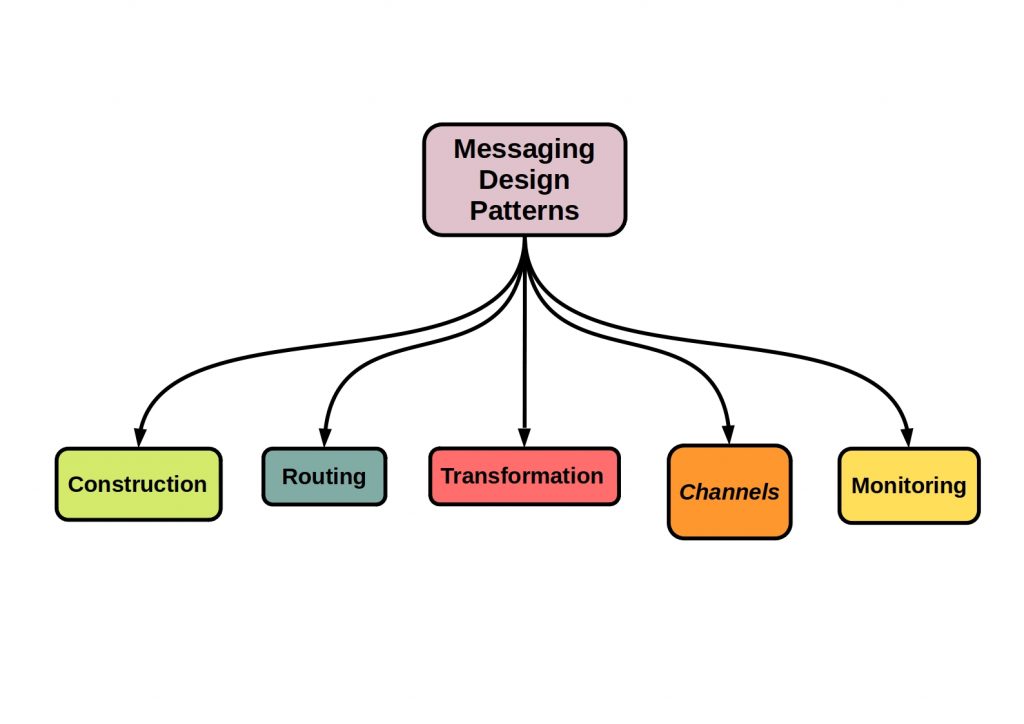

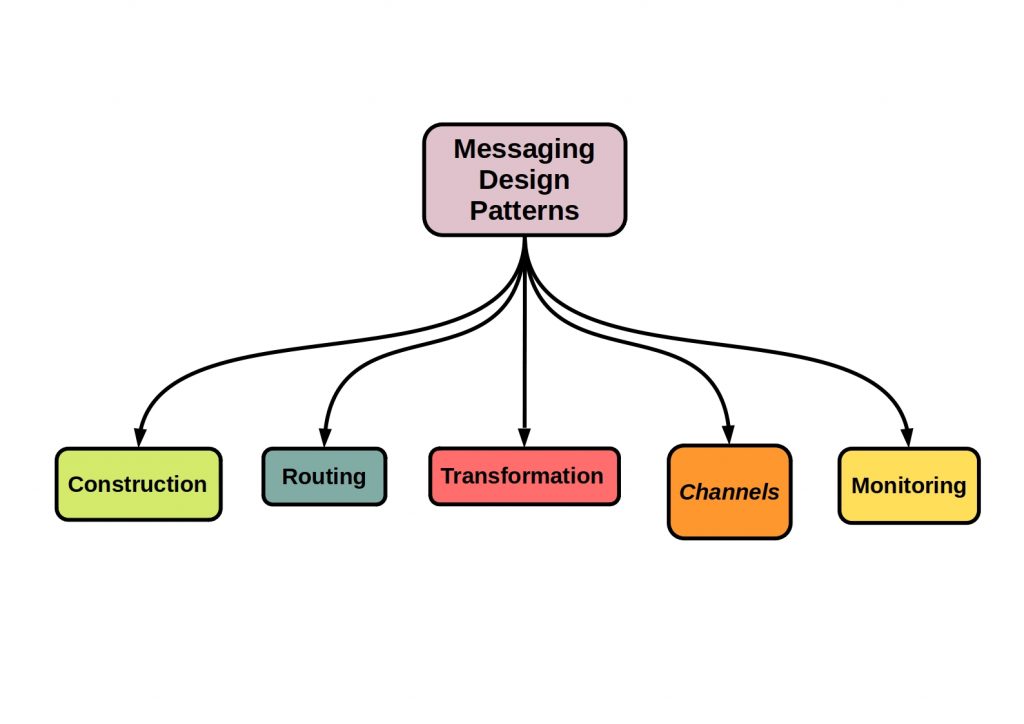

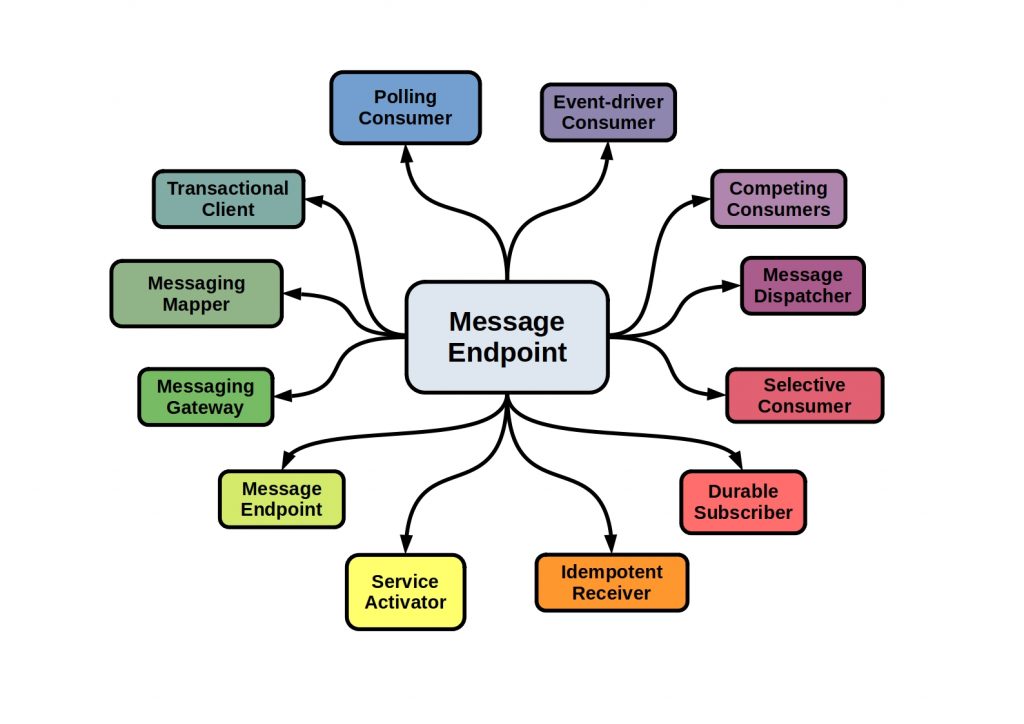

However, this form of message transmission is only one way of transferring information. The following figure shows the basic concepts of messaging design patterns.

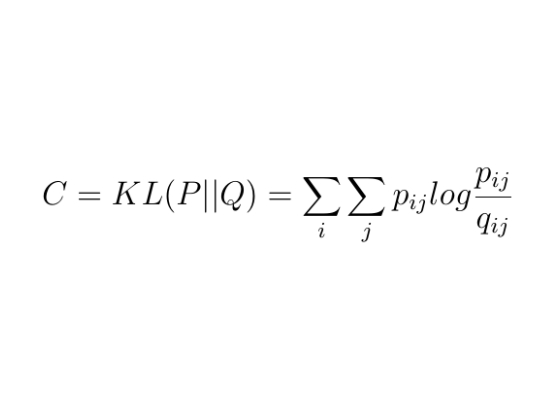

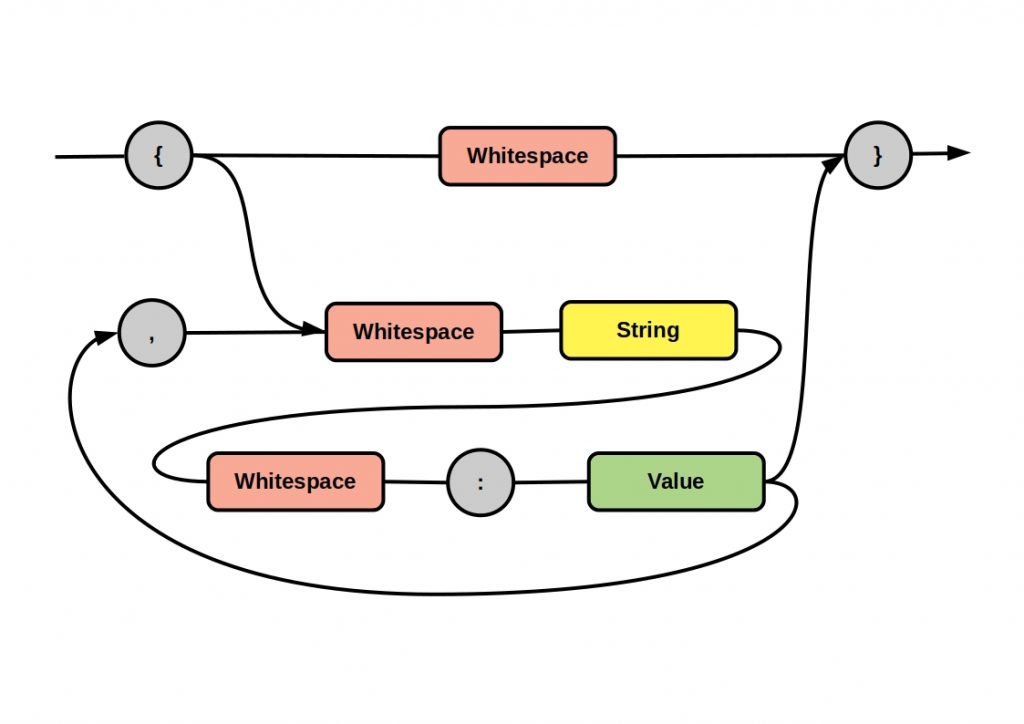

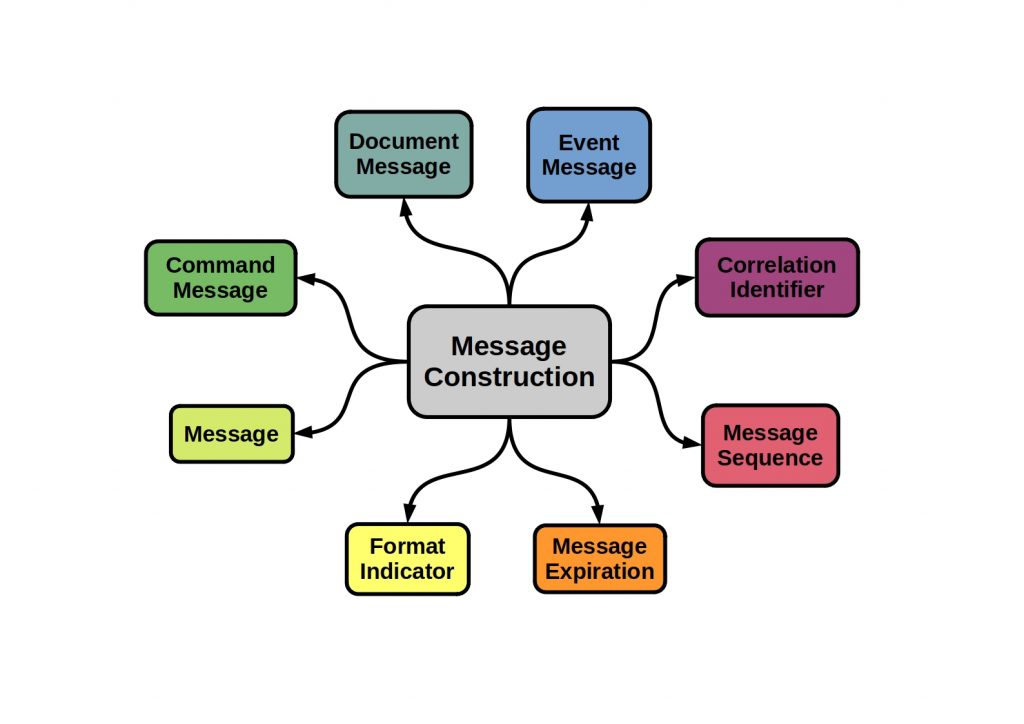

What is Message Construction?

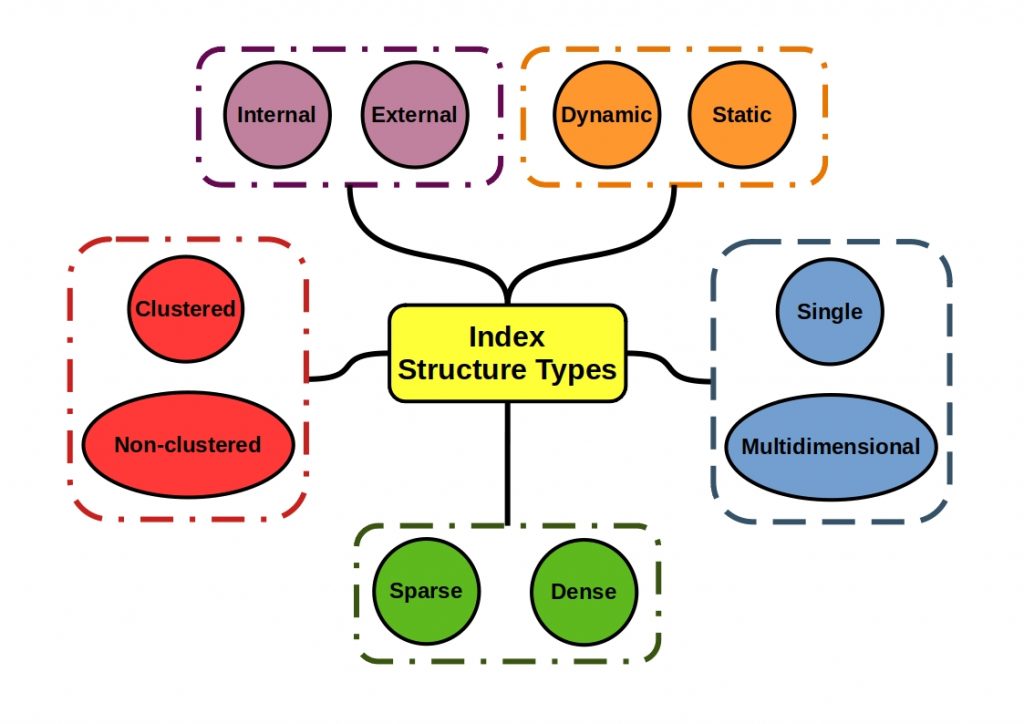

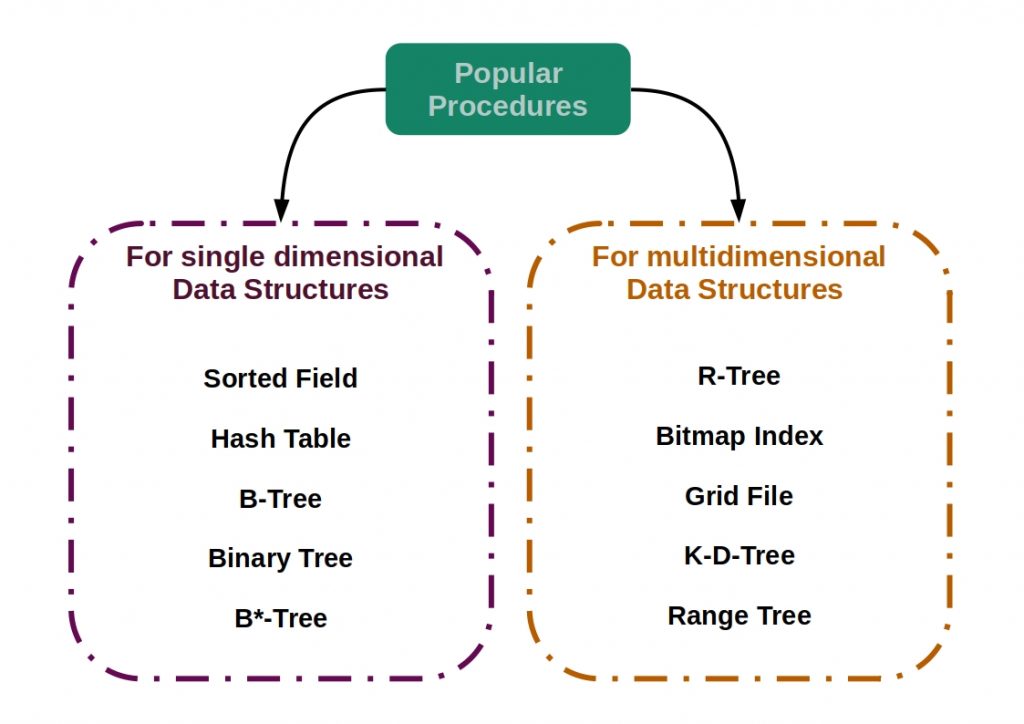

It is not enough to decide to use a message. A message can be constructed according to different architectural patterns, depending on the function it is meant to perform.

The following figure shows some of these patterns.

Message Construction – When do I use it?

Messaging can be used not only to send data from a sender to a receiver, but also to invoke a procedure in another application or to request a response from it.
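To make these uses concrete, here is a sketch of three message kinds: a document message (data only), a command message (asks the receiver to run a procedure) and request-reply (a command that also names a reply channel and carries a correlation ID for matching the answer). The in-memory queues, the header names `reply_to` and `correlation_id`, and the `add` procedure are illustrative assumptions, not a specific product's API.

```python
import queue
import uuid
from dataclasses import dataclass, field


@dataclass
class Message:
    body: dict
    headers: dict = field(default_factory=dict)


requests = queue.Queue()
replies = queue.Queue()
CHANNELS = {"replies": replies}  # hypothetical channel registry


def document_message(data: dict) -> Message:
    # Document message: carries data only; the receiver decides what to do with it.
    return Message(body=data, headers={"type": "document"})


def command_message(procedure: str, **args) -> Message:
    # Command message: asks the receiver to invoke a named procedure.
    return Message(body={"procedure": procedure, "args": args},
                   headers={"type": "command"})


def request(procedure: str, **args) -> str:
    # Request-reply: name the channel for the answer and add a correlation ID.
    msg = command_message(procedure, **args)
    msg.headers["reply_to"] = "replies"
    msg.headers["correlation_id"] = str(uuid.uuid4())
    requests.put(msg)
    return msg.headers["correlation_id"]


# Hypothetical procedures exposed by the receiving application.
PROCEDURES = {"add": lambda a, b: a + b}


def serve_one() -> None:
    # Receiver: run the requested procedure and send the result to the reply channel.
    msg = requests.get()
    result = PROCEDURES[msg.body["procedure"]](**msg.body["args"])
    reply = Message(body={"result": result},
                    headers={"correlation_id": msg.headers["correlation_id"]})
    CHANNELS[msg.headers["reply_to"]].put(reply)


corr = request("add", a=2, b=3)
serve_one()
reply = replies.get()
print(reply.body["result"], reply.headers["correlation_id"] == corr)  # 5 True
```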

The right message structure provides a degree of flexibility, which makes messages considerably more robust against future changes.
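One way to obtain this robustness is to let each message state its own format, so that a receiver can support old and new senders side by side. The sketch below assumes a hypothetical `format_version` header and two made-up customer formats; messages without the header are treated as the oldest format.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    body: dict
    headers: dict = field(default_factory=dict)


def parse_v1(body: dict) -> dict:
    # Version 1 (hypothetical) sends the full name in a single field.
    first, _, last = body["name"].partition(" ")
    return {"first_name": first, "last_name": last}


def parse_v2(body: dict) -> dict:
    # Version 2 (hypothetical) sends the name parts separately.
    return {"first_name": body["first_name"], "last_name": body["last_name"]}


PARSERS = {1: parse_v1, 2: parse_v2}


def read_customer(msg: Message) -> dict:
    # Dispatch on the format indicator; a missing header means version 1.
    version = msg.headers.get("format_version", 1)
    try:
        parser = PARSERS[version]
    except KeyError:
        raise ValueError(f"unsupported format version: {version}") from None
    return parser(msg.body)


old = Message(body={"name": "Ada Lovelace"})
new = Message(body={"first_name": "Ada", "last_name": "Lovelace"},
              headers={"format_version": 2})
print(read_customer(old) == read_customer(new))  # True
```

Adding a version 3 then means registering one more parser instead of changing every sender at once.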

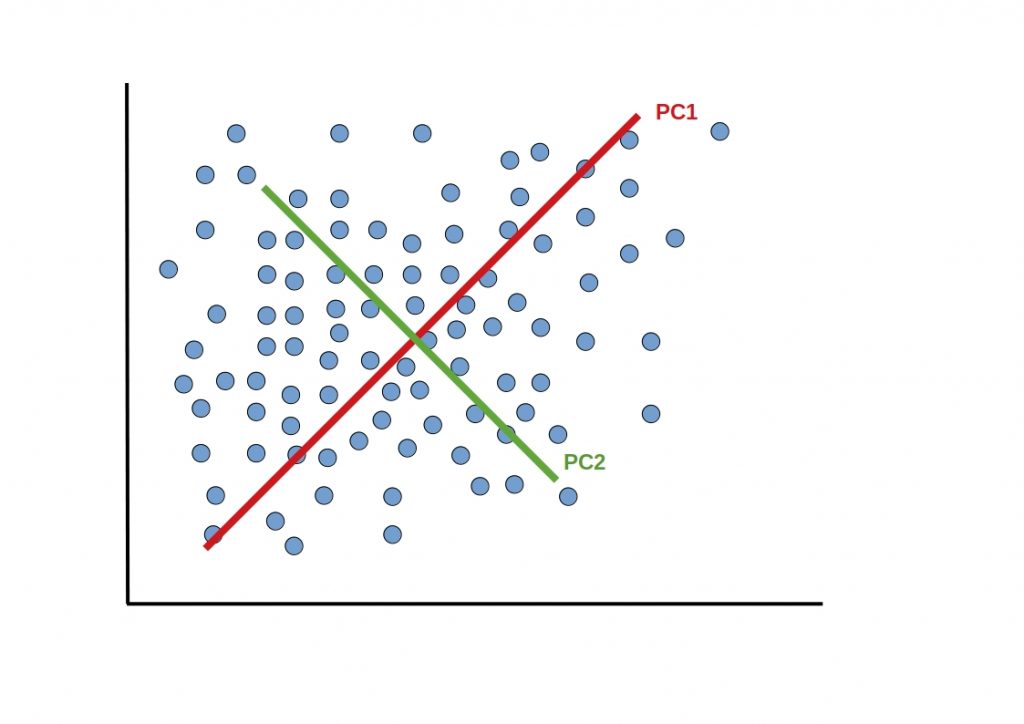

What is Message Routing?

A message router connects the message channels in a messaging system (channels are discussed in more detail below). The router acts like a filter: it controls where a message is forwarded but does not change the message itself. A message is forwarded to another channel only if all predefined conditions are met.
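A small sketch of this behavior: each route holds a list of conditions and a target channel, and a message is forwarded unchanged to the first channel whose conditions are all satisfied. Plain Python lists stand in for channels, and the route names and conditions are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Message:
    body: dict
    headers: dict = field(default_factory=dict)


Condition = Callable[[Message], bool]


class Router:
    def __init__(self) -> None:
        self.routes: list = []  # entries: (name, conditions, channel)

    def add_route(self, name: str, conditions: list, channel: list) -> None:
        self.routes.append((name, conditions, channel))

    def route(self, msg: Message) -> Optional[str]:
        # Forward the message to the first channel whose conditions ALL hold.
        for name, conditions, channel in self.routes:
            if all(cond(msg) for cond in conditions):
                channel.append(msg)  # same object: the router does not alter it
                return name
        return None  # no route matched, so the message is not forwarded


express_orders: list = []
large_orders: list = []

router = Router()
is_order: Condition = lambda m: m.headers.get("type") == "order"
router.add_route("express", [is_order, lambda m: m.body.get("express", False)],
                 express_orders)
router.add_route("large", [is_order, lambda m: m.body.get("total", 0) > 1000],
                 large_orders)

print(router.route(Message({"express": True, "total": 50}, {"type": "order"})))  # express
print(router.route(Message({"total": 20}, {"type": "order"})))  # None
```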

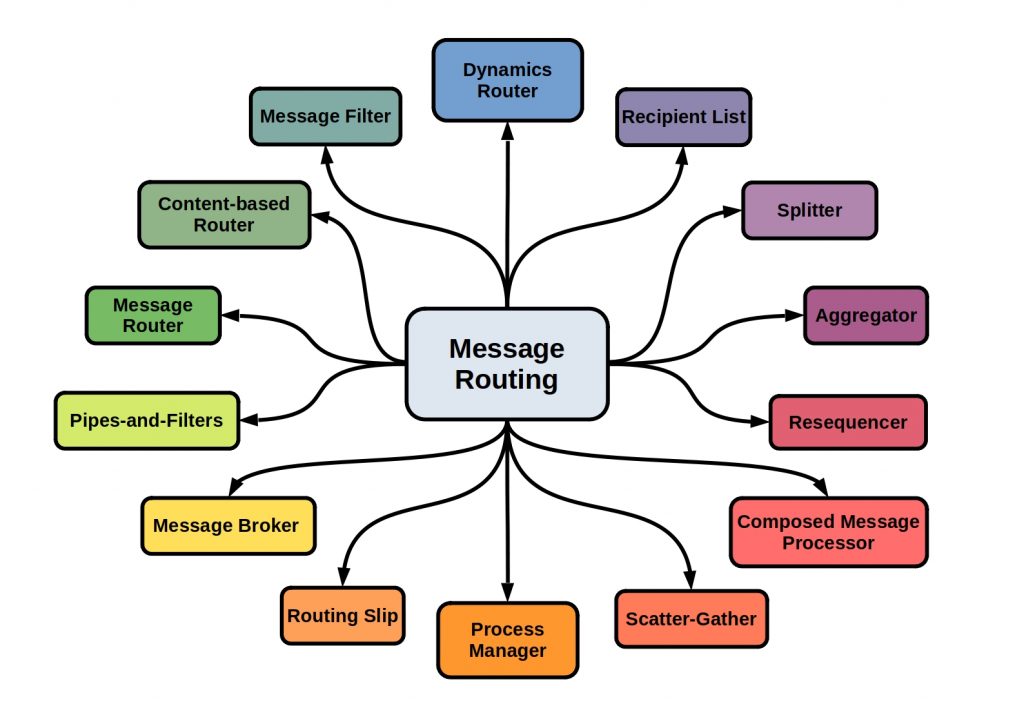

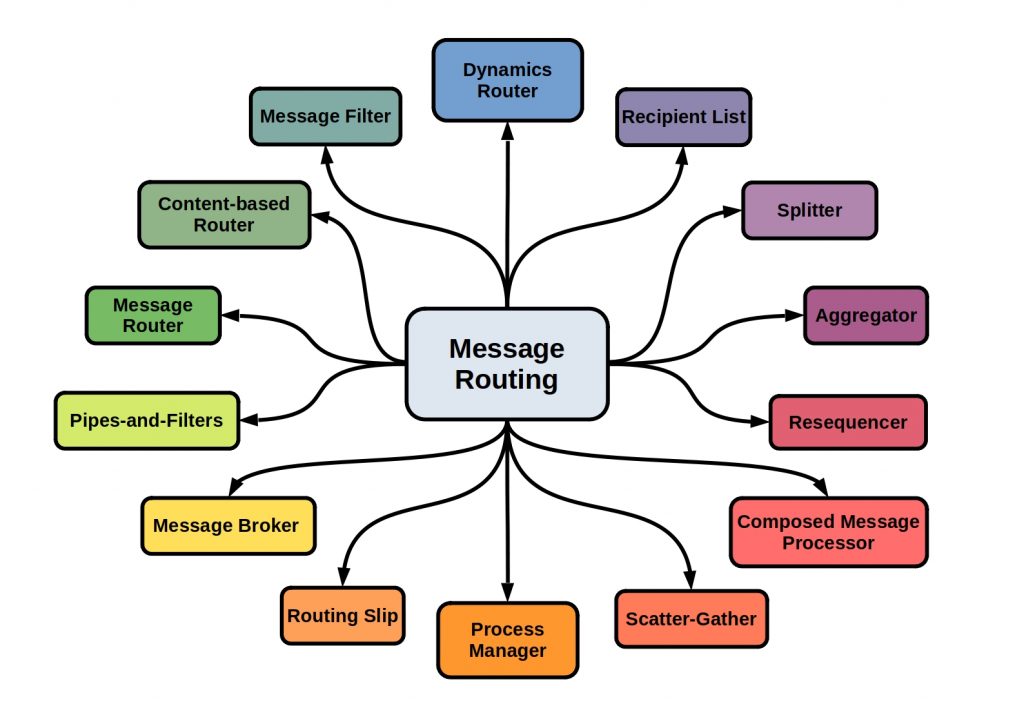

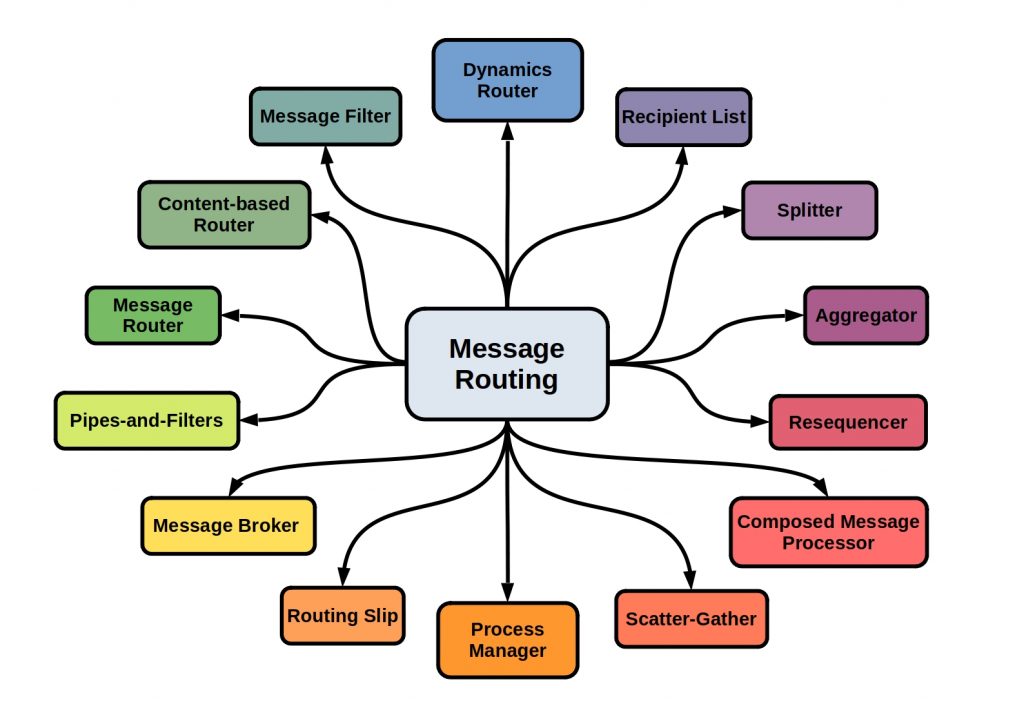

The following figure lists some specific message router types.

When do I use message routing and how?

For example, messages can be forwarded to a dynamically determined list of recipients, or a message can be split into parts that are processed individually and later recombined.
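The sketch below shows both ideas under simple assumptions: a recipient list computed at routing time from a hypothetical `subscriptions` table, and a splitter/aggregator pair that breaks an order into one message per line and reassembles the processed lines, even when they arrive out of order. Messages are plain dicts, and all field names are illustrative.

```python
from collections import defaultdict

# Hypothetical subscriptions; they could change at runtime.
subscriptions = {"billing": {"EU", "US"}, "eu-warehouse": {"EU"}, "us-warehouse": {"US"}}
channels = defaultdict(list)


def recipient_list(msg: dict) -> list:
    # Recipients are determined dynamically when the message is routed.
    recipients = [name for name, regions in subscriptions.items()
                  if msg["region"] in regions]
    for name in recipients:
        channels[name].append(msg)
    return recipients


def split(order: dict) -> list:
    # Splitter: one message per order line, tagged so the parts can be recombined.
    count = len(order["lines"])
    return [{"order_id": order["order_id"], "seq": i, "count": count, "line": line}
            for i, line in enumerate(order["lines"])]


class Aggregator:
    # Collects parts per order and emits one combined message once all have arrived.
    def __init__(self) -> None:
        self.pending = defaultdict(dict)

    def add(self, part: dict):
        parts = self.pending[part["order_id"]]
        parts[part["seq"]] = part
        if len(parts) < part["count"]:
            return None  # still waiting for more parts
        del self.pending[part["order_id"]]
        return {"order_id": part["order_id"],
                "lines": [parts[i]["line"] for i in range(part["count"])]}


print(recipient_list({"region": "EU", "order_id": 7}))  # ['billing', 'eu-warehouse']

parts = split({"order_id": 7, "lines": ["book", "pen", "lamp"]})
# Each part is processed on its own (here: upper-cased) and arrives out of order.
processed = [dict(p, line=p["line"].upper()) for p in reversed(parts)]
agg = Aggregator()
results = [agg.add(p) for p in processed]
print(results[-1])  # {'order_id': 7, 'lines': ['BOOK', 'PEN', 'LAMP']}
```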

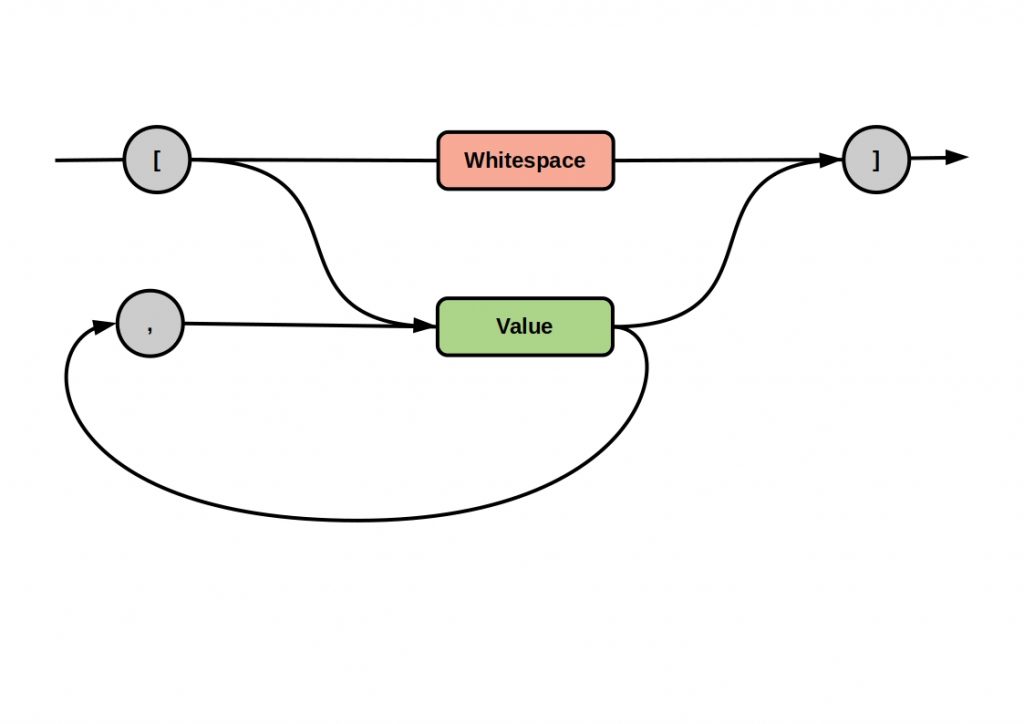

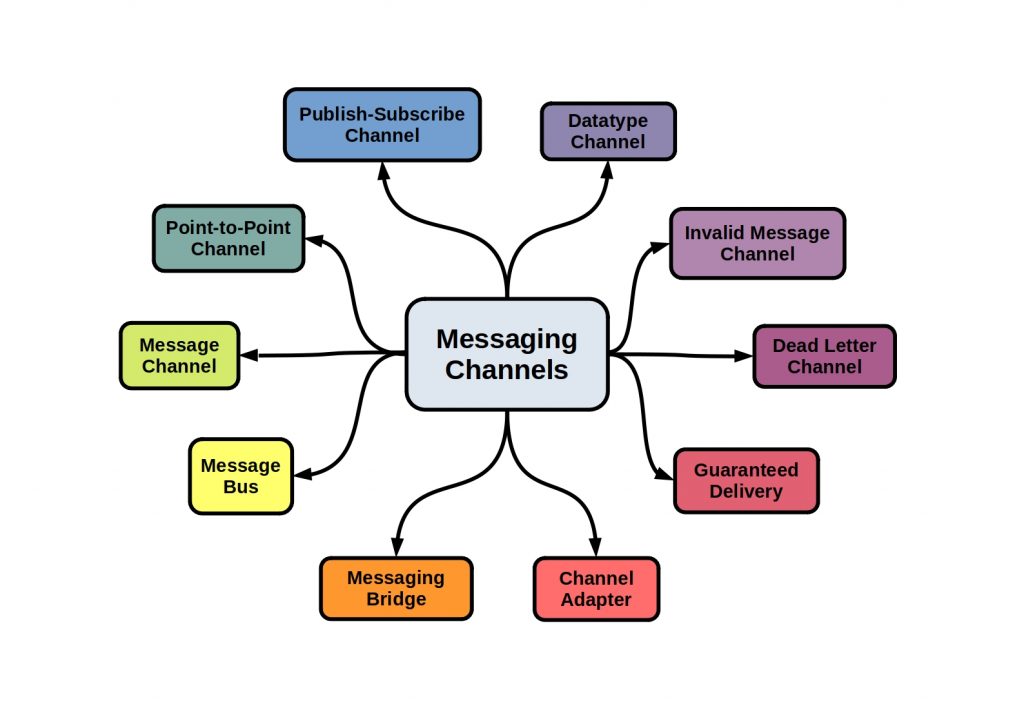

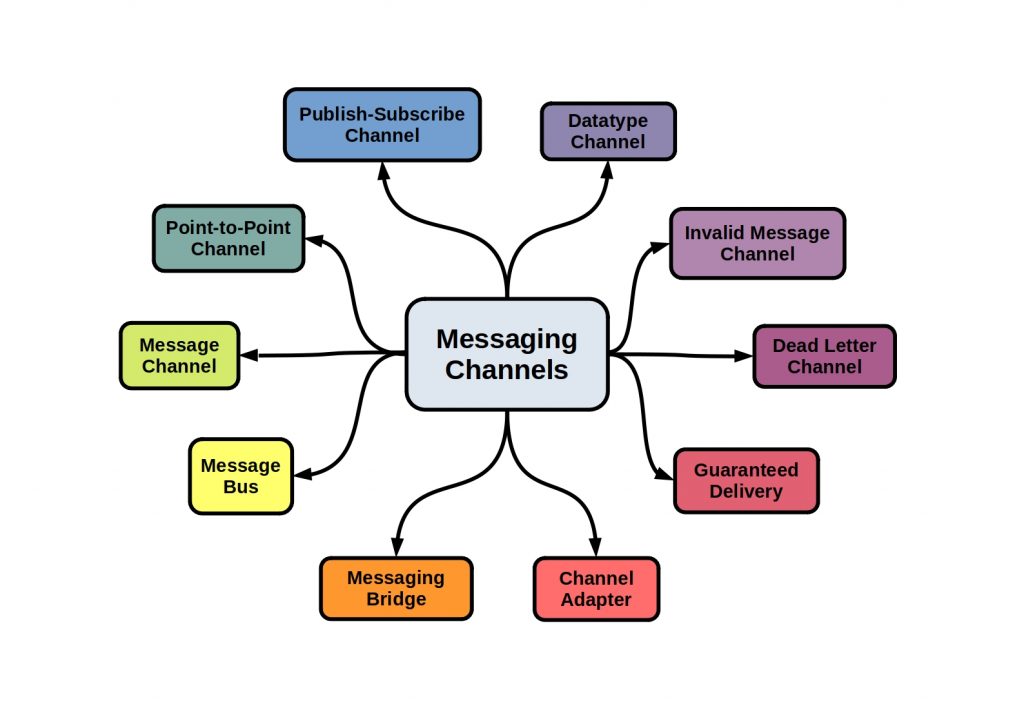

What are Messaging Channels?

In a messaging system, the exchange of information is not unregulated. The sender passes the message to a so-called message channel, and the receiver reads from a specific message channel.

This decouples sender and receiver. Nevertheless, by choosing a specific channel, the sender determines which application receives the data without having to know that application.

The right choice of message channel depends on your architecture: which channel should be addressed, and when?

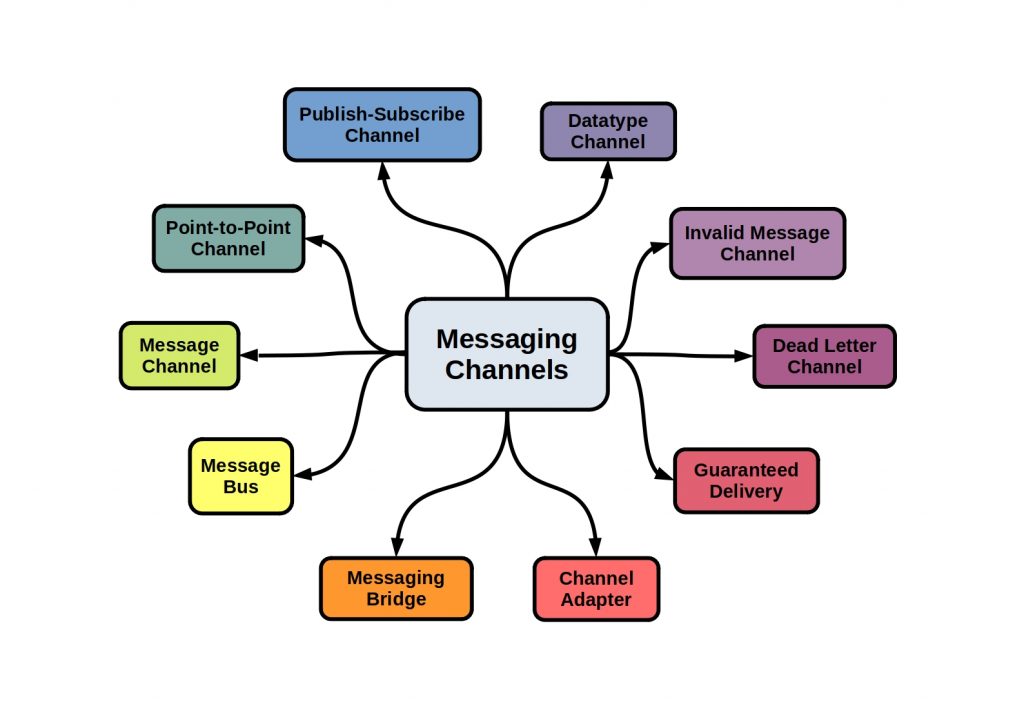

The following figure lists some such channel types.

What are the basic differences between the channel types?

Basically, the channel types can be divided into two main types.

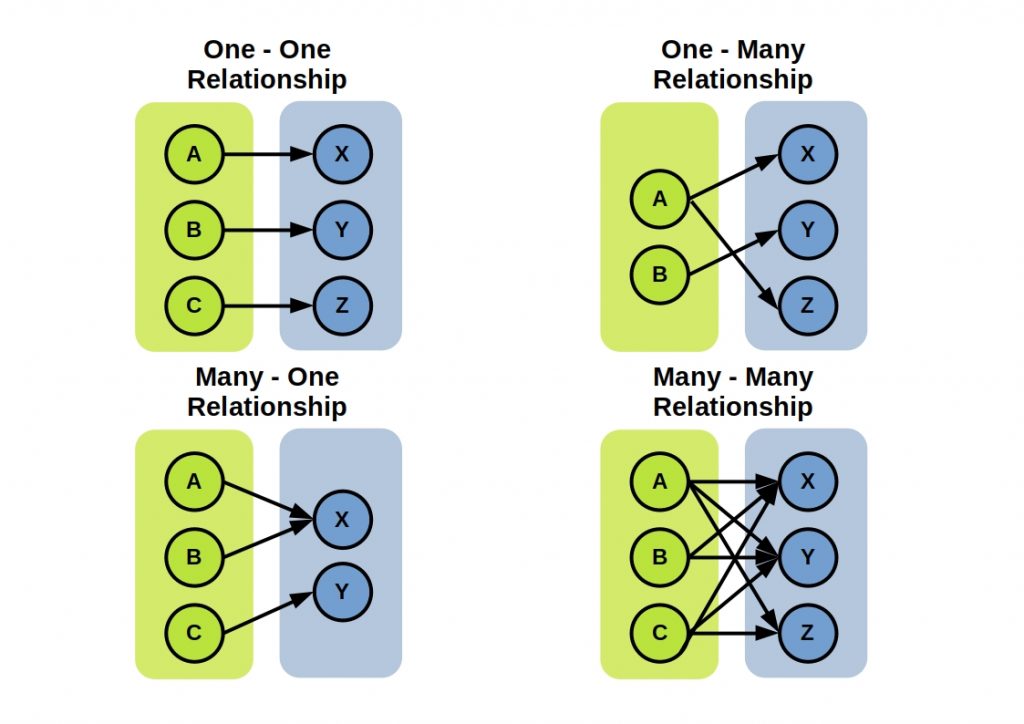

A distinction can be made between a point-to-point channel, where each message is delivered to exactly one receiver, and a publish-subscribe channel, where each message from a sender is delivered to all subscribed receivers.
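The difference can be sketched with in-memory queues. This is an illustrative model, not a broker implementation: the point-to-point channel keeps one shared queue, so a message is consumed once, while the publish-subscribe channel gives every subscriber its own queue and copies each message into all of them. The class names are hypothetical.

```python
import queue


class PointToPointChannel:
    # Each message is consumed by exactly one receiver, even if several are listening.
    def __init__(self) -> None:
        self._q = queue.Queue()

    def send(self, msg) -> None:
        self._q.put(msg)

    def receive(self):
        return self._q.get_nowait()  # raises queue.Empty when nothing is left


class PublishSubscribeChannel:
    # Each published message is delivered to every subscriber's own queue.
    def __init__(self) -> None:
        self._subscribers = []

    def subscribe(self) -> queue.Queue:
        q = queue.Queue()
        self._subscribers.append(q)
        return q

    def publish(self, msg) -> None:
        for q in self._subscribers:
            q.put(msg)


p2p = PointToPointChannel()
p2p.send("job-1")
print(p2p.receive())  # job-1
try:
    p2p.receive()  # a second receiver finds nothing: the message was consumed once
except queue.Empty:
    print("empty")

pubsub = PublishSubscribeChannel()
a, b = pubsub.subscribe(), pubsub.subscribe()
pubsub.publish("price-changed")
print(a.get_nowait(), b.get_nowait())  # price-changed price-changed
```

Note that in this model a subscriber only receives messages published after it subscribed.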

What is a Messaging Endpoint?

To connect a sender or receiver application to a message channel, an intermediary is needed. This client is called a messaging endpoint.

The following figure shows the principle of communication via messaging endpoints.

On the sender side, the endpoint accepts the data to be sent, builds a message from it and sends it via a specific message channel. On the receiver side, the message is received via another endpoint, which extracts the data again. An application can use several endpoints, but a single endpoint either sends or receives; it does not do both.
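A minimal sketch of the two endpoint roles, assuming an in-memory `queue.Queue` as the channel and JSON as the message format. The classes `SendingEndpoint` and `ReceivingEndpoint` are hypothetical; the point is that application code only handles plain data, while the endpoints build and unpack messages.

```python
import json
import queue


class SendingEndpoint:
    """Accepts application data, builds a message and sends it on one channel."""

    def __init__(self, channel: queue.Queue) -> None:
        self._channel = channel

    def send(self, data: dict) -> None:
        message = {"headers": {"content-type": "application/json"},
                   "body": json.dumps(data)}
        self._channel.put(message)


class ReceivingEndpoint:
    """Receives messages from one channel and extracts the application data."""

    def __init__(self, channel: queue.Queue) -> None:
        self._channel = channel

    def receive(self, timeout: float = 1.0) -> dict:
        message = self._channel.get(timeout=timeout)
        return json.loads(message["body"])


orders = queue.Queue()
# The application code sees only plain dicts; the endpoints hide the messaging.
SendingEndpoint(orders).send({"order_id": 1})
print(ReceivingEndpoint(orders).receive())  # {'order_id': 1}
```

Keeping sending and receiving in separate classes mirrors the rule that one endpoint performs only one of the two roles.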

The following figure lists some endpoint types.

When do I choose which endpoint?

Receiving messages in particular can be difficult and can overload a server. Controlling, and where necessary throttling, the processing of client requests is therefore crucial. Proven approaches include buffering requests in processing queues and dynamically adjusting the number of consumers to the volume of requests.
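Both approaches can be sketched together: a queue buffers incoming requests so that consumers work at their own pace, and several competing consumers take messages from that queue, with their number derived from the backlog. The scaling rule in `desired_consumers` (one consumer per 10 queued messages, between 1 and 8) is an arbitrary assumption chosen for illustration.

```python
import queue
import threading


def desired_consumers(backlog: int, per_consumer: int = 10,
                      minimum: int = 1, maximum: int = 8) -> int:
    # Scale the number of consumers with the queue backlog, within fixed bounds.
    needed = -(-backlog // per_consumer)  # ceiling division
    return max(minimum, min(maximum, needed))


def run_consumers(work: queue.Queue, handle, count: int) -> None:
    # Competing consumers: several threads take messages from the same queue,
    # and each message is handled by exactly one of them.
    def worker() -> None:
        while True:
            try:
                msg = work.get_nowait()
            except queue.Empty:
                return  # backlog drained: this consumer stops
            handle(msg)
            work.task_done()

    threads = [threading.Thread(target=worker) for _ in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


work = queue.Queue()
for i in range(35):
    work.put(i)

n = desired_consumers(work.qsize())  # 35 queued messages -> 4 consumers
processed = []
lock = threading.Lock()


def handle(msg) -> None:
    with lock:
        processed.append(msg)


run_consumers(work, handle, n)
print(n, sorted(processed) == list(range(35)))  # 4 True
```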

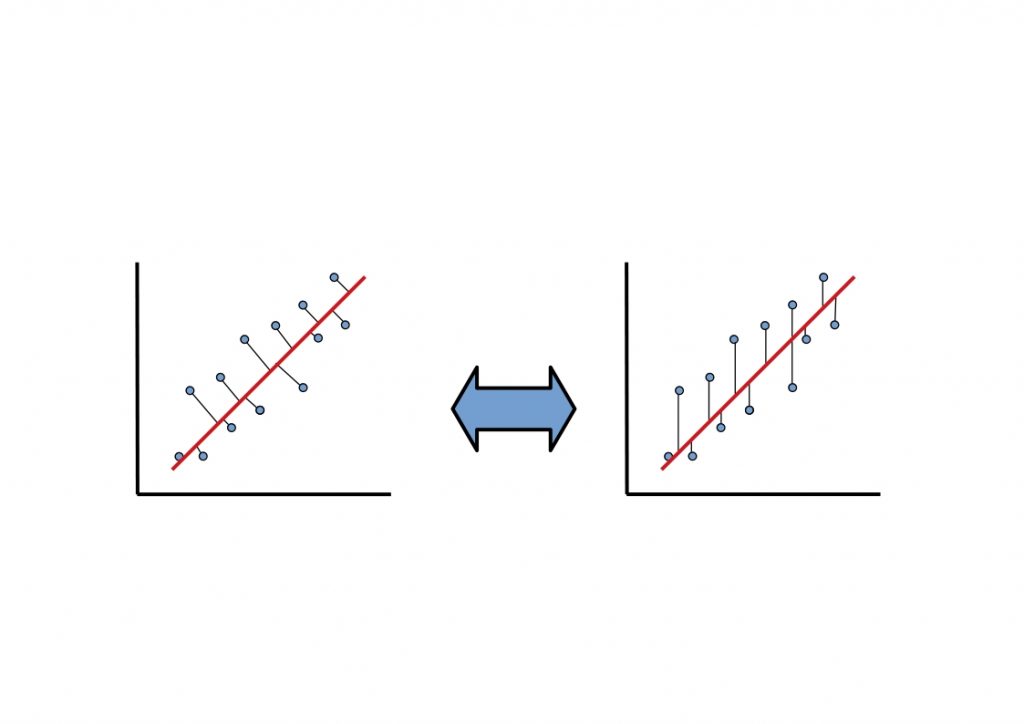

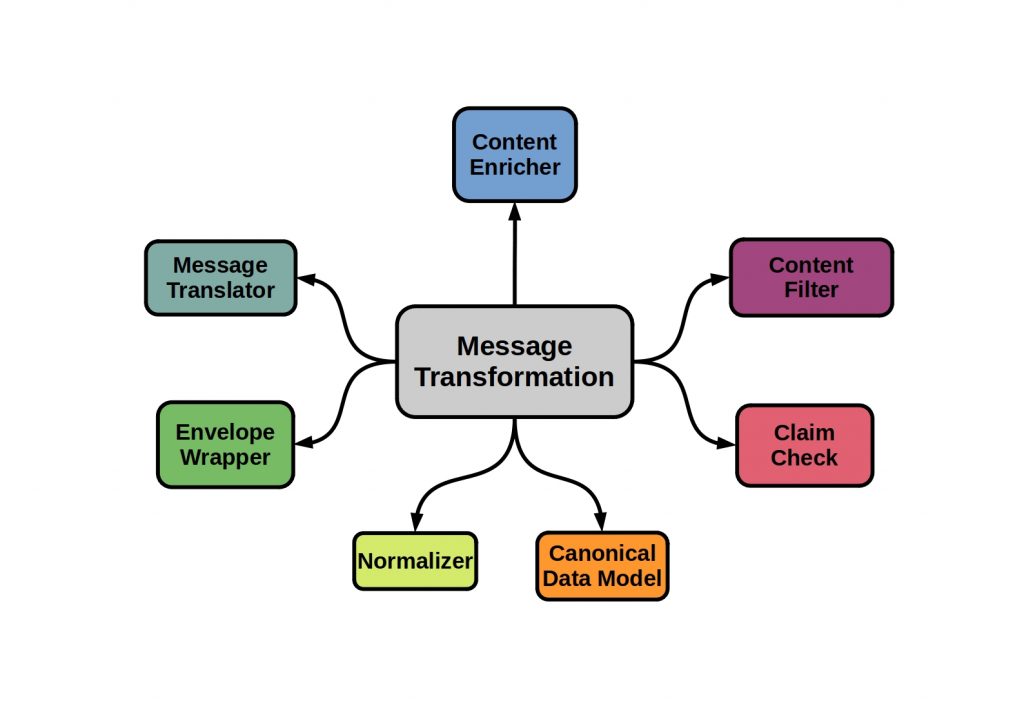

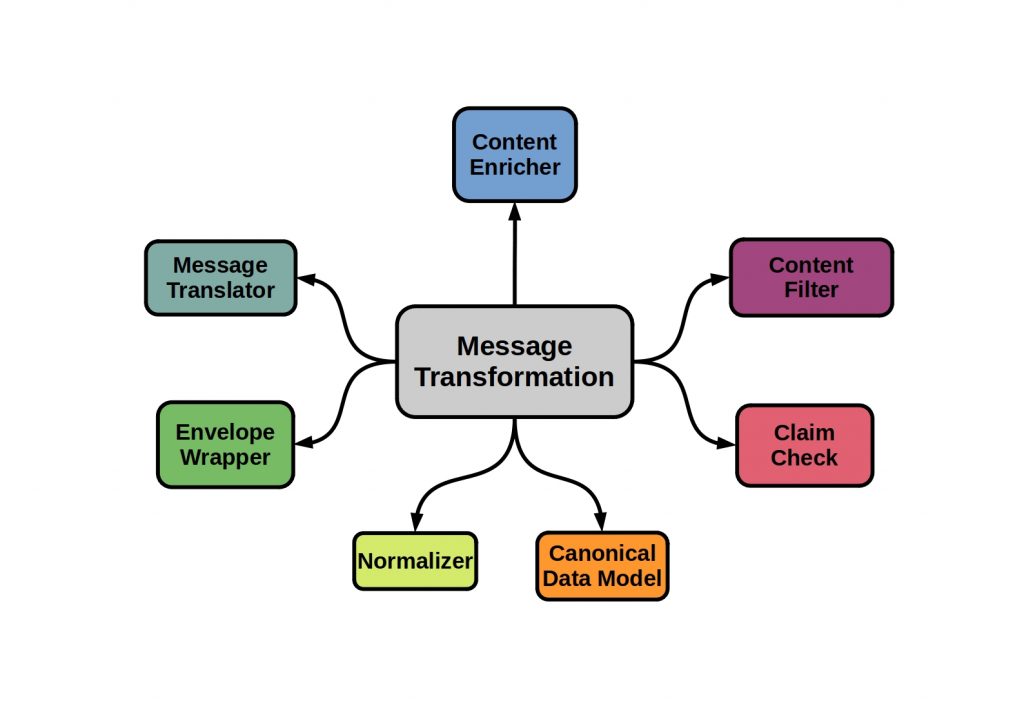

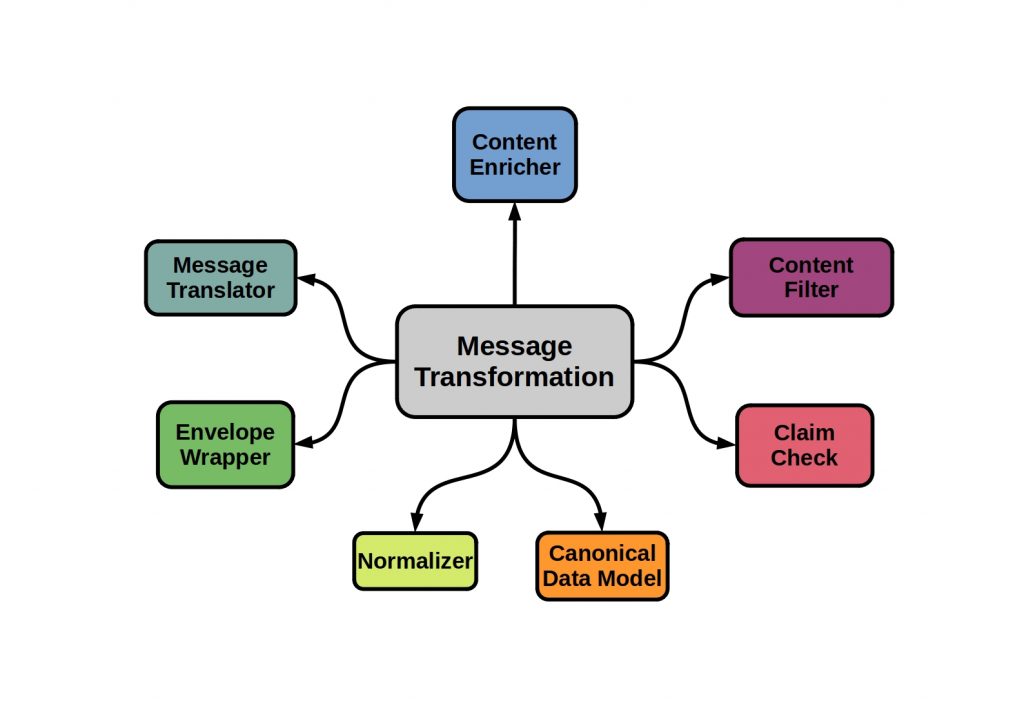

What is Message Transformation?

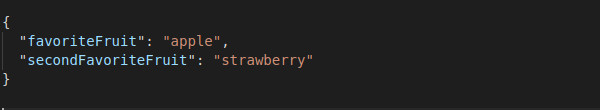

If the data format has to change when two applications exchange data, a message transformation converts between the formats, so that the applications remain decoupled from each other's data formats.

This translation process can be understood as two systems running in parallel, in which the actual message data is handled separately from the metadata.
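As an illustration, the sketch below translates an order from a hypothetical sender format (`order_id`, `amount_eur`) into a hypothetical receiver format (`orderNumber`, `amountCents`). The body is mapped field by field, while the header metadata is carried over separately, with only the format tag updated. All field and format names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    body: dict
    headers: dict = field(default_factory=dict)


def translate_order(msg: Message) -> Message:
    # Body: map the sender's field names and units to the receiver's format.
    body = {
        "orderNumber": str(msg.body["order_id"]),
        "amountCents": round(msg.body["amount_eur"] * 100),
    }
    # Headers: metadata is copied separately; only the format tag changes.
    headers = dict(msg.headers, format="receiver-v1")
    return Message(body=body, headers=headers)


src = Message(body={"order_id": 17, "amount_eur": 19.99},
              headers={"correlation_id": "abc", "format": "sender-v1"})
out = translate_order(src)
print(out.body, out.headers)
# {'orderNumber': '17', 'amountCents': 1999} {'correlation_id': 'abc', 'format': 'receiver-v1'}
```

The original message is left untouched, so the translator can sit between the two channels without either application knowing about the other's format.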

The following figure shows some message transformation types.

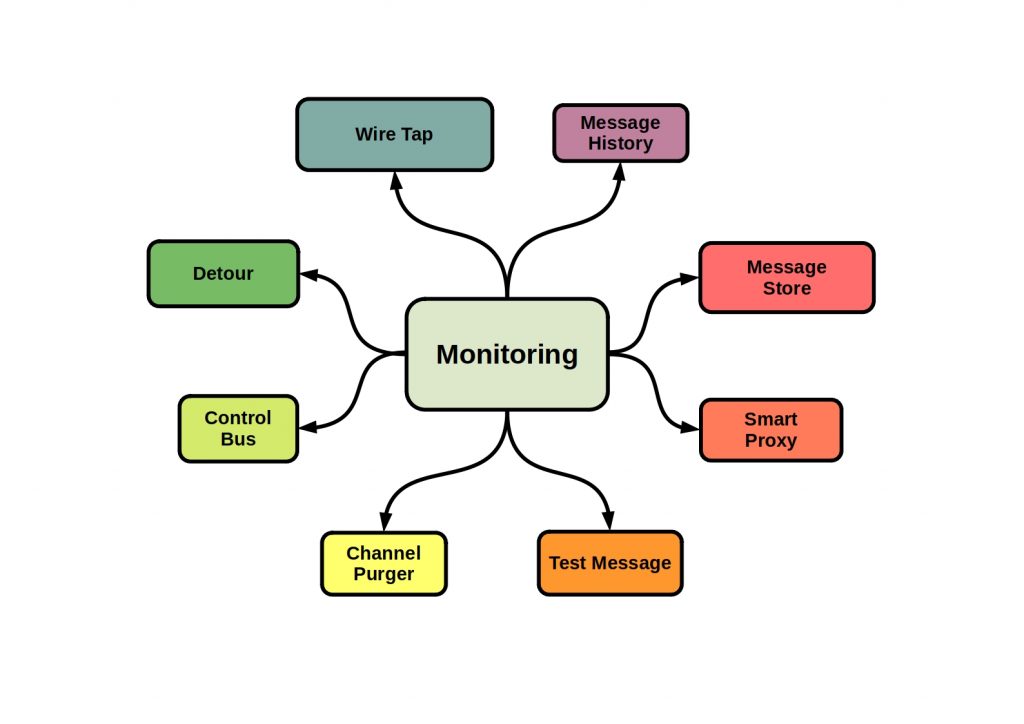

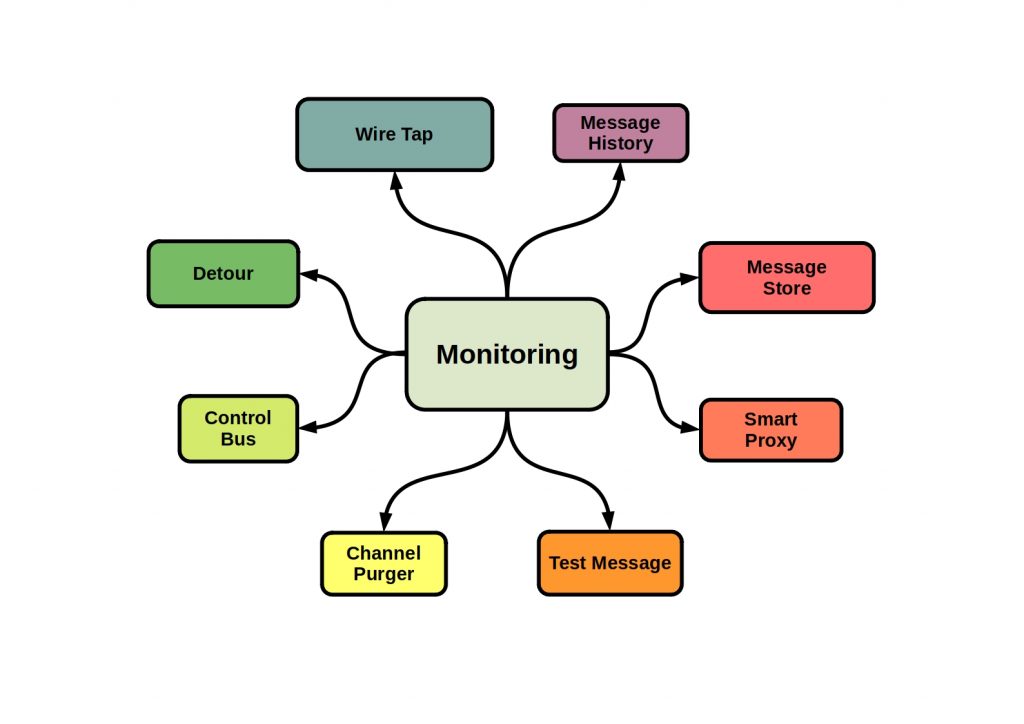

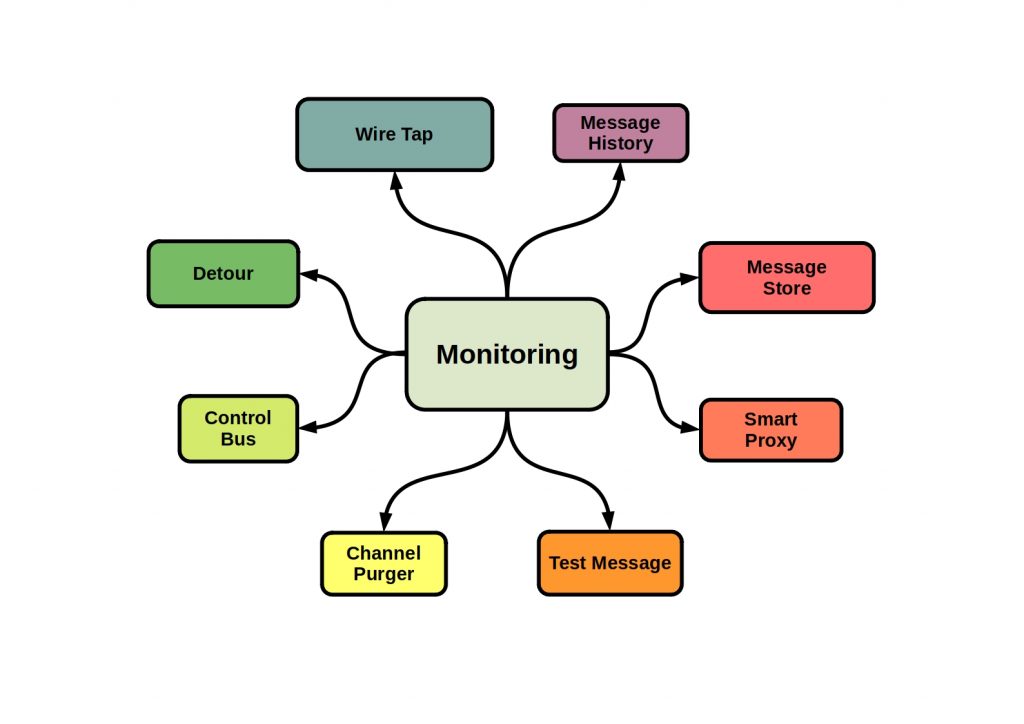

How do I monitor my messaging system and keep it running?

The flexibility of a messaging architecture unfortunately comes at the cost of added complexity. Especially when many decoupled message producers and consumers are integrated into one messaging system, partly with asynchronous messaging, monitoring during operation can become difficult.

For this purpose, system management patterns have been developed to provide the right monitoring tools. The main goal is to prevent bottlenecks and hardware overloads in order to guarantee the smooth flow of messages.

The following figure shows some test and monitoring patterns.

What are the basic approaches?

A typical system management solution monitors the data flow, for example by counting the messages sent and received or by measuring the processing time.
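A sketch of such flow monitoring: a wrapper around a simple in-memory channel counts messages sent and received, derives how many are still in flight, and records processing times. The class names and metrics are illustrative assumptions; note that nothing here looks at message content.

```python
import time
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ChannelStats:
    sent: int = 0
    received: int = 0
    processing_times: list = field(default_factory=list)

    @property
    def in_flight(self) -> int:
        # Messages sent but not yet received; a growing value hints at a bottleneck.
        return self.sent - self.received

    @property
    def avg_processing_s(self) -> float:
        if not self.processing_times:
            return 0.0
        return sum(self.processing_times) / len(self.processing_times)


class MonitoredChannel:
    """Wraps a simple in-memory channel and records flow metrics."""

    def __init__(self) -> None:
        self._messages = deque()
        self.stats = ChannelStats()

    def send(self, msg) -> None:
        self._messages.append(msg)
        self.stats.sent += 1

    def process_next(self, handler) -> None:
        msg = self._messages.popleft()
        self.stats.received += 1
        start = time.perf_counter()
        handler(msg)
        self.stats.processing_times.append(time.perf_counter() - start)


ch = MonitoredChannel()
for i in range(5):
    ch.send(i)
for _ in range(3):
    ch.process_next(lambda m: None)
print(ch.stats.sent, ch.stats.received, ch.stats.in_flight)  # 5 3 2
```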

Checking the actual information contained in the messages is a separate task.
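Content checks can be sketched in two ways: validating each message against expected fields, and sending a test message with known content through a component and comparing the result with the expected output. The field rules and the `double_quantity` component below are hypothetical examples, not part of any specific monitoring tool.

```python
def check_content(msg: dict) -> list:
    # Inspect what the message says, not how many messages flow.
    problems = []
    body = msg.get("body", {})
    if "order_id" not in body:
        problems.append("missing order_id")
    if not isinstance(body.get("quantity"), int) or body["quantity"] <= 0:
        problems.append("quantity must be a positive integer")
    return problems


def run_test_message(component, test_input: dict, expected_output: dict) -> bool:
    # Test message: feed known content through a component and compare the result.
    return component(test_input) == expected_output


def double_quantity(msg: dict) -> dict:  # hypothetical component under test
    return {"body": dict(msg["body"], quantity=msg["body"]["quantity"] * 2)}


print(check_content({"body": {"order_id": 1, "quantity": 2}}))  # []
print(check_content({"body": {"quantity": 0}}))
# ['missing order_id', 'quantity must be a positive integer']

test_msg = {"body": {"order_id": "TEST-1", "quantity": 3}}
expected = {"body": {"order_id": "TEST-1", "quantity": 6}}
print(run_test_message(double_quantity, test_msg, expected))  # True
```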